5 Free ChatGPT Competitors You Should Know About For 2023

Use these amazing Deep Learning Models to automate tasks and get ahead.

If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here.


This is a reader-supported publication. To support my writing you can give me a tip at Paypal or Venmo. Any amount helps a lot.


Subscribe to my other publication, Tech Made Simple, here

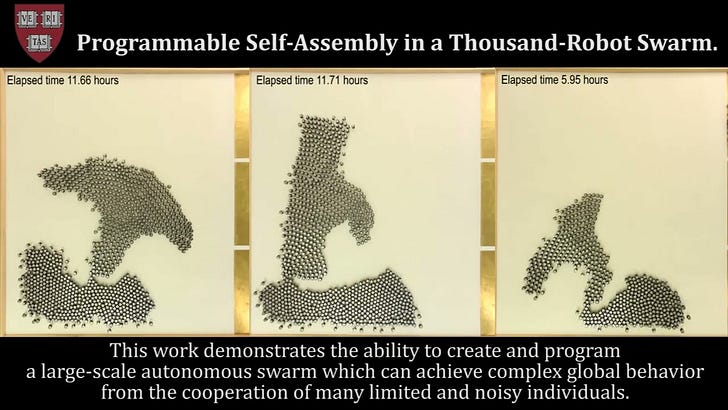

2022 has been a crazy year for Machine Learning and AI research. Big Tech companies have released many amazing libraries that will benefit developers. We have seen some great research papers, both from Big Tech companies and from smaller groups. Amongst my favorites was the research into self-assembling AI, which shows the potential of exploring alternative modes of AI.

And of course, this has been the year of Large Language Models. ChatGPT has taken the internet by storm, with people coming up with all kinds of use cases and ideas for it. While a lot of this content has come from influencers creating videos full of fluff and misinformation, the buzz is proof of the potential behind this technology. However, ChatGPT is currently gated behind APIs and access restrictions. While it can do some pretty cool things, more open-source and open-access solutions are crucial for anyone looking to build their own applications on top of large language models. In this article, I will share some of these models with you, so that you have an idea of which one to pick.

OPT

To start off this list, I will be sharing Meta’s version of GPT, called the Open Pretrained Transformer, or OPT. OPT has several exciting features that make it a viable replacement for GPT. For example, on zero-shot NLP evaluations, OPT reaches accuracy comparable to GPT-3.

Furthermore, when it comes to detecting hate speech, OPT actually outperforms DaVinci (an upgraded version of GPT-3). Thus, if this is a high priority for your solutions, OPT becomes a more appealing option.

Another interesting aspect of this model is energy efficiency. Training OPT used only 1/7th of the carbon footprint of GPT-3. This makes it more viable as a foundation model inside a larger system. Meta’s announcement explains how this was achieved:

We developed OPT-175B with energy efficiency in mind by successfully training a model of this size using only 1/7th the carbon footprint as that of GPT-3. This was achieved by combining Meta’s open source Fully Sharded Data Parallel (FSDP) API and NVIDIA’s tensor parallel abstraction within Megatron-LM. We achieved ~147 TFLOP/s/GPU utilization on NVIDIA’s 80 GB A100 GPUs, roughly 17 percent higher than published by NVIDIA researchers on similar hardware.
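To put the quoted utilization figure in context, here is a small back-of-the-envelope calculation. It assumes an A100 peak dense BF16 tensor-core throughput of 312 TFLOP/s (taken from NVIDIA’s spec sheet, not from Meta’s announcement) and reads “17 percent higher” as relative to NVIDIA’s published number; both are assumptions, and the function names are just for illustration.

```python
# Back-of-the-envelope check of the utilization numbers quoted by Meta.
# Assumption: A100 peak dense BF16 tensor-core throughput is 312 TFLOP/s.
A100_PEAK_BF16_TFLOPS = 312.0
OPT_ACHIEVED_TFLOPS = 147.0  # approximate per-GPU figure quoted in Meta's announcement


def utilization(achieved_tflops: float, peak_tflops: float) -> float:
    """Fraction of the hardware's theoretical peak that training actually used."""
    return achieved_tflops / peak_tflops


def implied_baseline(achieved_tflops: float, percent_higher: float) -> float:
    """Baseline implied by 'X percent higher' (assumes X is relative to the baseline)."""
    return achieved_tflops / (1 + percent_higher / 100)


if __name__ == "__main__":
    print(f"utilization: {utilization(OPT_ACHIEVED_TFLOPS, A100_PEAK_BF16_TFLOPS):.1%}")
    print(f"implied NVIDIA baseline: {implied_baseline(OPT_ACHIEVED_TFLOPS, 17):.1f} TFLOP/s")
```

Under these assumptions, OPT training ran at roughly 47% of the GPU’s theoretical peak, and NVIDIA’s earlier figure would have been around 126 TFLOP/s per GPU.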

Expect to see Meta on this list again. They have adopted a completely open-source approach, where they share their models, training data, logs, and a lot more. This is an unprecedented move with a lot of implications for the Machine Learning sector. To learn more about this (and the OPT Model), check out the following article.

The OPT model has a lot of potential as a GPT replacement, since it appears to have been designed as an alternative to it. However, Meta is not the only Tech Giant with a horse in the race.

PaLM

To understand why the PaLM model is so amazing, we first need to understand the Pathways ecosystem. Pathways is the Google architecture behind large models such as PaLM. If you’re not interested in these details and want to get to the PaLM model directly, just scroll down a bit. The details are at the end of the section.

Google’s Pathways ecosystem was announced with a lot of fanfare, and it delivered in a big way this year with the amazing PaLM (the Pathways Language Model). Together with DeepMind’s Flamingo and Gato, these models have shaken up the machine learning space and contributed a lot to the discussion around Deep Learning and Transformers.

Their results have been mind-blowing, with Google contributing several key insights into LLMs and their potential as stepping stones to AGI. The above video is an example of their models beating even humans at certain tasks.

While a lot of people have been talking about the individual models themselves, the real innovation is in the Pathways architecture. Pathways makes three major contributions to the Large Language Model paradigm:

Multi-Modal Training: Pathways models are trained on multiple types of data, including video, images, and text, among others. This sets them apart from GPT, which is primarily text-based.

Sparse Activation: Instead of using the entire architecture for every inference, only a subset of the neurons is used for any one task. As a result, your model can enjoy the benefits of lots of neurons (better performance, more tasks) while keeping running costs low. In my opinion, this was the stand-out component. I looked into various sparse activation algorithms and made a video on the most promising one.

Use of Multiple Senses: It’s one thing for a model to be able to take multiple types of inputs for different tasks. It’s much harder for a model to use multiple kinds of input for the same task. Models using the Pathways architecture are able to do this, giving them much greater flexibility.
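Sparse activation is easiest to see in code. Pathways’ exact routing scheme isn’t described here, so this minimal sketch assumes top-k gating in the style of mixture-of-experts layers: a router scores every expert, only the k best actually run, and their outputs are blended. The scalar “experts” and all function names are hypothetical, chosen for readability.

```python
import math
from typing import Callable


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: list[float], k: int) -> dict[int, float]:
    """Select the k experts with the highest gate scores and renormalise their weights."""
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return dict(zip(chosen, weights))


def sparse_layer(
    x: float,
    experts: list[Callable[[float], float]],
    gate_logits: list[float],
    k: int = 2,
) -> tuple[float, list[int]]:
    """Run only the top-k experts; every other expert is skipped for this input."""
    routing = top_k_route(gate_logits, k)
    output = sum(weight * experts[i](x) for i, weight in routing.items())
    return output, sorted(routing)


if __name__ == "__main__":
    # Hypothetical toy experts: expert i simply scales its input by (i + 1).
    experts = [lambda x, s=i + 1: s * x for i in range(4)]
    # In a real model the gate logits would come from a learned router applied to x.
    out, used = sparse_layer(1.0, experts, gate_logits=[0.1, 2.0, -1.0, 1.0], k=2)
    print(f"output={out:.3f}, experts used={used} out of {len(experts)}")
```

Only two of the four experts do any work for this input, which is where the savings come from: total capacity grows with the number of experts, while the compute per input grows only with k.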

Recently, someone implemented Reinforcement Learning from Human Feedback (RLHF) on top of the PaLM architecture. This is similar to how ChatGPT was trained from GPT-3. Thus, in many ways, this setup might be even better than ChatGPT (keep in mind the multi-modal capabilities). Luckily, they shared their work, so you can check out the project here.
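RLHF usually starts by training a reward model on pairs of answers where humans marked the one they prefer; that reward model then guides reinforcement-learning fine-tuning (OpenAI used PPO for ChatGPT). Below is a minimal sketch of the pairwise preference loss commonly used for the reward-model step, assuming the reward model outputs one scalar score per answer. It is not taken from the PaLM RLHF project itself, and the scores in the example are made up.

```python
import math


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way.

    The loss is small when the reward model scores the human-preferred answer higher,
    and it grows as the model prefers the rejected answer instead.
    """
    diff = r_chosen - r_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    # For negative diff, rewrite as -diff + log(1 + exp(diff)) to avoid overflowing exp(-diff).
    return -diff + math.log1p(math.exp(diff))


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over a batch of (chosen_reward, rejected_reward) pairs."""
    return sum(pairwise_reward_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical reward-model scores for three human-labelled comparisons.
    batch = [(2.0, 0.5), (0.3, 0.1), (-1.0, 1.5)]
    print(f"mean loss: {mean_preference_loss(batch):.3f}")
```

Minimizing this loss pushes the reward model to rank answers the way human labellers did. The language model is then fine-tuned to produce answers that this reward model scores highly.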

A lot of internet gurus have been talking about ChatGPT and its potential to replace Google as a search engine. There are multiple reasons why this is unlikely. However, there is another language model that is better suited to disrupt Google’s dominance in the search space. And it was created by Google’s Big Tech rival, Meta. Told you they’d show up again.

Sphere

Machine Learning researchers at Meta have released Sphere, an open-source, retrieval-based system for knowledge-intensive language tasks. With its amazing performance on search-related tasks and its ability to search through a web-scale collection of documents, combined with Meta’s other work in NLP, Meta has positioned itself well to disrupt the search market.

Sphere is capable of traversing a large corpus of information to answer questions. It can verify citations and even suggest alternative citations that would match the content better, something I haven’t seen anywhere else.
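To make “traversing a corpus to answer questions” concrete, here is a minimal retrieval sketch. Sphere relies on far more sophisticated retrieval over a web-scale corpus; this example swaps in plain TF-IDF scoring over a hypothetical three-document corpus purely for illustration, and the class and function names are made up. Because the corpus is just a constructor argument, it also hints at why being able to change the underlying corpus is so useful.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase, split on whitespace and strip simple punctuation."""
    tokens = (word.strip(".,?!;:").lower() for word in text.split())
    return [t for t in tokens if t]


class TfIdfRetriever:
    """Tiny TF-IDF retriever over an in-memory corpus (an illustrative stand-in, not Sphere's retriever)."""

    def __init__(self, corpus: list[str]) -> None:
        self.corpus = corpus
        self.doc_counts = [Counter(tokenize(doc)) for doc in corpus]
        doc_freq = Counter()
        for counts in self.doc_counts:
            doc_freq.update(counts.keys())
        n = len(corpus)
        # Smoothed inverse document frequency: rarer terms get a higher weight.
        self.idf = {term: math.log((1 + n) / (1 + df)) + 1 for term, df in doc_freq.items()}

    def score(self, query: str, doc_index: int) -> float:
        """Sum of term frequency times IDF for every query term found in the document."""
        counts = self.doc_counts[doc_index]
        length = sum(counts.values()) or 1
        return sum(counts[t] / length * self.idf.get(t, 0.0) for t in tokenize(query))

    def search(self, query: str, k: int = 1) -> list[tuple[float, str]]:
        """Return the k highest-scoring (score, document) pairs."""
        scored = [(self.score(query, i), doc) for i, doc in enumerate(self.corpus)]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


if __name__ == "__main__":
    # Hypothetical mini-corpus; passing a different list changes what gets searched.
    corpus = [
        "The Eiffel Tower is located in Paris.",
        "Mount Everest is the highest mountain on Earth.",
        "Python is a popular programming language.",
    ]
    retriever = TfIdfRetriever(corpus)
    print(retriever.search("Where is the Eiffel Tower?"))
```

A real system would hand the retrieved passages to a language model to compose an answer, or compare them against an existing citation to check whether it actually supports the claim.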

Sphere’s capabilities give it interesting potential. It might not be enough to replace Google as an all-purpose search engine. However, when it comes to search engines for research, it will be a godsend. The open-source nature of Sphere also allows people to change the underlying corpus that the model searches through, which gives it a lot of flexibility. Based on my investigation, Sphere is the model with the most commercial viability among all the language models I have looked at. If you want to hear more about this, use my social media links at the end of the article to reach out to me. I would love to discuss this over a call or in person.

BLOOM

As described on Hugging Face, “BLOOM is an autoregressive Large Language Model (LLM), trained to continue text from a prompt on vast amounts of text data using industrial-scale computational resources. As such, it is able to output coherent text in 46 languages and 13 programming languages that is hardly distinguishable from text written by humans. BLOOM can also be instructed to perform text tasks it hasn’t been explicitly trained for, by casting them as text generation tasks.”
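The description above boils down to autoregressive generation: predict the next token, append it, and repeat. The sketch below shows that loop with greedy decoding over a made-up next-token table standing in for the model. BLOOM itself is a large transformer that conditions on the full context and can also be decoded with strategies such as sampling or beam search; the table and function names here are hypothetical.

```python
# Hypothetical toy "language model": next-token probabilities keyed on the last token only.
TOY_NEXT_TOKEN: dict[str, dict[str, float]] = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"on": 0.9, "<eos>": 0.1},
    "on": {"a": 0.6, "the": 0.4},
    "a": {"mat": 1.0},
    "mat": {"<eos>": 1.0},
}


def next_token_distribution(tokens: list[str]) -> dict[str, float]:
    """A real LLM conditions on the whole context; this toy looks only at the last token."""
    last = tokens[-1] if tokens else ""
    return TOY_NEXT_TOKEN.get(last, {"<eos>": 1.0})


def greedy_generate(prompt: str, max_new_tokens: int = 10) -> str:
    """Autoregressive decoding: repeatedly append the most likely next token."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        dist = next_token_distribution(tokens)
        next_token = max(dist, key=lambda tok: dist[tok])
        if next_token == "<eos>":  # end-of-sequence marker stops generation
            break
        tokens.append(next_token)
    return " ".join(tokens)


if __name__ == "__main__":
    print(greedy_generate("the"))
```

Casting a new task as text generation works the same way: you phrase the task as a prompt whose most natural continuation is the answer, and let the model keep writing.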

You won’t be the only one who can’t spot the difference between BLOOM and GPT. This is not an accident. BLOOM was created to break Big Tech’s stranglehold on large models. Over the last few years, tech companies have been conducting research using insane amounts of computing power that ordinary researchers and groups can’t replicate. This makes it impossible for independent people to verify and critique the research put out by Big Tech companies. It has also led data scientists to take findings from these papers out of context, creating inefficient and expensive pipelines.

BLOOM was an attempt to counter this. Think of it as a model for the people. A model that is not controlled by Big Tech and thus promotes free research. Thus, if you’re looking for open-source alternatives to ChatGPT, BLOOM might be the model for your needs. I first started covering it around June, before these models were cool. I’ve had okay results with it. It hasn’t been as powerful as ChatGPT (or the others on this list), but given its free nature, it might appeal to the edgy teens amongst you.

Galactica

Lastly, we have another model by Meta. No, they haven’t paid me (although Zuck, if you’re reading this, call me). Remember how I mentioned that Sphere could be the Google for researchers? Well, Zuck wasn’t content with picking just one fight. He also had a ChatGPT equivalent geared toward researchers.

Imagine a ChatGPT, but trained on a lot of research texts. Such a model could explain math formulae, help you write papers, create LaTeX for you, and much more. That is what Galactica was. It could do a lot, even beyond research-related tasks. Yannic Kilcher has a fantastic video on it for anyone interested in learning more.

Unfortunately, there was a lot of controversy around this model, which led Meta to take down its public demo. However, I hope they bring it back soon. Keep your ear to the ground for news of this (or some variant). It is clearly a powerful model, and one that could be useful to a variety of people.

For Machine Learning, a base in Software Engineering, Math, and Computer Science is crucial. It will help you conceptualize, build, and optimize your ML. My daily newsletter, Technology Made Simple, covers topics in Algorithm Design, Math, Recent Events in Tech, Software Engineering, and much more to make you a better Machine Learning Engineer. I have a special discount for my readers.

Save the time, energy, and money you would burn by going through all those videos, courses, products, and ‘coaches’ and easily find all your needs met in one place.

I am currently running a 20% discount for a WHOLE YEAR, so make sure to check it out. Using this discount will drop the prices:

800 INR (10 USD) → 640 INR (8 USD) per month

8000 INR (100 USD) → 6400 INR (80 USD) per year

You can learn more about the newsletter here. If you’d like to talk to me about your project/company/organization, scroll below and use my contact links to reach out to me.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here- https://docs.google.com/forms/d/1Oco7l3A-rE6Ao4E0mB2hgwO6W4nWXwQz3sCa_IYQM5s/edit

To help me understand you, fill out this survey (anonymous).

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819