Are Transformers effective for Time Series Forecasting [Breakdowns]

How well does Machine Learning’s Favorite Architecture stack up against simple linear models?

Hey, it’s Devansh 👋👋

In my series Breakdowns, I go through complicated literature on Machine Learning to extract the most valuable insights. Expect concise, jargon-free, but still useful analysis aimed at helping you understand the intricacies of Cutting-Edge AI Research and the applications of Deep Learning at the highest level.

2022 was a breakout year for Transformers in AI Research. ChatGPT has been all the rage recently. People have been using it for all kinds of tasks, from writing sales emails and finishing college assignments to serving as a possible alternative to Google Search. Combine this with other Large Language Models like BERT, AI Art generators like Stable Diffusion and DALL-E, and Google’s hits like GATO and Minerva for robotics and math, and huge Transformers trained on a lot of data and compute start to look like God’s Gift to Machine Learning.

Transformers were created as upgrades to Recurrent Neural Networks, to handle sequential data. While Transformers have undeniably been much better at processing text, RNNs have kept a strong presence in tasks involving Time Series Forecasting. This leads to the question, “Are Transformers Effective for Time Series Forecasting?” The authors of a paper with the same name set out to answer this. To do so, they compared Transformers with a simple model that they refer to as DLinear. The architecture of DLinear can be seen below.

By comparing the performance of DLinear against Transformer-based solutions, the authors provided their answer on the utility of Transformers in Time Series Forecasting (TSF). In this article, I will break down their findings and go through their results to help you better understand what these researchers found.

Spoiler Alert: The results were not pretty for Transformers.

Benefits of Using Simple Linear Systems

In a world of exceedingly complex and non-linear architectures, DLinear might look extremely out of place. However, there are several benefits to using a simpler model like DLinear. To quote the authors: “Although DLinear is simple, it has some compelling characteristics:

An O(1) maximum signal traversing path length: The shorter the path, the better the dependencies are captured [18], making DLinear capable of capturing both short-range and long-range temporal relations.

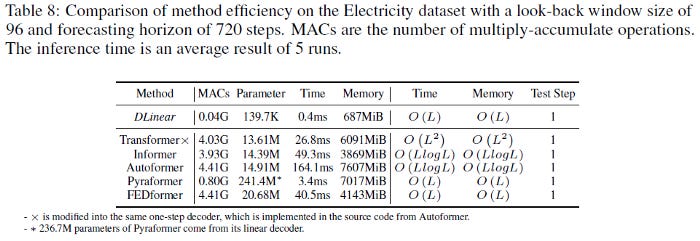

High-efficiency: As each branch has only one linear layer, it costs much lower memory and fewer parameters and has a faster inference speed than existing Transformers (see Table 8).

Interpretability: After training, we can visualize weights from the seasonality and trend branches to have some insights on the predicted values [8].

Easy-to-use: DLinear can be obtained easily without tuning model hyper-parameters.”
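To make the “one linear layer per branch” idea concrete, here is a minimal NumPy sketch of a DLinear-style forward pass as I understand it from the paper: split the input window into a trend (a moving average) and a seasonal remainder, push each through its own linear layer, and add the two outputs. The names `moving_average` and `DLinearSketch`, the kernel size of 25, and the random untrained weights are my own assumptions for illustration. This is not the authors’ code.

```python
import numpy as np

def moving_average(x, kernel_size):
    """Trend estimate: moving average with edge padding so the length is preserved."""
    pad_front = (kernel_size - 1) // 2
    pad_back = kernel_size - 1 - pad_front
    padded = np.concatenate([np.full(pad_front, x[0]), x, np.full(pad_back, x[-1])])
    kernel = np.ones(kernel_size) / kernel_size
    return np.convolve(padded, kernel, mode="valid")

class DLinearSketch:
    """Hypothetical, untrained DLinear-style model for a single univariate series."""

    def __init__(self, lookback, horizon, kernel_size=25, seed=0):
        rng = np.random.default_rng(seed)
        self.kernel_size = kernel_size  # assumed value, not tuned
        # One linear layer per branch: lookback inputs -> horizon outputs.
        self.w_trend = rng.normal(scale=1.0 / lookback, size=(horizon, lookback))
        self.w_seasonal = rng.normal(scale=1.0 / lookback, size=(horizon, lookback))
        self.b_trend = np.zeros(horizon)
        self.b_seasonal = np.zeros(horizon)

    def forward(self, x):
        trend = moving_average(x, self.kernel_size)  # smooth component
        seasonal = x - trend                         # remainder component
        # Every output step is one matrix multiply away from every input step.
        return (self.w_trend @ trend + self.b_trend
                + self.w_seasonal @ seasonal + self.b_seasonal)

# Usage: a 96-step input window mapped directly to a 336-step forecast.
x = np.sin(2 * np.pi * np.arange(96) / 24) + 0.01 * np.arange(96)
model = DLinearSketch(lookback=96, horizon=336)
forecast = model.forward(x)
print(forecast.shape)
```

Since the whole model is two weight matrices, plotting the rows of `w_trend` and `w_seasonal` after training is all it takes to get the kind of interpretability the authors mention.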

The success of linear models also hints at the possibility that many TSF tasks are strongly linear. The key differentiator of Deep Learning has been the introduction of non-linearity, which makes it possible to model very complex relationships. The performance of DLinear and other simpler architectures might imply that TSF is mostly a linear problem. This is just conjecture on my part, based on my reading and experiences. If you have different experiences, let me know.
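One way to build intuition for this conjecture is a toy example. A noiseless series made of a straight-line trend plus a single sine wave can be forecast by a plain linear map of the lookback window, because both components satisfy linear recurrences. The sketch below fits such a map with ordinary least squares. The synthetic series, the window sizes, and the helper `make_windows` are assumptions I picked for illustration, and a clean synthetic series says nothing about how real benchmarks behave.

```python
import numpy as np

def make_windows(series, lookback, horizon):
    """Slice a 1-D series into (input window, future window) pairs."""
    n = len(series) - lookback - horizon + 1
    inputs = np.stack([series[i:i + lookback] for i in range(n)])
    targets = np.stack([series[i + lookback:i + lookback + horizon] for i in range(n)])
    return inputs, targets

# Synthetic data: linear trend plus one seasonal cycle of period 24.
t = np.arange(600)
series = 0.05 * t + np.sin(2 * np.pi * t / 24)

lookback, horizon = 48, 24
X, Y = make_windows(series, lookback, horizon)

# Chronological split: fit on the early windows, evaluate on the later ones.
split = 400
W, *_ = np.linalg.lstsq(X[:split], Y[:split], rcond=1e-10)

pred = X[split:] @ W
mse = float(np.mean((pred - Y[split:]) ** 2))
print(f"held-out MSE: {mse:.2e}")
```

The held-out error should be close to zero here, even though the later windows sit at trend levels the model never saw during fitting. Real data adds noise, regime changes, and genuinely non-linear effects, so treat this as intuition rather than evidence.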

Now for the part that you have all been waiting for: how well do Transformers perform in Time Series Forecasting? Does a simple model like DLinear hold a candle to Transformers? Let’s look into a few experiments and their outcomes.

Transformers vs DLinear

The experiment setup was pretty straightforward. They compared the DLinear model against various Transformers across nine widely used benchmark datasets. They also used a variety of forecasting horizons to add rigor to their findings. The results can be found in the table below:

Obviously, these numbers can be hard to understand without any other context. Fortunately, the authors graphed the results of their experiments to help us visualize some of the differences.

I’m going to zoom in on the Exchange Rate Dataset just because the graph is not as messy as the other two. Thus the differences in performance are easier to see-

It seems like DLinear just doesn’t break down the way the Transformer models do. The authors made the following observation about their experiments:

When the input length is 96 steps and the output horizon is 336 steps, Transformers [28, 27, 29] fail to capture the scale and bias of the future data on Electricity and ETTh2. Moreover, they can hardly predict a proper trend on aperiodic data such as Exchange-Rate. These phenomena further indicate the inadequacy of existing Transformers for the TSF task.

The note about aperiodic data being a challenge for transformers is particularly interesting to me. It’s something that I will be looking into more in the future. If you have any experiences/thoughts you’d like to share, please do leave them in the comments. If you’d like to have a more extended conversation, I always leave my social media links at the end of every article. I love it when my readers/viewers use that to reach out to talk about their inputs, feedback, or even just projects they’re working on. Makes for very interesting discussions and helps me learn a ton.

To finish this article, let’s talk about why Transformers are not effective for Time Series Forecasting.

We introduce an embarrassingly simple linear model DLinear as a DMS forecasting baseline for comparison. Our results show that DLinear outperforms existing Transformer-based solutions on nine widely-used benchmarks in most cases, often by a large margin

-The Conclusion by the authors

Why Transformers fail at Time Series Forecasting

The authors had some very salient observations about Transformers and why they might be ineffective for TSF-based tasks. Their analysis points to the attention mechanism as a possible weakness for time series forecasting:

More importantly, the main working power of the Transformer architecture is from its multi-head self-attention mechanism, which has a remarkable capability of extracting semantic correlations between paired elements in a long sequence (e.g., words in texts or 2D patches in images), and this procedure is permutation-invariant, i.e., regardless of the order. However, for time series analysis, we are mainly interested in modeling the temporal dynamics among a continuous set of points, wherein the order itself often plays the most crucial role.
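The order-blindness the authors describe is easy to check numerically. Below is a small sketch of single-head self-attention with no positional encoding (both simplifications are mine, and the weights are random). If you shuffle the time steps of the input, the output is just the same rows shuffled in the same way, and anything pooled over the sequence does not change at all.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention, no positional encoding."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    return softmax(scores, axis=-1) @ v

rng = np.random.default_rng(0)
seq_len, d_model = 8, 4
x = rng.normal(size=(seq_len, d_model))  # 8 "time steps" with 4 features each
wq, wk, wv = (rng.normal(size=(d_model, d_model)) for _ in range(3))

perm = rng.permutation(seq_len)          # shuffle the time order
out = self_attention(x, wq, wk, wv)
out_shuffled = self_attention(x[perm], wq, wk, wv)

# Shuffling the input only shuffles the output rows the same way.
same_rows = np.allclose(out[perm], out_shuffled)
# A mean over time steps cannot tell the two orderings apart.
same_pooled = np.allclose(out.mean(axis=0), out_shuffled.mean(axis=0))
print(same_rows, same_pooled)
```

In practice, Transformers add positional encodings to inject order back in. The authors’ point, as I read it, is that the core mechanism itself does not care about order, which is a strange fit for data where order is the whole story.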

Their observations square very well with my own experience. When I was working on Supply Chain Forecasting, I noticed that ARIMAs and other simpler models were much better at adapting to supply chain shocks, where input values suddenly increase or decrease. Keep in mind that this is just my anecdotal experience working on that one project, but it does align with their diagnosis of the situation.

If any of you would like to work on this topic, feel free to reach out to me. If you’re looking for AI Consultancy, Software Engineering implementation, or more- my company, SVAM, helps clients in many ways: application development, strategy consulting, and staffing. Feel free to reach out and share your needs, and we can work something out.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819