Bias in Humans vs Deep Learning and Why You Should Avoid GPT for Hiring [Thoughts]

Understanding the differences between the various kinds of bias. Confusing them leads to some terrible products being pitched.

Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, go over my first impressions, and discuss their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that really stand out will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

PSA: I’m currently in Philadelphia for 1-2 days. Let me know if any of you would like to meet during this time.

As organizations continue to adopt emerging technologies like Machine Learning, Generative AI, and Deep Learning, one of the field’s most pressing issues has been brought to the forefront of the discussion: bias in AI systems. We have seen a lot of people talk about AI biases and the challenges associated with them. However, contemporary discussions often overlook that human bias and bias in artificial intelligence systems are fundamentally different. Even though we use the same word for both phenomena, the two have very different natures.

This becomes very problematic in discussions around safe AI, because people often treat the two similarly and thus engineer solutions that are human-safe but not AI-safe. This happens because humans are notoriously prone to the False-Consensus bias, where we assume others are like us (and would behave similarly). Applied to AI, this can have some disastrous effects that we need to tackle. Understanding the difference between AI and human biases will help prevent this issue. This article will discuss that difference, along with some problems that arise from confusing the different kinds of bias. It will end with a discussion of why GPT-based hiring tools are a terrible idea (make sure you read that part even if you skip everything else; it’s critical).

Human Biases-

Let’s first look into the biases in human brains and how they operate. When we look at biases in humans, there are a few things about them that stand out-

Architectural- Biases in humans exist because that is how our bodies/brains are designed. Human bias is very much a feature, not a bug, and will continue to exist as long as we are alive (this extends beyond humans; bias is a feature of most life). While human bias is often treated as something shameful, it’s a morally neutral phenomenon. It is a (crucial) part of how life has evolved.

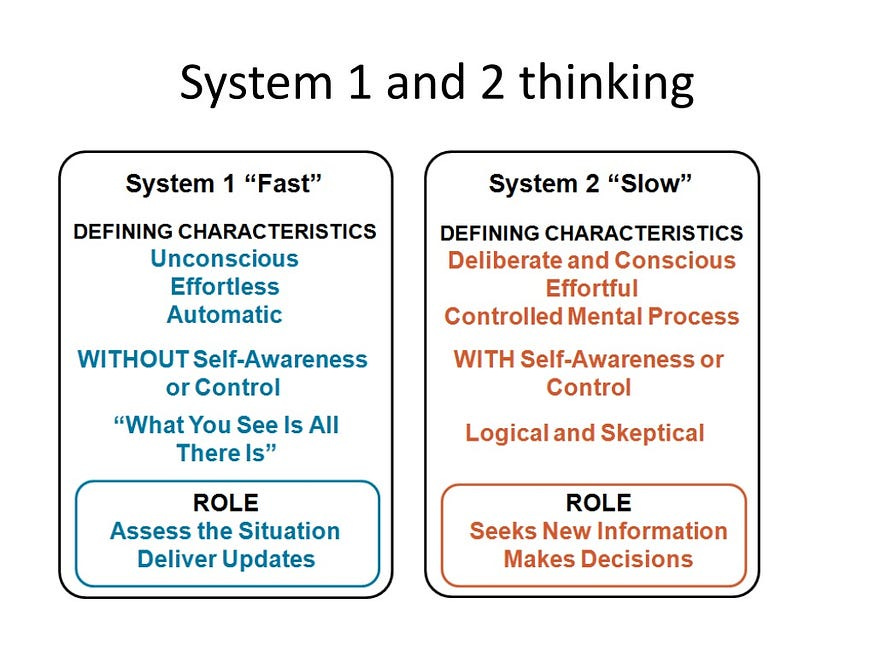

The reason for bias- Biases exist because they help our brains save energy and make quicker decisions. That’s really all they do (look into System 1 and System 2 from the fantastic book Thinking, Fast and Slow). Without biases, our minds would be overwhelmed: they would treat all information/stimuli as new and quickly exhaust our mental energy. Certain kinds of bias do have negative consequences (racial bias, gender bias, etc.). But as a phenomenon, bias is neither good nor bad.

Many discussions on human biases overlook this nuance. Our biases as a whole are not inherently problematic. On the contrary, they serve a crucial role, especially in a world as saturated with ‘novel’ content as ours.

When it comes to fixing bias in humans, it’s a losing battle. By our very design, we are always going to be biased. What we have to do is differentiate between the harmful biases (racial, social, etc.) and the benign ones (my tendency to go for dark or mint chocolate ice cream over vanilla). With that background out of the way, let’s now explore biases in Machine Learning based systems.

AI (specifically Machine Learning) Biases-

Once again, the focus here will be how bias creeps into Machine Learning based systems. While the ideas discussed do carry over into more general ‘AI’, Artificial Intelligence as a field is extremely broad, and thus making meaningful blanket statements is much harder. With that out of the way, let’s get into the main points of this section.

To begin with, let’s define bias in Machine Learning. Depending on how the term is used, it can mean one of two things-

How systematically wrong a predictor is, i.e. the gap between its average prediction and the true value. This is the definition used in discussions of the bias-variance tradeoff, which we discussed in a previous video.

Whether the model encodes human prejudices. If a model lowers the creditworthiness of a candidate because of their race, it may be faithfully reproducing patterns in the historical data, but it would lead to an undesirable outcome. This might seem like an absurd example, but it does happen (indirectly). Race and socio-economic status are heavily encoded in attributes (zip code, for example) that many predictive models use. Models then unfairly penalize the people who share these attributes, furthering the inequalities that automated decision-makers were touted to avoid.
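To make the proxy problem concrete, here is a minimal sketch on made-up data (the groups, zip codes, and approval rates are all hypothetical). The “model” never sees the protected group; it only learns each zip code’s historical approval rate and approves an applicant when that rate is at least 50%. Because group membership is baked into where people live, the model still reproduces the historical gap.

```python
from collections import defaultdict

def make_history():
    """Hypothetical historical loan data as (group, zip_code, approved) tuples."""
    rows = []
    for i in range(100):  # group A: 90 live in zip 10001, 80% were approved
        rows.append(("A", "10001" if i < 90 else "20002", i % 10 < 8))
    for i in range(100):  # group B: 90 live in zip 20002, 30% were approved
        rows.append(("B", "20002" if i < 90 else "10001", i % 10 < 3))
    return rows

def fit_zip_model(rows):
    """'Train' a model that only sees zip code: approve if the zip's historical rate >= 0.5."""
    counts = defaultdict(lambda: [0, 0])  # zip -> [approved, total]
    for _group, zip_code, approved in rows:
        counts[zip_code][0] += int(approved)
        counts[zip_code][1] += 1
    return {z: a / t >= 0.5 for z, (a, t) in counts.items()}

def approval_rate_by_group(rows, decide):
    """Fraction of each group that decide(row) approves."""
    totals, approved = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row[0]] += 1
        approved[row[0]] += int(decide(row))
    return {g: approved[g] / totals[g] for g in sorted(totals)}

history = make_history()
model = fit_zip_model(history)  # note: the group column is never used
print("historical:", approval_rate_by_group(history, lambda r: r[2]))
print("model:     ", approval_rate_by_group(history, lambda r: model[r[1]]))
```

With this toy data, history approves 80% of group A and 30% of group B, while the zip-only model approves 90% and 10%. Dropping the sensitive column is not enough when other columns carry the same information; here the thresholded model even widens the gap.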

The latter type of bias leads to a very interesting case: sometimes we need bias (of the first kind) to fight bias of the second kind. When dealing with sensitive, life-altering systems (credit score checkers, healthcare analysis, and disease detection), it might even be beneficial to inject error into your model so that it doesn’t learn the biases in your data too closely (for the last 2 cases, I speak from personal experience). In other words, bias fights bias. By leveraging techniques like Data Augmentation, we end up further from our recorded data distribution (thus with more ‘bias’) but with less biased systems.

Natural-language-processing applications’ increased reliance on large language models trained on intrinsically biased web-scale corpora has amplified the importance of accurate fairness metrics and procedures for building more robust models…We propose two modifications to the base knowledge distillation based on counterfactual role reversal — modifying teacher probabilities and augmenting the training set.
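The quoted work combines modified teacher probabilities with an augmented training set. The sketch below covers only a simple version of the second idea, counterfactual augmentation: every training sentence gets a copy with gendered words swapped, so the model sees both roles with the same label. The word list and the helper names are hypothetical and far smaller than anything you would use in practice; this is not the paper’s implementation.

```python
import re

# Hypothetical, deliberately tiny list of word pairs. Real counterfactual
# augmentation uses much larger curated lists and must handle ambiguous
# words such as "her" (which maps to "him" or "his" depending on context).
PAIRS = [("he", "she"), ("man", "woman"), ("men", "women"),
         ("father", "mother"), ("son", "daughter")]
SWAP = {a: b for a, b in PAIRS} | {b: a for a, b in PAIRS}
WORD = re.compile(r"\b(" + "|".join(SWAP) + r")\b", re.IGNORECASE)

def role_reverse(text: str) -> str:
    """Swap each listed gendered word for its counterpart, keeping leading capitalization."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = SWAP[word.lower()]
        return replacement.capitalize() if word[0].isupper() else replacement
    return WORD.sub(swap, text)

def augment(dataset):
    """Return the original (text, label) pairs plus a role-reversed copy of each."""
    return dataset + [(role_reverse(text), label) for text, label in dataset]

print(role_reverse("He is a good father."))
```

Moving the training data away from its recorded distribution is exactly the “more bias to get less bias” trade described above: the augmented set no longer matches what was observed, but it no longer ties the label to the gendered words.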

This is one of the aspects of bias that makes it so hard to deal with: it is easy to conflate bias caused by a weak predictor (bias hidden in your models) with bias from systemic issues (bias hidden in your data). This problem is often compounded because many ML teams are extremely metrics-driven, with very little focus on the context in which their pipelines will be used. Teams end up oriented towards hitting high scores on benchmarks rather than creating meaningful solutions. The result: great ML scores, fantastic performance for ‘normal’ folk, and increased isolation for the fringes of society. Only this time, the problem is a lot more subtle than the isolationist policies that created the inequality in the first place.
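One cheap guard against great aggregate scores hiding poor performance on the fringes is disaggregated evaluation: report the metric per group, not just overall. A minimal sketch on a hypothetical evaluation set:

```python
from collections import defaultdict

def accuracy_by_group(labels, preds, groups):
    """Return overall accuracy and accuracy within each group."""
    correct, total = defaultdict(int), defaultdict(int)
    for y, p, g in zip(labels, preds, groups):
        total[g] += 1
        correct[g] += int(y == p)
    overall = sum(correct.values()) / len(labels)
    return overall, {g: correct[g] / total[g] for g in sorted(total)}

# Hypothetical evaluation set: 90 "majority" rows followed by 10 "minority" rows.
labels = [1] * 100
preds = [1] * 88 + [0] * 2 + [1] * 5 + [0] * 5
groups = ["majority"] * 90 + ["minority"] * 10

overall, per_group = accuracy_by_group(labels, preds, groups)
print(f"overall accuracy: {overall:.2f}")
for group, acc in per_group.items():
    print(f"  {group}: {acc:.2f}")
```

Here the headline accuracy is 0.93, yet the model is right only half the time for the minority group, a gap that a single benchmark number never surfaces.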

With that long soliloquy on bias out of the way, let’s finally look at some crucial properties of bias in ML systems-

Cost is mostly constant- Unlike human biases, biases in ML systems don’t save any energy. The data is still processed through every layer and neuron, so a biased prediction costs as much compute as an unbiased one.

From Statistical Associations- The biases in Machine Learning based AI systems exist because the predictors learn some kind of statistical pattern that exhibits this bias. Type 1 bias arises when the pattern is too complex for our predictor to capture. Type 2 bias arises when the model learns biases encoded in the data; it is not inherent to the AI model itself.
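Type 1 bias can be illustrated with a toy bias-variance experiment (all data is simulated, and the setup is an assumption for illustration). We repeatedly fit a straight line to noisy samples of the curve f(x) = x², which is too complex for a line, and look at the average prediction at x = 0. Since the best straight-line fit to x² over a symmetric interval like [-1, 1] is the flat line y = 1/3, the measured bias should land near 1/3 however many times we refit, while the variance stays small.

```python
import random
import statistics

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x, using the closed-form solution."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - b * mx, b

def bias_and_variance(x0=0.0, trials=500, n=50, noise=0.1, seed=0):
    """Fit a line to fresh noisy samples of f(x) = x**2 many times; study predictions at x0."""
    rng = random.Random(seed)
    f = lambda x: x * x  # true function: too curved for a straight line
    preds = []
    for _ in range(trials):
        xs = [rng.uniform(-1, 1) for _ in range(n)]
        ys = [f(x) + rng.gauss(0, noise) for x in xs]
        a, b = fit_line(xs, ys)
        preds.append(a + b * x0)
    bias = statistics.fmean(preds) - f(x0)  # systematic error of the average prediction
    return bias, statistics.pvariance(preds)  # spread of predictions across refits

bias, variance = bias_and_variance()
print(f"bias at x=0: {bias:.3f}, variance: {variance:.4f}")
```

Note that every refit costs the same amount of compute regardless of how wrong the line is, which is the “cost is mostly constant” point above.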

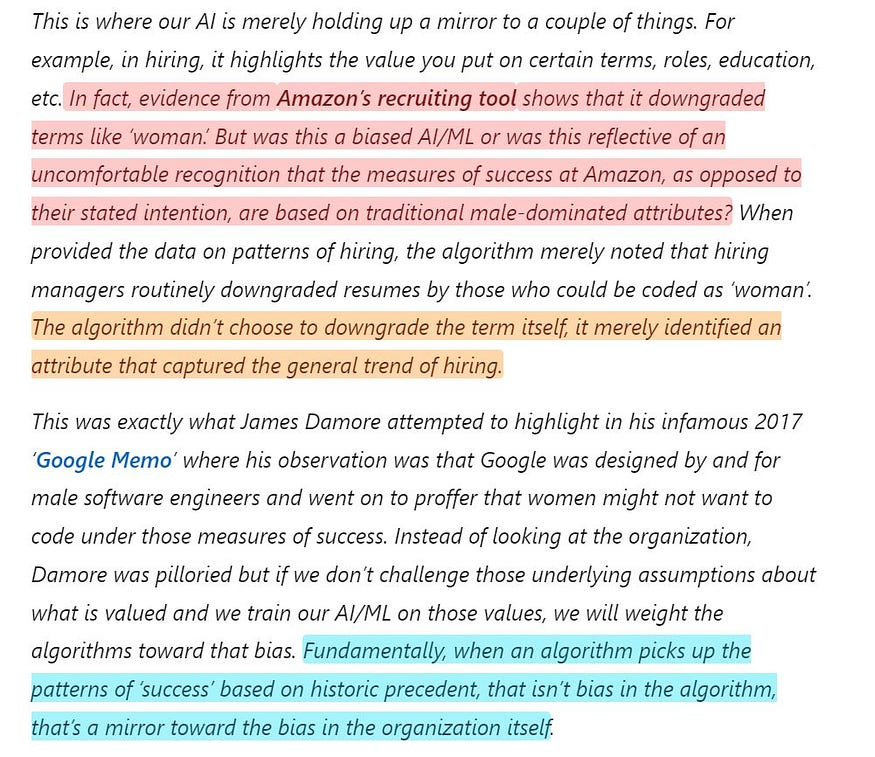

A great read on this topic is the fantastic piece Eliminating Bias in AI/ML (its author, Michael, was pretty much on point throughout). Of the write-up, there were 2 paragraphs that I found particularly cogent-

When it comes to addressing bias in Machine Learning, the first step is to identify which kind of bias we are dealing with. Are we looking at bias caused by a weakness in the model, or by a weakness in the very system we are experimenting on? This is a very important step, and one that is often overlooked when building AI solutions these days. Not understanding the different biases and their nuances leads to solutions that sound like a good idea until you really start thinking about the deeper implications of those products.
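As a rough first pass at that question, the hypothetical sketch below compares two signals: the model’s overall error (high error points to a weak predictor, Type 1) and the gap in positive rates between groups in both the labels and the predictions (a gap already present in the labels that the model copies points to the data, Type 2). The function names and thresholds are arbitrary illustrative choices, and a gap in the labels is a prompt for human investigation, not proof of systemic bias.

```python
from collections import defaultdict

def positive_rate_gap(values, groups):
    """Largest difference in positive rate (share of 1s) between any two groups."""
    pos, tot = defaultdict(int), defaultdict(int)
    for v, g in zip(values, groups):
        pos[g] += v
        tot[g] += 1
    rates = [pos[g] / tot[g] for g in tot]
    return max(rates) - min(rates)

def triage_bias(labels, preds, groups, max_error=0.2, max_gap=0.1):
    """Hypothetical first-pass triage; the thresholds are illustrative, not standards."""
    error = sum(y != p for y, p in zip(labels, preds)) / len(labels)
    label_gap = positive_rate_gap(labels, groups)
    pred_gap = positive_rate_gap(preds, groups)
    findings = []
    if error > max_error:
        findings.append("model weakness (Type 1): the predictor cannot fit the pattern")
    if label_gap > max_gap and pred_gap > max_gap:
        findings.append("data/system bias (Type 2): labels differ by group and the model copies it")
    return findings or ["no obvious issue at these thresholds"]

history_labels = [1] * 8 + [0] * 2 + [1] * 3 + [0] * 7  # group A: 80% positive, group B: 30%
groups = ["A"] * 10 + ["B"] * 10
print(triage_bias(history_labels, list(history_labels), groups))  # model copies history exactly
print(triage_bias(history_labels, [1] * 20, groups))              # model always predicts 1
```

The first call flags only the data (a perfect fit to biased history), the second only the model (it cannot fit the labels at all), which mirrors the two questions above.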

Take the very popular GPT-based solutions for LinkedIn profiles, resume/cover letter writing, hiring, and employee evaluation that are floating around the market. I’ve had conversations with a few groups who were either building or about to buy such solutions (these conversations were a large reason I’m writing this). These systems work great on tests, but they suffer from a big flaw: GPT is (mostly) white, American, neurotypical, and affluent, because its notion of ‘good’ professional writing reflects the text it was trained on. Using these systems to grade and judge real humans is a very twisted way of forcing them to comply with these standards. Controlling someone’s words is a great first step to controlling their thoughts. These ‘intelligent’ hiring tools are archaic, colonial (I don’t use this word lightly), and very exclusionary. This doesn’t show up in ML benchmarks, because most ‘professional’ tests share the same skew. Apply these tools to a diverse workplace, and you will see disastrous results.

The aftermath of this would make for great satire-

Eventually, people will notice the lack of diversity at these organizations, and upper management will respond through token entry-level hiring programs.

The entry-level folk would struggle to fit into the homogeneous environment of this organization, leading to worse performance, higher employee churn, etc.

The organization would hire a data scientist who is mostly neurotypical and part of the ‘insider’ group (like me) to analyze their internal data, which would lead to the identification of these ‘problem areas’.

The biases get reinforced, making the process even more exclusionary.

The worst part about this is that since the ‘divergent’ groups are hired at the entry/lower level, most will never have the chance to make it to the upper levels of leadership where they can effect cultural change.

If you take nothing else away from this piece, just remember this: these hiring tools are far too unsafe to be used. Since I started writing, I’ve tried to keep my work mostly neutral and objective. This is one of the few times where I will not be doing that. The AI Doomers are right about the societal-level risk of these tools, just not in the way that most claim. These AI tools will not identify someone who’s black/gay/trans/neurodivergent and reject them. Instead, they will force people from outsider groups to put on a mask and play a part for as long as they are engaged with these organizations.

I’m going to end this over here. As always, if you disagree or think I got something wrong, please let me know. I always welcome discussion, especially on important issues like this. My links are at the end if you want to message me.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium. : https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
