Meta AI's bombshell about Big Data and Deep Learning [Breakdowns]

No one, absolutely no human being alive, could have predicted this

Hey, it’s Devansh 👋👋

In my series Breakdowns, I go through complicated literature on Machine Learning to extract the most valuable insights. Expect concise, jargon-free, but still useful analysis aimed at helping you understand the intricacies of Cutting Edge AI Research and the applications of Deep Learning at the highest level.

If you’d like to support my writing, please consider buying and rating my 1 Dollar Ebook on Amazon or becoming a premium subscriber to my sister publication Tech Made Simple using the button below.

Thanks to the amazing success of AI, we’ve seen more and more organizations implement Machine Learning into their pipelines. As the access to and collection of data increases, we have seen massive datasets being used to train giant deep-learning models that reach superhuman performance. This has led to a lot of hype around domains like Data Science and Big Data, fueled even more by the recent boom in Large Language Models.

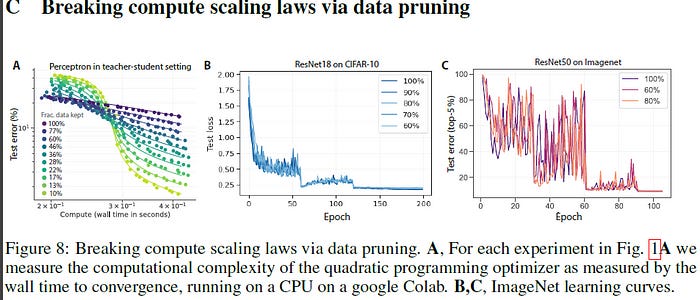

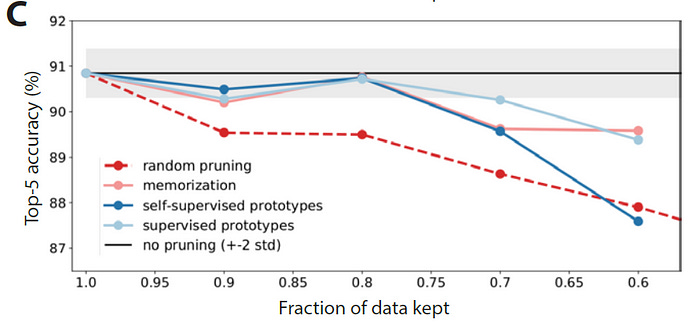

Big Tech companies (and Deep Learning Experts on Twitter/YouTube) have really fallen in love with the ‘add more data, increase model size, train for months’ approach that has become the status quo in Machine Learning these days. However, heretics from Meta AI published research that was funded by Satan, and they claim that scaling dataset size with no other thought is extremely inefficient. And completely unnecessary. In this post, I will be going over their paper, Beyond neural scaling laws: beating power law scaling via data pruning, where they share ‘evidence’ about how selecting samples intelligently can increase your model performance without ballooning your costs out of control.

While this paper focuses on Computer Vision, the principles of their research will be interesting to you regardless of your specialization.

Such vastly superior scaling would mean that we could go from 3% to 2% error by only adding a few carefully chosen training examples, rather than collecting 10x more random ones

- From the paper.

We will cover the reasons why randomly adding more samples is inefficient, and the protocol that Meta AI researchers came up with to let you pick the most useful data samples to add to your pipelines. Sound like Pagan Witchcraft? Read on and find out.

Here we focus on the scaling of error with dataset size and show how both in theory and practice we can break beyond power-law scaling and reduce it to exponential scaling instead

Why adding more data becomes inefficient

When it comes to small datasets, ‘add more data’ is one of the go-to ideas you will come across. And it works. Unfortunately, people apply this to larger datasets/projects without thinking things through. As you continue to add new samples to your training data, the expected gain from each additional sample goes down. Very quickly. Especially when your training set is on the order of millions of samples (big research projects use petabytes of data to gain 1–2% in performance).
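To put rough numbers on those diminishing returns, here is a small sketch. It assumes error follows a simple power law, error = a * n^(-nu), and it backs out the exponent from the paper's own illustration (10x more data to go from 3% to 2% error). The 1M-sample starting point and the constant `a` are made up for the demo; they are not measurements from the paper.

```python
import math

def power_law_error(n, a, nu):
    """Test error under an assumed power law: error = a * n ** (-nu)."""
    return a * n ** (-nu)

# Exponent implied by the illustration: 10x more data moves error from 3% to 2%.
nu = math.log(0.03 / 0.02) / math.log(10)

# Hypothetical starting point: a 1M-sample dataset sitting at 3% error.
n0 = 1_000_000
a = 0.03 * n0 ** nu

for n in (n0, 10 * n0, 100 * n0):
    print(f"{n:>12,d} samples -> {100 * power_law_error(n, a, nu):.2f}% error")

# How many times more data you need to cut the error in half at this exponent.
halving_factor = 2 ** (1 / nu)
print(f"data multiplier to halve the error: {halving_factor:.1f}x")
```

Under these assumptions, every halving of the error costs roughly 50x more randomly collected data. That is the curve the paper is trying to beat.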

Why does this happen? Think of how gradient descent works. Your model parameters are updated based on the error calculated. If your error is not very high, you will not see huge changes to your model weights. This makes sense. If you’re almost right, you don’t want your model to change a lot.

Classical randomly selected data generates slow power law error scaling because each extra training example provides less new information about the correct decision boundary than the previous example.

In such a case, adding more standard samples to your training will not do much. Your model will predict something very close to the correct answer, and the resulting changes to your parameters are negligible. You’ve essentially just wasted computation. If you have already trained with billions of samples (many of these 99% accuracy projects do), then any given image will not add much new information to your overall training. This logic also holds true for architectures like trees, which aren’t calculating gradients but are relying on other kinds of error.
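Here is a toy version of that argument. It is a minimal sketch that assumes a linear model with squared error, where the gradient is just the prediction error times the input. The weights, input, and targets are invented for illustration.

```python
import numpy as np

def sgd_update(w, x, y, lr=0.1):
    """One SGD step for a linear model with loss 0.5 * (w @ x - y) ** 2."""
    error = w @ x - y
    grad = error * x              # gradient of the loss with respect to w
    return w - lr * grad, grad

w = np.array([1.0, -2.0])
x = np.array([0.5, 1.0])          # the model predicts w @ x = -1.5

# "Easy" sample: the target is almost exactly what the model already predicts.
_, grad_easy = sgd_update(w, x, y=-1.49)
# "Hard" sample: same input, but the target is far from the prediction.
_, grad_hard = sgd_update(w, x, y=2.0)

easy_norm = np.linalg.norm(grad_easy)
hard_norm = np.linalg.norm(grad_hard)
print(f"easy update size: {easy_norm:.4f}, hard update size: {hard_norm:.4f}")
```

Both samples cost the same forward and backward pass, but the easy one barely moves the weights. Deep networks are messier than this, but the proportionality between error and update size is the same intuition.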

Instead, you can save a whole bunch of time, electricity, and computational resources by just picking only the samples that will add some information to your overall training. If you have already gotten a good amount, this would be a good time to implement chaotic data augmentation/inject model noise to make your systems more robust. I’ve covered these many times in my content, so check out my other articles/videos for more information.

So now that we have covered the need for having a pruning/filtering protocol that can select the best samples for adding more information to the predictions, let’s go over how you can pick the best samples. The approach that Meta researchers came up with is different to the one system I built and have used extensively, but it has some great results regardless. I will be looking to compare it against what I did soon. For now, let’s just go over their system.

How to pick the best data samples for Machine Learning

So what kind of a procedure did Meta AI come up with to pick the best samples? Some very fancy deep learning computation on the cutting edge? No. Turns out this research team was feeling rebellious. Their solution was simple, cost-effective, and applicable to projects of multiple sizes and capabilities.

Their idea is relatively simple. Cluster the samples. Any given sample is then easy or hard depending on how far it is from the nearest centroid: close to the centroid means easy, far away means hard. Pretty elegant. In their words:

To compute a self-supervised pruning metric for ImageNet, we perform k-means clustering in the embedding space of an ImageNet pre-trained self-supervised model (here: SWaV [31]), and define the difficulty of each data point by the distance to its nearest cluster centroid, or prototype. Thus easy (hard) examples are the most (least) prototypical.
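To make the metric concrete, here is a minimal sketch of the idea in NumPy. This is not the authors' code: random vectors stand in for the self-supervised embeddings, the k-means is a bare-bones Lloyd's loop, and the names `kmeans`, `prototype_distance`, and `prune` are my own.

```python
import numpy as np

def kmeans(x, k, n_iter=20, seed=0):
    """Plain Lloyd's k-means; returns centroids of shape (k, dim)."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each point to its nearest centroid.
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its points (skip empty clusters).
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = x[labels == j].mean(axis=0)
    return centroids

def prototype_distance(x, centroids):
    """Difficulty score: distance from each point to its nearest centroid."""
    dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
    return dists.min(axis=1)

def prune(scores, keep_fraction, keep="hard"):
    """Return indices of the samples to keep, ranked by difficulty score."""
    n_keep = max(1, int(round(keep_fraction * len(scores))))
    order = np.argsort(scores)    # easiest (closest to a prototype) first
    return order[-n_keep:] if keep == "hard" else order[:n_keep]

# Random vectors stand in for real embeddings (an assumption for this demo).
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(1000, 16))

centroids = kmeans(embeddings, k=10)
scores = prototype_distance(embeddings, centroids)
kept = prune(scores, keep_fraction=0.7, keep="hard")
print(f"kept {len(kept)} of {len(scores)} samples")
```

In a real pipeline you would swap the random vectors for embeddings from a pre-trained self-supervised model and use a proper k-means implementation. Notice that no labels are needed anywhere in the scoring.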

I really like this solution. It makes intuitive sense, is not super expensive to work with, and doesn’t explicitly need labeling (a huge plus). Despite this, it holds up really well when compared to the other, costlier protocols used to prune out the uninformative samples. This is exciting and definitely warrants further investigation. Integration of Self-Supervision into data quality checks is something I’ve talked about before, but this is a clear indication that this approach has a lot of potential for the future.

However, this isn’t what stood out to me the most. When it comes to clustering, having the right number of clusters is usually very important. Yet this approach is very robust to the choice of k. Truly exceptional stuff.

This allows the researchers to not have to spend a lot of resources setting up the experiments, which I appreciate. Too many papers are impractical because of how much was put into getting the perfect conditions. It allows them to follow through on the promise made early in the paper and really deliver a solution that is practical and innovative.

This paper has a couple of other interesting aspects to explore. For example, they mention different pruning strategies depending on dataset size: keep the easy samples when the dataset is small, and keep the hard samples when it is large. Their analysis of different classes and how they cluster was pretty interesting too. Another standout to me was their approach to force alignment between clusters (unsupervised) and classes (supervised). They took all the samples of a class and averaged out the embeddings. This approach beats everything else and has some interesting implications for latent space encoding that I will cover another time. If you’re interested in that, make sure you connect with me to not miss out. All my relevant links are at the end of this article.
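The class-averaging trick is easy to sketch too. Below is a rough NumPy version, using synthetic embeddings and labels. Scoring each sample by the distance to its own class prototype is an assumption of this sketch, and the function names are mine, not the paper's.

```python
import numpy as np

def class_prototypes(embeddings, labels):
    """Average the embeddings of each class to get one prototype per class."""
    classes = np.unique(labels)   # sorted unique class ids
    protos = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])
    return classes, protos

def class_prototype_distance(embeddings, labels):
    """Difficulty score: distance from each sample to its own class prototype."""
    classes, protos = class_prototypes(embeddings, labels)
    idx = np.searchsorted(classes, labels)   # row of each sample's prototype
    return np.linalg.norm(embeddings - protos[idx], axis=1)

# Synthetic stand-in data: 5 classes, each shifted by its class id.
rng = np.random.default_rng(0)
labels = rng.integers(0, 5, size=500)
embeddings = rng.normal(size=(500, 8)) + labels[:, None]

scores = class_prototype_distance(embeddings, labels)
hardest = np.argsort(scores)[-50:]   # the 50 least prototypical samples
print(f"mean distance to class prototype: {scores.mean():.3f}")
```

From here the selection step is just a sort: keep the low scores if your dataset is small, keep the high scores if it is large.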

That is it for this piece. I appreciate your time. As always, if you’re interested in reaching out to me or checking out my other work, links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

Excellent summary! Question, though. Isn't this just the same argument the Active Learning community has been hammering all along?