ACI has been achieved internally: How to solve complex software engineering tasks with AI-Agents [Guest]

Learning from how SWE-Agent uses large language models and Agent-Computer Interfaces to improve software development.

Hey, it’s Devansh 👋👋

Our chocolate milk cult has a lot of experts and prominent figures doing cool things. In the series Guests, I will invite these experts to come in and share their insights on various topics that they have studied/worked on. If you or someone you know has interesting ideas in Tech, AI, or any other fields, I would love to have you come on here and share your knowledge.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 100K readers weekly.

PS- We follow a “pay what you can” model, which allows you to support within your means, and support my mission of providing high-quality education to everyone for less than the price of a cup of coffee. Check out this post for more details and to find a plan that works for you.

Mradul Kanugo leads the AI division at Softude. He has 8+ years of experience, and is passionate about applying AI to solve real-world problems. Beyond work, he enjoys playing the Bansuri. Reach out to him on LinkedIn over here or follow his Twitter @MradulKanugo. Some of you may remember his earlier guest post on Prompt Testing for LLMs, which was very well received.

Mradul will be continuing his theme of improving testing with AI with today’s post on agent-computer interfaces (ACI). ACI focuses on the development of AI Agents that interact with computing interfaces, which enables dynamic interactions between an AI Agent and IRL environments (think Robots, but virtual). The rise of Large Language Models has enabled a new generation of ACI agents that can handle a more diverse array of inputs and commands, making more intelligent ACI agents commercially viable.

The integration of ACI with Software-focused AI Agents can significantly boost tech teams' testing capacities, allowing them to test products in ways that are closer to how users work with them. In a world with increasing labor costs, ACI can help organizations conduct inexpensive, large-scale software testing. Furthermore, well-designed ACI protocols can help us test the disability-friendliness of projects, and ACI has great synergy with AI observability/monitoring, security, and alignment, all of which are becoming increasingly important for investors and teams looking to invest in AI.

A more comprehensive analysis of ACI and why you should care about it is given below-

In the following article, we will analyze the excellent work done on ACI-based AI agents to understand what makes ACI-based software agents effective and how you can build them for yourself. Particularly noteworthy is how the team accomplished great results by optimizing their commands and feedback to make them easy for LLMs to use, instead of trying to force the more expensive and uncontrollable LLMs to adapt to their needs.

This is an excellent example of good design and how it can serve you more than any expensive fine-tuning/guardrail. Now, I’ll let Mradul take the stage to cover the SWE-agent research in more detail.

If the title rings a bell, it's because it's inspired by Sam Altman's humorous quip, "AGI has been achieved internally." If you're not familiar with the reference, don't worry; you can find more context on the joke by following this link.

In this blog post, we'll take an in-depth look at the paper "SWE-AGENT: AGENT-COMPUTER INTERFACES ENABLE AUTOMATED SOFTWARE ENGINEERING." What makes this paper particularly interesting is its exploration of not only the novel agent proposed but also the methodology behind creating such agents. The paper discusses experiments, conclusions, and valuable insights that can be applied to the development of future agents. Additionally, it offers useful takeaways on how to effectively interact with LMs. By the end of this post, you'll have a better understanding of the concepts and techniques that could shape the future of agent development and LM interaction.

Let's dive in and discover what this paper has to offer!

Language Models (LMs) have become indispensable tools for software developers, serving as helpful assistants in various programming tasks. Traditionally, users have acted as intermediaries between the LM and the computer, executing LM-generated code and requesting refinements based on computer feedback, such as error messages. However, recent advancements have seen LMs being employed as autonomous agents capable of interacting with computer environments without human intervention. This shift has revolutionized the way developers leverage LMs in their day-to-day work.

Solving the Right Problem

While agents and LMs have the potential to significantly accelerate software development, their application in realistic settings remains largely unexplored. Agents have demonstrated the ability to solve a wide range of coding problems, but these problems are often well-defined and contain all the necessary information. In real-world scenarios, this is rarely the case. To address this challenge, the paper proposes tackling real-world software engineering problems, and SWE-bench serves as an ideal testing ground.

What is SWE-bench?

SWE-bench is a comprehensive evaluation framework comprising 2,294 software engineering problems sourced from real GitHub issues and their corresponding pull requests across 12 popular Python repositories. The framework presents a language model with a codebase and a description of an issue to be resolved, tasking the model with modifying the codebase to address the issue. Resolving issues in SWE-bench often necessitates understanding and coordinating changes across multiple functions, classes, and even files simultaneously. This requires models to interact with execution environments, process extremely long contexts, and perform complex reasoning that goes beyond traditional code generation tasks.

To learn more about SWE-bench, you can read the paper or visit their website.

Now that the problem has been correctly framed, let's explore the novel contributions of the paper. The paper introduces SWE-agent, an LM-based autonomous system capable of interacting with a computer to solve complex, real-world software engineering problems.

But before we dive into the details, you might be wondering about its effectiveness. When using GPT-4 Turbo as the base LLM, SWE-agent successfully solves 12.5% of the 2,294 SWE-bench test issues, significantly outperforming the previous best resolve rate of 3.8% achieved by a non-interactive, retrieval-augmented system.

Impressive results, right? Now that we know this work yields substantial improvements, let's delve into the two key contributions of the paper: SWE-Agent (the high-performing agent we mentioned) and, more importantly, ACI (not to be confused with AGI).

What is ACI?

It stands for Agent-Computer Interface.

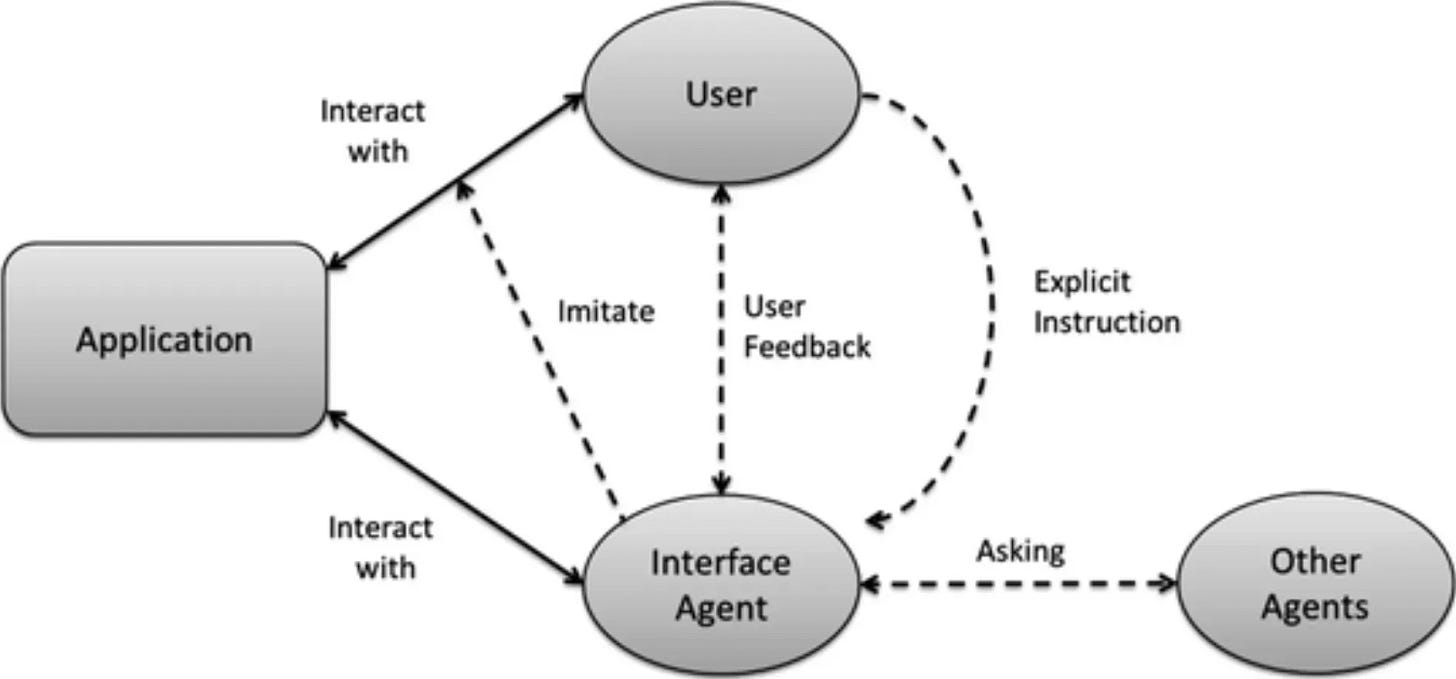

Imagine a language model (LM) functioning as an agent, interacting with an environment by executing actions and receiving feedback in a continuous loop. While this concept is well-established in robotics, where agents control physical actuators, the digital realm offers unparalleled flexibility in creating interfaces between agents and computers.

These interfaces come in various forms, such as APIs for programs and UIs for humans. However, LM agents represent a brand new category of end-users, and the interface they use to interact with computers is known as the Agent-Computer Interface (ACI).

The interaction between agents and computers resembles a game of ping-pong, with the agent issuing commands and the computer responding with output. The ACI acts as the referee, specifying the available commands and defining how the environment state is communicated back to the LM after each command is executed.

But the ACI's responsibilities don't end there. It also maintains a history of all previous commands and observations, ensuring a comprehensive record. At each step, the ACI manages how this information should be formatted and combined with high-level instructions to create a single input for the language model. This process ensures that the LM agent has all the necessary context and guidance to make informed decisions and take appropriate actions within the digital environment.
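To make the loop described above concrete, here is a minimal sketch in Python. It is not SWE-agent's implementation: the names `MiniACI`, `run_episode`, and `scripted_policy` are hypothetical, and the "LM" is a scripted stand-in so the example runs without any model. It only illustrates the three responsibilities named above: defining the available commands, returning an observation after each command, and folding the history plus instructions into a single input.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class MiniACI:
    """Toy agent-computer interface: a command table plus an interaction history."""
    instructions: str
    commands: Dict[str, Callable[[str], str]]
    history: List[Tuple[str, str]] = field(default_factory=list)

    def build_prompt(self) -> str:
        """Combine instructions and the full history into one model input."""
        lines = [self.instructions,
                 "Available commands: " + ", ".join(sorted(self.commands))]
        for action, observation in self.history:
            lines.append(f"ACTION: {action}")
            lines.append(f"OBSERVATION: {observation}")
        return "\n".join(lines)

    def step(self, action: str) -> str:
        """Execute one command and record the resulting observation."""
        name, _, arg = action.partition(" ")
        handler = self.commands.get(name)
        observation = handler(arg) if handler else f"Unknown command: {name}"
        self.history.append((action, observation))
        return observation


def run_episode(aci: MiniACI, policy: Callable[[str], str], max_steps: int = 5):
    """Alternate between the policy (the 'agent') and the interface."""
    for _ in range(max_steps):
        action = policy(aci.build_prompt())
        if action == "submit":
            break
        aci.step(action)
    return aci.history


# Scripted stand-in for a language model (an assumption for this demo):
# it picks its next action from how many actions are already in the prompt.
SCRIPT = ["echo hello", "shout hi", "submit"]


def scripted_policy(prompt: str) -> str:
    return SCRIPT[prompt.count("ACTION: ")]


demo_aci = MiniACI("Fix the failing test.", {"echo": lambda arg: arg})
demo_history = run_episode(demo_aci, scripted_policy)
print(demo_aci.build_prompt())
```

Swapping `scripted_policy` for a call to a real model would be the only change needed to turn this into a live loop. Everything the model sees is decided by `build_prompt`, which is where most of the interface design effort goes.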

By designing effective ACIs, we can harness the power of language models to create intelligent agents that can interact with digital environments in a more intuitive and efficient manner. This opens up a world of possibilities for automation and problem-solving.

But what makes an ACI effective?

Here are some key properties to consider:

Simplicity and clarity in actions: ACIs should prioritize actions that are straightforward and easy to understand. Rather than overwhelming agents with a plethora of options and complex documentation, commands should be concise and intuitive. This approach minimizes the need for extensive demonstrations or fine-tuning, enabling agents to utilize the interface effectively with ease.

Efficiency in operations: ACIs should aim to consolidate essential operations, such as file navigation and editing, into as few actions as possible. By designing efficient actions, agents can make significant progress towards their goals in a single step. It is crucial to avoid a design that requires composing multiple simple actions across several turns, as this can hinder the streamlining of higher-order operations.

Informative environment feedback: ACIs should give agents high-quality feedback that conveys meaningful information about the current environment state and the effects of their recent actions. The feedback should be relevant and concise, avoiding unnecessary details. For instance, when an agent edits a file, showing it the revised contents helps it understand the impact of its changes.

Guardrails to mitigate error propagation: Just like humans, language models can make mistakes when editing or searching. However, they often struggle to recover from these errors. Implementing guardrails, such as a code syntax checker that automatically detects mistakes, can help prevent error propagation and assist agents in identifying and correcting issues promptly.

How SWE-Agent Uses Effective ACIs to Get Things Done

SWE-Agent provides an intuitive interface for language models to act as software engineering agents, enabling them to efficiently search, navigate, edit, and execute code commands. This is achieved through the thoughtful design of the agent's search and navigation capabilities, file viewer, file editor, and context management. The system is built on top of the Linux shell, granting access to common Linux commands and utilities. Let's take a closer look at the components of the SWE-Agent interface.

Search and Navigation

In the typical shell-only environment, language models often struggle to find the information they need. They may resort to a series of "cd," "ls," and "cat" commands to explore the codebase, which is inefficient and consumes many turns. Even when they use commands like "grep" or "find" to search for specific terms, they can get back an overwhelming number of irrelevant results, making it difficult to locate the desired information.

SWE-Agent addresses this issue by introducing special commands such as "find_file," "search_file," and "search_dir." These commands are designed to provide concise summaries of search results, greatly simplifying the process of locating the necessary files and content. The "find_file" command searches for filenames within the repository, while "search_file" and "search_dir" search for specific strings within a file or a subdirectory.

To keep the search results manageable, SWE-Agent limits them to a maximum of 50 per query. If a search yields more than 50 results, the agent receives a prompt asking it to refine its query and be more specific. This approach prevents the language model from being overwhelmed with excessive information and enables it to quickly identify the relevant content.
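The capped, summarized search described above can be sketched roughly as follows. This is an illustrative approximation, not SWE-agent's code: the function body, the message wording, and the choice to count one "result" per matching file are all assumptions made for the example. Only the cap of 50 comes from the post.

```python
import os
import tempfile

MAX_RESULTS = 50  # the cap described in the post


def search_dir(term: str, root: str = ".", max_results: int = MAX_RESULTS) -> str:
    """Summarize matches per file, or ask for a narrower query if too many files hit."""
    counts = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, "r", encoding="utf-8", errors="ignore") as fh:
                    hits = sum(1 for line in fh if term in line)
            except OSError:
                continue  # unreadable file: skip it rather than fail the search
            if hits:
                counts[os.path.relpath(path, root)] = hits

    if not counts:
        return f'No matches found for "{term}" in {root}'
    if len(counts) > max_results:
        # Assumption: one "result" is one matching file.
        return (f'More than {max_results} files matched "{term}" in {root}. '
                "Please narrow your search.")
    lines = [f'Found {sum(counts.values())} matches for "{term}" in {root}:']
    lines += [f"{path} ({hits} matches)" for path, hits in sorted(counts.items())]
    return "\n".join(lines)


# Small demo on a throwaway directory.
with tempfile.TemporaryDirectory() as demo_root:
    for fname, text in [("a.py", "needle\nhay\nneedle\n"),
                        ("b.py", "needle\n"),
                        ("c.py", "hay\n")]:
        with open(os.path.join(demo_root, fname), "w", encoding="utf-8") as fh:
            fh.write(text)
    summary = search_dir("needle", demo_root)
    too_many = search_dir("needle", demo_root, max_results=1)
    nothing = search_dir("zzz", demo_root)

print(summary)
```

The design point is that the agent never sees raw matching lines from the whole tree. It gets a short list of candidate files, or an explicit instruction to narrow the query, and can then open a specific file.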

File Viewer

Once the models have located the desired file, they can view its contents using the interactive file viewer by invoking the "open" command with the appropriate file path. The file viewer displays a window of at most 100 lines of the file at a time. The agent can navigate this window using the "scroll_down" and "scroll_up" commands or jump to a specific line using the "goto" command. To facilitate in-file navigation and code localization, the viewer displays the full path of the open file, the total number of lines, the number of lines omitted before and after the current window, and the line numbers.
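A windowed viewer along these lines could look like the sketch below. The class name `FileViewer`, the exact header and footer text, and the choice to center the window on the target line in `goto` are assumptions for illustration. The 100-line window and the displayed metadata (path, total lines, lines omitted above and below, line numbers) follow the description above.

```python
from dataclasses import dataclass
from typing import List

WINDOW = 100  # maximum number of lines shown at once, per the post


@dataclass
class FileViewer:
    path: str
    lines: List[str]
    top: int = 0          # zero-based index of the first visible line
    window: int = WINDOW

    def _clamp(self, top: int) -> int:
        """Keep the window inside the file."""
        return max(0, min(top, max(0, len(self.lines) - self.window)))

    def render(self) -> str:
        end = min(self.top + self.window, len(self.lines))
        out = [f"[File: {self.path} ({len(self.lines)} lines total)]"]
        if self.top > 0:
            out.append(f"({self.top} more lines above)")
        out += [f"{i + 1}:{self.lines[i]}" for i in range(self.top, end)]
        below = len(self.lines) - end
        if below > 0:
            out.append(f"({below} more lines below)")
        return "\n".join(out)

    def scroll_down(self) -> str:
        self.top = self._clamp(self.top + self.window)
        return self.render()

    def scroll_up(self) -> str:
        self.top = self._clamp(self.top - self.window)
        return self.render()

    def goto(self, line_number: int) -> str:
        """Jump so that the 1-based line_number sits near the middle of the window."""
        self.top = self._clamp(line_number - 1 - self.window // 2)
        return self.render()


demo_lines = [f"x = {i}" for i in range(1, 251)]
viewer = FileViewer("demo.py", demo_lines)
first_view = viewer.render()
jumped_view = viewer.goto(200)
print(jumped_view.splitlines()[:3])
```

Every command returns the rendered window, so the agent always knows where it is in the file without issuing a second command.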

The File Viewer plays a crucial role in a language agent's ability to comprehend file content and make appropriate edits. In a Terminal-only setting, commands like "cat" and "printf" can easily inundate a language agent's context window with an excessive amount of file content, most of which is typically irrelevant to the issue at hand. SWE-Agent's File Viewer allows the agent to filter out distractions and focus on pertinent code snippets, which is essential for generating effective edits.

File Editor

SWE-Agent offers commands that enable models to create and edit files. The "edit" command works in conjunction with the file viewer, allowing agents to replace a specific range of lines in the open file. The "edit" command requires three arguments: the start line, end line, and replacement text. In a single step, agents can replace all lines between the start and end lines with the replacement text. After edits are applied, the file viewer automatically displays the updated content, enabling the agent to observe the effects of their edit immediately without the need to invoke additional commands.
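As a rough illustration of the line-range replacement described above, the sketch below implements an `edit` function with the same three arguments (start line, end line, replacement text). The function and the `numbered` helper are hypothetical stand-ins rather than SWE-agent's code, and the error handling is an assumption.

```python
from typing import List


def edit(lines: List[str], start: int, end: int, replacement: str) -> List[str]:
    """Return a copy of `lines` with 1-based, inclusive lines start..end replaced."""
    if not (1 <= start <= end <= len(lines)):
        raise ValueError(f"Invalid line range {start}-{end} for a {len(lines)}-line file")
    return lines[:start - 1] + replacement.splitlines() + lines[end:]


def numbered(lines: List[str]) -> str:
    """Render lines with line numbers, as feedback shown right after an edit."""
    return "\n".join(f"{i}:{text}" for i, text in enumerate(lines, start=1))


source = [
    "def add(a, b):",
    "    return a - b",
    "",
    "print(add(1, 2))",
]
updated = edit(source, 2, 2, "    return a + b")
print(numbered(updated))  # the agent sees the result of its edit immediately
```

Because the replacement text can span any number of lines, a single call covers insertions, deletions, and multi-line rewrites, which is what lets the agent avoid chaining several shell commands for one change.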

SWE-Agent's file editor is designed to streamline the editing process into a single command that facilitates easy multi-line edits with consistent feedback. In the Shell-only setting, editing options are restrictive and prone to errors, such as replacing entire files through redirection and overwriting or using utilities like "sed" for single-line or search-and-replace edits. These methods have significant drawbacks, including inefficiency, error-proneness, and lack of immediate feedback. Without SWE-Agent's file editor interface, performance drops significantly.

To assist models in identifying format errors when editing files, a code linter is integrated into the edit function, alerting the model of any mistakes introduced during the editing process. Invalid edits are discarded, and the model is prompted to attempt editing the file again. This intervention significantly improves performance compared to the Shell-only and no-linting alternatives.
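The guardrail can be sketched as an edit that is checked before it is committed. The example below swaps in Python's built-in `ast.parse` as a simple syntax check. That is an assumption made to keep the sketch dependency-free, not the linter the SWE-agent authors use, and the feedback wording and the `guarded_edit` name are also hypothetical.

```python
import ast
from typing import List, Tuple


def guarded_edit(lines: List[str], start: int, end: int,
                 replacement: str) -> Tuple[List[str], str]:
    """Apply a line-range edit only if the resulting file still parses as Python."""
    candidate = lines[:start - 1] + replacement.splitlines() + lines[end:]
    try:
        ast.parse("\n".join(candidate))  # stand-in for a real linter
    except SyntaxError as err:
        # Discard the edit and tell the agent what went wrong.
        message = (f"Your edit was not applied. Line {err.lineno}: {err.msg}. "
                   "Please fix the edit and try again.")
        return lines, message
    return candidate, "Edit applied."


source = ["def add(a, b):", "    return a + b"]

# The replacement drops the indentation, so the file would no longer parse.
rejected, rejected_msg = guarded_edit(source, 2, 2, "return a + b")

# A well-formed replacement goes through.
accepted, accepted_msg = guarded_edit(source, 2, 2, "    return a * b")

print(rejected_msg)
print(accepted_msg)
```

The important behavior is that a rejected edit leaves the file untouched, so one bad generation cannot corrupt the state that later steps build on.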

Context Management

The SWE-Agent system employs informative prompts, error messages, and history processors to keep the agent's context concise and informative. Agents receive instructions, documentation, and demonstrations on the correct use of bash and ACI commands. At each step, agents are instructed to generate both a thought and an action. Malformed generations trigger an error response, prompting the model to try again until a valid generation is received. Once a valid generation is received, all past error messages except the first are omitted. The agent's environment responses display computer output using a specific template; if a command produces no output, the message "Your command ran successfully and did not produce any output" is shown instead to enhance clarity. To further improve context relevance, observations preceding the last five are each collapsed into a single line, preserving essential information about the plan and action history while reducing unnecessary content. This leaves room for more interaction cycles and keeps outdated file content out of the context.
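A history processor of the kind described above might be sketched as follows. The tuple layout, the function name `collapse_history`, and the wording of the one-line placeholder are assumptions. The window of five full observations and the empty-output message come from the post.

```python
from typing import List, Tuple

KEEP_LAST = 5  # number of most recent observations kept in full, per the post
EMPTY_OUTPUT = "Your command ran successfully and did not produce any output"

Turn = Tuple[str, str, str]  # (thought, action, observation)


def collapse_history(turns: List[Turn], keep_last: int = KEEP_LAST) -> List[Turn]:
    """Collapse observations older than the last `keep_last` into a single line each."""
    cutoff = max(0, len(turns) - keep_last)
    processed = []
    for index, (thought, action, observation) in enumerate(turns):
        if index < cutoff:
            # Thought and action are kept, so the plan stays readable.
            count = len(observation.splitlines())
            observation = f"Old output omitted ({count} lines)"
        elif not observation.strip():
            observation = EMPTY_OUTPUT
        processed.append((thought, action, observation))
    return processed


# Eight turns; the last command produced no output.
demo_turns = [(f"thought {i}", f"cmd {i}", "a\nb\nc" if i != 7 else "")
              for i in range(8)]
processed = collapse_history(demo_turns)
for thought, action, observation in processed:
    print(action, "->", observation.replace("\n", " | "))
```

With eight turns, the first three observations shrink to one line each while their thoughts and actions survive, so the agent can still follow what it tried earlier without rereading stale file contents.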

Takeaways

Apart from the ecosystem discussed in the paper, there are several key lessons that we can apply to other areas when creating an agent and interacting with LMs. Here are a few crucial takeaways:

Optimize interfaces for agent-computer interactions: Human user interfaces may not always be the most suitable for agent-computer interactions. Experiments suggest that improved localization can be achieved through faster navigation and more informative search interfaces tailored to the needs of language models.

Prioritize efficient and compact file editing: Streamlined file editing is crucial for optimal performance. SWE-Agent's file editor and viewer consolidate the editing process into a single command, enabling easy multi-line edits with consistent feedback. The experiments reveal that agents are sensitive to the amount of content displayed in the file viewer, and striking the right balance is essential for performance.

Implement guardrails to enhance error recovery: Guardrails can significantly improve error recovery and overall performance. SWE-Agent incorporates an intervention in the edit logic, ensuring that modifications are only applied if they do not introduce major errors. This intervention proves to be highly effective in preventing error propagation and improving the model's performance.

These takeaways underscore the importance of designing agent-computer interfaces that cater to the specific needs and limitations of language models. By providing efficient search and navigation capabilities, streamlined file editing with immediate feedback, and guardrails to prevent error propagation, SWE-Agent demonstrates the potential for improved performance and more effective collaboration between language models and computer systems in software engineering tasks.

Want to know more?

You can watch a demo of SWE-Agent here, or try the agent on GitHub.

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819