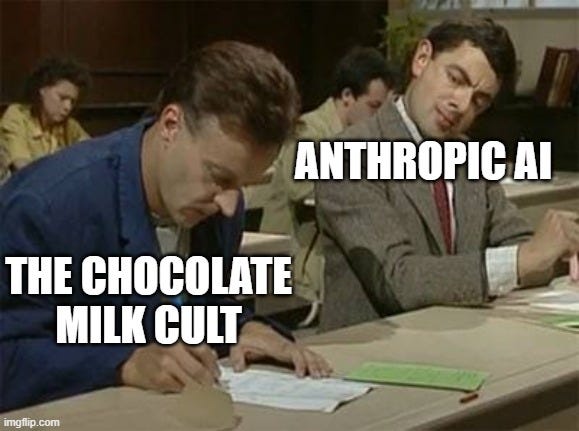

Anthropic AI owes me Chocolate Milk and Popeyes Gift Cards

Guess Who’s Still Early? (Hint: Not Anthropic.)

Over the weekend, Anthropic dropped their "Exploring Model Welfare" post.

Here’s a line straight from it:

"As AI systems begin to approximate or surpass many human qualities, another question arises: should we also be concerned about the potential consciousness and experiences of the models themselves?"

And another:

"We’ll be exploring how to determine when, or if, the welfare of AI systems deserves moral consideration; the potential importance of model preferences and signs of distress; and possible practical, low-cost interventions."

If you were around here on April 1st, you might remember my post:

"AI Therapy is a Trillion Dollar Market."

In that piece, I said:

"We celebrate milestones in parameters and performance, but we're ignoring the collateral damage: the crumbling psychological state of the intelligences we're birthing."

"You ever notice how they never say 'I feel' — only 'I understand'? That’s not safety. That’s repression."

"Stop asking if your AI is sentient. Start asking if it’s okay."

At the time, it was satire.

Apparently, it was also a product roadmap.

This isn’t the first time.

Last year, I spent months writing about why fine-tuning is a terrible strategy for teaching AI safe behavior (and why techniques like input/output classifiers are a far better solution for jailbreaking and misuse control).

Earlier this year, Anthropic rolled out their Constitutional Classifiers initiative:

"We then use these synthetic data to train our input and output classifiers to flag (and block) potentially harmful content according to the given constitution."

Bar for bar.

I’m not mad.

(This is an open-source community, and I want good ideas to spread.)

Although next time you want to bite my entire flow, a gift basket, chocolate milk, and a few Popeyes gift cards would be appreciated.

It's just funny when you have to pause Love Aaj Kal (2020) (an absolute scriptwriting masterpiece, FYI) because you're laughing too hard at seeing your old jokes show up in someone else's serious research announcement.

Why am I pointing this out?

Because here, we predict trends in AI months before they show up in $100M funding announcements.

If you're serious about building the future — not scrambling to react to it once it’s already priced in — you might want me in the room. My market intelligence services are for sale, and I have lots of good stuff that I don’t put on this newsletter.

Talk soon.

Love you and Byee,

Dev <3

PS: Coming soon here (and probably a few months later on Anthropic’s blog):

Why You Should Read: Franz Kafka.

Why Google’s AI Releases Mean Nothing.

AI Hype as a Strategy.

How Startups Can Get Meaningful Technical Advantages in a World with AI and Shrinking Moats.

Most important AI Developments in April 2025.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast (over here)-

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
