How to Protect your AI by using Guardrails [Guest]

The 3R Framework That Prevents AI Implementation Disasters

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, stay free from ads/hidden agendas, and supports my crippling chocolate milk addiction. We run on a “pay what you can” model—so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

When AI goes wrong, it rarely breaks with a bang. It fails quietly: by scaling bad assumptions, degrading trust, and burning out teams in the name of “innovation.” The failure has nothing to do with budgets, team size, or any of the standard issues flagged in rushed post-mortems. So what causes the collapse? All the teams optimized for capability before control.

In the following guest post, Joel Salinas shares a powerful 3R Guardrail Framework designed to prevent intelligent systems from creating organizational stupidity. Applying this framework ensures that your intended use of AI is realistic, grounded, and most importantly, useful.

As you read the guest post, here are some questions I’d love to hear your opinions on:

Flipping the Frame: The post presents the 3R's as a way to prevent disaster. Let's flip it. How would you use this same framework to go on offense? For example, how can the "Root" question be used not just to solve a problem, but to define a market position that competitors can't easily replicate?

The Role of Intelligence vs Reliability: Many assumptions around AGI and its impacts come from it doing economic tasks. When you think about it, modern AI has the “intelligence” to do most office work, but it needs to be integrated into services to do them reliably. Perhaps it’s time to question “how much intelligence is too much intelligence” and prioritize reliability over intelligence benchmarks.

The Advantage of Being Boring: Most companies are chasing "disruptive" AI, leading to erratic products and unreliable user experiences. How do you turn your disciplined, guardrail-driven approach into a competitive advantage? What communications/product differentiation would that take?

Drop your thoughts below, on these and anything else.

Let’s stay connected—find me on LinkedIn and Medium to further the conversation.

Two years ago, an executive friend asked me: "What will AI do for us?"

Today, the smarter question is: "What should AI do for us?"

The difference isn't semantic, it's strategic. And after helping dozens of organizations implement AI successfully, I've learned that the most dangerous moment isn't when you're moving too slowly, it's when you're moving too fast without boundaries…

And in today’s world, we have to move fast.

Picture driving mountain roads at night. You drive through every curve, trusting your reflexes. But you also see the guardrails, and you know that while they don’t slow you down, they contain the worst outcome of a mistake. They give you the confidence to maintain speed safely.

AI guardrails work the same way. They're not restrictions on innovation. They're the foundation that makes sustained innovation possible.

The Data Behind AI's Failure Problem

Before we dive into solutions, let's face the numbers. According to recent research from RAND Corporation and S&P Global Market Intelligence, over 80% of AI projects fail, twice the rate of traditional IT projects. The share of businesses scrapping most of their AI initiatives jumped to 42% in 2024, up from 17% the previous year.

Even more sobering: between 70% and 85% of current AI initiatives fail to meet their expected outcomes, while the average organization scraps 46% of AI proof-of-concepts before they reach production.

Here's what nobody talks about in AI implementation case studies: most failures aren't technical. They're strategic.

I've analyzed hundreds of AI rollouts across industries. The pattern is consistent: Organizations optimize for capabilities instead of clarity.

They ask "Can this AI tool automate our process?" instead of "Should this AI tool automate our process?" The result? Implementations that work technically but fail organizationally.

Consider these common scenarios:

Customer service AI that reduces costs but destroys satisfaction

Content generation tools that scale output but dilute brand voice

Predictive models that optimize metrics but ignore team dynamics

Automation that speeds processes but creates accountability gaps

Each case involves perfectly functional AI. The failure isn't in the technology; it’s in deploying that technology without guardrails.

The "Move Fast and Break Things" Problem

Silicon Valley's famous mantra has shaped how many organizations approach AI. The accelerationist mindset, which aims to reach superhuman intelligence as quickly as possible, treats "anything that slows down the adoption of the technology as roadblock and negative".

But speed without strategy is just expensive chaos.

RAND's research identified five leading root causes for AI project failure, with misunderstandings about project purpose and domain context being the most common reason. In other words, teams rush to implement before they understand what they're actually trying to solve.

The accelerationist approach assumes AI can't cause large-scale harm. Reality disagrees. 47% of organizations report experiencing at least one negative consequence from generative AI use, including bias amplification, security breaches, and operational disruptions.

What we need isn't less innovation, it's smarter innovation.

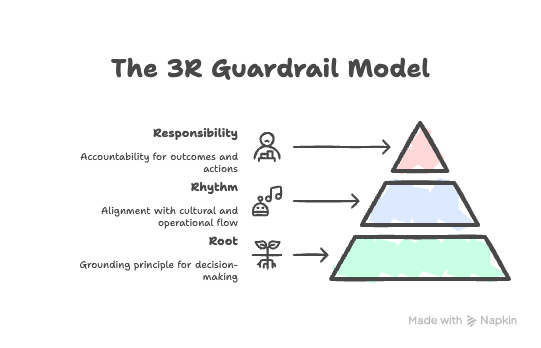

The 3R Guardrail Framework

After working with leaders from different industries, I developed what I call the 3R Guardrail Model. It's a systematic approach to evaluating AI decisions before they create irreversible consequences.

Think of it as a checklist for strategic AI implementation that balances speed with wisdom.

1. ROOT — Why Are We Using This?

Core principle: Motive before method.

Most AI disasters start here, with unclear motivation. Teams adopt AI from competitive pressure, investor expectations, or FOMO rather than strategic clarity.

Critical questions:

Are we solving a real problem or chasing a trend?

Would we pursue this if competitors weren't watching?

If this AI underperforms, does it still align with our core objectives?

Red flags: "Everyone's using ChatGPT." "Our investors expect AI integration." "This demo looked impressive."

Green lights: "This solves our specific bottleneck in customer onboarding." "This enhances our team's ability to focus on high-value work."

Technical implementation: Document the specific problem and measurable outcome before evaluating any AI tool. Define success metrics that align with business objectives, not just technical capabilities. Consider whether simpler, non-AI solutions might achieve the same result more reliably.
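One way to make this concrete is to write the problem statement down as a structured record and check it for gaps before any tool evaluation starts. The sketch below is only an illustration: the `RootBrief` class, its field names, and the example values are hypothetical, not part of the 3R framework itself.

```python
from dataclasses import dataclass


@dataclass
class RootBrief:
    """Hypothetical record written before any AI tool is evaluated."""
    problem: str                  # the specific bottleneck, in plain language
    metric: str                   # the business metric that should move
    baseline: float               # where the metric stands today
    target: float                 # where it must land to count as success
    non_ai_alternative: str = ""  # simpler option that was considered, if any

    def gaps(self) -> list[str]:
        """Return the reasons this brief is not ready for tool evaluation."""
        issues = []
        if not self.problem.strip():
            issues.append("no specific problem documented")
        if not self.metric.strip():
            issues.append("no success metric defined")
        if self.baseline == self.target:
            issues.append("target equals baseline, so success is unmeasurable")
        if not self.non_ai_alternative.strip():
            issues.append("no simpler non-AI alternative considered")
        return issues


# Example values are invented for illustration.
brief = RootBrief(
    problem="Customer onboarding stalls at account verification",
    metric="median days from signup to first use",
    baseline=9.0,
    target=4.0,
)
print(brief.gaps())  # ['no simpler non-AI alternative considered']
```

An empty list from `gaps()` means the brief covers the three points above: a documented problem, a measurable outcome, and a simpler alternative that was at least considered.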

2. RHYTHM — Where Does This Fit Our Culture?

Core principle: Innovation must match organizational bandwidth.

Even brilliant AI implementations fail when they outpace human adaptation. Research shows that 75% of organizations report they are either nearing, at, or past the change saturation point, with 45% of workers feeling burned out by frequent organizational changes.

Critical questions:

What existing processes need to change for this to work?

Does our team have bandwidth for training and adaptation?

How does this fit our current change management capacity?

Red flags: "We'll figure out training later." "People will adapt quickly." "This should be intuitive."

Green lights: "We can phase this in over three months." "Our team lead is excited to champion this." "This builds on workflows people already understand."

Technical implementation: Map AI rollouts to your team's natural change rhythm. Design phased deployments with clear success gates. Factor in API integration time, model training periods, and user interface adaptation. Plan for rollback procedures if adoption stalls.
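A phased deployment with success gates can be sketched as a small decision function that runs at each review. Everything here is an assumption made for illustration: the `Phase` fields, the threshold values, and the rule that two stalled reviews trigger a rollback would all need to be set by the team doing the rollout.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    min_adoption: float    # share of the team actively using the feature
    max_error_rate: float  # share of AI outputs flagged as wrong


def next_step(phase: Phase, adoption: float, error_rate: float,
              stalled_reviews: int = 0, max_stalls: int = 2) -> str:
    """Decide whether to advance, hold, or roll back at a review point."""
    if error_rate > phase.max_error_rate:
        return "rollback"  # quality gate failed
    if adoption < phase.min_adoption:
        # Adoption is below the gate: wait, unless it has stalled too long.
        return "rollback" if stalled_reviews >= max_stalls else "hold"
    return "advance"


# Threshold values below are invented for illustration.
pilot = Phase("pilot with one team", min_adoption=0.5, max_error_rate=0.10)
wider = Phase("department rollout", min_adoption=0.6, max_error_rate=0.05)

print(next_step(pilot, adoption=0.65, error_rate=0.04))                     # advance
print(next_step(wider, adoption=0.40, error_rate=0.03))                     # hold
print(next_step(wider, adoption=0.40, error_rate=0.03, stalled_reviews=2))  # rollback
```

Writing the gate down before launch keeps the rollback decision from being renegotiated under pressure once the rollout is underway.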

3. RESPONSIBILITY — Who's Accountable for Outcomes?

Core principle: Automation doesn't eliminate accountability, it centralizes it.

This is where organizations get dangerous. They assume AI decisions are the AI's responsibility. But models hallucinate, amplify bias, and make errors. Data quality and readiness issues account for 43% of AI project obstacles, while lack of technical maturity adds another 43%.

Critical questions:

Who owns the outcome when AI makes mistakes?

What review processes catch bias or unexpected results?

How do we escalate when AI recommendations conflict with human judgment?

Red flags: "The AI handles it automatically." "We'll monitor it eventually." "The vendor guarantees accuracy."

Green lights: "Sarah reviews all AI outputs before publication." "We audit monthly for bias and effectiveness." "Clear escalation protocols exist for edge cases."

Technical implementation: Establish human-in-the-loop systems for every high-impact AI use case. Define logging requirements for AI decisions, implement model performance monitoring, and create automated alerts for confidence thresholds. Set up A/B testing frameworks to validate AI recommendations against human judgment.
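As a rough sketch of these pieces working together, the snippet below logs every AI decision, routes high-impact or low-confidence outputs to a person, and computes a simple agreement rate between AI and human labels. The function names, the 0.80 threshold, and the sample data are assumptions for illustration. The agreement check is also a much simpler stand-in for a full A/B testing framework.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("ai_decisions")

REVIEW_THRESHOLD = 0.80  # assumed cutoff; tune per use case


def route(decision_id: str, confidence: float, high_impact: bool) -> str:
    """Log every AI decision and decide who acts on it."""
    log.info("decision=%s confidence=%.2f high_impact=%s",
             decision_id, confidence, high_impact)
    if high_impact or confidence < REVIEW_THRESHOLD:
        # Alert: a named human owner must sign off on this output.
        log.warning("decision=%s sent to human review", decision_id)
        return "human_review"
    return "auto_approve"


def agreement_rate(ai_labels: list[str], human_labels: list[str]) -> float:
    """Share of sampled cases where the AI and the human reviewer agreed."""
    if len(ai_labels) != len(human_labels) or not ai_labels:
        raise ValueError("need two non-empty samples of equal length")
    matches = sum(a == h for a, h in zip(ai_labels, human_labels))
    return matches / len(ai_labels)


# Sample data invented for illustration.
print(route("t-101", confidence=0.93, high_impact=True))   # human_review
print(route("t-102", confidence=0.93, high_impact=False))  # auto_approve
print(route("t-103", confidence=0.55, high_impact=False))  # human_review
print(agreement_rate(["refund", "deny", "refund", "refund"],
                     ["refund", "deny", "deny", "refund"]))  # 0.75
```

Routing every high-impact case to review regardless of confidence is a deliberate choice here: a confident model can still be confidently wrong, and the accountable person stays in the loop either way.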

Real-World Application: SaaS Support Transformation

Imagine a Series A SaaS startup approaches us with the following request:

"Replace human customer support with AI chatbots. We have 40% more tickets and need to automate."

Now, instead of rushing implementation, we apply the 3R framework:

ROOT Analysis: Their stated goal was cost reduction, but deeper conversation revealed the real need: scaling customer experience without quality degradation.

This clarity shifts the approach from replacement to augmentation, a hybrid model that preserves human expertise for complex issues.

RHYTHM Assessment: Their support team was already handling 40% more tickets due to rapid growth. Adding major workflow disruption would have pushed them past the breaking point.

We design a three-phase rollout matching their actual bandwidth: Phase 1 (AI for FAQ routing), Phase 2 (Response suggestions), Phase 3 (Automated resolution for simple issues).

RESPONSIBILITY Structure: Instead of full automation, the support lead retains escalation ownership with clear AI handoff protocols. We establish real-time quality monitoring and weekly review sessions to catch issues early.

The result? A strategic, successful implementation of AI that decreases cost WHILE ALSO decreasing risk.

Addressing the "Guardrails Slow Innovation" Myth

The most common pushback I hear is: "Guardrails will slow us down in a competitive market."

This thinking is backwards. Here's why guardrails actually accelerate sustainable innovation:

1. Reduced Debugging Time: Organizations that invest in data engineers and ML engineers can substantially shorten the time required to develop and deploy AI models. Proper infrastructure setup prevents the expensive refactoring that comes from rushed implementations.

2. Higher Success Rates: Teams with clear frameworks make better technology choices. Focusing on the problem, not the technology, is one of the most effective ways to avoid the "shiny object" syndrome that leads AI projects to failure.

3. Faster Scaling: When you build with clear boundaries, scaling becomes replication rather than reconstruction. Teams that skip guardrails often succeed with pilots but fail at production scale.

4. Competitive Advantage: While competitors rush to implement the latest models, organizations with strong guardrails consistently deliver reliable AI experiences. Customer trust compounds over time.

The Silicon Valley Paradox: The "move fast and break things" culture that made sense for web applications becomes dangerous with AI systems that affect real business operations. As one industry observer noted, "AI accelerationists view regulations and guardrails as unnecessary and overly cautious", but this approach leads to the high failure rates we're seeing across the industry.

Why This Matters to Technical Teams

You are a knowledgeable reader; you probably understand AI capabilities better than most. You know what's technically possible. But technical possibility and organizational wisdom are different things.

The 3R framework isn't about limiting AI's potential, it's about maximizing AI's impact by ensuring it aligns with human systems. The most sophisticated models are only as effective as the organizational context that implements them.

Organizations with strong AI guardrails will consistently outperform those without them. Not because they move slower, but because they move more strategically.

Moving Forward: Your Next Steps

AI will continue accelerating. New capabilities will emerge faster than most organizations can evaluate them. But speed isn't strategy. The future belongs to leaders who can harness AI's power while maintaining their organizational soul.

Your guardrails aren't barriers to innovation. They're the foundation that makes sustained innovation possible.

Before you implement your next AI tool, pause and ask:

Why are we doing this? (ROOT)

How does this fit our rhythm? (RHYTHM)

Who's accountable for the outcome? (RESPONSIBILITY)

Those three questions might be the difference between AI that transforms your organization and AI that derails it.

I, Joel Salinas, help mission-driven leaders navigate AI integration with wisdom and clarity through Leadership in Change. Subscribe for more.

Thank you for being here, and I hope you have a wonderful day.

Dev <3

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.


If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

I 100% agree with all of this. (In fact, I even quote the same research reports and stats in my own work).

One of the other key failure drivers I've seen is that many leaders think AI is a technology transformation, so they outsource it to the CTO. Or worse yet, to the IT team as a whole (in other words, without your third R).

But AI isn't just a technology transformation, because it doesn't just impact a few specific functions. Where many previous tech transformations (CRM, ERP, etc.) affected select functions, AI potentially impacts every single function, role, and process. And that makes it a whole business transformation - the largest I've seen in my lifetime.

So, leaders who outsource AI adoption are outsourcing their own core jobs. And that can't end well.

I'm curious what your thoughts are about how AI adoption/implementation compares to previous tech transformations. More specifically, how is AI the same as (and thus subject to the hard-earned lessons learned from) past tech transformations, and how is it different (where do we need to write new playbooks)?
