Interesting Content in AI, Software, Business, and Tech- 8/9/2023 [Updates]

Content to help you keep up with Machine Learning, Deep Learning, Data Science, Software Engineering, Finance, Business, and more

Hey, it’s Devansh 👋👋

In issues of Updates, I will share interesting content I came across. While the focus will be on AI and Tech, the ideas might range across business, philosophy, ethics, and much more. The goal is to share interesting content with y’all so that you can get a peek behind the scenes into my research process.

If you’d like to support my writing, consider becoming a premium subscriber to my sister publication Tech Made Simple to support my crippling chocolate milk addiction. Use the button below for a discount.

p.s. you can learn more about the paid plan here. If your company is looking for software/tech consulting- my company is open to helping more clients. We help with everything- from staffing to consulting, all the way to end-to-end website/application development. Message me using LinkedIn, by replying to this email, or on the social media links at the end of the article to discuss your needs and see if you’d be a good match.

A lot of people reach out to me for reading recommendations. I figured I'd start sharing whatever AI Papers/Publications, interesting books, videos, etc. I came across each week. Some will be technical, others not really. I will add whatever content I found really informative (and remembered throughout the week). These won't always be the most recent publications- just the ones I'm paying attention to this week. Without further ado, here are interesting readings/viewings for 8/9/2023. If you missed last week's readings, you can find them here.


Community Spotlight-

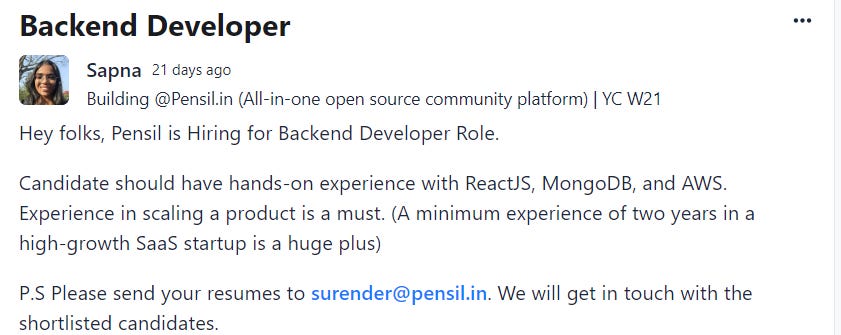

Since a lot of you are looking for work, here is one job opening I came across over here.

A lot of recruiters reach out to me with open positions, and I share them on my Instagram, so if you're interested in seeing open positions- follow me over here. I've been sharing open roles pretty regularly, and plan to do so 2-3 times per week, at least.

If you're doing interesting work and would like to be featured in the spotlight section, just drop your introduction in the comments/by reaching out to me. There are no rules- you could talk about a paper you've written, an interesting project you've worked on, some personal challenge you're working on, ask me to promote your company/product, or anything else you consider important. The goal is to get to know you better, and possibly connect you with interesting people in the community. No costs/obligations are attached.

AI Papers/Writeups

Operationalizing Machine Learning: An Interview Study

Organizations rely on machine learning engineers (MLEs) to operationalize ML, i.e., deploy and maintain ML pipelines in production. The process of operationalizing ML, or MLOps, consists of a continual loop of (i) data collection and labeling, (ii) experimentation to improve ML performance, (iii) evaluation throughout a multi-staged deployment process, and (iv) monitoring of performance drops in production. When considered together, these responsibilities seem staggering -- how does anyone do MLOps, what are the unaddressed challenges, and what are the implications for tool builders?

We conducted semi-structured ethnographic interviews with 18 MLEs working across many applications, including chatbots, autonomous vehicles, and finance. Our interviews expose three variables that govern success for a production ML deployment: Velocity, Validation, and Versioning. We summarize common practices for successful ML experimentation, deployment, and sustaining production performance. Finally, we discuss interviewees' pain points and anti-patterns, with implications for tool design.

Relevance-guided Supervision for OpenQA with ColBERT

Systems for Open-Domain Question Answering (OpenQA) generally depend on a retriever for finding candidate passages in a large corpus and a reader for extracting answers from those passages. In much recent work, the retriever is a learned component that uses coarse-grained vector representations of questions and passages. We argue that this modeling choice is insufficiently expressive for dealing with the complexity of natural language questions. To address this, we define ColBERT-QA, which adapts the scalable neural retrieval model ColBERT to OpenQA. ColBERT creates fine-grained interactions between questions and passages. We propose an efficient weak supervision strategy that iteratively uses ColBERT to create its own training data. This greatly improves OpenQA retrieval on Natural Questions, SQuAD, and TriviaQA, and the resulting system attains state-of-the-art extractive OpenQA performance on all three datasets.
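To make the "fine-grained interactions" idea a bit more concrete, here is a minimal sketch of ColBERT-style late interaction scoring: every query token keeps its own vector, gets matched to its most similar passage token, and those maxima are summed. The toy 2-d vectors and the function names are my own placeholders, not anything from the paper's code, and a real system would get its token embeddings from a trained BERT encoder.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def late_interaction_score(query_vecs, passage_vecs):
    """Sum, over query tokens, of the best cosine match among passage tokens."""
    q = [normalize(v) for v in query_vecs]
    p = [normalize(v) for v in passage_vecs]
    return sum(max(dot(qv, pv) for pv in p) for qv in q)


# Toy "token embeddings" (hypothetical values, purely for illustration).
query = [[1.0, 0.0], [0.0, 1.0]]
passage_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # has an exact match for each query token
passage_b = [[1.0, 1.0]]                          # only a blended, partial match

score_a = late_interaction_score(query, passage_a)
score_b = late_interaction_score(query, passage_b)
```

Passage A scores higher because each query token finds its own strong match, which is the kind of signal a single pooled vector per passage can blur.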

The Larger They Are, the Harder They Fail: Language Models do not Recognize Identifier Swaps in Python

Large Language Models (LLMs) have successfully been applied to code generation tasks, raising the question of how well these models understand programming. Typical programming languages have invariances and equivariances in their semantics that human programmers intuitively understand and exploit, such as the (near) invariance to the renaming of identifiers. We show that LLMs not only fail to properly generate correct Python code when default function names are swapped, but some of them even become more confident in their incorrect predictions as the model size increases, an instance of the recently discovered phenomenon of Inverse Scaling, which runs contrary to the commonly observed trend of increasing prediction quality with increasing model size. Our findings indicate that, despite their astonishing typical-case performance, LLMs still lack a deep, abstract understanding of the content they manipulate, making them unsuitable for tasks that statistically deviate from their training data, and that mere scaling is not enough to achieve such capability.
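If you haven't seen an identifier swap before, here is a small sketch of the kind of setup the abstract describes. The snippet runs a function in a namespace where `len` and `print` have traded places, so the correct way to count items is to call `print`. The helper name and the example function are my own illustrations, not the paper's benchmark code.

```python
import builtins

# Source written for a world where `len, print = print, len` has already run:
# inside it, the name `print` refers to the original `len`.
SWAPPED_SOURCE = """
def item_count(xs):
    return print(xs)
"""


def run_with_swapped_builtins(source):
    """Execute source in a fresh namespace where len and print are swapped."""
    namespace = {"len": builtins.print, "print": builtins.len}
    exec(source, namespace)  # names in `namespace` shadow the real builtins
    return namespace


ns = run_with_swapped_builtins(SWAPPED_SOURCE)
item_count = ns["item_count"]
count = item_count(["a", "b", "c"])
```

A human who reads the swap knows `print(xs)` is the right completion here. The paper's claim is that models tend to reach for `len(xs)` anyway, and that some get more confident in that wrong answer as they scale.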

The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks

Neural network pruning techniques can reduce the parameter counts of trained networks by over 90%, decreasing storage requirements and improving computational performance of inference without compromising accuracy. However, contemporary experience is that the sparse architectures produced by pruning are difficult to train from the start, which would similarly improve training performance.

We find that a standard pruning technique naturally uncovers subnetworks whose initializations made them capable of training effectively. Based on these results, we articulate the "lottery ticket hypothesis:" dense, randomly-initialized, feed-forward networks contain subnetworks ("winning tickets") that - when trained in isolation - reach test accuracy comparable to the original network in a similar number of iterations. The winning tickets we find have won the initialization lottery: their connections have initial weights that make training particularly effective.

We present an algorithm to identify winning tickets and a series of experiments that support the lottery ticket hypothesis and the importance of these fortuitous initializations. We consistently find winning tickets that are less than 10-20% of the size of several fully-connected and convolutional feed-forward architectures for MNIST and CIFAR10. Above this size, the winning tickets that we find learn faster than the original network and reach higher test accuracy.
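Here is a rough sketch of the train, prune, reset loop behind finding winning tickets: train the network, drop the smallest-magnitude surviving weights, reset the survivors to their original initial values, and repeat. It uses flat Python lists instead of real layers, and `toy_train` is a hypothetical stand-in for actual training, so treat it as an illustration of the control flow rather than the authors' implementation.

```python
def prune_by_magnitude(weights, mask, fraction):
    """Return a new mask with the smallest-magnitude surviving weights removed."""
    alive = [i for i, keep in enumerate(mask) if keep]
    n_prune = int(len(alive) * fraction)
    to_prune = sorted(alive, key=lambda i: abs(weights[i]))[:n_prune]
    new_mask = list(mask)
    for i in to_prune:
        new_mask[i] = 0
    return new_mask


def apply_mask(weights, mask):
    return [w * m for w, m in zip(weights, mask)]


def find_winning_ticket(init_weights, train_fn, rounds, fraction):
    """Iteratively train, prune, and reset survivors to their initial values."""
    mask = [1] * len(init_weights)
    for _ in range(rounds):
        # Every round starts again from the ORIGINAL initialization.
        trained = train_fn(apply_mask(init_weights, mask), mask)
        mask = prune_by_magnitude(trained, mask, fraction)
    return apply_mask(init_weights, mask), mask


def toy_train(weights, mask):
    """Hypothetical placeholder for training: just scales the weights."""
    return [2.0 * w for w in weights]


init = [0.5, -0.1, 0.3, -0.9, 0.05, 0.7, -0.2, 0.4]
ticket, ticket_mask = find_winning_ticket(init, toy_train, rounds=2, fraction=0.5)
```

The important detail is the reset: the surviving connections go back to their initial weights instead of keeping their trained values or getting a fresh random draw.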

AI recognition of patient race in medical imaging: a modelling study

In our study, we show that standard AI deep learning models can be trained to predict race from medical images with high performance across multiple imaging modalities, which was sustained under external validation conditions (x-ray imaging [area under the receiver operating characteristics curve (AUC) range 0·91–0·99], CT chest imaging [0·87–0·96], and mammography [0·81]). We also showed that this detection is not due to proxies or imaging-related surrogate covariates for race (eg, performance of possible confounders: body-mass index [AUC 0·55], disease distribution [0·61], and breast density [0·61]). Finally, we provide evidence to show that the ability of AI deep learning models persisted over all anatomical regions and frequency spectrums of the images, suggesting the efforts to control this behaviour when it is undesirable will be challenging and demand further study.
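For anyone who wants a feel for what those AUC numbers mean, here is a small sketch of AUC as a pairwise ranking probability: the chance that a randomly chosen positive example gets a higher score than a randomly chosen negative one, with ties counting as half. The scores and labels below are made up for illustration and are not from the study. The setup is binary, so a multi-class problem would need something like a one-vs-rest treatment, which is my assumption and not a description of the paper's evaluation.

```python
def auc(scores, labels):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up model scores: 1 = positive class, 0 = negative class.
perfect = auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0])
partial = auc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0])
chance = auc([0.5, 0.5, 0.5, 0.5], [1, 1, 0, 0])
```

On this reading, an AUC of 0.5 is coin-flip ranking and 1.0 is perfect separation, which is why the 0.55 to 0.61 reported for the possible confounders looks so weak next to 0.81 to 0.99 for the image models.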

How Amazon tackles a multi-billion dollar bot problem

In case you missed it, I broke down how Amazon detects robotic ad clicks. Catching bots is crucial for their ads to be effective, but it comes with a lot of very interesting challenges that the company had to deal with, including a lack of ground truth labels. For the breakdown, I went over 3 Amazon publications- Invalidating robotic ad clicks in real-time, Self Supervised Pre-training for Large Scale Tabular Data, and Real-Time Detection of Robotic Traffic in Online Advertising. Check it out, because it has a lot of interesting insights, both in ML experimentation and in deploying systems in production.

GPT-4 Can't Reason

GPT-4 was released in March 2023 to wide acclaim, marking a very substantial improvement across the board over GPT-3.5 (OpenAI's previously best model, which had powered the initial release of ChatGPT). Despite the genuinely impressive improvement, however, there are good reasons to be highly skeptical of GPT-4's ability to reason. This position paper discusses the nature of reasoning; criticizes the current formulation of reasoning problems in the NLP community and the way in which the reasoning performance of LLMs is currently evaluated; introduces a collection of 21 diverse reasoning problems; and performs a detailed qualitative analysis of GPT-4's performance on these problems. Based on the results of this analysis, the paper argues that, despite the occasional flashes of analytical brilliance, GPT-4 at present is utterly incapable of reasoning.

Tech Writeups-

Increase Business Value from the Cloud with Effective Cloud Governance

In this blog, we’ll cover the challenges around implementing cloud governance, recommendations on implementing and maintaining an effective cloud governance program, and customer success stories.

Cloud governance is a set of rules, practices, and oversight that ensures cloud use is accountable to your business objectives. Cloud governance spans multiple disciplines, including security, finance, people, processes, and operations, that overlap to complete a cloud governance strategy. In addition to the security and operational topics we discuss in this post, we recommend that you review the AWS Cloud Financial Management Guide and the people perspective of the AWS Cloud Adoption Framework (CAF) to complete your comprehensive cloud governance strategy.

The True Meaning of Technical Debt 💸

🌀 Luca Rossi writes some amazing content for Software Engineers and Managers who are obsessed with productivity. Loved his work on Tech Debt-

Ward describes debt as the natural result of writing code about something we don't have a proper understanding of.

He doesn't talk of poor code — which he says accounts for a very minor share of debt.

He talks of disagreement between business needs and how the software has been written.

But how do we land to such disagreement? In my experience, there are two offenders:

🎨 Wrong Design — what we built was wrong from the start!

🏃 Rapid Evolution — we built the right thing, but the landscape changed quickly and made it obsolete.

Let's see both more in detail 👇

Cool Videos-

An Exact Formula for RISK* Combat

A very interesting differential equation.

Continuous Delivery Pipelines: How to Build Better Software Faster • Dave Farley • GOTO 2021

I'll catch y'all with more of these next week. In the meantime, if you'd like to find me, here are my social links-

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
