Interesting Content in AI, Software, Business, and Tech- 03/13/2024 [Updates]

Content to help you keep up with Machine Learning, Deep Learning, Data Science, Software Engineering, Finance, Business, and more

Hey, it’s Devansh 👋👋

In issues of Updates, I will share interesting content I came across. While the focus will be on AI and Tech, the ideas might range across business, philosophy, ethics, and much more. The goal is to share interesting content with y’all so that you can get a peek behind the scenes into my research process.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, consider becoming a premium subscriber to my sister publication Tech Made Simple to support my crippling chocolate milk addiction. Use the button below for a lifetime 50% discount (5 USD/month, or 50 USD/year).

A lot of people reach out to me for reading recommendations. I figured I’d start sharing whatever AI Papers/Publications, interesting books, videos, etc. I come across each week. Some will be technical, others not really. I will add whatever content I found really informative (and remembered throughout the week). These won’t always be the most recent publications- just the ones I’m paying attention to this week. Without further ado, here are interesting readings/viewings for 03/13/2024. If you missed last week’s readings, you can find them here.

Reminder- We started an AI Made Simple Subreddit. Come join us over here- https://www.reddit.com/r/AIMadeSimple/. If you’d like to stay on top of community events and updates, join the discord for our cult here: https://discord.com/invite/EgrVtXSjYf.

Community Spotlight: vaibhav nakrani

vaibhav nakrani shares really well-made slides covering various topics in Machine Learning Engineering and Research. Creators like him are exceptionally helpful to me b/c their work is how I find new ideas that I didn't know about. If you are looking for snippets about various ideas in AI, I would super duper recommend his LinkedIn.

If you’re doing interesting work and would like to be featured in the spotlight section, just drop your introduction in the comments or reach out to me. There are no rules- you could talk about a paper you’ve written, an interesting project you’ve worked on, some personal challenge you’re working on, ask me to promote your company/product, or anything else you consider important. The goal is to get to know you better, and possibly connect you with interesting people in our chocolate milk cult. No costs/obligations are attached.

Previews

Curious about what articles I’m working on? Here are the previews for the next planned articles-

This tracks with Google’s own findings. Internally, 97% of Google software engineers are satisfied with Critique.

But what is Critique exactly and what makes it so good?

How does this pair with Google’s actual process of code review?

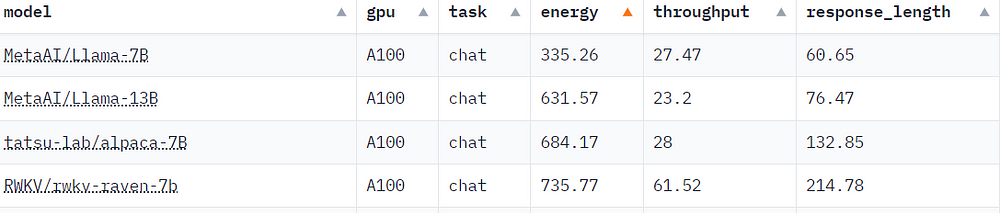

RWKV.

This got pushed back from last week due to personal circumstances, but I hope to get this done by the end of this week. These circumstances are also why my recommendations for both last week and this week are shorter than usual.

Highly Recommended

These are pieces that I feel are particularly well done. If you don’t have much time, make sure you at least catch these works.

As with all of his posts, Sebastian Raschka, PhD shared a lot of valuable insights in his post about the MLContest report. Every single post, article, or video/course he creates is very high-level and it always awes me how much he can put into all of this without slowing down.

What are the recent trends in machine learning, deep learning, and AI? Competitions are usually a great place to look for the tools that are actually used and what works well in practice. I really enjoyed the MLContest report last year and am delighted to read this year's write-up. Here are some highlights that stood out to me:

Battle of the Backbones: A Large-Scale Comparison of Pretrained Models across Computer Vision Tasks

In the post above, Seb also shared this great paper. Very important research for CV folk.

Neural network based computer vision systems are typically built on a backbone, a pretrained or randomly initialized feature extractor. Several years ago, the default option was an ImageNet-trained convolutional neural network. However, the recent past has seen the emergence of countless backbones pretrained using various algorithms and datasets. While this abundance of choice has led to performance increases for a range of systems, it is difficult for practitioners to make informed decisions about which backbone to choose. Battle of the Backbones (BoB) makes this choice easier by benchmarking a diverse suite of pretrained models, including vision-language models, those trained via self-supervised learning, and the Stable Diffusion backbone, across a diverse set of computer vision tasks ranging from classification to object detection to OOD generalization and more. Furthermore, BoB sheds light on promising directions for the research community to advance computer vision by illuminating strengths and weaknesses of existing approaches through a comprehensive analysis conducted on more than 1500 training runs. While vision transformers (ViTs) and self-supervised learning (SSL) are increasingly popular, we find that convolutional neural networks pretrained in a supervised fashion on large training sets still perform best on most tasks among the models we consider. Moreover, in apples-to-apples comparisons on the same architectures and similarly sized pretraining datasets, we find that SSL backbones are highly competitive, indicating that future works should perform SSL pretraining with advanced architectures and larger pretraining datasets. We release the raw results of our experiments along with code that allows researchers to put their own backbones through the gauntlet.

It's hard to be both concise and informative. This 1000-IQ scientific infographic by Logan Thorneloe is both.

While Nietzsche isn't my favorite philosopher, I do enjoy listening to various interpretations of his work/thoughts. This video (and others by this YouTuber) is among the best on Nietzsche. If you're interested in exploring how various styles of thought play with each other, this is a good watch.

Today we examine an 1875 Fragment, entitled "Science and Wisdom in Battle". Not only does this fragment contain one of my favorite quotations of Nietzsche's, it represents his continual grappling with the meaning of Ancient Greek culture. In particular, we discuss the importance of "relations of tension" in Nietzsche's earlier work: art versus science, culture versus the state, history versus forgetting, and of course, science and wisdom. Both are drives to knowledge, and the tension between them created philosophy in the tragic age of the Hellenes. Science is characterized by logical, objective, specialized knowledge, whereas Wisdom is defined by Nietzsche as a tendency for illogical generalization, leaping to one's ultimate goal, and an artistic desire to reflect the world in one's own mirror.

Random walks in 2D and 3D are fundamentally different (Markov chains approach)

It's not easy to make Math videos that are both fun and useful, but this one knocks it out of the park.

"A drunk man will find his way home, but a drunk bird may get lost forever." What is this sentence about?

In 2D, the random walk is "recurrent", i.e. you are guaranteed to go back to where you started; but in 3D, the random walk is "transient", the opposite of "recurrent". In fact, for the 2D case, that also means that you are guaranteed to go to ALL places in the world (the only constraint is, of course, time). [Think about why.]

Markov chains are also an important tool in modelling the real world, and so I feel like this is a good excuse for bringing it up.

At the end, I also compare this phenomenon to Stein's paradox - in both cases, there is a cutoff between 2 and 3 dimensions, and they have a similar intuitive explanation - is that a coincidence?
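If you want to poke at the 2D vs 3D claim yourself, here is a minimal Monte Carlo sketch (mine, not from the video). It estimates how often a simple random walk on the integer lattice comes back to its starting point within a fixed number of steps. The function names, the step cap, and the number of walks are all assumptions I picked for illustration. Because the walks are cut off after `max_steps`, the 2D estimate will sit noticeably below 1 (recurrence in 2D is guaranteed only in the limit, and convergence is slow), while the 3D estimate should hover near Pólya's constant of roughly 0.34.

```python
import random


def returns_to_origin(dim, max_steps, rng):
    """Run one simple random walk on the lattice Z^dim.

    Returns True if the walker is back at the origin at some point
    within max_steps steps, False otherwise.
    """
    position = [0] * dim
    for _ in range(max_steps):
        axis = rng.randrange(dim)               # pick a coordinate axis uniformly
        position[axis] += rng.choice((-1, 1))   # move one unit along that axis
        if not any(position):                   # all coordinates zero: back home
            return True
    return False


def estimate_return_probability(dim, walks=500, max_steps=1000, seed=0):
    """Fraction of simulated walks that revisit the origin before the step cap."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    hits = sum(returns_to_origin(dim, max_steps, rng) for _ in range(walks))
    return hits / walks


if __name__ == "__main__":
    for dim in (2, 3):
        print(f"{dim}D estimated return probability:",
              estimate_return_probability(dim))
```

Raising `max_steps` should push the 2D number up slowly toward 1, while the 3D number barely moves. That gap is the drunk man vs drunk bird difference in miniature.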

Mixtures of Experts Unlock Parameter Scaling for Deep RL

The recent rapid progress in (self) supervised learning models is in large part predicted by empirical scaling laws: a model's performance scales proportionally to its size. Analogous scaling laws remain elusive for reinforcement learning domains, however, where increasing the parameter count of a model often hurts its final performance. In this paper, we demonstrate that incorporating Mixture-of-Expert (MoE) modules, and in particular Soft MoEs (Puigcerver et al., 2023), into value-based networks results in more parameter-scalable models, evidenced by substantial performance increases across a variety of training regimes and model sizes. This work thus provides strong empirical evidence towards developing scaling laws for reinforcement learning.

Why Can’t Language Models Learn to Speak Backwards?

Understanding why LLMs are weak in the ways they are weak can teach us a lot about the limitations of our current training processes.

Language models can't learn as well in reverse? In this video, we look at the paper "Arrows of Time for Large Language Models" which studies this phenomenon and offers some theories to explain it.

The Murder of Sitting Bull - Native American History - Part 4 - Extra History

One of my motivations for writing my piece on the limitations of Data was my love for history. One of the most common themes that I see there is how many of the dominant narratives taught to us as fact were the result of propaganda. This video was another such example: Many settler Americans pretended that Sitting Bull was secretly European so that they didn't have to face the humiliation of losing to a tribe they considered inferior.

We delve into the final chapter of Sitting Bull's life, exploring his return from exile in Canada, his surprising reception in American cities, and his unexpected role in Buffalo Bill's Wild West Show. Despite his legendary status, Sitting Bull's aspirations to meet President Grover Cleveland are met with disappointment, leading him back to Standing Rock where he encounters the burgeoning Ghost Dance movement. As tensions escalate, Sitting Bull's involvement in the dance ultimately leads to a tragic confrontation with authorities, marking the poignant end of a remarkable legacy.

A cautionary tale about ChatGPT for advanced developers

Given how much noise is being made about Devin, it is important to cherish videos like this that provide a more balanced analysis of these tools.

ChatGPT has unlocked opportunities for me that I otherwise might not have had the time to achieve. But, without a careful strategy, ChatGPT and other AI coding assistants like GitHub Copilot can hinder your code quality and personal improvement.

Dr K: "There Is A Crisis Going On With Men!", “We’ve Produced Millions Of Lonely, Addicted Males!”

Clickbaity title aside, this is one of the better videos on the mental health crisis amongst men. Given Dr. K's experience with treating clients/engaging with his community, this is worth paying attention to.

Dr Alok Kanojia (HealthyGamerGG) is a psychiatrist and co-founder of the mental health coaching company 'Healthy Gamer', which aims to help with modern stressors, such as social media, video games, and online dating.

Lionsgate’s Grand Adventure for Hollywood Magic

The movie industry is dominated by the “Big Five” of Paramount Studios, Universal, Sony Pictures, Warner Brothers, and Walt Disney Pictures - who eat up over 80% of the box office. But in an era where awards are meaningless, streaming has replaced DVDs, and talent no longer moves the box office - the Big 5 movie studios have become conservative. Hollywood has devolved into non-stop superhero movies, nostalgia-centric remakes, and formulaic sequels of existing franchises where the ROI is safer and risk is much lower than any real original endeavor.

This gap has enabled smaller studios like MGM, Lionsgate, A24 and streaming services like Apple and Netflix to thrive with independent titles in this ever-competitive landscape. As consumers, we see the trailers, suffer through terrible movies, and are now being funneled to streaming services. Yet the industry has rarely, if ever, been covered from the perspective of the studio.

Instead of covering one of the Big 5 major film studios where the movie-making business is insulated, we’ll look at Lionsgate - one of the few remaining independent studios whose survival depends on making successful movies and TV shows. Lionsgate is best known for franchises like the Hunger Games, Now You See Me, The Expendables, Saw, John Wick, Step Up, Divergent along with solo hits like La La Land, Knives Out, Hacksaw Ridge, Mad Men, and Orange is the New Black. In this episode, we’ll cover the business and transformation of the movie and greater motion picture industry over the past two decades through the lens of Lionsgate.

Why is the determinant like that?

As a self-taught mathematician/AI person, it always helps to have videos like this b/c they give me insight into the workings/assumptions of the foundations we build our systems on.

Other Content

How Pinterest Scaled to 11 Million Users With Only 6 Engineers

In this video, we will be exploring how Pinterest managed to scale to 11 million users with only 6 engineers. We are going to explore their early tech stacks, issues they ran into with scaling and how they overcame those obstacles.

What haunts statisticians at night

Why Do Historians Only Care About Rich People? - How History Works

Have you ever wondered what your life would be like if you lived at a different time in history?

I am sure all of us have at one point or another, but it can be quite hard to imagine.

How would you live as a regular person? What would your house look like, what would your job be, what food would you eat, what would you do for fun, would you be educated and would your life be more or less stressful than the one you lead today?

The answer to all of these questions can be quite hard to work out. Historians have done much less work researching and reporting on the lives of common people than they have on the lives of a handful of wealthy and powerful individuals.

If you liked this article and wish to share it, please refer to the following guidelines.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
