Serious Problems With ChatGPT And Other LLMs That Internet Influencers Won’t Tell You About

With Make-A-Video, ChatGPT, PaLM, and other Large Language Models gaining so much attention, it is important to remember these models have major design issues.

My last post on the free Alternatives to ChatGPT did really well. So now it’s time for me to rain on your parade and cover something extremely important.

ChatGPT has been all the rage recently. People have been using it for all kinds of tasks- from writing sales emails and finishing college assignments, to even as a possible alternative to Google Search. Combine this with other Large Language Models like BERT, AI Art generators like Stable Diffusion and DALLE, and Google’s hits like GATO and Minerva for robotics and Math, and it seems like huge Transformers trained on a lot of data and compute seem like God’s Gift to Machine Learning.

While these models are primed to disrupt many industries, they also have a lot of design flaws that need to be accounted for. Without considering these flaws, the adoption of these models into your solutions will be hasty and have a lot of flaws. This is will hurt your business in the long run. In this article, I will go against the grain and cover some serious issues with the Large Language Models that you would have to deal with as you integrate them into your pipelines. Understanding these issues will help you use these powerful technologies the right way.

Cost

To get the very obvious out of the way, Large Language Models are expensive. Extremely expensive. This shouldn’t come as a shock to any of you, but the larger the model, the more expensive running the model will be. This occurs for two reasons. One obvious, one not so obvious.

Larger Models → More Params → Higher costs. You have to do more computations to get the same result, which is always going to be a challenge to deal with.

More diverse data →Larger Search Space to traverse through. Diversity is generally a good thing, but only up to a point. If you had a customer service bot, then it knowing how to program in Python is mostly useless. This would just be an unneeded overhead. Smaller models specialized for tasks would work much better (more on this in another section).

Keep in mind that a lot of ML models are deployed in contexts where they have to make a lot of inferences. Amazon Web Services estimates that “In deep learning applications, inference accounts for up to 90% of total operational costs”. Having huge models can really eat into your margins (and even turn the profits into losses). This is far from the only problem with LLMs.

Lower Performance

This is going to come as a shock to a lot of you, especially given the hysteria around these models. These models are not very good.

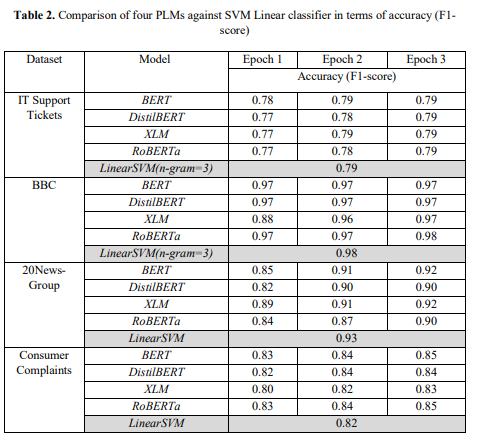

If you’ve been scrolling LinkedIn/YouTube this might come as a shock to you. After all, it seems that these models are doing so much. However, when it comes to business use cases, these models are often lose to very simple models. The authors of “A Comparison of SVM against Pre-trained Language Models (PLMs) for Text Classification Tasks” compared the performance of LLMs with a puny SVM for text classification in various specialized business contexts. They fine-tuned and used the following models-

These models were stacked against an SVM and some old-fashioned feature engineering. The results are in the following table-

As you can see, SVMs match the performance of these large models. This is a huge win for them, given the much higher costs associated with the bigger models. Keep in mind, text classification is one of the core functions of these bigger models.

This extends beyond just text classification. There are a lot of new-age internet experts claiming that AI will replace artists, developers, and other roles in the upcoming future. However, when you look into the data, this is far from true. Fast AI has an exceptional write-up investigating Github Copilot. One of their stand-out insights was- “According to OpenAI’s paper, Codex only gives the correct answer 29% of the time. And, as we’ve seen, the code it writes is generally poorly refactored and fails to take full advantage of existing solutions (even when they’re in Python’s standard library).” I did a more thorough breakdown of GitHub Copilot in my newsletter, so those of you interested can check that out.

I’ll also be going over why these models won’t be replacing artists/coders soon. For now, just keep this in mind- LLM training data isn’t created from just the best performers. It is taken from everyone. Take a second to think about the consequence of this design. Meanwhile, here is a pretty interesting thread with ChatGPT struggling with Basic Math.

If you’re actually looking to integrate these systems into your solution, just beware of the very high costs and often subpar performance of these systems. Often, you don’t even need Machine Learning, so Deep Learning becomes overkill. Simple AI can be used to create great, high-performing solutions. If you’re looking for a guide on how you can pick between AI, Machine Learning, and Deep Learning; check out the thread below.

Delusion

As you start researching these systems, you will learn a very interesting fact about Language Models- They are very vulnerable to delusion. While Adversarial Perturbation is a general problem for Deep Learners, LLMs are especially prone to ‘hallucinations’. LLMs function as advanced autocomplete tools and are very sensitive to their input prompts. Here is an example of ChatGPT inventing a completely new phrase, b/c the prompt asked it to define it.

However, this is far from the only case. Their black-box nature means that they can have strange outputs, for even simple tasks. Recently, I wrote an article called How Amazon makes Machine Learning More Trustworthy. In it, I broke down multiple publications by Amazon where they answered how they created AI models that were more immune to bias, and more robust while also protecting user privacy. I was curious how ChatGPT would summarize the article. Take a look-

It did a pretty solid job. However, the last sentence of the summary is pretty interesting, because I never wrote anything about human evaluations. This was something ChatGPT came up with, on its own. This is not

Fixed Dataset

Don’t forget, LLMs are trained on a fixed corpus of data. This makes them inherently static. Think of how quickly cultural norms and trends change. There has been a lot of controversy around AI art generators like Stable Diffusion creating artworks that propagate negative stereotypes. Individuals and research groups can get away with this because their primary intent is experimentation. People would be far-less forgiving of businesses.

To be useful in a commercial setting, they would need to change somewhat as fast as programming languages themselves change. This would require constant retraining and tuning (further increasing your costs). While techniques like Transfer Learning and Active Learning can reduce these costs, retraining will still be very expensive.

Don’t forget, about the extreme sampling bias in data collected from the internet. An AI trained on large models will replicate these biases. This is another point of caution that you want to consider before blindly jumping into using these Language Models in your systems.

Depending on your use case, this may or may not be a big deal. Take some time to evaluate it for yourself and take a judgment call. To reiterate, the point of this article is not to bash LLMs, but to help you be more aware of some of the overlooked issues with Large Language Models.

If you’re looking for a reliable way to integrate these models into your work to save a lot of resources, or are generally interested in Machine Learning and Technology, read on because I have a special resource for you.

If you liked this write-up, you would like my daily email newsletter Technology Made Simple. It covers topics in Algorithm Design, Math, AI, Data Science, Recent Events in Tech, Software Engineering, and much more to help you build a better career in Tech. Save your time, energy, and money you would burn by going through all those videos, courses, products, and ‘coaches’ and easily find all your needs met in one place.

I am currently running a 20% discount for a WHOLE YEAR, so make sure to check it out. Using this discount will drop the prices-

800 INR (10 USD) → 533 INR (8 USD) per Month

8000 INR (100 USD) → 6400INR (80 USD) per year

You can learn more about the newsletter here. If you’d like to talk to me about your project/company/organization, scroll below and use my contact links to reach out to me.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here.

To help me understand you fill out this survey (anonymous)

Check out my other articles on Medium. : https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

Really good, you are the first person on Substack that I have seen who actually understand this shit, obviously you are a coder;

I have been doing AI since 1980's, so I have played continously with all this crap over the years;

I too have written lots of articles on this chat-GPT shit that I'm now seeing;

This is a great article - thanks for keepin' it real. Because of my own niche and direction, I'm partial to your note here on how AI won't replace artists, the meme made me laugh. I know there's a lot of fear, and a handful of people who will build their platform of vanity on the vitriol and opportunity that aforementioned fear provides.

We love AI as a tool. We love critical thinkers and AI working in conjunction to do cool stuff, make beautiful things, make practical things, and ultimately, serve the "greater good." It's a lot of surface level thinking and fear-mongering to think AI will "replace" someone--the counterpoint I think about is: what about your leaders who lead you astray in thinking you weren't replaceable? You shouldn't be replaceable, which is the bigger problem, your leaders should be identifying opportunities to use your talent and gifts in new and exciting ways alongside their AI investment.

Thanks for sharing the other side of the LLM coin, there are many flaws, in an article I recently did (Meet: Dash) we had ChatGPT spin up some scholarly material. I then asked it if the material was legitimate, and it kindly informed me that it was totally made up.