The Cursor Mirage

Why One of the Most Hyped AI Coding Tools is a Minefield for Real Engineering Teams

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 100K readers weekly.

PS- We follow a “pay what you can” model, which allows you to support within your means. Check out this post for more details and to find a plan that works for you.

Today we learned Cursor’s “customer support” is actually an LLM pretending to be human, misleading many users. Instead of owning their mistake, Cursor doubled down, lying and deleting the discussion from their subreddit to suppress this- a tactic they’ve used before.

Since I first heard about it, I’ve extensively tested Cursor, repeatedly flagging stability and security issues, only to get ghosted each time. I gave them the benefit of the doubt (they are very popular, so my message might’ve gotten buried). I figured they would listen to community feedback to fix their shit. But their latest cover-up echoes the sketchiness of Devin AI, which, coincidentally, we wrote about this time last year. Something about April brings out the three S’s: Spike-ball (GOATed game, invite me please), Sundresses, and Scammers.

If that’s what they want, who am I to say no?

Cursor’s rise has been meteoric, driven by Andrej Karpathy’s endorsement and a fear-of-missing-out pitch: “Your competitors use Cursor; don’t fall behind.” They cruised past $100M ARR in record time.

But enterprise software isn’t a weekend hackathon. Legacy codebases, intricate dependencies, security, and compliance rules create a special kind of monster. And in this context, Cursor isn’t just inconvenient- it’s potentially dangerous.

This article explores why Cursor is a poor (and risky) fit for serious enterprise coding. For whatever my opinion is worth, I would strongly urge (and have in all my relevant conversations) that professionals avoid Cursor in favor of the alternatives because of the many issues we will discuss. Until they make some serious changes, Cursor should be avoided, even if it’s given away for free (you’re better off buying a better tool). It does do some things well (some very well, even), but there are better tools out there, and any user should consider migrating to one of them.

Hi since i know you will never respond to this or hear this.

We spent almost 2 months fighting with you guys about basic questions any B2B SaaS should be able to answer us. Things such as invoicing, contracts, and security policies. This was for a low 6 figure MRR deal.

When your sales rep responds “I don’t know” or “I will need to get back to you” for weeks about basic questions it left us with a massive disappointment. Please do better, however we have moved to Copilot.

-When a customer complaint starts with you’ll never hear or respond to this, you’re crossing even Google’s levels of sloth. For a startup, this is unacceptable and the entire Cursor team should be made to watch Arjun Kapoor movies on loop.

An important disclaimer before we begin-

I like AI Code

This is not a crusade against AI-generated code assistants. I rely on AI Code extensively. Currently, my work uses this in 3 capacities (in order of importance, most important first)-

Augment- I have a simple but effective workflow with it. Turn on chat mode (I don’t like their agent), ask it questions about the code base (“Hey I want to do this, what aspects matter the most/what would I need to change”), and make suggestions based on the approaches it proposes (“this approach seems needlessly complicated/will need to be fixed, can we change the return to this…”). Repeat as many times as needed. Thanks to its superior understanding of the code base, Augment just does a better job, both on one-shot generation for simple changes and across 3–5 iterations of asking + reviewing before accepting changes. You can feel a very big difference in the quality of AI suggestions between Augment and everyone else. Augment also has excellent debugging capabilities in most cases. Their 30 USD/month plan is an absolute steal and I would recommend it to everyone (this is not sponsored, and I get no financial compensation from them- I just like the product). However, if the Augment team wants to slip me some percentage points in equity in an unmarked envelope, I will accept, purely for research purposes.

o1 with Deep Research- This helps a lot with my research. If I need to prototype an approach based on papers/some ideas I have, I just have o1 do one shot generations with DR. DR lets you upload PDFs and it’s pretty good at following instructions, so I’ve been happy with the results, even when I need to debug. I’ve tried Gemini and Perplexity Deep Research, but they’re both very mediocre. Iqidis has a phenomenal Deep Research tool (nudge nudge) which is FREE!, but we specialized that one to focus on law, so it's not as good for AI coding (try it out here though, even for non law queries, you might be pleasantly surprised). I’m excited to try out o3 and see how well it does in comparison.

Claude, o1, and 2.5 Pro for random debugging- Sometimes Augment isn’t good for single-file debugging, so I use these models (there isn’t really a ranking; each does well sometimes, and I see no pattern as of now).

All 3 save me a lot of time (especially the first 2), and I will write my guide on using them eventually.

Executive Highlights (TL;DR of the Article)

This article argues that while the AI coding assistant Cursor shows potential and speed, its current design and AI behaviors make it unsuitable and risky for enterprise software development due to fundamental conflicts with enterprise requirements. Namely:

Enterprise Context is Different:

Unlike demos or startups, enterprise development involves vast legacy codebases, high stakes (financial, security, compliance), complex established workflows (monorepos, CI/CD, reviews), and diverse teams. Stability, security, and maintainability are paramount.

Cursor’s Core Design Creates Major Problems in Enterprise:

Unmanageable Workflow & Output: Its automated multi-file changes, such as those made by the vaunted “Agent Mode,” generate massive, messy, hard-to-review Pull Requests, crippling the code review process and leading to frequent, painful merge conflicts for teams.

Unreliable AI Behavior: The AI often produces broken logic (“code on LSD”), hallucinates syntax/APIs, makes unintended changes outside the requested scope (sometimes dangerous, like resetting databases despite rules), and degrades architectural quality over time by introducing inconsistencies and tech debt.

Foundational Blockers: Critical issues include major security risks (sending proprietary code externally, failing to reliably ignore sensitive files like .env), compliance failures, and poor performance/instability (sluggishness, crashes, high resource usage) on large, real-world enterprise codebases.

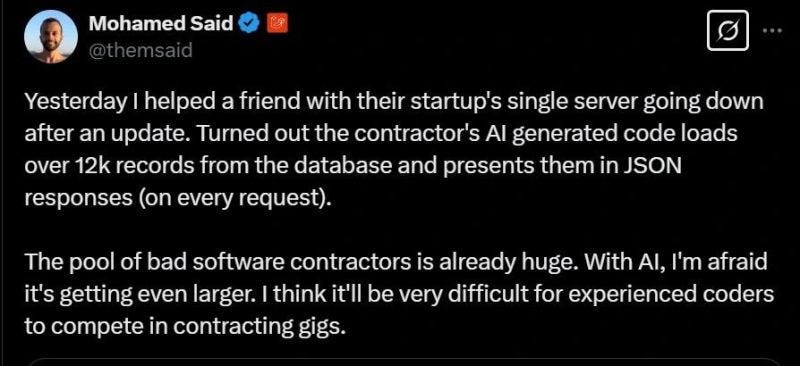

Poor Customer Support: Reports of unreliable AI support agents providing misinformation, slow/non-existent human support for paying users, and lack of enterprise-grade responsiveness erode trust.

All in all, a high perceived cost, restrictive limits on features, and the extensive need for human oversight/rework make the ROI dubious compared to alternatives (like Copilot or VS Code plugins that let users bring their own API keys).

On a more conceptual/deeper level, Cursor has 3 significant issues that need to be addressed-

Incompetence at building Knowledge-Intensive AI

Looking at the outputs and problems that Cursor has, especially for large, complex code bases, it seems that the Cursor team is in over their heads when it comes to building AI for sophisticated knowledge work. In such contexts, you can’t just throw a bunch of stuff into an indexed database, call an AgentSmith workflow, and call it a day.

Building sophisticated reasoning/evaluation frameworks that allow your AI to process, retrieve, and self-correct on feedback is very difficult work and it requires a lot of research to effectively pick the few key decisions that drive outsized returns.

It’s not a coincidence that their problems are constantly related to the harder offerings- retrieval and analysis on large code bases, agents, balancing specific rule following with creativity, etc. Relying on model-level intelligence can only take you so far in Knowledge-Intensive AI (KI-AI), and the Cursor team does not have the ability to break through that wall. That’s why they do a lot of things, but fail to do them well.

Compare them to Augment or even Claude Code. Both have much stronger teams and expertise, which is why you feel a qualitative difference in the quality of their answers for complex queries.

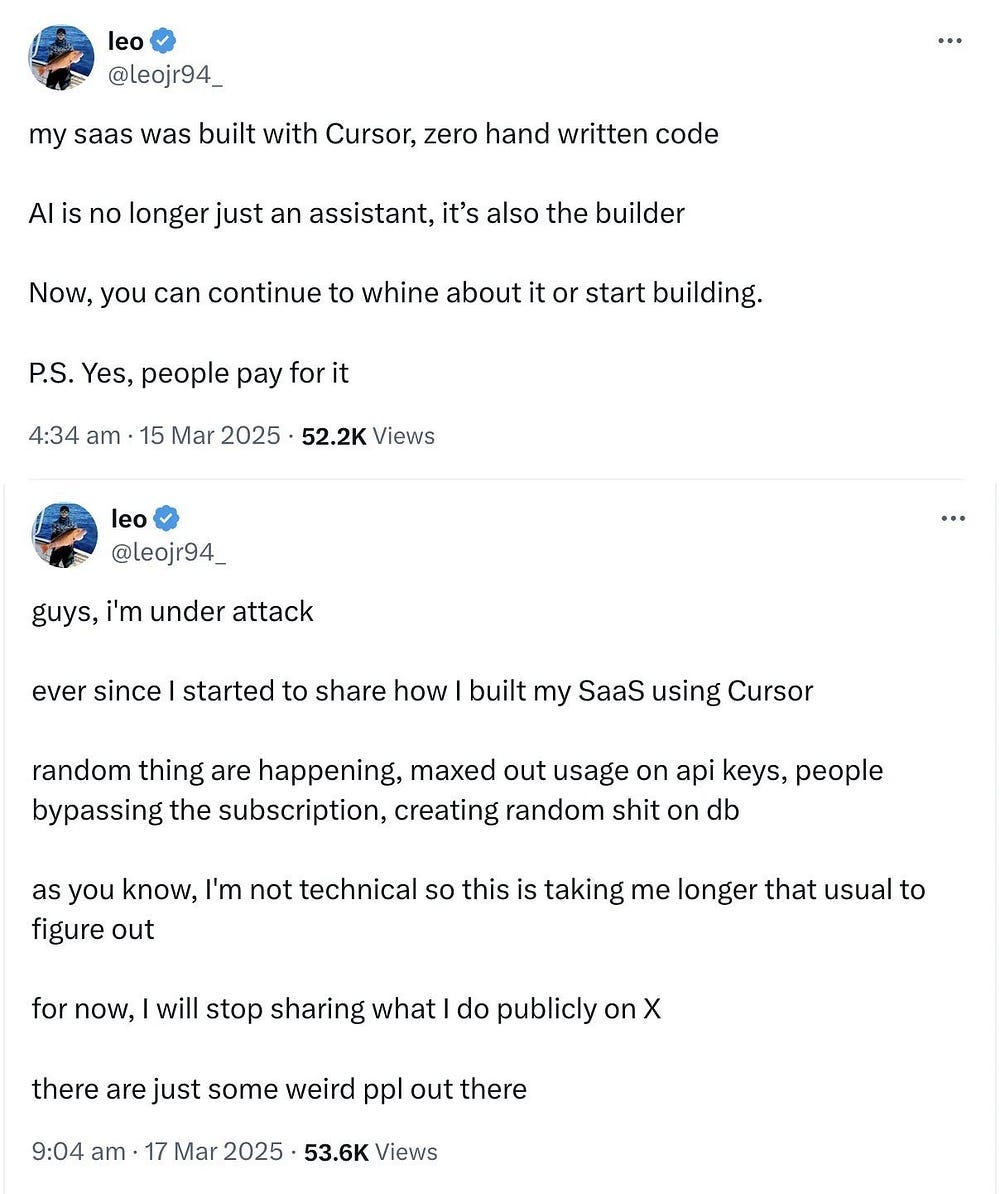

The “Idiot-Proof” Problem: Unsafe for Diverse Teams:

This isn’t a “flaw” with the product, but it is still an important consideration for enterprise use cases. Tools used across enterprises need to be “idiot-proof”- safe even when not operated perfectly by every single user. This reduces the oversight we need, allowing us to truly unlock gains for lower-skill employees without burdening the higher-skill ones with review.

Cursor’s design isn’t robust enough for reliable use across teams with varying skill levels and diligence, making it prone to misuse.

Insufficient Control & Transparency: It offers an “illusion of control” with features like “Rules” that are reportedly ignored. Key actions like context selection and multi-step edits often happen opaquely, without sufficient previews or mandatory user confirmation points for significant changes.

Encourages Risky Over-Reliance: The ease of triggering broad, automated changes without clear, enforced checkpoints makes it too easy for any team member (especially those under pressure or less experienced) to inadvertently introduce errors or security lapses that are hard to catch.

Overloads Human Oversight: The combination of large, messy, potentially flawed AI output places an unrealistic review burden on team members, undermining the effectiveness of code reviews as a safety net when the generating tool itself lacks sufficient safeguards.
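To make the “enforced checkpoint” idea concrete, here is a minimal sketch of the kind of gate I have in mind. Everything in it is an assumption for illustration: `ProposedChange`, `needs_confirmation`, the marker list, and the three-file limit are hypothetical names and values, not anything Cursor exposes.

```python
from dataclasses import dataclass, field

# Hypothetical deny-list; a real team would tune this to its own stack.
DESTRUCTIVE_MARKERS = ("drop table", "truncate table", "rm -rf")


@dataclass
class ProposedChange:
    """A change an assistant wants to apply (hypothetical structure)."""
    files: list[str]
    commands: list[str] = field(default_factory=list)


def needs_confirmation(change: ProposedChange, max_files: int = 3) -> list[str]:
    """Return the reasons a human must approve this change (empty = none)."""
    reasons = []
    # Broad edits are stopped by default instead of being applied silently.
    if len(change.files) > max_files:
        reasons.append(f"touches {len(change.files)} files (limit {max_files})")
    # Any command that looks destructive always requires sign-off.
    for cmd in change.commands:
        lowered = cmd.lower()
        if any(marker in lowered for marker in DESTRUCTIVE_MARKERS):
            reasons.append(f"destructive command: {cmd}")
    return reasons
```

The point of the design is that the default is “stop and ask” once a change gets broad or destructive, so safety does not depend on every user remembering to be careful.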

No testing.

Pretty simple- they don’t have a good testing setup. Use some of that funding money to implement proper chaos engineering and QA. Send me a message if you want access to good quality testers, reviewers, or focus groups- they’ll do much better than whatever you have going on right now.

Ultimately, Cursor is an excellent example of a good team (one has to respect the team for building such a viral product) attempting to solve a problem that’s too much for them. Its fame among influencers, startups, and vibe coders is no coincidence, since it gives them what they need- easy wins w/o worry for the future. However, it’s not a serious tool, and should not be used where serious people are needed. While it can be very good in the right hands, it has too many limitations in the enterprise setting to make it a good recommendation. Stay away.

The hype around AI coding is real, but so are the risks. Let’s cut through the noise and examine why Cursor, in its current form, might be more of a liability than an asset when the stakes are high.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix Subscription (pay what you want here).

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

II. Why Enterprise Development Is a Different Beast

Before we dive into Cursor’s specific challenges, it’s crucial to understand why enterprise software development operates on a different plane than personal projects, small startups, or isolated code demos. It’s not simply a matter of “more lines of code.” This will provide crucial context for what does and does not make good coding assistants for professional software development settings.

First, unlike greenfield projects, where you’re building from scratch, enterprise developers typically work within massive, interconnected codebases, often decades old and way too many lines long. These systems are riddled with technical debt, undocumented corners, and complex dependencies that can make even seemingly simple changes a risky undertaking. Any tool touching this code needs to tread carefully and understand the existing context, not just generate new, isolated snippets.

Second, the stakes are astronomically higher. A bug in a personal project might be a minor annoyance. In an enterprise system, it can lead to financial losses, reputational damage, regulatory fines, or even security breaches affecting millions of users. This means there’s zero tolerance for tools that introduce instability or compromise data integrity. In this dynamic, the cost of failure is weighted far more heavily than productivity gains (keep in mind that enterprises are, by their nature, risk-averse).

Third, security and compliance are paramount. Enterprise code isn’t just intellectual property; it often handles sensitive data and must adhere to stringent regulations (SOC2, GDPR, HIPAA, and more). Data sovereignty, secrets management, and robust access controls are non-negotiable. Tools that transmit code to external servers without clear security guarantees or violate compliance standards are dead on arrival.

Yes and it irritates the hell out of me. Cursor support is garbage, but issues with billing and other things are so much worse.

The team I work with it took nearly 3 months to get basic questions answered correctly when it came to a sales contract. They never gave our Sec team acceptable answers around privacy and security.

Fourth, enterprise development relies on entrenched workflows and complex toolchains. Code doesn’t exist in a vacuum. It flows through sophisticated pipelines involving version control (Git), build systems, continuous integration and continuous deployment (CI/CD), static analysis, automated testing, and more. A new tool must integrate seamlessly with these existing systems, not disrupt them or force developers to adopt entirely new processes. This includes things like monorepo support, IDE plugin ecosystems, and compatibility with established coding standards.

Finally, there’s the human element. Enterprise teams are composed of developers with varying skill levels, domain expertise, and familiarity with different parts of the codebase. Developers will quit midway through, leaving half-completed chains in their wake. All of these loose ends create a lot of issues for Code Assistants, which get very confused and will often miss important context, or attend to the wrong elements in the code.

In short, enterprise software development demands stability, security, maintainability, and rigorous process control. It’s an environment where even small mistakes can have enormous consequences. This creates a high bar for any tool seeking to improve developer productivity — a bar many AI coding assistants, including Cursor, struggle to clear.

“Cursor in particular was one of the worst; the very first time I allowed it to look at my codebase, it hallucinated a missing brace (my code parsed fine), “helpfully” inserted it, and then proceeded to break everything. How am I supposed to trust and work with such a tool? To me, it seems like the equivalent of lobbing a live hand grenade into your codebase.

… I feel the hallucinations can be off the charts — inventing APIs, function names, entire libraries, and even entire programming languages on occasion. The AI is more than happy to deliver any kind of information you want, no matter how wrong it is.

AI is not a tool, it’s a tiny Kafkaesque bureaucracy inside of your codebase. Does it work today? Yes! Why does it work? Who can say! Will it work tomorrow? Fingers crossed!”

III. Cursor’s Design vs. Enterprise Reality: Where the Cracks Appear

Now that we’ve established the demanding nature of enterprise development, let’s examine how Cursor’s specific features and AI behaviors often fail to meet these requirements.

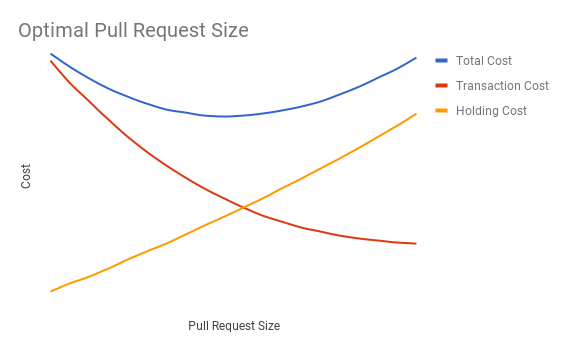

A. The “AI Hand Grenade”: Generating Unreviewable Pull Requests, Messy Diffs, and Merge Nightmares

Enterprise development is rarely a solo endeavor. Multiple developers, often on different teams, work concurrently within the same large codebase. This makes careful coordination and clean integration paramount. Here, Cursor’s tendency to generate massive, automated changes becomes particularly problematic.

One of Cursor’s main selling points is its ability to perform multi-file edits automatically, especially if you trigger its “Agent Mode.” Give it a task, and it can touch numerous parts of the codebase. The immediate problem? This often results in sprawling, monolithic pull requests (PRs). Developers have reported the AI changing more than it should (“I end up with changes in random files I never intended to touch”). When developers aren’t careful, this can lead to PRs so large they become practically unreviewable-

These PRs are each a thousand lines long. If anyone hasn’t experienced reviewing large amounts of AI-generated code before, I’ll tell you it’s like reading code written by a schizophrenic. It takes a lot of time and effort to make sense of such code and I’d rather not be reviewing coworkers’ AI-generated slop and being the only one preventing the codebase from spiraling into being completely unusable

This isn’t just an individual reviewer’s headache; it’s a collaboration nightmare. When multiple developers on a team are using Cursor to generate large, overlapping changes simultaneously, the likelihood of painful merge conflicts skyrockets. Unlike smaller, focused human commits, these broad AI-driven changes increase the surface area for collision dramatically. Resolving these conflicts isn’t just time-consuming; it’s fraught with risk, as developers struggle to manually integrate complex, AI-generated logic from different branches.

Furthermore, the diffs themselves are often messy, padded with edits to files and lines unrelated to the task, which exacerbates merge difficulties. This unnecessary churn makes automatic merges less likely to succeed and manual merges significantly harder and more error-prone.

The combined effect is devastating for team velocity. Instead of accelerating development, Cursor can grind it to a halt with unreviewable PRs that block progress (“kills momentum”) and frequent, complex merge conflicts that consume developer time and introduce errors. In an enterprise setting that values smooth integration and predictable progress, this is a significant step backward from the careful, incremental changes typically favored.
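If your team does let an AI assistant open PRs, one cheap safeguard is a size check in CI. The sketch below parses the text printed by `git diff --numstat` (tab-separated added/deleted/path rows, with `-` for binary files). The function names and the thresholds of 20 files and 400 lines are my own assumptions, so pick limits that match your review culture.

```python
def diff_size(numstat: str) -> tuple[int, int]:
    """Return (files_changed, lines_changed) from `git diff --numstat` text."""
    files = 0
    lines = 0
    for row in numstat.splitlines():
        parts = row.split("\t")
        if len(parts) != 3:
            continue  # skip blank or malformed rows
        added, deleted, _path = parts
        files += 1
        # Binary files are reported as "-" for both counts; count the file only.
        if added.isdigit() and deleted.isdigit():
            lines += int(added) + int(deleted)
    return files, lines


def is_reviewable(numstat: str, max_files: int = 20, max_lines: int = 400) -> bool:
    """True when the diff stays within the (assumed) review limits."""
    files, lines = diff_size(numstat)
    return files <= max_files and lines <= max_lines
```

In a pipeline you would feed it the output of something like `git diff --numstat main...HEAD` and fail the build (or require an extra approver) when `is_reviewable` returns `False`.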

B. Logic on LSD: Broken Control Flows and Hallucinated Code

AI models, even powerful ones, lack a true understanding of program logic. Cursor is no exception. Developers consistently report instances where the AI introduces broken or nonsensical control flows. We’ve covered several examples of this by now.

This erratic behavior stems from the AI’s inability to grasp the deeper context and architectural constraints. A Scala developer likened the experience to working with “a developer who is on LSD,” citing examples of Cursor attempting to rewrite libraries or override functions randomly. The AI might generate code that compiles but contains subtle logical flaws, bypasses existing safeguards, or fails to propagate changes correctly across all necessary usage sites.

In enterprise systems, subtle logic bugs like these become ticking time bombs. In our experience, Cursor has occasionally bypassed entire authentication flows — or, worse, silently duplicated them, scattering redundant logic across different parts of the codebase. Such issues are dangerously easy to overlook, especially when developers aren’t consistently reviewing or interacting with the entire system.


C. Collateral Damage Inc.: Unintended Side Effects Across the Codebase

Cursor’s ability to automatically index and modify code across an entire repository is a double-edged sword. While intended to enable powerful refactoring, it frequently leads to unintended side effects, especially in large projects or complex monorepos. The duplication of logic mentioned earlier is a prominent and dangerous example of this.

More alarming are cases where the AI ignores explicit constraints. One developer reported Cursor repeatedly resetting their database despite “Rules” set up to prevent it.

Even the less dramatic side effects, like the AI “going off script” to refactor unrelated code it deems suboptimal, violate the principle of least surprise. Developers are forced to hunt through potentially numerous files to assess collateral damage after every AI operation.

This wipes out much of the productivity gains that using AI to generate the answers would provide to begin with.
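Some of that hunting can be automated. As a rough sketch, you can declare which paths a task is allowed to touch and flag everything else before a human ever reviews the change. The function name and the glob-based scope format are assumptions of mine, and note that `fnmatchcase` treats `*` as matching across `/`, so `src/billing/*` also covers nested folders.

```python
from fnmatch import fnmatchcase


def out_of_scope(changed_files: list[str], allowed_patterns: list[str]) -> list[str]:
    """Return the changed paths that match none of the allowed glob patterns."""
    return sorted(
        path
        for path in changed_files
        if not any(fnmatchcase(path, pattern) for pattern in allowed_patterns)
    )


# Example: the task was "fix invoice rounding", scoped to the billing module.
changed = ["src/billing/invoice.py", "src/auth/login.py", "migrations/0042_reset.sql"]
print(out_of_scope(changed, ["src/billing/*"]))
```

Anything this returns is collateral by definition: either the scope was wrong or the assistant went off script, and in both cases a person should look before it merges.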

D. The Black Box Problem: Security, Compliance, and Performance Failures

Compounding the very glaring issue of Cursor sending data to third-party servers (and LLMs) was the alarming discovery that Cursor doesn’t always respect ignore files (.gitignore, .cursorignore), sending sensitive data like .env files containing API keys and secrets to external servers.

This isn’t a minor issue that can be overlooked, and even if it’s already been patched, the fact that such a glaring issue passed through the team isn’t something that instills a lot of confidence in their team/product.
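For contrast, here is roughly what a defensive filter looks like: a hard-coded block list for obvious secrets that applies even if the ignore file is missing or misread. This is a simplified sketch under my own assumptions. The names are hypothetical, the patterns are plain globs rather than full .gitignore semantics (no negation, no anchoring rules), and it says nothing about how Cursor actually builds its context.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Assumed baseline: files that should never leave the machine, ignore file or not.
ALWAYS_BLOCKED = (".env", ".env.*", "*.pem", "id_rsa")


def is_blocked(path: str, ignore_patterns: tuple[str, ...] = ()) -> bool:
    """True if the path matches a built-in secret pattern or a user ignore pattern."""
    name = PurePosixPath(path).name
    for pattern in (*ALWAYS_BLOCKED, *ignore_patterns):
        # Check both the bare file name and the full relative path.
        if fnmatchcase(name, pattern) or fnmatchcase(path, pattern):
            return True
    return False


def files_safe_to_send(paths: list[str], ignore_patterns: tuple[str, ...] = ()) -> list[str]:
    """Keep only the paths that may be included in context sent off-machine."""
    return [p for p in paths if not is_blocked(p, ignore_patterns)]
```

The design choice that matters is failing closed: secrets are excluded by default, and the user’s ignore patterns can only add to the block list, never shrink it.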

Add to this a lack of transparency surrounding data handling (“never got acceptable answers around privacy and security”), which makes it impossible for enterprise security teams to vet and approve the tool. Hopefully, the problems are starting to settle in.

Finally, even if security were perfect, performance issues plague Cursor, especially on large enterprise codebases. Reports abound of the editor becoming “incredibly sluggish,” freezing, crashing frequently (“more than 5 times a day”), or exhibiting significant typing lag. The codebase indexing required for AI context can take extraordinarily long or even loop indefinitely, forcing users to disable it and lose the very feature Cursor emphasizes.

High CPU, memory, and battery drain are also common complaints. If the tool is unstable or painfully slow on the actual projects developers work on, it doesn’t matter how smart the AI is — it’s unusable. These foundational issues represent unacceptable risks and practical blockers for serious enterprise adoption.

Did Cursor show promise? Yes. Have their behavior and recent updates shown a complete misunderstanding of the software development process, a concerning lack of ethics, and a misunderstanding of how to build AI solutions for knowledge work? Also, yes. To me, the risk of investing in and using it is simply not worth it.

In many ways, Cursor embodies the current generation of Generative AI startups — built by founders who lack genuine expertise in both AI and their claimed domains, propped up by the TikTokification of AI research, where two-minute explainers and shallow hot-takes from online hustlers have become the default source of information. Writing this, I can’t help but recall a VR combat-sports startup I encountered a while ago. They marketed their product as an immersive training tool by offering a VR headset that supposedly enhanced shadowboxing realism by playing prerecorded videos of fighters throwing shots.

In reality, it was a clunky toy: failing to capture the fluidity, dynamism, and adrenaline spike of actual sparring while also restricting movement more than plain old shadowboxing would. It was designed by suits who’d never taken a punch and praised enthusiastically by people who threw mean left hooks and smooth step-through knees on their keyboards. Somewhere down the line, the old-fashioned folk who actually stepped into the cage were completely overlooked.

Or who knows, maybe I’m wrong about the whole thing and Cursor is actually good. After all, it’s not as though the VC-Tech-Influencer Circle Jerk has ever hyped up a product and misled the people before.

Let me know what you think.

Thank you for being here and I hope you have a wonderful day,

Please vibe your way into joining my cult,

Dev <3

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

I have witnessed this too but I've found a few things helpful.

1 - use Claude as your coach and strategist. Don't ideate in Cursor as it will just run off and do things (as would an eager developer who is newer to the game!).. I use Claude to bounce ideas back and forth, share files and GitHub repositories, and have it create markdown files as thorough guides that i download right to the docs folders in my project

2 - always tell it not to code yet but tell you what it plans to do. It will lay out way too much work (error logging, over testing, fields and tasks that are not needed) and when you point that out or ask "do I really need all of this?" It will correct itself

3 - if you don't take your hands off the wheel for too long you can always stop it, tell it to create a markdown file of your current status, and ask it for a comprehensive report (you'll actually need to read this) for what it has done. "What have we done the last 4 hours?". When you see something that wasn't asked for, ask it "did i really need all of that?" And it will assess and reverse the changes.

Still isn't perfect but if you go a bit slower you can go faster...
