Using AI to drive the next generation of Revenue Operations [Breakdowns]

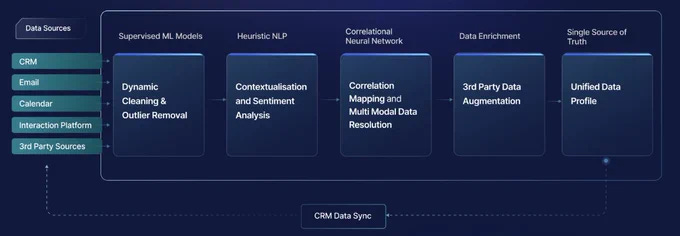

How Clientell uses a combination of AI technologies to add value at different stages of RevOps

Hey, it’s Devansh 👋👋

In my series Breakdowns, I go through complicated literature on Machine Learning to extract the most valuable insights. Expect concise, jargon-free, but still useful analysis aimed at helping you understand the intricacies of Cutting Edge AI Research and the applications of Deep Learning at the highest level.

If you’d like to support my writing, consider becoming a premium subscriber to my sister publication Tech Made Simple to support my crippling chocolate milk addiction. Use the button below for a discount.

p.s. you can learn more about the paid plan here. If your company is looking for software/tech consulting- my company is open to helping more clients. We help with everything- from staffing to consulting, all the way to end-to-end website/application development. Message me using LinkedIn, by replying to this email, or on the social media links at the end of the article to discuss your needs and see if you’d be a good match.

How can we use AI to improve our sales and marketing? What tools should we use? That’s something a lot of you have asked me. So strap in, I got something very special for you.

To those of you who haven’t been paying attention to business publications, the term Revenue Operations (RevOps) might be new. Put simply, RevOps aims to integrate insights from sales, marketing, and customer service in order to attain several key improvements- better sales performance, more targeted marketing, and improved customer experiences. This has made Revenue Operations The Fastest Growing Job In America (Forbes) and Head of Revenue Operations the most in-demand job in 2023 (LinkedIn).

Coinciding with a rise in AI, RevOps as a field has started to gain a lot of momentum in the last few years, with more and more organizations rushing to implement it. There are several features of RevOps data that make it particularly compatible with AI-

End-to-End Perspective- Data from marketing, sales, and customer service have always been valuable in their own right- but when we combine them we get a complete picture of a customer journey: from first contact to sales and customer experience. This allows us to take a complete view of the data, as opposed to the fragmented chunks we would have to deal with otherwise, allowing for deeper insights. Think of how multimodal models are exponentially more powerful because they have another dimension of information they can operate on.

Easy Comparisons- Many AI solutions can be hard to judge because their use cases are very abstract (what does it really mean to have high-quality text generation, and what makes one answer better than another?). This is why so much of the field focuses on benchmarks, common datasets, and other proxies (this is also a huge business opportunity FYI). For RevOps, we can evaluate business impact relatively easily, making evaluations and comparisons much easier. You don’t need to Shakuni your evaluation pipelines to see whether something works or doesn’t- the money will tell you.

Lots of commercially valuable data- Related to the last point, the data present is also plentiful and commercially valuable. This helps in 2 key ways: 1) You don’t need to kill yourself explaining the value to non-technical stakeholders; 2) You have plenty of data to run experiments on, enabling better analysis.

Prior to my current assignment at SVAM, I spent 3 years as a founding engineer and the head of AI at Clientell- a no-code B2B SaaS platform that helps organizations streamline their RevOps (I’m still involved with them but on a more part-time basis). Clientell brings AI to organizations that don’t have the technical expertise/budgets to develop in-house Data Analysis solutions. In this article, I will be going over the major pieces of our AI Solutions and why we did things the way we did. If you want more details on any of the components, you can reach out to me directly to talk.

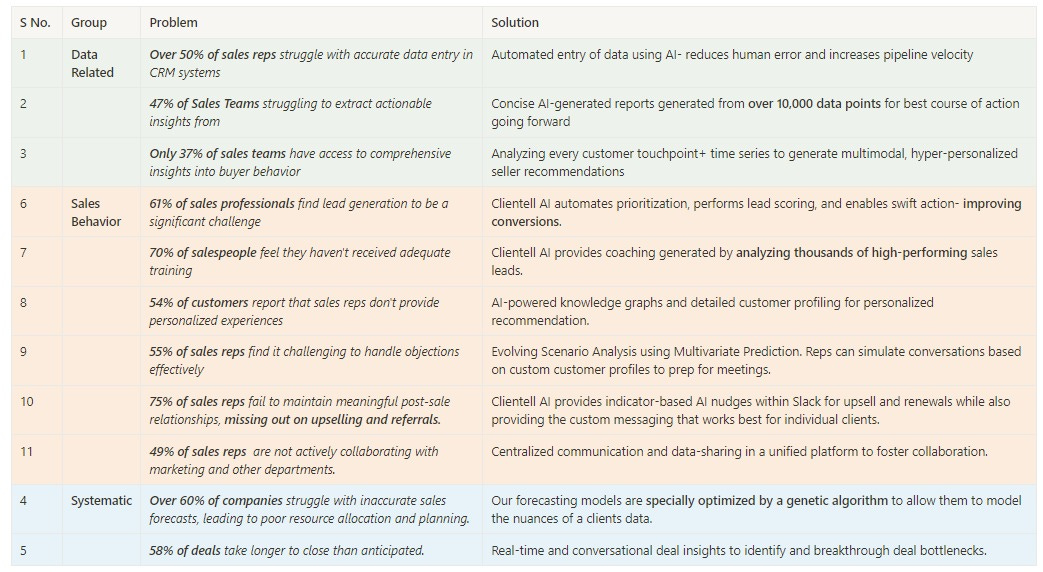

The Problem

Based on the experiences of my cofounders (and the current CEO and CTO), we noticed 3 overarching flaws in the current RevOps ecosystem-

Data-Related Issues- Sales Reps are not properly trained to handle data. This leads to errors while inputting data and struggles with extracting valuable insights from that data. Furthermore, there is a distinct lack of data on buyer behavior.

Inefficient Sales Rep Behavior- We noticed large inconsistencies with rep training and behaviors, leading to wildly inconsistent results and worse conversion rates. This could be improved.

Systemic Issues- There were also systemic issues, such as the use of outdated forecasting methods and long sales cycles that could be tackled strategically.

To those interested in a more detailed look into the problems we tackled, refer to the following table.

With that context out of the way, let’s now look at the major components of the system. Starting with my original specialty- statistical analysis.

Statistical Analysis

In the current Clientell ecosystem, Statistical Analysis happens mostly in the background (since our users are mostly non-technical). The most prominent use of Statistical Analysis is in our quantification of deal engagement and risk.

Most high-performing sales teams follow a system where the sales process is broken into various stages. This allows them to systematically guide a lead to closure and resale. Our AI analyzes the interactions between the prospect and sales rep to quantify the prospect’s interest in the interaction, based on the current stage of the cycle. By doing so, a sales rep can meet the leads where they are- as opposed to mechanically going through the motions.

We accomplish this quantification using the following pieces-

ML Models- We use an ensemble of various ML models that extract various features from the interactions to quantify lead engagement. I will cover some of the ways these features are extracted later. To ensure the best results, we leverage Bayesian Hyperparameter Optimization to sweep through thousands of configurations very quickly. We tested various policies but found this to work the best. The optimization and our diverse ensembles allow us to adapt to the nuances of each client-sales rep pair.

Customer Profiling- Clientell has access to thousands of customer-sales rep interactions. We use these to create various profiles. We cluster and group leads into these profiles and use graph neural networks to generate personalized recommendations. The generated profiles are also used as a data point for our ML Models in quantifying deal risk. A similar setup is used to generate profiles of ‘great salespeople’. These profiles are then used to train salespeople and generate recommendations.

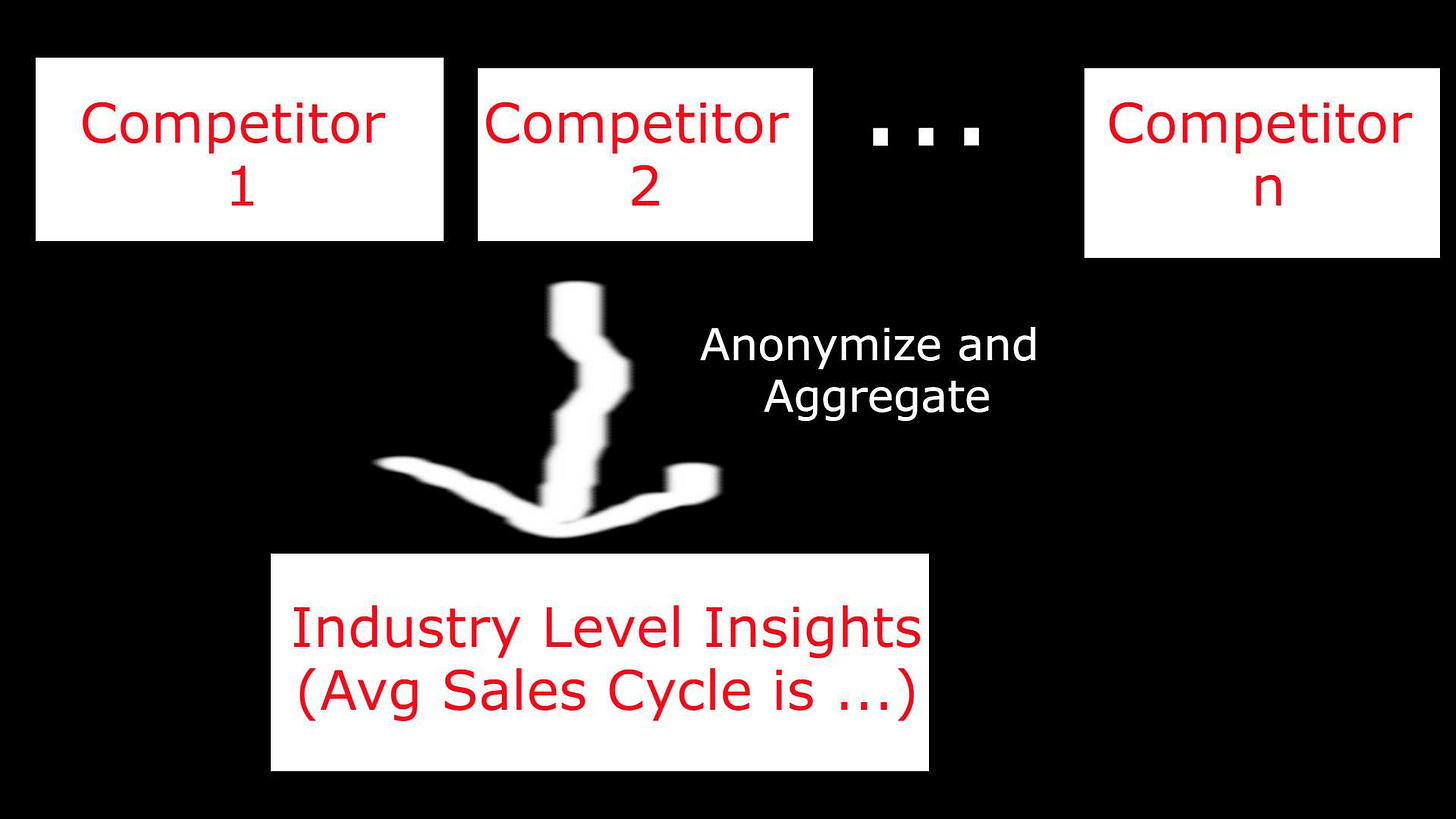

Industry and Client Analysis- Different industries have different SOPs and processes. We refine all of our AI by using analysis from both the client and their competitors. Make sure you prevent data leakage here (we use separate models and pipelines to ensure this).

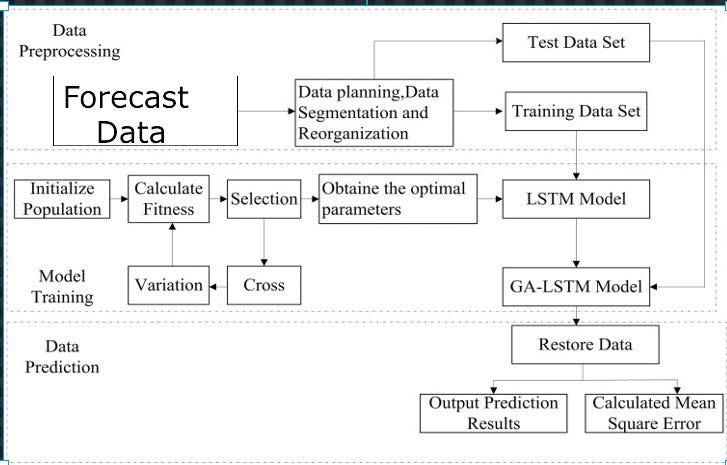
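To make the ensemble idea from the ML Models item a bit more concrete, here is a minimal sketch of blending several per-model scores into one engagement score. Everything in it is an assumption made for illustration: the three scorers, the feature names (`avg_reply_hours`, `meetings_in_stage`, `sentiment`), and the weights are hypothetical stand-ins, not the models Clientell actually runs. In a real setup the weights and model settings are the kind of thing a hyperparameter search would tune.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]
Scorer = Callable[[Features], float]


def reply_speed_score(features: Features) -> float:
    # Hypothetical scorer: faster replies score higher; 48 hours or slower maps to 0.
    hours = features.get("avg_reply_hours", 48.0)
    return max(0.0, 1.0 - hours / 48.0)


def meeting_score(features: Features) -> float:
    # Hypothetical scorer: saturates once there are 4 meetings in the current stage.
    return min(1.0, features.get("meetings_in_stage", 0.0) / 4.0)


def sentiment_score(features: Features) -> float:
    # Hypothetical scorer: rescales a sentiment value from [-1, 1] to [0, 1].
    sentiment = min(1.0, max(-1.0, features.get("sentiment", 0.0)))
    return (sentiment + 1.0) / 2.0


def ensemble_score(features: Features, members: List[Tuple[Scorer, float]]) -> float:
    """Weighted average of the member scores, so the result stays in [0, 1]."""
    total_weight = sum(weight for _, weight in members)
    if total_weight <= 0:
        raise ValueError("ensemble weights must sum to a positive number")
    return sum(weight * scorer(features) for scorer, weight in members) / total_weight


# Illustrative weights only; these would normally be tuned, not hand-picked.
MEMBERS = [(reply_speed_score, 1.0), (meeting_score, 1.0), (sentiment_score, 2.0)]

example_lead = {"avg_reply_hours": 12.0, "meetings_in_stage": 2.0, "sentiment": 0.5}
engagement = ensemble_score(example_lead, MEMBERS)
```

Keeping each scorer as a plain function makes it easy to swap members in and out per client without touching the blending logic.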

Another area where users interact with statistical analysis is in revenue forecasting. While this might seem dull to a lot of you, Revenue Predictability is on every Board’s agenda for 2024 (this is one of the reasons that subscription businesses tend to be valued highly). Thus, revenue forecasting is one of our cornerstones. We improve upon the standard forecasting procedures (looking at data from past quarters and projecting forward) in two main ways-

Using our advanced deal analysis AI, we can tell you how likely you are to convert new deals. This gives us another data point to consider.

We use Genetic Algorithm Optimized LSTMs for forecasting from past data. This setup strikes a perfect balance between performance and cost. The basic plan for the implementation is in the diagram below.

These improvements combine to give our clients more accurate revenue forecasts (one of our clients saw their sales team forecasts jump to 95% accuracy, among other benefits).
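For readers who have not seen a genetic algorithm tune a forecaster, here is a small sketch of the loop. To keep it dependency-free, I have swapped the LSTM for simple exponential smoothing, so this is not our production setup: the GA only searches for one number (the smoothing factor `alpha`) instead of LSTM hyperparameters. The population/selection/crossover/mutation structure is the same idea. The revenue numbers and all function names are made up for illustration.

```python
import random
from typing import List


def smoothing_mse(series: List[float], alpha: float) -> float:
    """Mean squared one-step-ahead error of simple exponential smoothing."""
    level = series[0]
    squared_error = 0.0
    for actual in series[1:]:
        squared_error += (actual - level) ** 2  # the forecast is the current level
        level = alpha * actual + (1 - alpha) * level
    return squared_error / (len(series) - 1)


def evolve_alpha(
    series: List[float],
    pop_size: int = 20,
    generations: int = 30,
    mutation_sd: float = 0.1,
    seed: int = 0,
) -> float:
    """Genetic search over alpha in [0, 1]; lower forecasting error means fitter."""
    rng = random.Random(seed)
    population = [rng.random() for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=lambda a: smoothing_mse(series, a))
        parents = ranked[: pop_size // 2]  # selection: keep the fitter half
        children = [ranked[0]]             # elitism: the best candidate always survives
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            child = (mother + father) / 2              # crossover: blend two parents
            child += rng.gauss(0.0, mutation_sd)       # mutation: small random nudge
            children.append(min(1.0, max(0.0, child)))  # keep alpha inside [0, 1]
        population = children
    return min(population, key=lambda a: smoothing_mse(series, a))


# Hypothetical quarterly revenue figures, purely for demonstration.
quarterly_revenue = [100.0, 104.0, 109.0, 115.0, 118.0, 125.0, 131.0, 138.0]
best_alpha = evolve_alpha(quarterly_revenue)
```

With an LSTM, each candidate would be a set of hyperparameters (layers, hidden size, lookback window) and evaluating fitness would mean training a model, which is where most of the cost goes.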

Let’s move on to our recommendation systems, clustering, and customer profiling.

Profiling + Recommendations

When it comes to customer profiling, we use a relatively simple setup. We utilize unsupervised clustering to group various customers. Every new prospect is then placed in this space, and recommendations are generated by analyzing the nodes (previous customers) that are closest to them. Very basic, yes. But works well for us.
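The "look at the closest previous customers" step can be sketched in a few lines. This is a simplified illustration that skips the clustering stage and goes straight to a nearest-neighbor vote. The two-dimensional feature vectors and the action names are hypothetical; real profiles would have many more dimensions.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Customer = Tuple[Sequence[float], str]

# Hypothetical history: (feature vector, action that worked for that customer).
PAST_CUSTOMERS: List[Customer] = [
    ((0.9, 0.2), "send_case_study"),
    ((0.8, 0.3), "send_case_study"),
    ((0.1, 0.9), "offer_demo"),
    ((0.2, 0.8), "offer_demo"),
    ((0.5, 0.5), "schedule_call"),
]


def recommend(prospect: Sequence[float], history: List[Customer], k: int = 3) -> str:
    """Return the most common action among the k nearest past customers."""
    nearest = sorted(history, key=lambda item: math.dist(prospect, item[0]))[:k]
    votes = Counter(action for _, action in nearest)
    return votes.most_common(1)[0][0]


suggestion = recommend((0.85, 0.25), PAST_CUSTOMERS)
```

Euclidean distance is just one choice here; it assumes the features are on comparable scales, so normalizing them first matters.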

We use Probabilistic Graphical Models to model interactions between the prospect and SDR and generate recommendations. Since we always ‘know’ the end result (we want to push to the next stage), we can work backward from there. We use both feature importance and Causal Inference (a lot of Bayesian Graphs) to score the possible actions and generate recommendations (we also tried using Large Language Models, but the costs were too high since we generate and score actions a lot).
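As a much simpler stand-in for that scoring step, here is a sketch that ranks actions by a Bayesian estimate of how often they were followed by a move to the next stage. It uses a Beta prior over each action's success rate rather than a full graphical model or causal analysis, so treat it as an illustration of "score actions against a known goal", not our method. The action names and counts are invented.

```python
from typing import Dict, List, Tuple


def posterior_advance_rate(
    successes: int, trials: int, prior_a: float = 1.0, prior_b: float = 1.0
) -> float:
    """Posterior mean of P(deal advances | action) under a Beta(prior_a, prior_b) prior."""
    return (successes + prior_a) / (trials + prior_a + prior_b)


def rank_actions(history: Dict[str, Tuple[int, int]]) -> List[Tuple[str, float]]:
    """Sort actions from the highest to the lowest estimated advance rate."""
    scored = [
        (action, posterior_advance_rate(successes, trials))
        for action, (successes, trials) in history.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical counts: action -> (times the deal advanced, times the action was tried).
ACTION_HISTORY = {
    "send_pricing": (8, 20),
    "book_demo": (3, 4),
    "follow_up_email": (30, 100),
}

ranking = rank_actions(ACTION_HISTORY)
```

The prior pulls actions with very few trials toward 50%, which stops a lucky 1-for-1 action from topping the list. Note that these are correlations; separating an action's real effect from the kind of deals it tends to be used on is what the causal piece is for.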

With that out of the way, time to get into the glue that binds everything together- the NLP. Given the nature of the work, NLP is used everywhere- analyzing conversations, feature extraction, Q&A, and much more.

NLP to Improve Sales

Our NLP stack combines traditional software engineering like regular expressions with Deep Learning Models and LLMs (Open Source for the W). Based on the domain knowledge extracted from analyzing sales interactions/speaking to SDRs- we have a variety of RegExs and Rules that extract the features used in deal quantification and customer profiling. RegExs and rules are also pretty useful when it comes to automating data entry tasks, since with some good design, it’s possible to constrain the formats of the inputs and outputs (making developing rules feasible).
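Here is a small sketch of what rule-based feature extraction can look like. The three patterns (budget, competitor mention, timeline) and the feature names are hypothetical examples I wrote for this post, not the actual rules in our platform, and real rules would need far more coverage.

```python
import re
from typing import Dict, Optional, Union

FeatureValue = Union[Optional[float], Optional[str], bool]

# Dollar amounts such as "$50k", "$1,200" or "$2.5M".
BUDGET_RE = re.compile(r"\$\s?(\d[\d,]*(?:\.\d+)?)([kKmM])?\b")
# Phrases hinting that the prospect is comparing options.
COMPETITOR_RE = re.compile(r"\b(?:competitors?|alternatives?|other vendors?)\b", re.IGNORECASE)
# Simple deadlines such as "by Q3" or "before next quarter".
TIMELINE_RE = re.compile(
    r"\b(?:by|before|in)\s+(Q[1-4]|next (?:week|month|quarter))\b", re.IGNORECASE
)


def extract_features(text: str) -> Dict[str, FeatureValue]:
    """Pull a few structured fields out of a free-text email or call note."""
    features: Dict[str, FeatureValue] = {
        "budget_usd": None,
        "mentions_competitor": bool(COMPETITOR_RE.search(text)),
        "timeline": None,
    }
    budget = BUDGET_RE.search(text)
    if budget:
        amount = float(budget.group(1).replace(",", ""))
        scale = {"k": 1e3, "m": 1e6}.get((budget.group(2) or "").lower(), 1.0)
        features["budget_usd"] = amount * scale
    timeline = TIMELINE_RE.search(text)
    if timeline:
        features["timeline"] = timeline.group(1)
    return features


note = "We have about $50k set aside and need this live by Q3. We are also looking at other vendors."
note_features = extract_features(note)
```

Rules like these are cheap to run on every interaction, and when they misfire you can see exactly which pattern was responsible.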

Next up is sentiment analysis and meeting summarization. For these tasks we leverage models in the BERT family for their superior performance in NLU-based tasks (and since they don’t hallucinate). Since these models are smaller than LLMs, this also keeps costs low.

Lastly, we have the flashiest part of our platform: our Decoder-only transformers and the Clientell chatbot. We leverage a custom LLM in our chatbot to interact with our users. LLMs are quite costly, but they streamline the user experience. So we bear those costs. We use various routines and functions to reduce load where we can. For example, in the following images, our LLM calls another function that queries and generates reports (instead of doing everything itself, as many people try to use LLMs for). Our LLM functions as the controller, which takes the user query and then calls the relevant script for a particular functionality.

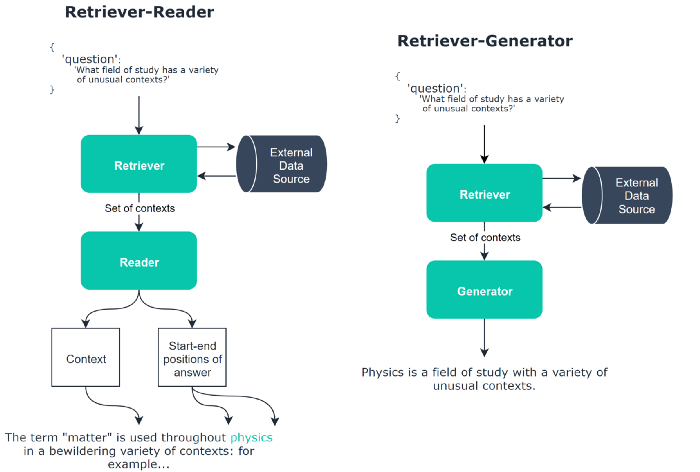
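The controller pattern described above can be sketched as a lookup table of plain functions plus one routing decision. In this sketch the LLM is replaced by a keyword stub (`choose_tool`) so the example runs on its own; in a real system that function would be the model call that returns a tool name. All function names and return strings are hypothetical.

```python
from typing import Callable, Dict


def generate_pipeline_report(query: str) -> str:
    # Placeholder for a script that queries the CRM and builds a report.
    return "report: open deals by stage"


def forecast_revenue(query: str) -> str:
    # Placeholder for a call into the forecasting service.
    return "forecast: next quarter revenue"


def answer_directly(query: str) -> str:
    # Fallback: let the chatbot answer without calling any tool.
    return "chat: general answer"


TOOLS: Dict[str, Callable[[str], str]] = {
    "report": generate_pipeline_report,
    "forecast": forecast_revenue,
    "chat": answer_directly,
}


def choose_tool(query: str) -> str:
    """Stand-in for the LLM: decide which tool name fits the user query."""
    lowered = query.lower()
    if "report" in lowered:
        return "report"
    if "forecast" in lowered:
        return "forecast"
    return "chat"


def handle(query: str) -> str:
    """Route the query to a cheap, deterministic function instead of the LLM."""
    tool = TOOLS.get(choose_tool(query), answer_directly)  # guard against unknown names
    return tool(query)


reply = handle("Generate a report of my open deals")
```

The point of the design is that the expensive model only makes one small decision, and the heavy lifting happens in ordinary code that is cheaper and easier to test.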

When building Q&A capabilities, you can implement either a retriever-reader or a retriever-generator framework. We use the former in tasks that involve open-book Q&A and require precision (pointing to specific policies, highlighting specific mentions, etc). We pull out the contexts and present the most relevant ones.
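To show the retriever half of that setup, here is a minimal sketch that ranks passages by bag-of-words cosine similarity and returns the best matches verbatim. A production retriever would more likely use learned embeddings; word counts are used here only to keep the example self-contained. The policy passages are invented.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, passages: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return up to top_k (score, passage) pairs, best first, dropping zero-score passages."""
    query_vector = Counter(tokenize(question))
    scored = [(cosine(query_vector, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(score, passage) for score, passage in scored[:top_k] if score > 0]


# Hypothetical policy snippets.
POLICIES = [
    "Discounts above 15 percent require approval from the regional sales manager.",
    "Refunds are processed within 30 days of a written cancellation request.",
    "All enterprise contracts renew annually unless cancelled 60 days in advance.",
]

hits = retrieve("Who needs to approve a discount above 15 percent?", POLICIES)
```

Because the passages are returned as-is, the user can always see the exact wording of the policy, which is what makes this style a good fit for precision-sensitive questions.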

We leverage the Retriever-Generator framework to create our simulations. For some context- we let SDRs practice their pitches and test out a bunch of scenarios. We use LLMs to generate these scenarios. To make them as specific as possible, we use retrievers to pull out interactions with the prospect. We then perform lots of data engineering and analysis on these interactions to generate the prospect profile (if the profile already exists, this step is skipped). SDRs have the option to manually change the profile parameters (changing information like anger, impatience, and receptivity) to simulate a bunch of scenarios. Building this was a very interesting experience. We had great results in our proof of concept. After deploying, we noticed an issue- the model outputs were very boring. There was a lot of repetition in the scenarios. It’s not an issue now, but it limits utility for longer-term users.

This was a tricky one to resolve because increasing temperature (or relaxing constraints) led to a sharp dropoff in quality (specifically relevance). We have resolved this issue by leveraging an LLM with longer context windows for the generation aspect. This increases the focus on the specific context and allows us to relax a few constraints. This is hacky but it works- so we ball. We don’t have to over-engineer this because it is a small part of the Clientell ecosystem, it is not a make-or-break functionality, and customer feedback has been mostly positive.
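For a feel of how the adjustable profile feeds the generator, here is a rough sketch of turning a prospect profile plus retrieved interactions into a prompt. The three parameters (anger, impatience, receptivity) come from the description above; the 0-to-1 scale, the thresholds, the prompt wording, and every name in the code are assumptions made for illustration.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class ProspectProfile:
    # Each trait is assumed to be a value between 0 and 1.
    anger: float
    impatience: float
    receptivity: float


def describe(level: float) -> str:
    """Map a numeric trait to a word the prompt can use."""
    if level < 0.33:
        return "low"
    if level < 0.66:
        return "moderate"
    return "high"


def build_scenario_prompt(profile: ProspectProfile, retrieved_interactions: List[str]) -> str:
    """Combine the profile and the retrieved context into one generation prompt."""
    context = "\n".join(f"- {line}" for line in retrieved_interactions)
    return (
        "Role-play a sales prospect for a practice call.\n"
        f"Anger: {describe(profile.anger)}. "
        f"Impatience: {describe(profile.impatience)}. "
        f"Receptivity: {describe(profile.receptivity)}.\n"
        "Past interactions with this prospect:\n"
        f"{context}"
    )


# Hypothetical profile derived from past interactions.
base_profile = ProspectProfile(anger=0.2, impatience=0.4, receptivity=0.7)
# An SDR manually overrides one parameter to rehearse a harder call.
angry_profile = replace(base_profile, anger=0.9)

interactions = ["Asked twice about onboarding time.", "Mentioned a tight budget cycle."]
prompt = build_scenario_prompt(angry_profile, interactions)
```

Keeping the profile immutable and creating modified copies means an SDR's what-if scenarios never overwrite the profile derived from real interactions.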

If you’re looking for a guide on how to build LLM/LLM-enabled systems yourself, check out the following article for more details. I break down research into the topic over there.

Now for the last part of our ecosystem- the Audio Analysis AI. This is the next frontier that we are actively working to build upon.

Audio AI

So far, the audio AI we have implemented is fairly rudimentary. We leverage Deep Learning to transcribe calls and utilize signal processing to analyze the interaction to quantify the lead’s engagement, energy level, and emotional valence. We believe that the audio (and ultimately video) information in the meetings can be used to create comprehensive multi-modal profiles of leads. This will allow us to personalize recommendations and quantify risk at an unprecedented level- leading to a great competitive advantage.
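As one example of the signal-processing side, a common basic measure of "energy level" is the root-mean-square (RMS) amplitude of short frames of audio. The sketch below is a generic illustration of that measure, not a description of our pipeline; the frame size and the toy samples are arbitrary.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float], frame_size: int) -> List[float]:
    """RMS energy of each full, non-overlapping frame of audio samples."""
    if frame_size <= 0:
        raise ValueError("frame_size must be positive")
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return energies


# Toy signal: a quiet stretch followed by a louder one (amplitudes in [-1, 1]).
quiet = [0.1, -0.1] * 4
loud = [0.5, -0.5] * 4
energy_per_frame = frame_rms(quiet + loud, frame_size=8)
```

Tracking how this value moves over the course of a call gives a rough picture of when a speaker gets more or less animated, which can then be lined up with the transcript.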

So far our priority has been cleaning up the pipelines. We have a lot of services to interact with various data sources, pull data, run models, create interactive dashboards, etc. The priority has been to cut costs and reduce the overhead from these processes. But our performance has stabilized and we have now begun to look towards expansion: both in improving our present capabilities and developing new ones. If you have any suggestions and inputs, I would love to hear them.

If you want more details about Clientell as a company, the Clientell CEO, Saahil Dhaka, would be a great person to speak to. He has been working on the company since the beginning and is still on it full-time. You can also get a deeper look into the features we have on the Clientell YouTube channel here. Of course, if you want any details on the AI, you can always reach out to me.

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
