Why the AI Pause is Misguided [Thoughts]

AI can cause harm in a lot of ways. Just not in the ways people making noise about it claim.

Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, give my first impressions, and discuss their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that stand out a lot will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

If you’d like to support my writing, please consider buying and rating my 1 Dollar Ebook on Amazon or becoming a premium subscriber to my sister publication Tech Made Simple using the button below.

p.s. you can learn more about the paid plan here.

By this point, you’ve all heard of the giant AI pause. The Future of Life Institute has called for all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. Their rationale is simple: AI models are getting more and more powerful. If we don’t regulate them properly, someone will create a powerful model with no guardrails that will cause a lot of harm. So pause the development of big LLMs, let the regulators catch up (because politicians clearly understand AI well enough to regulate it), and then proceed with AI that won’t kill us all in some Terminator-esque dystopia.

We are drowning in information but starved for knowledge.

-A quote very relevant to the situation

In this article, I will be going over the following points-

How AI actually causes harm.

Why the Open Letter is similar to putting makeup on a monkey because you can’t date Marilyn Monroe. It doesn’t address the fundamental problems, instead focusing on cosmetics.

Why this letter causes more harm than the models it aims to stop.

Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders.

-This is a quote from the letter. Very bombastic. The last line has some… very interesting undertones.

How AI Actually Causes Harm

The capabilities of an AI model are not the only factor that causes harm. They are often not even the most important factor in the equation. When AI is misapplied (either maliciously or unintentionally), there are other prominent issues that need to be tackled, not just the baseline capability of the model.

When it comes to harm caused by AI, we are giving too much credit to AI and its superintelligence and not enough credit to human stupidity.

-A tl;dr of this section

A lot of the harm caused by AI comes from the misapplication of technologies in areas where they don’t belong. Take the recent case of a professor who was falsely named by ChatGPT in a list of professors who had committed sexual assault. ChatGPT invented a fake scenario and cited a fake article in its output. In this case, there were no adverse consequences for the professor. But let’s assume that someone took the output as true and started campaigning for this professor’s removal. In that scenario, who truly caused harm: ChatGPT, or the person who mindlessly believed ChatGPT and didn’t bother verifying anything, even though it is well known that ChatGPT makes things up?

These models have flaws. I have spent a lot of time critiquing the hype around them, in which people make them out to be an almighty solution to everything you could want (there is only one true silver bullet in AI: random forests). However, it’s also important not to swing the other way and treat them as the catalyst for massive societal doom, where we all sit around while the evil AI does all our jobs. This kind of Doomerism is as bad as the hype bros on the other extreme.

One of my favorite critiques of the AI-Doomerism and the AI Letter comes from the insightful

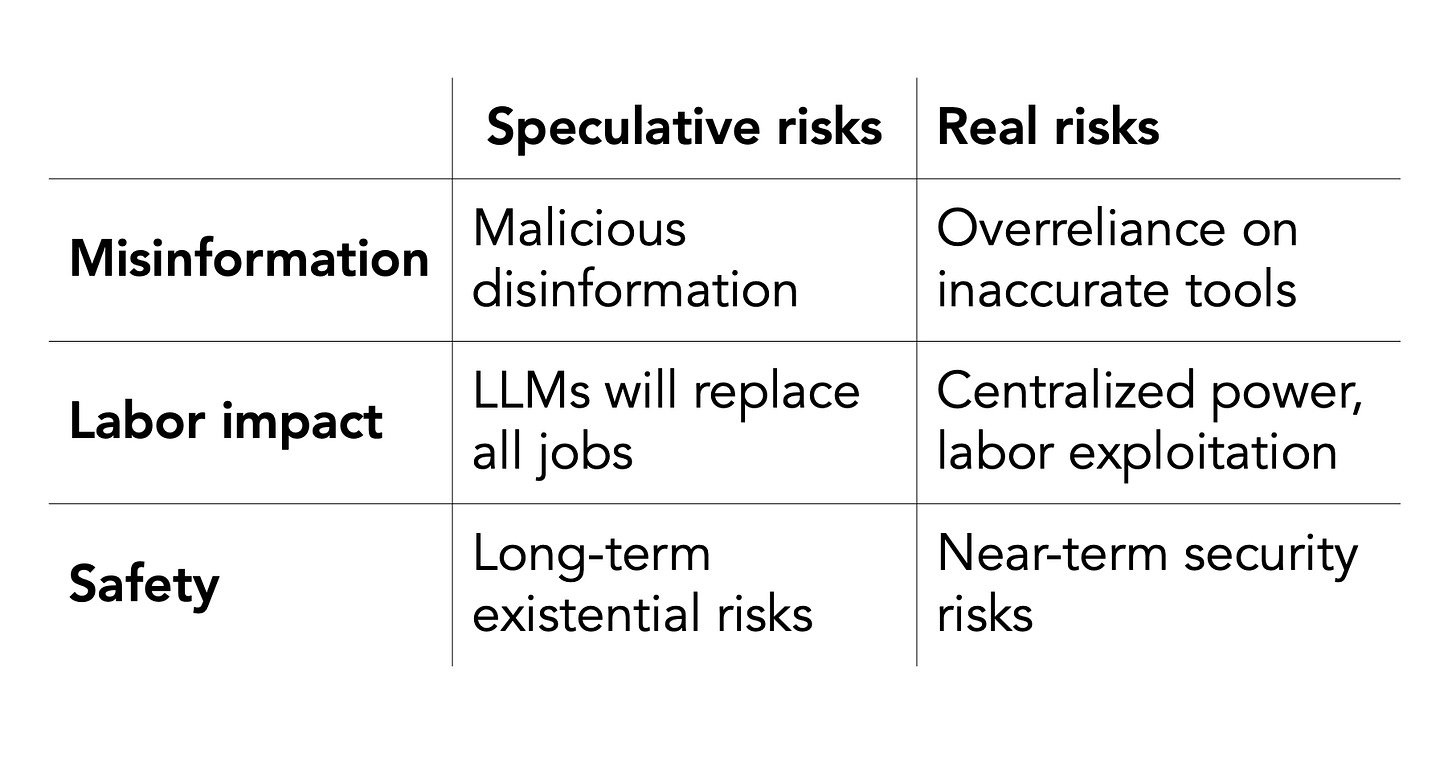

newsletter. In their insightful article- A misleading open letter about sci-fi AI dangers ignores the real risks, they very rightly pointed out that the letter and its proponents were misleading the conversation, away from the issues. Take a look at their statement-We agree that misinformation, impact on labor, and safety are three of the main risks of AI. Unfortunately, in each case, the letter presents a speculative, futuristic risk, ignoring the version of the problem that is already harming people.

They also had a fantastic matrix succinctly summarizing the difference between the real risks and the ones the Doomers were getting hot and bothered about.

Their analysis lines up with our earlier example: the harm is not caused by the model, but by someone who treats its output as gospel and uses it without evaluating it. This misapplication of AI is more common than you’d think, and it is not a problem exclusive to models as powerful as GPT-4. Humans live by the ‘Monkey-See Monkey-Do’ philosophy. People run after technologies and ideas that are trendy and gain attention, without thinking about whether those ideas are valid in a particular context. Here’s an example from my own experience.

As some of you may know, I got into AI in 2017. I was part of a group of three people who created a novel algorithm for detecting Parkinson’s Disease using voice samples. Others had done this before (many with better accuracy than ours), but our implementation gained special interest and was eventually licensed and monetized for one simple reason: our algorithm was very robust to background noise, bad signal, and perturbations. Our secret: we avoided many of the standard methods that others used (including Deep Learning). In the lab, those methods pushed accuracy scores higher than ours, but they were too fragile for real-life implementations. Too many people make the mistake of falling in love with a certain model, architecture, or way of doing things and using it blindly. We’re seeing it with ChatGPT and LLMs right now, but this has been a problem for a while.

People’s tendency to apply AI and its outputs without critical thinking is more harmful than any particular type of AI

Let’s look into one of the most common examples of how these generative models can cause harm, one that does have some merit to it: customized propaganda/misinformation.

AI and Weaponized Misinformation

People are worried that bad actors could use AI to target certain vulnerable demographics, and then use powerful models to create content that leads them down the rabbit hole of extremism. Using Generative AI, groups would be able to churn out a lot of content to hook people into their ideology. Social Media indoctrination is a very real phenomenon, and Generative AI can make it much worse by filling up a mark’s feed with their agenda.

since they are so flexible, and have the potential to be either smart or stupid, depending on how skillfully they are instructed

-A perfect quote that can be used to describe AI

On this point, I agree: this is a problem, and one that powerful Generative AI would make worse. However, the calls to pause the development of LLMs do very little to combat it, for a very simple reason: generative machine learning models are a side character in this situation. Read that again. When it comes to our propaganda-spitting AI, we have two bigger boss villains that are much more important to deal with:

The Profiling Algorithms used to discover the targets- Anyone with experience in recommender systems or other tasks that involve customer profiling will tell you that there is a lot more to building a persona than straight text. Even at Clientell, where we primarily use natural language interactions (email and voice) to build personas of prospects for our clients, we combine multiple kinds of data, from tables to special encodings, to make the final persona. The persona is built not by one giant model, but by the interaction of multiple smaller models that target various functions that Neural Networks don’t handle as well. Furthermore, the profiling algorithms used by Social Media predate LLMs and have already demonstrated their danger in this area. Stopping new ‘powerful’ models doesn’t fix this part, and it should be tackled first.

Extremism by Social Media is a social issue- In labeling indoctrination/misinformation as an AI issue, we overlook another core issue: the roots of indoctrination run deeper than AI. Mainstream media has been polarizing people for longer than I’ve been alive. Extremists on all sides have been profiting off normal people forever. Men are more suicidal than ever, Instagram has caused body dysmorphia in many young girls, etc. I’ve attended schools in 3 different countries (India, Singapore, and the USA), all of them highly regarded educational institutions. Not one of them taught me how to deal with the urge to chase validation on Social Media, how to spot charlatans, or how to think critically. Now, I attained enlightenment in the womb, so it wasn’t a big loss for me, but others weren’t so lucky. When I wrote about influencers shilling bad AI products online, 5 different people reached out to tell me how they had been scammed by some social media influencer. Coffeezilla built his brand by exposing scammers who rug-pulled everyone, including educated professionals.

Yes, more powerful AI will make more harmful propaganda/misinformation. And yes, that will sway more people. I’m not denying that. I’m just pointing out that even if we created the perfect regulation to stop new AI models from generating misinformation and propaganda, the core issues go far beyond it. Attention and resources are limited. We need to focus them on the core issues, not get swept up in hype cycles.

I vividly remember the ~2014 one. 80% unemployment rates were supposed to be around the corner. If machines can beat people at Jeopardy, who's ever going to need a human doctor anymore?

-The creator of Keras and Senior Staff Software Engineer at Google, describing the similar "AI will replace everyone" hype of 2014


Hopefully, I’ve demonstrated that the call to pause AI is not going to solve the real issues when it comes to AI harm. Now I’m going to go over the last point: why the Open Letter causes more problems in AI than the models themselves.

The Society of the Spectacle

In the 1960s, French philosopher Guy Debord wrote what is possibly one of the most accurate predictions of the modern world: The Society of the Spectacle. In it, Debord critiques how a world dominated by advertising and consumerism becomes more concerned with the spectacle of an event than with the event itself. In other words, the packaging becomes the product, and the aesthetic trumps the function. Given the prevalence of IG filters and fake lifestyles in the modern world, it’s hard to disagree.

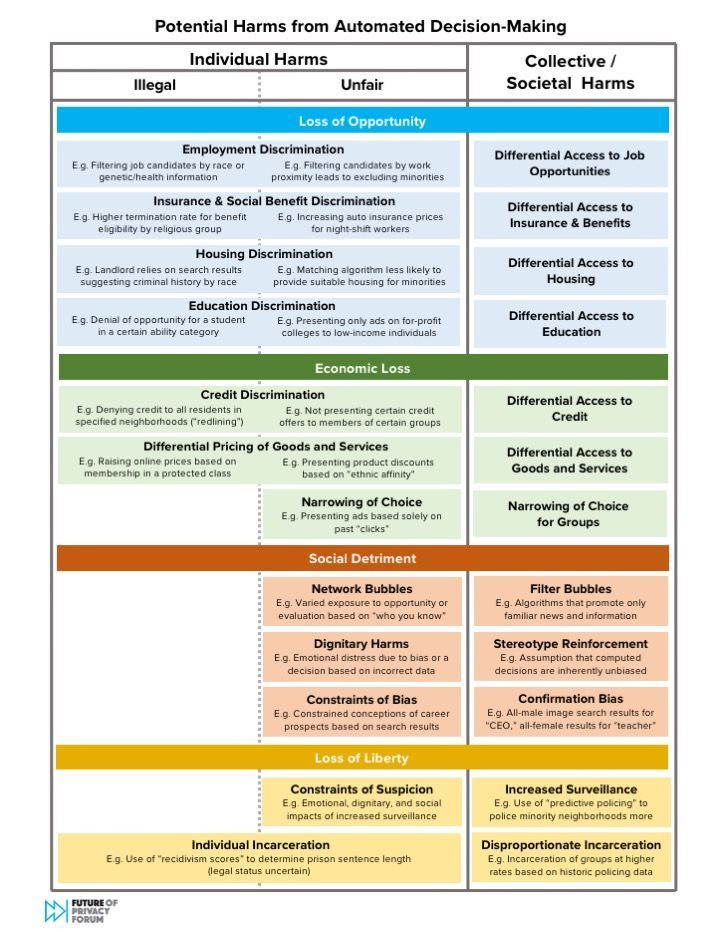

This shows itself in the AI Open Letter situation. Take a look at the very real societal problems that AI is causing and will continue to cause. These include terrible consequences like racial discrimination, extreme funneling into categories, increased isolation, and more.

Much of this is notably absent from the Open Letter. Instead, it focuses on the sci-fi dangers of all our jobs (even the fulfilling ones 😢😢) being snatched from us by automation, and of AI becoming smarter than us. Regulators have had years to make policies addressing the very real discrimination caused by AI, but have done very little.

The letter relies heavily on fear and sensationalism to get signatures, following the playbook of the New Age AI Gurus who shill 30 products a week under the claim that you will fall behind if you’re not using AI. If you aren’t ‘leveraging’ AI, your firm will go bankrupt, you will lose your hair, and your children will start punching their grandmas as they lose sight of morality. The sensationalism of this letter muddies the waters, makes discourse on the topic unclear, and ultimately creates a scenario where bad actors can use the noise to exploit people. This letter was to AI what that Sequoia profile was to SBF and the Crypto Scam.

Just like the conversation around Crypto and FTX, this letter adds a lot of noise to the field with very little substance. It’s hard to take it seriously when the letter spotlights tech celebrities like Andrew Yang, Elon Musk, and Harari rather than AI legends like Andrew Ng and Yann LeCun (who both called it a bad idea). This is another similarity to the Crypto Mania, which used financial influencers for marketing even as experts cautioned against it.

While the idea of pausing AI experimentation may seem like a plausible harm reduction strategy to implement prior to formal regulation, the context behind the open letter raises some questions. First, and perhaps most tellingly, some of the letter's most prominent signatories represent tech companies that have fallen behind the curve of AI development. As such, the call for a pause reads more as an attempt to buy time and accelerate their own AI development efforts rather than a genuine concern for establishing and implementing guidelines aimed at minimizing the potential harms of AI.

- AI Researcher Sebastian Raschka, raising some valid points about the conflicts of interest of many of the letter’s cosigners.

Fear is a powerful marketing tool, and so far the letter has been used as just that. Safe AI is a must, but the 6-month pause does nothing to address the core problems that make AI unsafe. It feels more like an attempt to slow down development, centralize power over AI in certain groups, and make everyday people cower before this scary new thing that we must be protected from.

If that last bit sounds a little tin-foil hatty to you, here is the experience of one person whose life was ruined by Doomerism-

Long story short, a little over 2 years ago I was doomscrolling about AI gone rogue scenarios, reading lesswrong articles about the typical paperclip argument ; a superintelligence will come along, you tell it 'make me happy' and boom, the AI stimulates the part of your brain that produces dopamine for eternity.

This triggered a *beyond severe* panick attack where I basically gave up on life out of the fear of some rogue AI that will just keep me alive forever and ever. Every time I tried to confront my fear the concept of living for eternity against my will overwhelmed me. I somehow got through school while being quasi paralysed 24/7 the entire year. Now although my anxiety symptoms are still the same, and I can't go to school or do almost anything, recently I've regained hope that my life doesn't have to end here

-Just as positive hype bankrupted people, doomerism causes serious harm to people. Read the full letter here

That is it for this piece. I appreciate your time. As always, if you’re interested in reaching out to me or checking out my other work, links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

https://fpf.org/wp-content/uploads/2017/12/FPF-Automated-Decision-Making-Harms-and-Mitigation-Charts.pdf
