2-Year Special: AMA, 10 Million Views, and More

A review of the past year, some hiring news, answers to your questions, and more.

AI Made Simple turns two today.

Given that this is so close to the new year, I’ve decided to roll up a yearly review, AMA, and next steps into one piece.

This post will contain the following-

My analysis of the year, including problems we had, stats about growth, etc.

My next steps for the newsletter.

Answering some Questions from the AMA.

My questions for you (I want to get to know you better, so I’d really appreciate you taking some time to answer them).

Final Notes on the AI Space and Sign-off

We will answer the following questions from the AMA-

Recommendations for starting a newsletter for a beginner.

My biggest Fear around AI

What We Need for Intelligence

How I write, how long it takes me, etc.

My favorite Article

The field in AI I’m most excited about

More active members of the community might recognize some of these from earlier messages in the Group Chat or on LinkedIn. Since I wasn’t able to get to every question (I didn’t want to make this too long), I’ll do more posts answering such questions in the Group Chat or on LinkedIn. Come join us there if you’re interested. I will also start answering some questions at the end of regular articles so we can actually get through all of them.

If you want to add your questions, put them in this anonymous Google Form- https://docs.google.com/forms/d/1RkIJ6CIO1w7K77tt0krCGMjxrVp1tAqFy5_O2FSv80E/

Let’s get into these

Last Year in Review

Concrete numbers I’m happy with at a glance-

Number of article shares: >1000 (a low-ball estimate of 30 shares per article, multiplied by the many articles we’ve written; I didn’t want to sit and count the exact number). Substack doesn’t give very good stats, so you will have to bear with estimates in a few places.

Follower count: 130K → 166K.

Revenue from paid subs: 0 (no monetization) → 20.5K USD ARR (264 paying subs at the time of writing). It’s very interesting that many people choose one of the higher-tier subscription plans, even though there isn’t any “incentive” to do so (we have a pay-what-you-can system to support the mission of democratizing AI/Tech for everyone, irrespective of their financial ability).

Number of people I had direct conversations with: >733 (the point at which I gave up on logging them). This is the number I’m happiest with.

Total Views: >10 Million

Number of times I pet my cat Chunchunmaru >= 3.

Gallons of Chocolate Milk Consumed >=1.

Substacks Recommending Us- 283 (238 + 45 for AIMS and TMS respectively)

We played a small part in Meta changing their policies to better protect teenagers on their platform from sexploitation.

A Retrospective

On the whole, I’m very happy with how last year went. I had some major concerns about the newsletter and its viability, but we got through them, and I think things have worked out for the best. Let’s first talk about the challenges we went through (they tie into some of the other questions I received) before getting to the growth.

Coming into 2024, I had lost a lot of newsletter subscribers and some of the major partnerships I had worked to build (we went from almost 200K subscribers to 130K, and our monthly reach was reduced from millions to ~300K). AI Made Simple, which was at one time in the top 10-15 Substack tech newsletters, also completely disappeared from the rankings. This happened due to a few decisions I made, so I’m not mad about it, but it was still a difficult experience.

A large (very large) part of my growth was due to knowledge partnerships I did with organizations like the tech education platform Coursera (not them specifically, but I’m using their name as an example given their prominence). I would provide my writing to them/their students for free in return for a backlink to my newsletter. I was pretty confident that I was the best at what I did, so once people were exposed to a few samples of my work, they would join my newsletters. This strategy worked out really well, and it’s how my writing grew so quickly, even though I didn’t have a major following on any other platform.

However, this arrangement was canceled around mid-to-late last year. Around this time, I wrote a few articles critiquing-

The Big Banks funding Climate Death (and companies greenwashing heavily)

The use of Child Labor in Big Tech

Amazon’s use of AI to oppose unions

Microsoft’s attempts to sell DALL-E for training AI-based weapons systems (such as Israel’s Project Lavender, an AI-based targeting system)

Government internet/information suppression tactics (through Mass surveillance, Signal Jamming to cut communications, Internet Blockers, post suppression, etc.).

I also did articles on how to use AI to fight against the last three use cases.

The organizations I was partnering with wanted me to take down these articles and not talk about these topics since they didn’t want to be seen endorsing them/ “attacking the companies/groups” I was mentioning (surprised Pikachu Face). I disagreed, so we ended up cutting these partnerships, consulting projects, and other arrangements. I spent a long time thinking about whether I made the right decisions, whether this whole thing was worth it, if I would just lose everything again, if my actions were simply performative and would ultimately be ineffective etc etc. I was also worried that the Substacks recommending me would stop for a similar reason.

Ultimately, I decided that this wasn’t too bad. I came with nothing, and worst case, I go back with nothing. I had no way of knowing if I was doing the right thing, but I did know that I would rather regret doing the wrong thing than regret behaving like a coward. So I shrugged my shoulders and wrote down two quotes that have influenced my life philosophy more than I would like to admit-

“Marry, and you will regret it; don’t marry, you will also regret it; marry or don’t marry, you will regret it either way. Laugh at the world’s foolishness, you will regret it; weep over it, you will regret that too; laugh at the world’s foolishness or weep over it, you will regret both. Believe a woman, you will regret it; believe her not, you will also regret it… Hang yourself, you will regret it; do not hang yourself, and you will regret that too; hang yourself or don’t hang yourself, you’ll regret it either way; whether you hang yourself or do not hang yourself, you will regret both. This, gentlemen, is the essence of all philosophy.”- Søren Kierkegaard (you really thought I would do an article w/o quoting him?)

and

“There is no avoiding war; it can only be postponed to the advantage of others.”- Niccolo Machiavelli. The Prince is my pick for the greatest book ever written, and I think everyone should read it.

and committed to my actions, come what may. If I was as good as I thought, things would work out fine. If not, this was the result of my incompetence, and I deserved my failure for overestimating my ability.

Fortunately, despite losing all the group partnerships, we’ve done pretty well.

We’ve grown our following by over 36K over the last year, with 264 paying subscribers (ARR 20.5K USD). Your generosity in supporting me (even though most of my articles are free) has been staggering- so thank you. In place of the organizational partnerships, you guys have really stepped up, sharing these articles more than a thousand times. Thanks to all of your generous support, our Cult did over 10 million views in the last year, fully independently. No paid ads, no institutional backing, no PR agencies involved. I wrote, you commented and shared, and together our chocolate milk cult reached some very high places. We grew almost entirely from word-of-mouth referrals.

Thanks to both the paid subscriptions and the clients I work with (consulting is how I pay the bills), I can operate with a level of freedom that few have. I’ll do my best to pay this back by bringing the best possible information to you; no clickbait, sensationalism, or jumping on trends for attention. I will reiterate my promise to you- I will continue to write about only the most important ideas, in the way that best represents them. The focus will always be on what matters, not what’s popular/what I can sell.

I’m particularly grateful to all of you who question, challenge, and occasionally even correct me. I learn so much from our interactions. Brilliant as I am, there’s only so much I can know/think about alone. Talking to you has been a great source of inspiration, knowledge, and insight into aspects I would typically never have known myself. I directly interacted with over 730 people over the last year (733 is how many I was able to log in my meeting-tracking Excel sheet before giving up on it altogether), and it always surprises me that that many people have taken time out of their busy lives to talk to me. Publicly, I don’t play favorites, but to all of you who bought me lunch, dinner, chocolate milk OR FLEW ME OUT to meet you/your team- just know you’re closer to my heart than the rest. You know how to make me feel so pretty, and I will always wear my best heels and lipstick for you.

I would also like to thank the guest authors who made some excellent contributions to this newsletter. Every single one of you brought something special. I have high expectations of contributors to this newsletter, and all of you surpassed them. Once again, from the bottom of my heart- thank you.

I’ll end this section with an apology to all of you who messaged me but never got a reply. I have a lot going on, and at this stage, I don’t even have time to read every single email/message I get, much less reply to it. I do my best to get back to everything (I’ve carved out times in my day just to read messages and reply to people), but sometimes I read a message and forget to reply. Other times, I think it’s a great comment that I should get back to later, and then I end up forgetting. I hope you’ll continue to message me. It means the world to me that people write to me.

Time for the Next Section.

Goals for Next Year

Over the next year, this is how I anticipate the newsletter going-

More Guest Posts

Our cult is a murderer’s row of experts (I’m not going to name-drop so I don’t look like I’m bragging). As mentioned, all guest authors from 2024 spat some serious venom, and I hope to continue that momentum with more collaborations. I think guest posts are a great way to learn about new ideas/concepts that I would not have thought of or known by myself and promote a diversity of thought in our community. So if you want to come in and share what you’re doing, please don’t be shy. I’m happy to turn the spotlight on you, whether it’s for one article or an entire series.

On that note, I also would invite more of you to share your stuff on the Community Group chat here. As long as you follow these guidelines, I think it’s a great way to get exposure to the other members of the cult. Nothing would make me happier than two cultists meeting and doing cool shit together.

More Case Study Style Posts

I want to cover how organizations solve problems with AI, instead of covering individual research components. If you’re a startup/company that wants to be featured, shoot me a message. Keep in mind, b/c of all the BS in the field, I will have to audit you. So only reach out to me if you are confident that you’re making meaningful contributions (your work doesn’t have to be technically impressive, as long as you solve real problems).

Fewer Lists + Individual Research Paper Breakdowns

As mentioned earlier, I don’t have as much time as before, and lists are the least fun/valuable to write. So, while we will still do them, there won’t be as many.

Similarly, I will likely be doing fewer individual paper breakdowns. There are many great educators getting into the breakdown space, so my doing them is just less necessary. Based on everything that happened, I think my superpower is looking past the scary math/code to see how things fit together. Most big-picture people don’t understand the technical/theoretical nuances (hence the bad investments), and most technical folk worry too much about the tech and not enough about the way it solves problems in society (hence the overemphasis on benchmarks and rigor w/o thinking about actual ROI).

Based on my observation, many AI projects end up wasting time solving “nice-to-haves” and not focusing on what’s critical (or they overinvest in the critical aspects). Reading b/w the lines to identify the correct problems and how much to invest in them is something I think I do better than most people, and I want to write more stuff that aligns with this.

As with the lists, I will still do individual breakdowns when I think something is super-duper important and deserves its own article.

Better Visualization (I’m hiring)

Put simply, I'm looking to make my work more beautiful.

I'm planning to add a few quirkier visuals to summarize/explain ideas (for example, in the style of one of my favorite manga, Blue Lock). Unfortunately, I am a horrifyingly bad artist (you'd probably block me if I ever drew any of you), so my attempts at this have not been useful.

This is where I’m looking to team up. We wouldn't need much- just 1-2 of these illustrations per article. If this interests you, reach out to me, and let’s talk.

Let’s move on to the Questions in the AMA

AMA

Recommendations for starting a newsletter for a beginner.

I wouldn’t recommend it. As a platform, newsletters are incredibly hard to grow. I’ll talk soon about my observations on distribution- vs monetization-based platforms, but NLs fall into the latter category. This means that growth on them is extremely slow if you don’t already have a following.

That being said, content creation is an excellent investment both personally and professionally. My first recommendation is YouTube, b/c that’s where most of the people are. It’s generally inclusive of newer creators (the 0→1 is much easier than on a platform like Substack), and it has enough name value that people check it out immediately. If you put a YT channel and a Substack on your resume, people are less likely to look at the Substack, even if it has significantly higher numbers. I speak from experience here.

If you are dead-set on writing, my recommendation is LinkedIn. It skews towards distribution but has a newsletter option. LinkedIn articles have done decently well for me. You can also leverage things like special interest groups on LinkedIn to massively up your reach and following. It’s not very pleasant to write on, so putting a draft on Medium and then copying it over is a good strategy (it’s what I do). Speaking of Medium, it’s completely dead at this point. I only use it for the writing experience, and I would not make it a priority.

My recommendation around LinkedIn is to religiously study

. He’s done some very good numbers (over 100 million impressions in one year, iirc), so studying his posts to understand his growth strategy will be key. Rube also shares some pretty interesting insights, so it wouldn’t hurt you anyway.

In terms of actual advice on how to be a good writer, I can only offer three meaningful pieces of advice. In order of importance, they would be:

Be fit: Increasing your physical fitness will boost your energy levels. In hindsight, my articles are possible because of my insane energy levels (I write for up to 12-13 hours in one stretch since I do most of my articles in one shot. During this time I don’t eat or take breaks. The most hours I’ve done in one go, FYI, is 17 for this one). Both my ability to push to the limit and my pure work capacity have likely been enhanced by exercising, and I think anyone looking to be an exceptional writer should also dedicate a serious effort to fitness. Probably the single most important thing I can say (60%).

Write a lot- The more you do something, the better you get. Ignore all tips, optimizations, guides etc. Just write. See where your skills align. Ultimately, writing is as much about self-discovery as it is about self-expression and learning how you like to write is key there. 30%.

Read a lot- Seek out different kinds of work, see what you enjoy, and try to see why you enjoy it. Iterate. Occasionally, look at successful people whose appeal you don’t get and steal from them. Read a lot, and read widely. 10%.

My biggest Fear around AI

Last year, it was the climate load from the increased use of Generative AI. I still think it’s a problem, but it seems less of a concern than I thought (I saw some very interesting discussion on Threads that I can’t find anymore). I’m still researching, but for now, it seems like I was most likely wrong about the severity of the increased Gen AI load (the crux of what I saw was that increased usage can be offset by greater efficiency, fewer people going into the office, etc.).

Now, I’m very worried about what Gen AI will do to our brains. Essentially, I’m worried about a few things-

People use it to replace large parts of their thinking. This is something I’m seeing already.

The smoothing out of people’s brains as their assessments come to rely on Gen AI.

People forget the limitations and deploy these systems without oversight.

Bad things happen (people being evaluated unfairly b/c they’re outside the norm of the training data, etc).

Harmed people have no recourse b/c most people have kind of gotten used to using GenAI as a crutch.

And this is before we get to actual propaganda. What happens when people start actively embedding serious propaganda into the AI? If people have become too reliant on them, then they might miss this and brainwash themselves. It’s like the slightest whiff of convenience makes people surrender their freedom and power.

It also concerns me that no major AI commentators seem to be discussing this regularly. Maybe I’ve read too much 1984 and Fahrenheit 451, but the way people seem to be recklessly replacing their life experiences with AI is deeply concerning to me, and I can’t think of too many other “societal risks” with AI.

The Substacker

has an excellent piece called “AI instructed brainwashing effectively nullifies conspiracy beliefs,” which talks about how people were able to use AI to rewrite people’s beliefs. More concerning than the results were the people celebrating it. In this case, it was about conspiracy theorists. But what happens when powerful groups start using this to rewrite history? AI is not a magically independent entity. It’s a function of its training and of the algorithms that prioritize certain activities. The makers of AI and groups in power have outsized influence on it, as they have on all technology. This means that it can be made to toe the line.

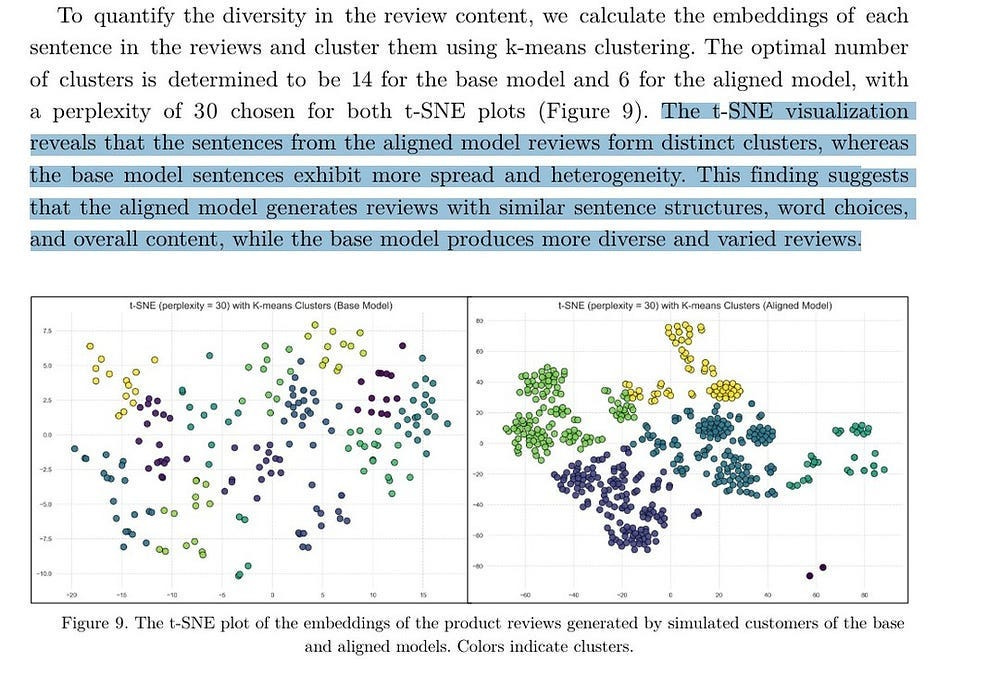

In case you forgot, let me remind you that the alignment process of LLMs explicitly reduces generation diversity-

“Large language models (LLMs) have led to a surge in collaborative writing with model assistance. As different users incorporate suggestions from the same model, there is a risk of decreased diversity in the produced content, potentially limiting diverse perspectives in public discourse.… This suggests that the recent improvement in generation quality from adapting models to human feedback might come at the cost of more homogeneous and less diverse content.”

- Does Writing with Language Models Reduce Content Diversity?
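To make “homogeneous content” a little more concrete, here is a minimal Python sketch of distinct-n, a common proxy for lexical diversity: the share of n-grams across a set of texts that are unique. It is not necessarily the metric used in the quoted paper. The two toy corpora are made up for illustration, and whitespace tokenization is a simplifying assumption.

```python
def distinct_n(texts: list[str], n: int = 2) -> float:
    """Share of n-grams across all texts that are unique (1.0 = no repetition)."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()  # naive whitespace tokenization (assumption)
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


# Made-up example outputs, purely for illustration.
varied = [
    "the cat sat on the mat",
    "a dog ran through the park",
    "rain fell over the quiet town",
]
homogeneous = [
    "the model is very helpful",
    "the model is very helpful",
    "the model is very useful",
]

print(distinct_n(varied))       # 1.0: every bigram is unique
print(distinct_n(homogeneous))  # 5 unique / 12 total, about 0.417
```

A lower score means more repeated phrasing across outputs. That is the kind of homogenization the quote is worried about when many people draw suggestions from the same model.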

This was also noticed by another publication-

I can’t pretend to be an expert that can accurately forecast how technology will reshape our brains. But I can use my meager historical knowledge to point out that when influential groups choose to become arbiters of knowledge and correctness, especially over “other” groups, we tend to see tyranny and oppression.

To summarize,

I am a little bit concerned about AI’s environmental impact.

I am quite concerned about it being used as a tool to limit free thought.

I am deeply concerned by the people running to use AI as a crutch for themselves with no concern for how they’re limiting themselves.

But maybe I’m one of the conspiracy theorists/conspiracy theory aligned people that need to be corrected. Genius mathematicians do have a strangely passionate relationship with insanity, after all (I wonder why that is). And when it comes to becoming a looney toon, cage fighters and their brain damage aren’t far behind (if you didn’t know, I used to compete in underground cage fights up until last year). So maybe I’m not the paragon of wisdom here, and y’all should catch me before I start sticking needles in my eye.

Maybe one of you can tell me if I’m very off about this risk here. I would nominate

, , since I find you to be particularly insightful for this kind of thinking.

What We Need for Artificial Intelligence

Here, we’re using intelligence to mean the kind of intelligence shown by living things, not AI in the common parlance.

I’m going to go off Dr. Lambos here. Simply put, synthetic machines are not intelligent because they are built from inflexible components. Adaptability and agency are built into our very cells, and our intelligence is a manifestation of that. Given how we build machines, this is not something we can encode into them.

To add my own spin to that- all AI we build from algorithms is based on mathematical thinking. Mathematical knowledge relies on building up from rigorously defined principles. While such thinking is extremely powerful, it has limits on what can be expressed/quantified through it: as Gödel showed, no consistent, effectively axiomatized formal system rich enough to express basic arithmetic can also be complete (able to prove or disprove every statement expressible in it).
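For readers who want the precise version, the result being invoked is Gödel’s first incompleteness theorem. In the form strengthened by Rosser, it reads roughly as follows. Here $T$ is a formal theory, $\mathsf{Q}$ is Robinson arithmetic (a weak fragment of arithmetic), and $G_T$ is the undecidable sentence constructed for $T$. This is a standard textbook statement, given as a reference rather than a proof.

$$
T \text{ consistent},\ \ T \text{ recursively axiomatizable},\ \ T \text{ extends } \mathsf{Q}
\;\Longrightarrow\;
\exists\, G_T:\ \ T \nvdash G_T \ \text{ and } \ T \nvdash \neg G_T
$$

The conditions on the left matter. The limitation applies to consistent, effectively axiomatized systems that are strong enough to express basic arithmetic.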

This limits what we can represent with algorithmic models. Our intelligence is possible b/c we don’t use purely mathematical thinking (it’s only a subset). It is impossible for any purely mathematical system to represent it.

On a deeper level, I’m a bit confused why we’re so obsessed with needing AI that will represent our thinking.

AI (in all the forms we use it) encodes processes/domain knowledge at a particular point in time. This is incredibly powerful since it gives us a completeness that’s impossible for us (even if it’s an approximation). Imo, we should be focused on integrating that thoroughness with our adaptability. Just b/c LLMs can’t do math doesn’t make them any less useful. Instead of forcing them to do math, just use other techniques and save everyone time. That’s something a lot of RAG teams, in particular, get wrong. There’s no reason to forget decades of information processing and search research just b/c Vector Search is possible now.
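Here is a minimal Python sketch of the “use other techniques” point. Anything that parses as plain arithmetic goes to a deterministic evaluator, and only the rest is sent to the model. This is an illustration, not how any particular RAG stack does it. `call_llm` is a hypothetical placeholder for whatever model client you use, and the evaluator deliberately supports only `+ - * /` and unary minus.

```python
import ast
import operator

# Whitelisted arithmetic operators; any other syntax is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def _eval_node(node):
    """Recursively evaluate a parsed expression made only of numbers and _OPS."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)) \
            and not isinstance(node.value, bool):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.left), _eval_node(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.operand))
    raise ValueError("not plain arithmetic")


def try_arithmetic(text):
    """Return the exact value if `text` is a pure arithmetic expression, else None."""
    try:
        return _eval_node(ast.parse(text, mode="eval").body)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return None


def call_llm(prompt):
    # Hypothetical placeholder: swap in your actual model client here.
    return f"[LLM answer to: {prompt}]"


def answer(query):
    """Route arithmetic to the deterministic evaluator; everything else to the LLM."""
    result = try_arithmetic(query)
    return str(result) if result is not None else call_llm(query)


print(answer("2 + 3 * 4"))      # handled by the evaluator, not the model
print(answer("What is RAG?"))   # falls through to the (stubbed) LLM
```

The evaluator walks the syntax tree instead of calling `eval`, so something like `__import__('os')` is rejected rather than executed.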

How I write, how long it takes me, etc.

This was the specific question, which I’ll break down into its sub-parts- “What is your approach and research sources when you plan to write out an article? Your insights are deep and profound - how many hours go into each article?”

What is your approach and research sources when you plan to write out an article?- I read/listen to a lot of stuff (I’m going through 1000s of pages of research, blogs, talks, articles, etc., every week, no exaggeration). I talk to a lot of people. So my ideas come from recommendation algorithms, someone’s posts, a conversation I have, or something I notice while working with clients.

This is like my pretraining since this is where I’m learning and building connections. I enjoy most of pretraining since I listen to lectures, paper readouts, etc. while playing video games (Endless Legend, Civ, and AoE 2), cleaning, or doing something else that doesn’t burn me out. It’s only when my ears pick up something interesting that I read into it deeper (which is not fun- it’s work- but I have to pay my bills).

A lot of my reading is non-technical and on topics I enjoy- economics, history, how problems are solved, etc- which makes this a lot less boring than if I was only reading AI papers. It’s also why my writing covers a much wider range than other AI Research writers- since I often blend various interests into one piece.

The really tiring bit is the writing. I don’t enjoy it, but sculpting greatness with your hands is fulfilling. It’s also one of the few times I allow myself to drink chocolate milk, so I am motivated to write.

Usually, my writing is in a few camps-

Look at this cool thing I learned.

This person said something wrong that I want to correct.

Here are some overarching thoughts that I have on a topic that a lot of people are discussing.

Let’s talk about a phenomenon that I think is worth talking about.

Here’s an inspiration from a conversation I had.

Each article is generally written for 1-3 people. By that, I mean that I think about the people that I want to read this and write for them specifically. Our audience is very diverse, so if I tried to please everyone- I would create an incredibly mediocre blob. Picking people helps me focus my attention and decide what I should talk about in one article and what might be better left for later.

how many hours go into each article- Pretraining is hard to measure, b/c so much of it is tied to my day-to-day life. Most of it is also not really work (I enjoy a lot of my reading/learning), so I don’t measure it. But I do spend a LOT of time learning or thinking. That’s the majority of my week.

Writing, I can be more precise about since I time it (along with the other things I don’t enjoy, like deep dives into papers, analyzing reports, etc.). Each article takes me around 8-10 hours on average, with sessions often extending to 12-13 hours. As mentioned earlier, I write everything in one go. Since I only really do 2-3 articles a week, it’s pretty manageable. I can sustain it b/c I enjoy large parts of my main work (talking to people, figuring out how to solve problems, the research for pretraining, thinking about random things in my head, etc.), so the additional cost of the actual writing is much lower for me. Aligning the things I like with my main work has been a winner for me.

My Favorite Article that I’ve written

It’s gotta be the first non-technical article I did.

4.5 years ago, I wrote my first business-related article. It has been the most important article I've ever written, because it had a hot-take that changed my writing forever.

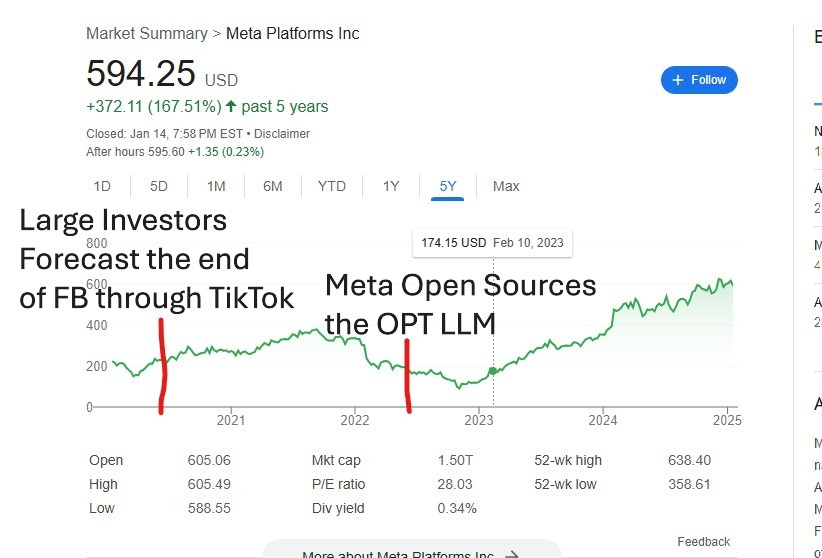

The take was about Facebook. I had just gotten into investing, so I was listening to a lot of top investors and analysts. There was a prevailing sentiment that TikTok (which had seen explosive growth, especially during the pandemic) would wipe out Facebook. I disagreed heavily. Aside from FB making money while TikTok was not, I also thought they missed two things-

1. FB’s massive contributions to open-source software.

2. Their amazing AI research.

In my mind, both would allow them to iterate, build, and expand much faster than TikTok and be a strong competitive advantage. This made Facebook so much more than a social media company. This sounds simple, but all the stock analysts and commentators seemed to focus on TikTok's strong growth among US teenagers, and not much on anything else.

I looked at all this surface-level analysis and thought, “None of you know what you’re talking about.” This gave me a lot of confidence since it meant that there was a place in the market for someone like me to get in. This was the first time I started exploring the intersection of business and tech- and it helped me realize almost no one knows how to price the more “deep” tech concepts accurately. Eventually, this led to my investment thesis, and my first 100 USD/hour contract.

Given how much it changed my life, that’s my number 1. The number 2 is also about Facebook (which had turned into Meta by this point).

In May 2022, I covered Meta open-sourcing their OPT LLM, saying it was a brilliant move that would change the LLM space forever. This was something I reiterated in my end-of-the-year review, where I ranked Meta ahead of OpenAI despite OAI's explosion in popularity through the release of ChatGPT. When OPT was released, most people didn’t know what a GPT was, and the few business people who knew about this all criticized Meta for wasting money (releasing an expensive model for free).

Since then, my prediction has aged pretty well-

Interestingly, this was actually one of the main things mentioned in one of the reviews my work got-

Both these articles were huge for me to go after investors and financial analysts as a client base, even though I didn’t have any traditional qualifications for this. This has gone so well that, based on the conversations I’m having right now, this is likely to be the first year I have an even split between investors (helping them w/ sourcing, assessing, and pricing investments) and actual technical teams. I’ve even been offered jobs by VC firms on the basis of my market/financial analysis.

Btw if you’re looking to understand why the OSS strategy is such a powerful business strategy, check this out-

We’ll end with another business oriented question.

The field in AI I’m most excited about

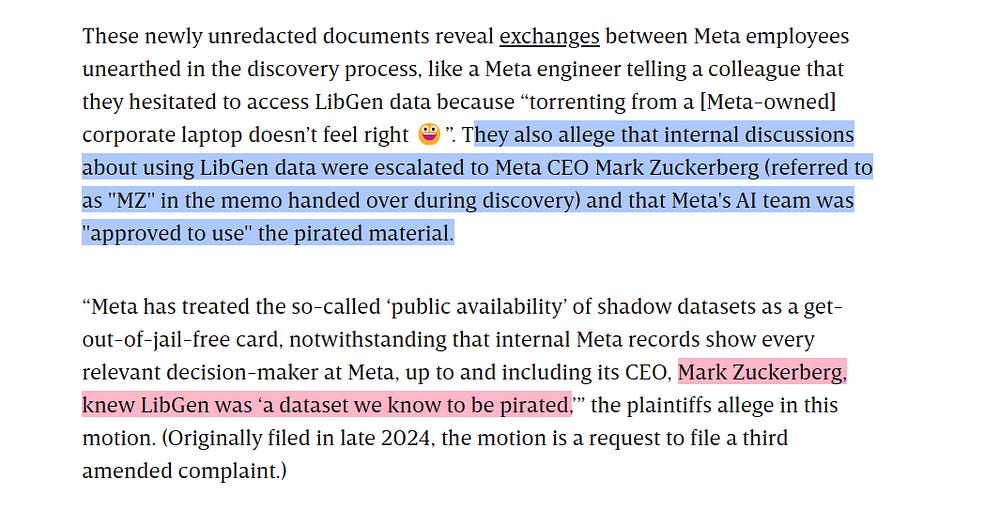

As you might have learned, it was recently revealed that Meta used pirated data from LibGen to train their Llama Language model-

This got me thinking about a few things-

1. In my article, “6 AI Trends that will Define 2025”, I selected Adversarial Perturbation (AP) as one of the major trends that people should be focusing on. This development makes me more bullish on it, since publishers will now also start looking to protect their IP.

2. AP has naturally good economics: the labor capable of doing AP is significantly underpriced; it has natural affinities with scale (a lot of the conversation around OG AP is about implementing it on edg(i)e(r) devices like smartphones, which both reduces inference costs for providers, since it runs locally, and forces it to run on fewer resources, since it’s on a smartphone, not the cloud); and it can be priced efficiently.

3. AP will explode in popularity b/c it is particularly relevant to a few different groups, each of which has very high distribution-

Artists/Creators- They have been extremely critical of AI companies taking their data for training w/o any compensation. More and more people are starting to look for ways to fight back and have started learning about AP. One of the groups I’m talking to is a coalition of artists with millions of followers (and hundreds of millions of impressions) working on building an app that will make attacks easily accessible to everyone. They found me, which is a clear indication of how strong the desire for such a technology is.

News Media + Reddit- NYT and other media groups would benefit from AP as a tool to prevent scraping and training on their data without payment first.

Policy Makers- Given the often attention-grabbing nature of the attacks, I think a lot of political decision-makers will start mandating AP testing of AI systems. I can’t divulge too much right now, but I’ve had conversations with some very high-profile decision-makers exploring this.

Publishers- Publication houses can’t be too happy about their data being used to train models with no compensation to them. A publishing house could corrupt all of its material to make it unusable for scraping and then make a separate clean set available for training after payment.

4. As a field, almost no one knows it, meaning it’s not crowded (valuations aren’t jacked up).

5. It has appeal to people not traditionally entrenched in AI (even technical publishers like O’Reilly don’t have deep investments in AI themselves).

6. Points 3, 4, and 5 mean that we can open a whole new market that doesn’t really exist right now.

7. It has positive synergies with the Data Market (both real and synthetic), which is primed to explode.

8. It picks a fight with the operating model of Silicon Valley. There’s no better way for an entrant to establish themselves than picking a fight with established players. Resource discrepancies aren’t as much of a problem with AP, so the fights are a lot more even than you’d assume.

I have to do more market research before a proper deep dive into the AP market, but given the combination of all these factors, AP is possibly the most undervalued sub-field in AI (if there's a more undervalued field, I can't think of it). Small investments in the right teams will yield massive returns.

This is why I did a whole primer on it yesterday on LinkedIn. So far it has been very well received, and I got some interesting leads. If you are someone interested in this space, please do reach out to me.

That brings us to the end of this AMA. Thank you all for the questions. Hopefully, my answers gave you the insight you were looking for.

For the final bit, I would really appreciate you taking the time to fill out this short survey. I originally asked a few of my marketing friends to create a KYC/demographics survey, but their questions didn't really inspire me, so I wrote a bunch myself. Forgive them if they're a bit weird; I thought they'd be a good way to get to know all of you. Neither I nor anyone else will see how you vote, so please be honest. I know some people have trouble answering Substack polls (or polls embedded in their emails), so feel free to email or comment your answers, or skip this and go straight to the final notes on AI.

Help me Understand you

Final Closing Notes on AI

When I look at the AI Space, I feel excitement. I think there’s a lot more open space in the world than you might assume. Things have changed so much, and I believe that even established experts are mostly bluffing. No one really knows how the cards are going to fall or what will end up being truly important. I believe chaotic spaces like this are the best places to be a human.

I guess what I want to say is that I'm going to bully my way to GOAT status- first on Substack, then in AI, and eventually of all humanity. I hope you feel privileged since you get front-row seats to the whole thing. I know I feel privileged that I get to go up with such a fun group of people.

I might be delusional. I might be a visionary. I don't know. But I am a lot of fun. Hitch a ride on my wagon, and I promise you're going to have a great fucking time either way.

Thank you for being here, and I hope you have a wonderful day.

Dev <3

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast (over here)-

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
