Why is Agentic AI so Powerful [Agents][Thoughts]

Why (well designed) Agentic Systems Work as Well as they do

Hey, it’s Devansh 👋👋

A lot of us are very excited about the future of Agentic AI. Agents is a series dedicated to exploring the research around Agents to facilitate the discussions that will create the new generation of Language Models/AI Systems. If you have any insights on Agents, reach out, since we need all the high-quality information we can get.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 200K readers weekly. Many companies have a learning budget that you can expense this newsletter to. You can use the following for an email template to request reimbursement for your subscription.

PS- We follow a “pay what you can” model, which allows you to support within your means, and support my mission of providing high-quality technical education to everyone for less than the price of a cup of coffee. Check out this post for more details and to find a plan that works for you.

A month or so ago, I did the following article on how Agentic AI could enforce better software design principles for AI systems. This article will be a much deeper exploration + expansion of the ideas I played with for it. I figured it would be a good way to end our mini-series focusing on Agents since we’ve danced around, flirted with, but never directly answered the question- why is everyone making such a big deal about Agents? What makes Agents so appealing to everyone trying to build on LLMs? Why are they

This article will be my attempt to answer this question. To do so, we’ll first talk about the principles that I believe align best with Agentic AI and then cover some case studies on how we might choose to implement them. As usual, below here is an overview of Agentic Systems and their impact from perspectives (larger screens → better reading experience)-

Executive Highlights (TL;DR)

What makes a system Agentic?

Many of you are no doubt sick of hearing me answer this question, but please do bear with me one more time. Agentic AI systems decompose a user query into a series of mini-steps, handled by different components. Instead of relying on a single LLM (or another AI) to answer a complex query in one go, Agentic systems break down the query into simpler sub-routines. These sub-routines tackle problems better by being modular, auditable, and easier to extend.

For instance, consider a sales-related question. Instead of having one model perform every task (querying data, formatting results, and generating responses), Agentic AI can:

Use a source-specific SQL generation tool for structured queries.

Leverage an LLM for natural language processing tasks.

Employ traditional algorithms for computations or predefined rules for constraints.

This article is going to talk about why we might want it. But before we start, I think it’s important to discuss a misconception about Agentic AI and its costs. People often underestimate the costs of building Agentic Systems, especially when they read reports and comments about how efficient these systems are (including from people like me).

While Agentic Systems are more efficient and have better ROI after setup, they come with a much higher cost of development (research, additional employment, maintenance, etc.) and thus are better suited for teams with more disposable cash and available teams. I’ve noticed a growing trend with teams that lack the necessary foundations trying to prematurely get into Agents. Not every team should keep a luxury player like Messi. Some teams can buy mature stars. Others should focus on developing Youth Talent and convincing Todd Boehly to pay 100 Million for them (Chelsea was looking good this season though, before they started Chelsea-ing again).

On another note, since the benefits of Agents come if you build them well, our following guide on How to build Agentic Systems might be useful to you. It went half-viral (thank you all for sharing that), and was the source of this meme that I was very proud of making-

Back to our main conversation. Why is everyone getting hot and bothered about AI Agents? There’s a short answer and a long answer.

Why People Love Agents- Short Answer

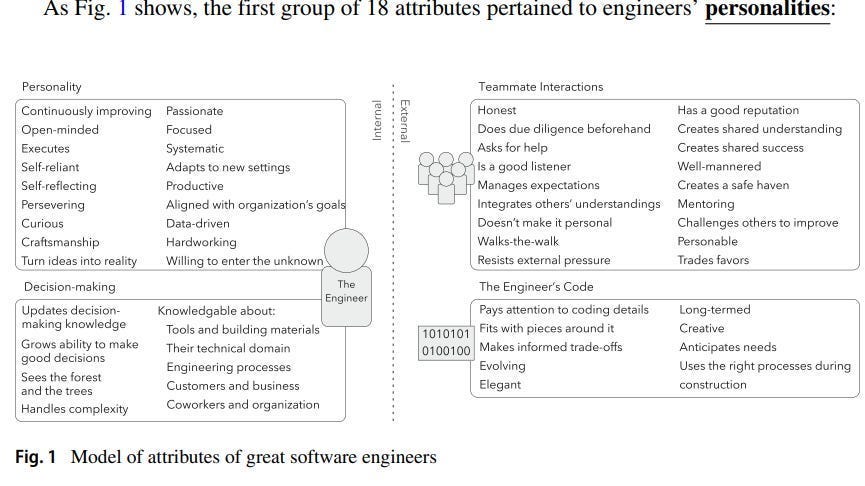

Good Code is, above all, very flexible. Code that can be changed easily by anyone can be improved more quickly. This is the only real directive that I try to enforce on my AI Research people at SVAM- write code (+ documentation) that makes all future changes as easy as possible. Even Microsoft’s study into SWE- “What distinguishes great software engineers?”- had similar conclusions, with “Adjusting behaviors to account for future value and costs” being rated as the 2nd most important trait (the first was a more generic “writes good code”).

Agents are great because they make LLM-based systems easier to modify. Traditional LLM-based systems have a lot of instability and vulnerabilities that can be triggered at unexpected moments. Agentic Architectures give us better accuracy, fewer uncontrollable errors, and the ability to tack on more features.

Now for the longer answer.

Why People Love Agents- Long Answer

Agentic Architectures break down a user’s request into smaller steps, each handled by a specialized agent. These agents can be diverse:

LLMs for specific tasks: One agent might summarize text, while another translates it. This is generally better than giving multiple instructions to one instance of an LLM.

Traditional algorithms: An agent could perform calculations, access databases, or interact with APIs. This is highly recommended for things like search, computations query generation, etc.

Rule-based systems: Agents can enforce constraints or handle specific scenarios based on predefined rules.

The system controls these agents, coordinating their actions and combining their outputs to produce the final result. This approach contrasts with the monolithic approach of using a single LLM for everything.

Agentic Architectures have a natural synergy with Good Software Design Principles. Building agentically, we can improve-

Modularity: Modularity is the practice of dividing a system into smaller, self-contained components or modules that can function independently. We want modular systems because they reduce complexity and allow teams to focus on individual components without interference. Agentic AI naturally supports modularity by using specialized agents for tasks like data querying or response generation. This isolates errors and allows for easy reuse of components across projects.

Scalability: Scalability is the ability of a system to handle increasing workloads or larger data volumes without sacrificing performance. Given that Tech is most profitable when leveraging economies of scale, the importance of this should be clear.

Agents are great here for two reasons. Firstly, Agents enable the development and maintenance of more complex systems- which increases the amount of things you can do. Secondly, Agents can reduce your computing costs per task by reducing the number of more expensive LLM calls for certain tasks by replacing them with cheaper processes.

Separation of Concerns: Separation of Concerns involves organizing a software system into distinct sections, each focusing on a specific functionality. This simplifies development, reduces complexity, and minimizes the risk of unintended side effects when making changes. Agentic AI (I’m getting tired of typing this, so will just use AAI) embodies this principle by assigning specific roles to individual agents.

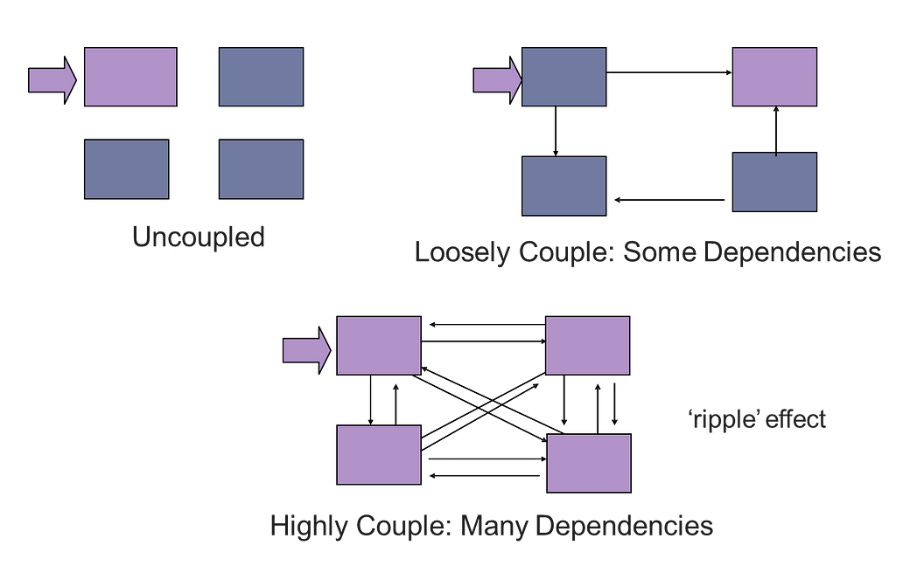

Loose Coupling: Loose coupling is the practice of minimizing dependencies between components in a system. This principle ensures that changes or failures in one part of the system do not propagate to others, making updating, maintaining, or replacing components easier. Similar to above, specialized Agents allow us to build and change functionalities without impacting the larger setup much better than with traditional LLMs.

Robustness: Robustness is the ability of a system to maintain functionality and performance even when faced with errors, unexpected inputs, or partial failures. This principle is especially crucial for critical domains like healthcare or legal applications, where the vulnerability of LLMs to minor changes to inputs can be very problematic. AAI boosts this in two ways- the scalability adds robustness toward one of the biggest problems in tech (overload causing weird behavior) and the separation of concerns + Loose Coupling is very helpful in helping us isolate and track errors.

Consistency: Consistency ensures that a system behaves predictably and uniformly across its operations, something traditional LLM Systems lack at the moment. Consistent systems reduce confusion for users and make debugging + development more straightforward by providing reliable outputs. Consistency is one of the biggest improvements that Agentic Systems can provide for LLM based systems in several ways byincreasing the usage of more deterministic/stable components and reducing the amount of “entaglement” by limiting the uneccesary context that LLMs have to interact with (this is a bigger problem than you’d think). I’m not going to elaborate too much on this because even though this is an extremely important benefit- I don’t have that much to say about this specifically.

The rest of this article will take each principle and build on it. We’ll play with ideas like where traditional LLM systems fall short and how AAI enables these principles, and we'll talk about some case studies. If that sounds interesting, let’s get into it.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix Subscription (pay what you want here).

I provide various consulting and advisory services. If you‘d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

How Agentic Architecture can Boost Modularity

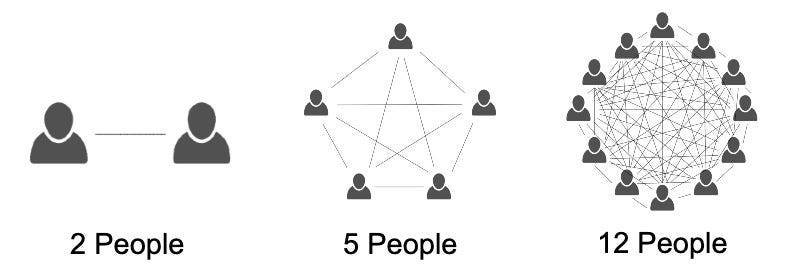

Modularity involves breaking down a complex system into smaller, independent modules. This is crucial for managing complexity, promoting code reusability, simplifying development and maintenance, and isolating errors. Non-modular systems can have increasingly complex connections, as non-specialized modules tend to take on larger and larger responsibilities, making the code base harder to iterate on.

This is especially important for LLMs, which tend to suffer from a “Swiss Knife Problem”- they seem like they can do everything, so they’re often plugged in for a large diversity of tasks (I guess most AI Teams haven’t fully imbibed the lesson that teenage me learned the hard way- just b/c you can do something (like throwing Laxmi Bombs at your friends to celebrate Diwali), doesn’t mean that you should do it).

Let’s see this from a practical example. Imagine building a customer support chatbot, one of the most common use cases for LLMs. Most teams try to implement LLM Based Customer Support by mixing the following-

Using longer and more complex prompts to lay out specific instructions and workflows, tell the AI to look at specific areas, etc. As some people call it, “Prompt Engineering”.

Try to look for a more powerful model

Use a Specialized model fine-tuned with the data that you’re processing (LLMs for tabular data etc).

However, this is extremely inefficient and will lead to all kinds of errors. Instead of a single LLM trying to handle everything, you could have specialized agents:

Query Processing Agent: Processes user input to extract intent and entities (e.g., convert “I want to track my order #” to a command like track order (order ID) ).

Order Tracking Agent: Queries the database for order status using the extracted order ID.

Response Generation Agent: Constructs a natural language response based on the order status.

Escalation Agent: If the order status is problematic or the user expresses dissatisfaction, this agent routes the conversation to a human operator.

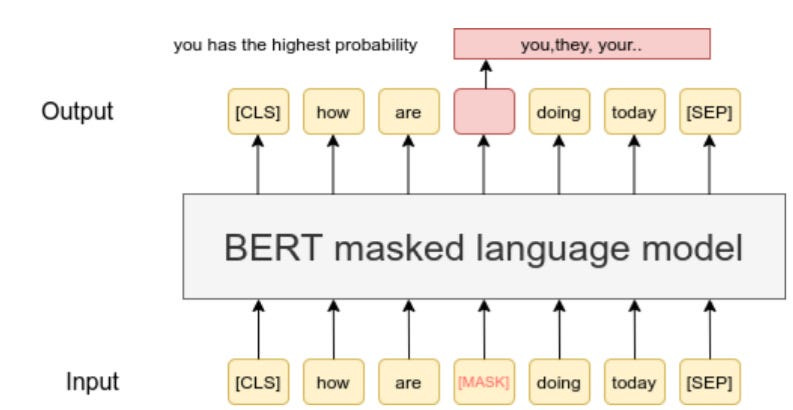

This allows you to really max out performance. For example, both the Query Processing and Escalation agents rely more on understanding/translating than raw generation (the setup behind the standard Decoder-only Autoregressive LLMs like GPT, Claude, Gemini, etc.). And not a lot of people know (or have forgotten this), but other kinds of transformers are more suited for these specific setups.

In general, BERT or other encoder-heavy models will do a lot of good for you when it comes to query classification. Because they tend to be stacked with auto-encoders and are often trained with bi-directional masking (you mask a token, look at both contexts in front and behind the masked token, and then try to predict what the masked token is), you tend to get a more robust understanding of the language-

This is why BERT-based language models often beat up SOTA LLMs in many domain-specific information retrieval and language understanding challenges, even when they are smaller-

When considering the standard 12 layer of BERT, our experiments show that (Chat)GPT achieves comparable performance to BERT (although slightly lower) in regard to detecting longterm changes, but performs significantly worse in regard to recognizing short-term changes. We find that BERT’s contextualized embeddings consistently provide a more effective and robust solution for capturing both short- and long-term changes in word meanings.

But that’s not all.

Since each agent focuses on a specific task, our system is easier to understand, debug, and extend. The processor/escalation agent could be reused in a different application, like a voice assistant. If the Order Tracking Agent fails, the other agents can still function, offering a generic apology and suggesting alternative contact methods. And most importantly, this improves the performance of your system in many key ways for example-

Things like Order Tracking and large parts of response generation can be done with standard techniques reducing costs (no calling expensive LLMs). The latter is particularly worth emphasizing- don’t forget that template strings still exist, and a LOT of outputs can just be used

It also wipes out any uncontrollable errors introduced by LLMs.

It improves the transparency of your system since you’re relying on more well-understood setups.

So much cleaner than LLMs for everything.

For another example- I was working with someone on scoring the importance of events based on the metadata (where it took place, what people were involved etc). The workflow was pretty simple-

Take the event.

Score it’s various metadata points based on rules (if an event is held in NYC, then it’s location score is 10… Give seperate scores for every metadata). For certain metadata categories, they didn’t have hard and fast rules, but so they asked the LLM to come up with a score by itself.

Once you have the metadata points, combine them according to a formula for the event importance score.

Summarize the 10 most positive and negative comments (each) from the event to understand user feedback. The events were small enough that they were just loading the entire comment list and asking the LLM to find them.

They were originally following a very complex prompt by asking the LLM to do all this at once (event + metadata fed in — -> score is outputted). They reached out to me since the costs of this scaled up were massive, and they wanted to see if there was a more efficient way to do this. We dropped the costs ~93% and increased performance by 37% by doing some simple tweaks-

Created dictionaries for each metadata category (instead of telling the LLM to assign a location score of 10 to events NYC, we did a simple dict with key “NYC” mapping to 10).

For the metadata where we didn’t have concrete rules (no dictionary to create the mapping)- we had Claude create a dictionary once before running the main scoring function. We edited the dictionary to tune the values according to their research. This way they only had to run one LLM call, instead of calling the LLM to score the unclear Metadata every time.

Run the scoring formula in a function.

Use a simple sentiment analyzer to score positive and negative comments, and then pick the top k=10.

Use the LLM (Claude since Gemini was very fragile and GPT is more expensive) for summaries + analysis.

This cut down the costs of the LLM costs tremendously (much shorter prompt) AND improved their performance since LLMs suck at math + having predefined dictionaries made the scoring deterministic. These metadata scoring dictionaries(which are primitive Agents) are also now in their own functions- allowing them to be reused in other places/extended easily.

Many of you are very advanced, so you might wonder why I took so much time to explain such an obvious solution. However, it is worth noting that many modern teams attempting to work with AI have no technical understanding of AI (their AI Engineers are often Full Stack Devs who know how how to call APIs) and thus miss all kinds of best practices associated with designing AI products.

This also extends to many AI products/service providers. Call me elitist or arrogant, but it’s a simple fact that many Gen AI Teams are really really bad, and the only reason they haven’t been fired or gone bankrupt is that the people hiring or funding them don’t have the skills to even comprehend how bad they are. It’s similar to how I would struggle to judge and critically evaluate an opera show since I have absolutely no understanding of that art form.

Let’s end that tangent and continue onwards.

To summarize, Modularity is great b/c it isolates responsibility- promoting reuse, improving performance, and allowing greater specialization. All very helpful. Agentic AI is a way to promote that within LLMs since LLMs require a clear demarcation of different tools/agents- which is essentially the same as splitting them into different modules.

Next, let’s talk about the next principle.

Scalability and Agents: Building AI Systems That Grow With You

In my mind, traditional LLM architectures face three critical scaling bottlenecks:

Cost Scaling: Every request, no matter how simple, invokes the full model. This leads to an interesting dilemma- do you use a more capable model for increased ability, but one that will be overkill in most cases? Or is a simpler model better for its costs? This becomes a bigger problem when we have to bake in input validation, preprocessing, and error handling within the LLMs.

Complexity Scaling: As requirements grow, prompts become increasingly byzantine. What starts as “Answer customer questions about orders” evolves into “Check order status AND calculate shipping AND verify inventory AND suggest related products AND…” Each addition makes the system more brittle.

Performance Scaling: LLMs slow down with context length. A system that works fine with 5 product descriptions might crawl when handling 50 and completely break with 500.

There’s a special kind of hell where 2 and 3 intersect. LLMs struggle with a phenomenon that I call context corruption- where prior context (even when unrelated to the current task) can skew outputs (if there’s an official name, lmk. Otherwise, I’m claiming this)-

There is a lot of other research that shows how often LLMs can be influenced by seemingly irrelevant factors like word choice, order of choices, etc. This is one of the many reasons that I think people significantly overestimate the impact “reasoning models” will have on AI adoption/capabilities (imo, the biggest hurdle to Gen AI products isn’t their base knowledge, but this is something that we will discuss in another article).

That is one of the biggest reasons why I don’t rate discussions around Context Length very highly. Your model’s pure context length is meaningless if it gets confused with the information that’s put into the context length. Usable context length is a lot harder to determine.

Most LLM Based Systems hurt themselves b/c they overload the LLM with irrelevant information (90% of the input ends being irrelevant to the query). This is done either actively (more complex prompts, as discussed previously) or subconsciously- relying on techniques like Alignment or Fine-tuning to force knowledge into a system.

Either way your system’s scale suffers in several ways-

Your capabilities are limited since more of your computing costs are proportionally allocated to traversing the search space and thus limit more complex inference.

The information in search space can create all kinds of conflicts, even if every individual data point is valid. This is one of the reasons that hallucinations are a mathematically unsolvable problem in general-purpose LLMs (and why you should build around them in domain-specific cases).

All of this is very costly.

Agents, with their modularity and separation of responsibilities, allow for scaling in various ways. Obviously, they enhance the capabilities (I can add in agents for formatting, visualization etc)- which we will discuss later. However for this section, it’s better to think about the horizontal scaling ability this gives us. The decomposition of the workflow into substeps allows us to be more refined with how we want to allocate resources efficiently.

Consider a system processing large volumes of scientific literature. An agent-based approach could employ:

Ingestion Agents: Multiple agents working in parallel to download and pre-process articles from different sources (many of our clients really want bots to pull information from specific sources).

Analysis Agents: Specialized agents performing different analyses, like topic extraction, sentiment analysis, or named entity recognition.

Retrieval: Parses through the publications to pick the 10 best ones for us. In usual retrieval you normally combine a lightweight filter to parse through most sources and a heavier model for a later stage filter.

Ranking- Walks through the retrieved publications to rank them for our information to pass the top 3.

Aggregation Agent: Combines the results from the analysis agents.

This distributed processing allows the system to handle massive datasets efficiently. Let’s say the volume of literature increases 100x (I would imagine AI has gone up way more than that). We can account for this increase by-

Spinning up 100x more on Ingestion and Analysis agents. This is simplistic (usually you’d follow some inverse scaling law, but I’m steelmaning the argument and saying we pull everything).

Increasing top k for retrieval to 30.

Increasing the reranking top k to 5 instead of 3.

Here, your total increased investment is less than 100x since the other components did not have to scale by the same amounts. This is going to be much more efficient than a traditional LLM Based system since the only way to scale it up would be spend more money on the LLM itself (and if your data information ends up being more than your models representational capacity- you’ll have to rebuild a new model).

A good irl of this principle is Uber’s QueryGPT, which allows users to generate SQL queries through natural language prompts (this use case is incidentally, how I first got into LLMs in 2022. But with a Multi-national Supply Chain Company, not Uber). QGPT sped up the query generation 3x for the users (10 mins →3 mins). One of the keys to its scale is that it only calls the GenAI creator at the very end, after it has filtered through the data etc. This means that the cost of calling the LLM will grow sub-linearly when compared to the increasing dataset sizes. The Architecture is shown below-

The principle next is a personal favorite of mine.

Separation of Concerns, Loose Coupling, and AAI

I will mix SoC and Loose Coupling into it to avoid getting too repetitive.

Traditional LLM Based Systems have very serious attachment issues. Let’s understand them to understand why these are important.

Task Interference

When you have one model juggling multiple responsibilities, changes to one task often affect others in unexpected ways. A simple update to how you handle date formats might suddenly impact how the model generates SQL or writes reports. This happens because LLMs don’t maintain clear boundaries between different types of processing.

The really interesting part about this interference is how subtle it can be. Even seemingly unrelated changes can cause unexpected behaviors. Add instructions about handling customer names, and suddenly, your price calculations start including tax differently. Change how you format addresses, and your product recommendations shift. It’s like having a butterfly effect in your system, where small prompt changes ripple out unpredictably.

This is one of the reasons that Fine-Tuning often adds unexpected security errors-

“Disconcertingly, our research also reveals that, even without malicious intent, simply fine-tuning with benign and commonly used datasets can also inadvertently degrade the safety alignment of LLMs, though to a lesser extent. These findings suggest that fine-tuning aligned LLMs introduces new safety risks that current safety infrastructures fall short of addressing — — even if a model’s initial safety alignment is impeccable”

-”Fine-tuning Aligned Language Models Compromises Safety, Even When Users Do Not Intend To!”

Including completely new vulnerabilities that didn’t exist in the base model, as shown in, “Scalable Extraction of Training Data from (Production) Language Models,” whereDeepMind researchers were able to extract several megabytes of ChatGPT’s training data for about two hundred dollars. Their attack worked on the aligned ChatGPT, but not on the base model, showing that the alignment created a new kind of vulnerability that didn’t exist before.

Error Isolation

Identifying the source becomes a complex puzzle when something goes wrong in a multi-task LLM system. Is the error coming from the data extraction logic? The validation rules? The way tasks interact? Without clear separation, debugging becomes more art than science.

This gets exponentially worse as your system grows. Each new feature adds not just its potential failure points but new ways for features to interact and fail together. You end up with a system where fixing one error might create two more simply because you can’t isolate the real problem.

This can cause a model to confuse multiple concepts with each other based on arbitrary connections that should not have been made. An example of this is Gemini infamously refusing to help an under-18 user with C++ b/c it would be unsafe (here the Safety concepts for minors caused conflicts w/ Programming Safety, even though they are semantically very different)

Figuring out why errors are popping up and what’s causing them is not a fun game.

Testing and Validation

How do you test a system where every component is intertwined? You can’t unit-test date formatting or verify SQL generation without triggering the full pipeline (which is, again, very costly).

Traditional software testing relies heavily on isolation — being able to verify each component independently. But when everything’s jammed into one LLM, you lose this ability. Your tests become more like integration tests; you’re testing everything at once and hoping for the best.

How Agents Promote Separation of Concerns and Loose Coupling

The inherently broken-down nature of well-built Agentic Systems enables

Each piece has one job and does it well. When something breaks, you know exactly where to look. When you need to make changes, you can update components without worrying about cascading effects.

This has been one of the key drivers behind IQIDIS, a Legal AI Startup I’m building. Our users are often impressed by the depth of our Legal Responses, the quality of the citations, the more nuanced reasoning, and our ability to process arbitrary context lengths at a fraction of the cost (200 USD/ month per user) of our competitors (some of whom charge thousands of dollars a month per user). The secret to this is our well-mapped out workflows and SoC. For example-

The different jurisdictions in our case law are stored in separate environments to remove the chance of our case law recommendation platform recommending a Texas-specific law to Cali-based lawyers. This has a problem that it will cut out your ability to do cross-domain analysis, which is something we fix by knowledge graphing between different components in different silos. This is very different to the mainstream approach of putting everything in the same DB and annotating the data to guide it toward the right place. In my opinion, this is an unnecessary source of error, and I want to minimize the risk of unrelated laws inducing hallucinations.

Our multi-modal, legally enriched markdown creator has it’s own silo and is used to process legal documents (which often have a mix of tables, scans, handwritten notes, images, charts, etc). This is used for document processing, but since we’re constantly enhancing it, giving it its own space allows us to test multiple approaches to always have the best possible markdown representations.

Similar to above for embeddings (we built a custom embedding setup to maximize retrieval performance). This is especially important in legal because standard embeddings can’t capture legal nuance and they don’t incorporate the temporal dimension very well.

Different kind of knowledge graphing is used to map entities to each other, enabling more nuanced document/case matter understanding (traditional Vector Embeddings struggle when two points are not directly connected, which is also why Coconut by Meta is bringing such a buzz)-

If this has you interested, send an inquiry to info@iqidis.ai. We offer every user a free trial for a week and no lock-ins, so you can try it out risk-free and leave when you want. We’re that confident that you’ll like where we are now and what we have cooking.

By splitting all the different components for IQIDIS, we get to test every little aspect- which has been incredibly helpful in maximizing the efficiency of our development. We’re able to compete in quality against startups raising millions while being bootstrapped because the design allows us to isolate errors, split test ideas, and iterate on feedback extremely effectively.

Now for the final section.

How AI Agents Help Software Robustness:

We’ve mostly discussed all the relevant ideas, so let’s jump right into case studies.

In self-driving vehicles, agent-based systems can enhance safety. Different agents could control braking, steering, and obstacle avoidance. If one agent malfunctions, the others can take over or initiate emergency procedures. This redundancy is crucial for ensuring passenger safety (there is also a much more obvious idea of having backup agents in case your primary agent fails). This extends to other cases.

This redundancy and error handling ensures the system continues to function even with partial failures.

The decomposition of a task into workflows also enables more transparency (especially if you have detailed logging) and will also contribute to the enhancement of security features (input validation,post-generation mechanisms etc)- both of which will help your system robustness.

Looking back at it, I don’t think I’ve said too much that’s super ground-breaking. And I’ll be honest- I don’t think agents are meant to be a revolutionary idea. In my head-canon, agents are an attempt to bring some of the ideas that made Software such a dominant force- control; efficiency; and scale- into the non-deterministic and expensive world of LLMs. The balance of bringing the enforcer Casemiro to the more artistic Modric and Kross. The timeless OGs that never truly lose their value- like your favorite characters first SSJ Transformation or Sweet Pea’s breathtaking defense. And in an AI environment where everyone seems obsessed with novelty and needlessly “world-changing” advancements- I think it’s important to appreciate that.

This is came out very different to what I started off writing, and is pretty different from what I usually write. Let me know what you think.

Thank you for reading and have a wonderful day.

Dev <3

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. You can share your testimonials over here.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

Check out my other articles on Medium. : https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

Thanks for this. What are your thoughts on agents being produced by existing SaaS players like Salesforce via Agentforce?

Good read. Reading this I am reminded of very similar arguments for the workflow pattern, that is systems designed as a collection of decision nodes and processing steps, linked by a state machine that governs the order of execution. The key difference to an AAI seems to be the planning step, where in a workflow, planning is a design-time task (i.e. specifying the state machine), while in an AAI planning is a run-time ad-hoc task delegated to an LLM. Thoughts?