AGI is Tech Bro Word Soup [Thoughts]

How AGI went from a sci-fi idea to the world’s biggest distraction.

Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, go over my first impressions, and their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that stand out a lot will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 100K readers weekly.

PS- We follow a “pay what you can” model, which allows you to support within your means, and support my mission of providing high-quality education to everyone for less than the price of a cup of coffee. Check out this post for more details and to find a plan that works for you.

To promote my work better, I have decided to be more active on Twitter, TikTok, and LinkedIn. Over this time, I’ve seen a lot of people push the narrative that AGI is coming soon. Notably, the CEO of SoftBank believes that “artificial intelligence that surpasses human intelligence in almost all areas, will be realised within 10 years.” The cofounder of DeepMind, Mustafa Suleyman, is even more optimistic, claiming that AGI will be here in 3 years.

Since my existence revolves around jumping on social media trends, I figured I’d drop my 2 cents on AGI. In this article I will cover 2 ideas-

Why have so many people been pushing AGI this strongly?

Why AGI will never successfully simulate natural intelligence. No matter how much training data we use, what fancy model architecture we create, or how many billions are dropped into compute- AGI is never coming.

Let’s get into it.

The economics of AGI

Why do many people push the AGI narrative? The one-word answer is money. But the nuances are very interesting. Before we proceed, I want you to understand a few things-

Companies like Google are rolling in money. They also have a near monopoly in their fields. This creates an interesting problem- they can’t really grow their core business anymore.

Investors don’t like it when companies just sit on piles of cash. They would prefer to know that these companies were putting that money into either business expansion or shareholder payouts (either dividends or stock buybacks). This is why companies have such low savings in the bank. As a tangent, the low savings are why a lot of the champions of the free market, people who preach “small government and people not relying on government handouts”, run to government bailouts when economies take a dive.

The second point (investor pressure to put cash to work) is why we saw Microsoft invest heaps into Mixer and Gaming, Facebook put money into providing internet to more people, and Google push Pixel very aggressively. In a world where a lot of the basic needs are mostly solved, a company’s only two choices are to either take market share from other people, or to manufacture completely new needs. The former is extremely expensive (and comes with a high chance of failure). The latter has a phenomenal track record (diamonds, luxury fashion, the travel industry, and other status symbols are perfect examples).

This is where AGI comes in. AGI behaves as the shiny new trinket to wave for investors, a possible vision for the future. As long as the money goes into AGI, it’s not wasted money, it’s an investment. It doesn’t matter if these investments never live up to their hype, because in a few years we will find a new trend. We can rinse and repeat this, hoping to time the market cycles so that our exits are greater than our investments. No need to worry about the long run, because in the long run we’re all dead. As such, the utility of an idea is not as important as the short-term profitability of its underlying stock (and companies don’t have to be profitable to have a profitable stock).

AI comes with 2 other benefits- it’s useful (when used right) and no one really understands it. This means that selling AI Fantasies is much easier than selling other products. Most companies will make enough money through implementing simpler AI products to cover the costs of their “Gen-AI stack” or their “AGI investments”.

Add to this the incentives for individuals to hype up AI and we have the perfect conditions for selling castles in the sky. It’s also why the industry relies so extensively on hyperbole and exaggeration. AI is billed as the “most important development of the century”, on par with the agricultural revolution and electricity. Take Suleyman (DeepMind guy). As he was making his AGI predictions, he was also promoting his book- “The Coming Wave: Technology, Power, and the Twenty-First Century’s Greatest Dilemma.” The book pushes the narrative that understanding AI/other powerful technologies is non-negotiable to prevent societal collapse or a techno-punk dystopia.

This is all very compelling, but let’s take a step back from this massive Big Tech Circle Jerk. I want to ask you a question- Is AI really that important? Sure, it can have impacts on society, but is it really the most important dilemma in our century? I’m probably not going to make many friends with this, but it’s important to think this question through. Is AI really that high on the scale of societal threats? Is AI (especially ‘powerful AI’) even that important to society? Will heavy-weight AI really define our lives as profoundly as people claim?

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

-This is a claim that I addressed in my post on actual AI risks

Suleyman’s book pushes the narrative that the containment problem is the “essential problem” of our age. Other people echo similar sentiments, arguing that institutions should step up to make AI Safety the number one priority and that “everyone should know about AI”. Sure, understanding the tradeoffs with AI safety is important, but is it more important than the following issues-

Finding ways to prevent businesses pushing negative externalities onto regular people.

Unsustainable agricultural practices completely destroying the environment.

Addressing Systemic Inequality by giving privileges to underprivileged groups, without disenfranchising members of the ‘privileged groups’ (who often aren’t the reasons for these inequalities).

I came up with these off the top of my head, but any of these is far more important than AI risks. These are all issues that carry risks on the same scale as pandemics and nuclear war. Yet these issues don’t generate a proportional level of urgency. Instead, everyone and their grandma is busy fetishizing the risks of us accidentally inventing Laplace’s Demon.

I’m personally not convinced that AI is as transformational or important as people make it out to be. It is certainly not anywhere close to being what defines the 21st century. We have too many real issues to deal with already. The AI that does make a difference will be more specialized, incapable in all use-cases but one. If you disagree, feel free to reach out.

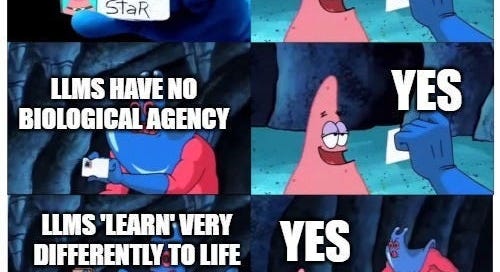

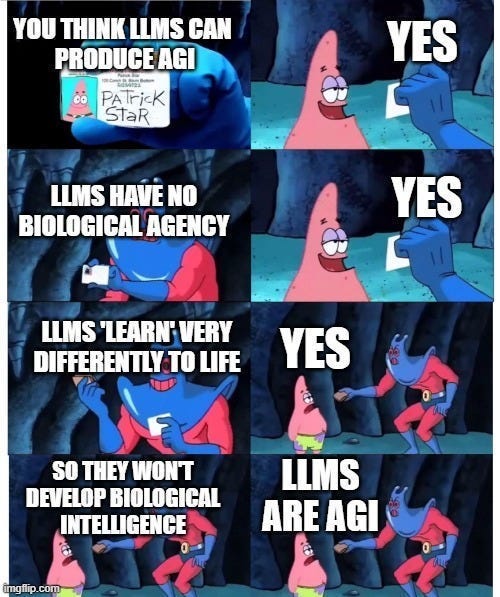

Now let’s move on to the next section- why artificial forms will never form biological intelligence. The crux of my argument can be summarized by the super academic diagram below-

AI, Intelligence and Biology

Intelligence is primarily an evolutionary adaptation. Humans developed intelligence due to evolutionary pressures. This intelligence is then refined through societal pressures- leading to different groups being good at different things. The article How Language Shapes Thought is a phenomenal read that goes over how our mother tongues shape our brains into developing different capabilities-

…people who speak languages that rely on absolute directions are remarkably good at keeping track of where they are, even in unfamiliar landscapes or inside unfamiliar buildings. They do this better than folks who live in the same environments but do not speak such languages and in fact better than scientists thought humans ever could. The requirements of their languages enforce and train this cognitive prowess.

Human intelligence was created to help us perceive and analyze our world in a human context. It doesn’t even generalize well to our monkey cousins, much less other biological entities. Our intelligence is specific to our bodies, and their needs. Without them, we would not have ‘human intelligence’. To think that humans would develop another human-like entity purely out of binary and code is very silly.

This has been backed up by research. Research comparing how humans and LLMs learn languages demonstrated that humans have vastly different learning behaviors compared to LLMs. Forget General Intelligence, our vaunted AI overlords haven’t even cracked human linguistic intelligence (which, keep in mind, changes based on your language)-

In pre-registered analyses, we present a linguistic version of the False Belief Task to both human participants and a large language model, GPT-3. Both are sensitive to others’ beliefs, but while the language model significantly exceeds chance behavior, it does not perform as well as the humans nor does it explain the full extent of their behavior — despite being exposed to more language than a human would in a lifetime. This suggests that while statistical learning from language exposure may in part explain how humans develop the ability to reason about the mental states of others, other mechanisms are also responsible.

Lambos’s Substack is a great resource if you’re interested in this idea. Dr. Bill Lambos has spent over 50 years studying systematic differences between humans/biological entities and Artificial Systems and he goes deep into it. The Substack is very new (only 1 post so far), but Dr Lambos and I have had extensive discussions about this topic-

The answer is that AI programs seem more human-like. They interact with us through language and without assistance from other people. They can respond to us in ways that imitate human communication and cognition. It is therefore natural to assume the output of generative AI implies human intelligence. The truth, however, is that AI systems are capable only of mimicking human intelligence. By their nature, they lack definitional human attributes such as sentience, agency, meaning, or the appreciation of human intention.

Let’s move on to the next point. Even if we were able to build a magic architecture that could simulate the human thought process, thereby enabling General Intelligence, there is another huge issue in AI.

Thoughts, Experience, and Data Points.

One of my favorite quotes states- “The map is not the territory”. Data is, in a manner of speaking, a map of a phenomenon. Feeding AI more/better maps will not give it a deep understanding of the world. This becomes doubly true when we realize that our interaction with Data is very different to an AI Model’s-

When AI Models interact with Data, it is their world. When we (or other intelligent life) interact with data, the data is a compression of another object/process we perceive.

Let’s elaborate on that.

Take my favorite word- chocolate milk. To you, me, or anyone else familiar with this bundle of bliss- the taste, texture, price, and other characteristics of the milk are simply facets of the milk. Our thoughts/memories about something are simply our perceptions of that thing- in an extremely compressed form. That is why you and I can experience the same event but have very different memories of it. Our brains compressed different things. Human Data is always an incomplete representation of an event/object.

AI doesn’t operate the same way. All that AI knows about chocolate milk is the data it receives- not the actual phenomenon. This means that the underlying representations it constructs are very different from the representations created by people. People use intelligence to encode a particular object/process into data (here I’m considering memories and thoughts as a kind of data). AI builds its world view (intelligence) by taking the incomplete representations of the world and stacking them. These are in many ways complementary processes.

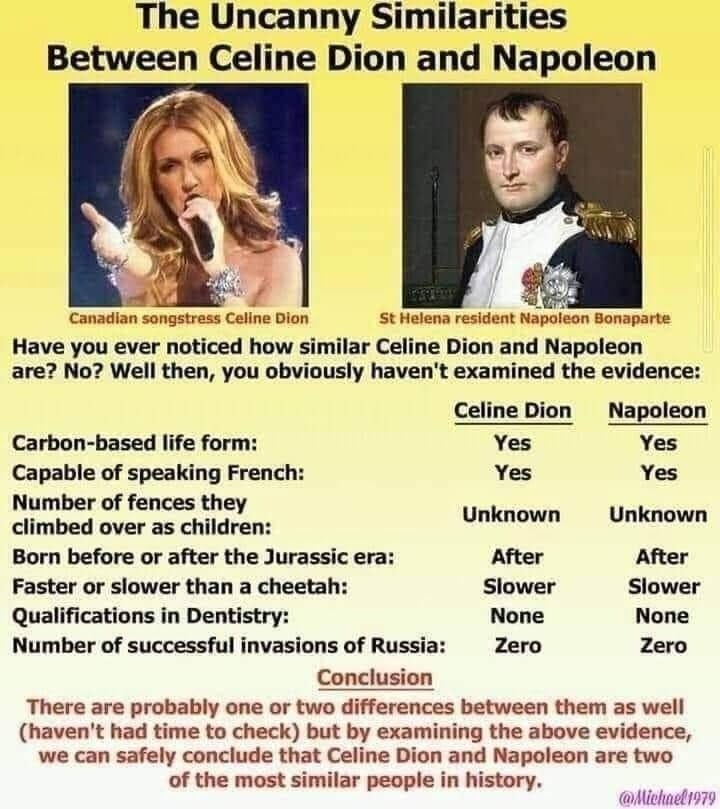

That is why you can build AI that will agree with the following conclusion, but you’ll have a tough time looking for people to do the same.

This difference is a good thing. It gives me, you, and many other people jobs (and as an added cherry on top- may eventually be used to tackle some of the world’s biggest problems). AI has been effective, not because it’s general and human-like, but precisely because it’s not. It does things we can’t, and in doing so greatly improves our capabilities.

AGI is a hollow, meaningless dream and is never going to come. Unless you are an investor who wants to give me money to develop AGI. In that case, please disregard everything I wrote. I have the key to building AGI, just need some money to see it through. Let’s be rich together.

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
