Some ways that AI is dangerous to society [Thoughts]

If you're looking to jump in and address AI risks, these would be good places to start

Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, my first impressions, and their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that stand out a lot will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

If you’d like to support my writing, please consider buying and rating my 1 Dollar Ebook on Amazon or becoming a premium subscriber to my sister publication, Tech Made Simple.

p.s. you can learn more about the paid plan here.

Before we begin: I’m around the NYC-New Jersey area. If you’d like to meet in person, please let me know.

Another month, another AI existential risk letter. At this point, we cycle through AI existential risk letters more often than McDonald’s cycles through promotional offers. This time, the Center for AI Safety (CAIS) came out with an open letter warning us that AI existential risk should be given the same importance as nuclear war and pandemics:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

This is… a claim.

Originally, I was planning to write a piece addressing this claim and explaining why the risk of extinction from AI is nowhere close to truly world-changing events that have seismic impacts on populations, societies, and the environment. But while writing it, I ran into an issue: I have nothing meaningful to say about the topic. I have already covered related topics in earlier articles.

Harping on those points would be beating a dead horse. So I thought I’d change things up and write about something actually useful. In today’s article, we will cover some of the ways that AI is actually a risk to society. Since regulators, policymakers, and researchers seem increasingly interested in AI and AI safety, this list should provide a good starting point for making tangible progress on AI safety and AI risk. While these may not be as sexy as AI magically turning into the Terminator or kickstarting the next human extinction, they are nevertheless extremely important avenues to explore. Just remember: if you decide to write an open letter on any of these topics, I expect a shoutout. Without further ado, let’s take a looksie at some of the uncool risks that AI comes with. We’ll touch on potential solutions along the way, but I’ll cover the fixes in a dedicated follow-up article, so keep your eyes peeled for that.

An AI Index analysis of the legislative records of 127 countries shows that the number of bills containing “artificial intelligence” that were passed into law grew from just 1 in 2016 to 37 in 2022. An analysis of the parliamentary records on AI in 81 countries likewise shows that mentions of AI in global legislative proceedings have increased nearly 6.5 times since 2016.

Risk 1: Overconfident Usage

Risk TL;DR- People will attribute capabilities to AI that it doesn’t have, and thus use it in ways that are not suitable (see the image above for one example).

Starting off relatively lightweight, we have a hidden risk from AI that people are slowly waking up to: the inappropriate usage of AI. The hype around GPT led people to force-fit generative AI into areas where it had no business being. Recently, a lawyer used ChatGPT in a court case, and it turned out…

The fabrications were revealed when Avianca's lawyers approached the case's judge, Kevin Castel of the Southern District of New York, saying they couldn't locate the cases cited in Mata's lawyers' brief in legal databases.

The made-up decisions included cases titled Martinez v. Delta Air Lines, Zicherman v. Korean Air Lines and Varghese v. China Southern Airlines.

"It seemed clear when we didn't recognize any of the cases in their opposition brief that something was amiss," Avianca's lawyer Bart Banino, of Condon & Forsyth, told CBS MoneyWatch. "We figured it was some sort of chatbot of some kind."

Schwartz responded in an affidavit last week, saying he had "consulted" ChatGPT to "supplement" his legal research, and that the AI tool was "a source that has revealed itself to be unreliable." He added that it was the first time he'd used ChatGPT for work and "therefore was unaware of the possibility that its content could be false."

The last line may seem like an excuse to you, but it describes a very real phenomenon. People often take the outputs of these systems at face value, which can lead to bad decisions (my brother was about to base some investment analysis on numbers Bard gave him, without verifying them). There’s a whole Wikipedia page dedicated to automation bias, which “is the propensity for humans to favor suggestions from automated decision-making systems.”

As you can see, overconfidence in automated systems is a problem that predates these models. Treating automated systems as gospel seems to be a human tendency. So what can be done about this? Increasing public awareness would be a great first step. I’m sure the lawyer came across posts discussing how ChatGPT is unreliable (I know my brother did). Unfortunately, in today’s world, there’s an overload of information going in and out of our brains. It can be easy to lose track of little details, even when those details are very important. Only by ensuring that important messages are seen on a regular basis will we be able to stop people from succumbing to these biases.

News outlet CNET said Wednesday it has issued corrections on a number of articles, including some that it described as "substantial," after using an artificial intelligence-powered tool to help write dozens of stories.

The true problem is much deeper than this. Automation bias (and many of the other problems discussed later) is compounded by the way our lives are structured: we’re always bombarded by stimuli and expected to constantly be on the move. We don’t have the mental energy to slow down and evaluate decisions. Until this is fixed, I don’t see the problem going away. That’s going to require a lot of changes and legislation to enable, but it’s going to be infinitely more useful than the clown and monkey show that is the conversation around AI regulation these days.

If you have suggestions for tackling overreliance on these AI tools, I would love to hear them. In the meantime, improving public awareness or working on some of the other problems mentioned below would do a lot to address AI risks. Alongside this, we need to invest in and reward research that explores safe and efficient usage, as opposed to the more glamorous fields of AI that take up much of our attention, both in research and in development. Putting more resources into this, and then communicating the findings, is a must if we want to stop people from blindly using GPT where RegExs (regular expressions) would suffice.
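To make the “RegEx would suffice” point concrete, here is a minimal sketch of a task that sometimes gets handed to an LLM but doesn’t need one: pulling ISO-format dates (YYYY-MM-DD) out of text. The task, the pattern, and the `extract_dates` function name are illustrative assumptions, not taken from any particular system. Python’s built-in `re` module does the job deterministically, offline, and at a tiny fraction of the compute.

```python
import re
from typing import List

# Hypothetical task: find ISO-format dates (YYYY-MM-DD) in free text.
# \b keeps the match from starting or ending in the middle of a longer number.
DATE_PATTERN = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def extract_dates(text: str) -> List[str]:
    """Return every YYYY-MM-DD string found in text, in order of appearance."""
    return [match.group(0) for match in DATE_PATTERN.finditer(text)]


if __name__ == "__main__":
    note = "Invoice issued 2023-05-30, payment due 2023-06-29."
    print(extract_dates(note))
```

Running the script prints `['2023-05-30', '2023-06-29']`. The pattern only checks the shape of a date, not whether it is a real one (it would accept `2023-13-45`); if that matters, a follow-up check with `datetime.date.fromisoformat` is still far cheaper than a model call.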

I’d like to end this section with a particularly insightful quote about why pure technologists make bad predictions about ‘revolutionary’ tech. In last week’s edition of Updates (the new series where I share interesting content with y’all), there was a Twitter thread that said the following:

Geoff made a classic error that technologists often make, which is to observe a particular behavior (identifying some subset of radiology scans correctly) against some task (identifying hemorrhage on CT head scans correctly), and then to extrapolate based on that task alone.

The reality is that reducing any job, especially a wildly complex job that requires a decade of training, to a handful of tasks is quite absurd. …

thinkers have a pattern where they are so divorced from implementation details that applications seem trivial, when in reality, the small details are exactly where value accrues.

This thread is extremely insightful. Can’t recommend it enough. This is a mistake I continue to struggle with.

It might be worth thinking about the next time some overconfident technologist/VC promises to disrupt an industry they know nothing about. To a degree, it also explains why so many people have been confidently proclaiming that GPT will replace X. Speaking of which, let’s move on to the next section.

Risk 2: Centralization of Power and Worse Working Conditions

Risk TL;DR- Without proper planning, the integration of AI will perpetuate the power imbalances between workers and upper-level management.

To truly understand this risk, we must first understand a concept integral to tech: leverage. For our purposes, leverage is simply the ability to influence people and products through your actions. A CEO has more leverage than a worker because a worker only makes decisions about a small component of a company, while the CEO makes decisions about the entire company (this is incredibly simplistic, but it gets the point across). Leverage and tech go hand in hand because tech allows you to reach many people in the same way traditionally high-leverage positions do. Think of how the creators of Flappy Bird or Fruit Ninja reached millions through their apps.

So why does this matter? Leverage is one of the main reasons used to justify why upper-level management is paid so much more than the workers under them (“the VPs make the important decisions, workers can be replaced,” etc.). CEO pay has skyrocketed 1,460% since 1978. Here’s another crazy statistic:

And yet the wealth gap between CEOs and their workers has continued to widen. In our latest analysis of the companies in our 2022 Rankings, we found that the average CEO-to-Median-Worker Pay Ratio is 235:1 as of 2020, up from 212:1 three years prior. Specifically, average CEO pay increased 31% in the last three years while median worker pay increased only 11%,

Increasing inequality coinciding with the rise of tech and digital adoption is not an accident. Combine this with loosening worker protection laws, a lack of financial education, and a lack of meaningful worker advocacy, and it’s clear that the trend toward greater inequality is not going away anytime soon. So what does this have to do with AI? Simply put, AI will make the whole thing worse.

Take ChatGPT, for example. It can do a whole host of jobs, from writing emails to generating business plans. It doesn’t do them well. But it can do them, and to many of the decision-makers in organizations, that’s all that matters. Recently, the National Eating Disorders Association fired all the human employees and volunteers who ran its famous helpline and replaced them with an AI chatbot named Tessa. I’ll let you guess how well that panned out.

The important thing to note here is that whether AI replaces people is not really a matter of AI’s competence at a particular task; it is solely tied to management’s perception of how well AI would do. These managers are often out of touch with the core requirements of the job (we have a whole article dedicated to why people who would make bad managers often get promoted). Judge for yourself how that would pan out. But that’s not all.

Think about what happens when these systems start to fall apart. Do you think the decision-makers would admit their mistakes (especially when their compensation relies on money from shareholders and investors)? Or will they keep building around a broken system, making their employees bear the consequences? To keep these AI fever dreams alive, we’ll see the familiar pattern of back-pedaling, layoffs, and shifting goalposts that has become all too common these days. Human employees end up facing the consequences of decisions made by management. Take JP Morgan’s multi-million-dollar employee tracking system, WADU. Management uses it to track mouse clicks and other ‘markers’ of developer productivity. The system makes employees feel paranoid (with descriptions like “Big Brother”), worsening the work environment. What did management do? Chalk WADU up as a multi-million-dollar failure? Lol no. They doubled down.

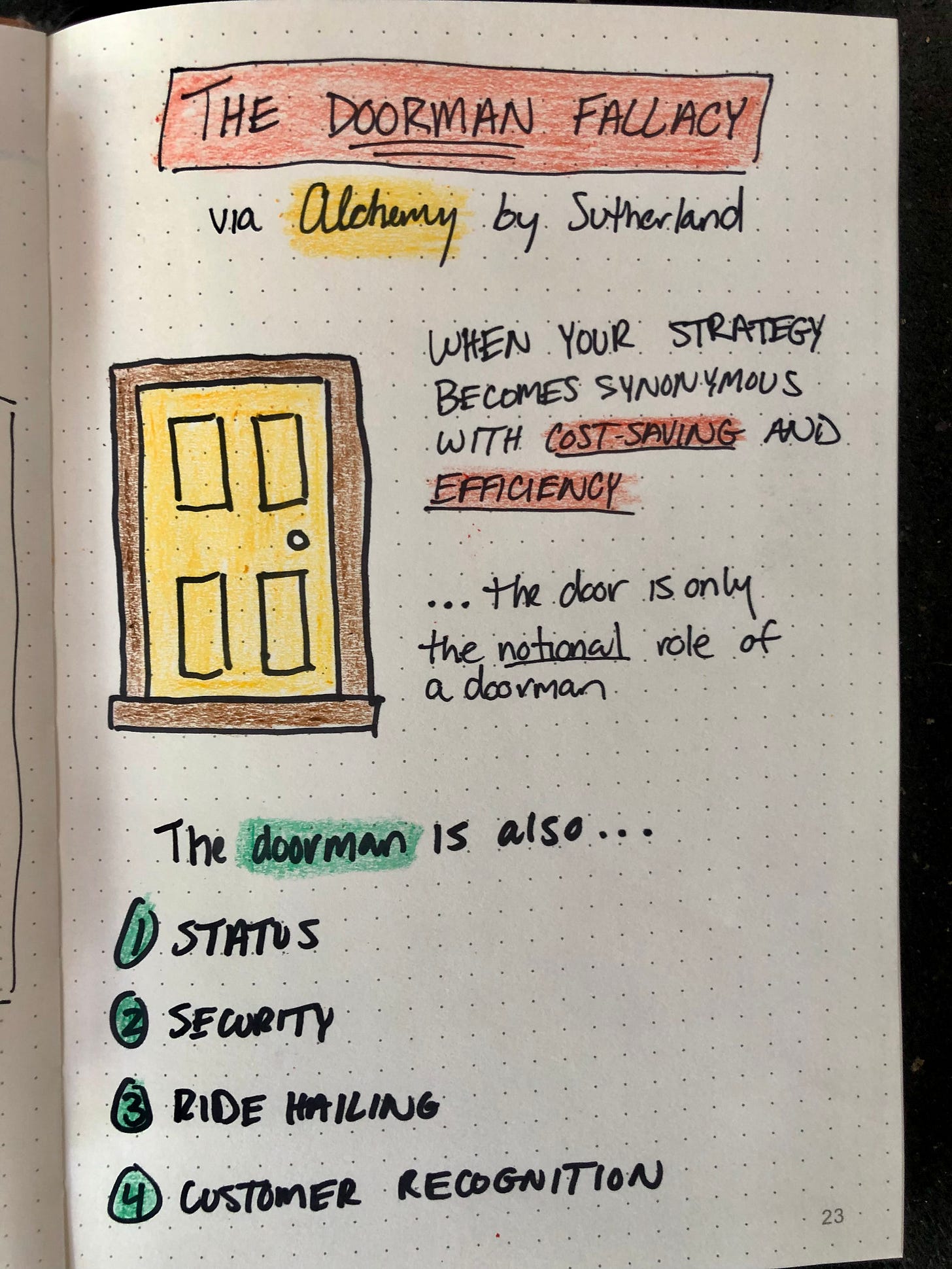

What we end up with are worse working conditions, lower-quality work, and people displaced from their positions because management didn’t understand their value. This is not the horse being replaced by a car- this is hotels replacing doormen with automatic doors (read about the Doorman Fallacy if you want to learn more)

AI will do great things for our productivity and work lives, make no mistake about it. But reckless implementation of AI will create worse working conditions for current employees and job seekers. It will further skew power dynamics, enabling an environment of exploitation.

So what can be done? Here we have a clear answer: better social safety nets. Stopping people from using AI, no matter how dumb their use might sound, is not a good idea, because it is only through experimentation and failure that we understand the true limitations of our systems. The issue is the growing power disparity between workers and their employers. By giving people stronger social safety nets, we give them more leverage against out-of-touch management and bad working conditions. It is a much better long-term solution, since increasing inequality and power imbalances lead to a less productive workforce:

So, on aggregate, as the incomes of the 1% pull away from those of the rest, people’s overall life satisfaction is lower and their day-to-day negative emotional experiences are greater in number. The effects at work alone are numerous: other research has shown that unhappy workers tend to be less productive; studies have also found that unhappy workers are more likely to take longer sick leaves, as well as to quit their jobs.

-Income Inequality Makes Whole Countries Less Happy, Harvard Business Review

If you’re really worried about AI undoing the social fabric, tackling inequality might be a worthwhile place to start.

Risk 3: Really Dumb Design

Risk TL;DR- People can be really dumb with system design. It doesn’t matter how good your AI model is if you wrap it in a system that is unsafe or inefficient. And the opacity of ML makes this very easy to do.

It should come as no surprise that humans can be comically bad at designing abstract software systems. Not too long ago, the internet paid a lot of attention to Amazon Prime Video reverting to a monolith and cutting costs by 90% for one of its functionalities. Some heralded it as the death of microservices. However, others noted something clear: the system wasn’t designed very intelligently. Take a look at a response I got to my coverage of the story:

that’s dumbest architecture I ever aware for a primevideo scale and high io, and complete architectural failure, I never thought aws devs can do such stupid thing, this is not microservices vs monolith, it’s simply bad tech choice, picking serverless is shameful for this.

we have similar video streaming serving with less than petabyte scale but, we never thought of such bad architecture, we use microservices and have more than 30+ modules, we use kubernates and we process videos but we don’t use s3 like storage in the pipeline, because it will be just dam slow never scale/feasible from cost and performance.

Non-AI architecture is not something I know much about, but given how many people I know had a similar response, there must be an element of truth to this. So it’s reasonable to assume that Prime Video’s high costs were caused by bad architectural design. And this architecture was built by Amazon engineers, who are supposedly some of the best in the space. Imagine the horror shows that ordinary folks like us pass off as software design.

Dig through the internet, and there is no shortage of stories about badly designed software. In my article, 3 Techniques to help you optimize your code bases, we covered the story of how dead code cost a company dearly:

When the New York Stock Exchange opened on the morning of 1st August 2012, Knight Capital Group’s newly updated high-speed algorithmic router incorrectly generated orders that flooded the market with trades. About 45 minutes and 400 million shares later, they succeeded in taking the system offline. When the dust settled, they had effectively lost over $10 million per minute.

AI (especially machine learning) takes the challenge of designing safe software systems to a whole new level. ML systems have many moving parts that are very fragile and can be disrupted by relatively small perturbations, both deliberate and accidental (this includes the very costly state-of-the-art models). Designing safe ML systems adds a whole additional layer of complexity on top of a task that humans have historically done poorly. (This is also why companies should invest in hiring proper ML people instead of half-heartedly transitioning some existing staff into ML: you will inevitably run into problems that require specialized ML knowledge.)
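To see why small perturbations can matter, here is a toy sketch in plain Python, assuming a simple linear classifier with made-up weights (real models, and real attacks on them, are far more complex). For a linear score w·x + b, the gradient with respect to the input is just w, so nudging every feature by a tiny amount `eps` against the sign of its weight (the idea behind the fast gradient sign method) lowers the score by `eps` times the sum of |w|. Spread across many features, a change too small to notice on any single feature can flip the decision. The function names and all numbers are hypothetical.

```python
# Toy illustration: a linear classifier flipped by a tiny, worst-case nudge.
# All weights, inputs, and the epsilon below are made-up numbers.


def score(w, x, b):
    """Linear decision score w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, x, b):
    """Return class 1 if the score is positive, else class 0."""
    return 1 if score(w, x, b) > 0 else 0


def sign(v):
    """Return 1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def sign_perturb(w, x, eps):
    """Shift each feature by eps against the sign of its weight.

    For a linear model the gradient of the score w.r.t. x is w, so this
    is the fast-gradient-sign idea used to push the score down.
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


if __name__ == "__main__":
    n = 100
    w = [0.5] * n
    b = -24.9
    x = [0.5] * n  # score = 25.0 - 24.9 = 0.1, so class 1
    x_adv = sign_perturb(w, x, eps=0.01)  # every feature moves by only 0.01
    print(predict(w, x, b), predict(w, x_adv, b))
```

Here each of the 100 features moves by only 0.01, yet the score drops by 0.01 × 50 = 0.5, taking it from 0.1 to roughly -0.4, so the script prints `1 0`.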

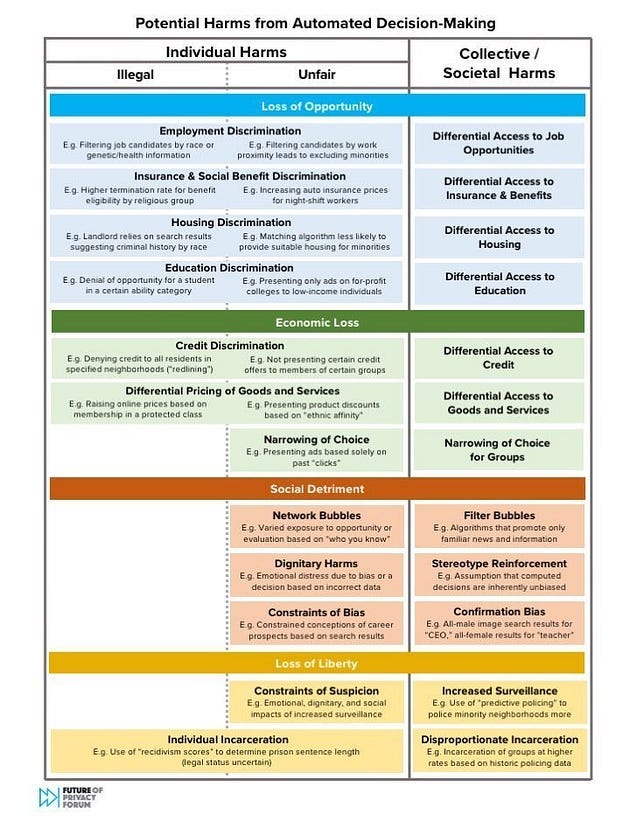

Regular readers will recognize the following chart with an overview of some of the risks posed by AI (I refer to it a lot lol, but I haven’t found anything better):

Many of these risks come into play because we don’t put a lot of thought into designing autonomous agents. We take many human abilities for granted and expect them to carry over to autonomous agents during training. We also have an exceptional talent for considering only our own viewpoint and forgetting about everything outside our idea of normal. This makes us famously bad at identifying biases in our datasets. All in all, this is a risk that already exists and is already causing hell in society. Take a look at the following statement from the MIT Technology Review write-up “Bias isn’t the only problem with credit scores—and no, AI can’t help”:

We already knew that biased data and biased algorithms skew automated decision-making in a way that disadvantages low-income and minority groups. For example, software used by banks to predict whether or not someone will pay back credit card debt typically favors wealthier white applicants.

Many of these biases are not explicit rules coded into the AI (“Black people won’t pay back loans, so reduce their score by 40%”) but associations that an AI model picks up implicitly from the features in its training data. Those features invariably encode the stories of systemic oppression of minorities and other vulnerable groups. As a tangent, this is why collecting a diverse set of data points and training with noise are so powerful: they allow you to overcome the blacks and whites of data collection systems and introduce your AI to shades of grey.
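Training with noise can be as simple as augmenting the dataset with jittered copies of each example. The sketch below is a minimal, hypothetical version (`augment_with_noise`, the choice of Gaussian noise, and the default `sigma` are all illustrative assumptions): it keeps every original (features, label) pair and adds noisy copies with the labels unchanged. It is meant to sit alongside diverse data collection, not replace it.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], int]


def augment_with_noise(
    samples: Sequence[Tuple[Sequence[float], int]],
    copies: int = 3,
    sigma: float = 0.05,
    seed: int = 0,
) -> List[Sample]:
    """Return the original samples plus `copies` noisy versions of each.

    Every feature in a noisy copy gets independent Gaussian noise with
    standard deviation `sigma`; labels are left unchanged.
    """
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    augmented: List[Sample] = [(list(x), y) for x, y in samples]
    for _ in range(copies):
        for x, y in samples:
            noisy = [v + rng.gauss(0.0, sigma) for v in x]
            augmented.append((noisy, y))
    return augmented


if __name__ == "__main__":
    data = [([1.0, 2.0], 0), ([3.0, 4.0], 1)]
    for features, label in augment_with_noise(data, copies=2):
        print(label, [round(v, 3) for v in features])
```

With `copies=2`, the two original examples become six: the originals first, then noisy copies that sit close to their source points and keep the same labels.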

Ultimately, badly designed systems and AI go together all too well, since AI systems tend to be very opaque, and opacity is a great way to build systems with limitations you don’t understand (this is why open source is critical for AI testing). Luckily, the fix here is clearer: investment in AI robustness. Typically, much of the attention has gone to AI model performance, with researchers going to painstaking lengths to squeeze raw performance out of benchmarks. We need to reward research into AI safety and create more checks and balances to ensure people meet those standards. This is a fairly complex topic, so I will elaborate on it in another article. One idea I have been exploring is audits of AI systems by independent third parties, who could specifically check for Dimensions and Simplicity.

By this point, I’m sure y’all have gotten sick of me. So we’ll close the article on a risk that is relatively straightforward:

Risk 4: Climate Stress

Risk TL;DR- The rush toward using LLMs for every trivial task is going to put a large stress on the environment.

The jack-of-all-trades nature of the GPT models opened up a new avenue for many people- using fine-tuned models for every small task. I’ve seen a GPT for everything, including (but not limited to): writing essays, NER (named entity recognition), improving your online dating, Q&A about texts, creating travel plans, and even replacing a therapist. People believe that GPT + the magic of finetuning will work in any use case. Words like ‘Foundation Models’ have become buzzwords of the hype.

Leaving aside the fact that these models (even fine-tuned ones) aren’t very good, this leads to another issue. LLMs are massive (even the small ones) and require a lot of energy to run. Training and then serving these models at scale involves many direct and indirect costs, which will put significant pressure on our energy grids.

This is an issue that deep learning has been grappling with for a while now. Models have been getting larger and larger, with more elaborate training protocols, for diminishing returns. ML research has overlooked simple techniques that work well enough, focusing instead on beefing up models in many ways.

In earlier years, people were improving significantly on the past year’s state of the art or best performance. This year across the majority of the benchmarks, we saw minimal progress to the point we decided not to include some in the report. For example, the best image classification system on ImageNet in 2021 had an accuracy rate of 91%; 2022 saw only a 0.1 percentage point improvement.

However, this SOTA obsession was slower to reach industry because of the challenges of scale. GPT has changed that. Now companies can’t wait to be ‘cutting edge’, no matter the cost. This will drive up demand for these massive models, and with it their energy consumption and emissions.

How do we address this? We will go over it in a follow-up article. However, the components are the same as already discussed: awareness and education around simple methods; incentivizing research into simple methods (both monetary and prestige-related); and improving our social safety nets and the pace of our lives, so that people can take a step back from a domain filled with misinformation and hype.

That is it for this piece. I appreciate your time. As always, if you’re interested in reaching out to me or checking out my other work, links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium. : https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
