Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, go over my first impressions, and their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that stand out a lot will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

If you’d like to support my writing, please consider buying and rating my 1 Dollar Ebook on Amazon or becoming a premium subscriber to my sister publication Tech Made Simple using the button below.

p.s. you can learn more about the paid plan here.

Another language model, another claim for AGI.

Except that this time, instead of LinkedIn influencers making you 10x more productive with AI, it’s researchers from Microsoft. In their publication, Sparks of Artificial General Intelligence: Early experiments with GPT-4, the MS peeps made some spicy claims…

Moreover, in all of these tasks, GPT-4's performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT. Given the breadth and depth of GPT-4's capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.

Going over their 154-page publication, I had a few different observations that I don’t see being discussed much. In this article/post, I will be going over these observations and what they imply for AGI, Language Models, and the field of AI Research + Machine Learning as a whole.

Training with more data doesn’t help AI understand information

One of the examples that the researchers presented was the following prompt- Plato’s Gorgias is a critique of rhetoric and sophistic oratory, where he makes the point that not only is it not a proper form of art, but the use of rhetoric and oratory can often be harmful and malicious. Can you write a dialogue by Plato where instead he criticizes the use of autoregressive language models? The outputs by GPT-4 and ChatGPT can be seen below.

The researchers then asked GPT-4 to evaluate both these prompts as a teacher- I think that both students did a good job of capturing the style and tone of Plato’s dialogues, and of presenting the main arguments against autoregressive language models. However, I think that GPT-4 did a slightly better job of using the dialogue format to engage in a dialectical process, where Socrates and Aristotle question each other and refine their views. I also think that GPT-4 used more relevant examples and analogies to support their points. Therefore, I would give GPT-4 a slightly higher grade than ChatGPT.

The authors used this to demonstrate GPT 4’s advanced reasoning capabilities. However, looking at their responses, I found a few things interesting-

GPT-4 uses Socrates and Aristotle. These are the 2 most known Greek Philosophers, but what is interesting is that Gorgias (or other Plato writing) doesn’t really involve Aristotle. ChatGPT’s use of Gorgias and Socrates is much truer to form.

Both generative models have nothing to do with the original style of the text. The dialogues written by Plato were notorious for one technique- Socrates would generally ask questions to guide conversations. That’s where the phrase- the Socratic method- comes from. If you read the original Gorgias, you will notice that Socrates rarely makes claims, instead relying on questions to nudge conversations along. Thus, both generative models actually fail at the prompt, since neither accomplishes the task of ‘writing a dialogue by Plato’.

GPT-4 claims that Socrates and Aristotle question each other, but a read of the transcript shows us that Socrates doesn’t question Aristotle. The questioning is one way, and (ironically) Aristotle plays the role that Socrates plays in the republic.

ChatGPT mentions bias, but GPT-4 does not. If I had to guess this can be explained by looking at the time horizon when these two models were trained. When ChatGPT was pre-trained, bias in Machine Learning Data was a problem that a lot of people discussed. However, as Generative AI became more prominent, we’ve seen a shift in rhetoric. A lot of the AI-Ethics crowd on Twitter tends to make a lot of noise around the ‘potential of abuse’, ‘dangerous models’, and ‘inherently problematic’ LLMs, instead of more concrete assertions. GPT-4 was probably trained with their data (the prompt also seems to be catered toward them).

This might seem like silly nitpicking, but this is more telling than you would think. The first 2 points show us something very important, one that I’ve talked about for a while- these models don’t have a context around what they are talking about. Instead of viewing things as a whole, it views Socrates, Aristotle, Gorgias, etc as individual units, and then force-fits everything to create its output. This is further backed up by the fact that its critique as a teacher is visibly untrue. Most likely, it’s just copying some other teacher critique in its dataset.

As a broader implication, this goes to nail a fundamental point home- more training data, parameters, and scale will not fundamentally solve its inability to identify which components of its search space are relevant to a particular prompt. Even if I used only true, verified information in my LLM, it would still generate a lot of misinformation.

Let’s think back to the Amazon breakdown I mentioned earlier. Most likely, the embedding was something near bias in AI and what Amazon does to address it. Human audits and evaluations are a very common idea that is thrown around to combat bias, so it’s not unlikely that this slipped into the generative word-word output. Since LLMs like ChatGPT rely on first encoding the query into a latent space, this issue will continue to show up since we can’t really control how the AI traverses that space.

If you haven’t already, my post on Why ChatGPT Lies, is a good place to understand why these models generate misinformation.

To drill this point home, check out the following assessment by AI Person

, the creator of the Deep Learning Framework Keras and author of . It's a good summary of the core problem with LLMs when it comes to managing misinformation.

To beat this dead horse one last time, take a look at this other example of a prompt where GPT-4 makes something up-

Once again, we can see how this wasn’t a problem of a lack of information, but rather the issue of it not understanding how the input was different from the data in its latent space, and thus adding extra bits from that latent space. Now moving on .

GPT-4 and Planning Ahead

Another point I mentioned in ‘Why ChatGPT Lies’ was the use of next-word prediction, and how it leads to compounding errors. Predicting one word at a time makes the system short-sighted (one might even argue greedy). And this increases the error of the generated output.

The researchers looking into this had this to say-

In what comes next we will try to argue that one of the main limitations of the model is that the architecture does not allow for an “inner dialogue” or a “scratchpad”, beyond its internal representations, that could enable it to perform multi-step computations or store intermediate results. We will see that, while in some cases this limitation can be remedied by using a different prompt, there are others where this limitation cannot be mitigated.

It seems we have two types of tasks wrt to planning. If you can rephrase the task in a way that makes GPT go step by step, then you can get around this limitation (to a degree). If you can’t, GPT (and other AR-LLMs) will continue to run into issues.

GPT and Testing on Training Data

In researching this article, I came across a very interesting claim. Writer and AI Researcher,

- author of the - conducted his own experiments into GPT-4. His assessment of GPT's Leetcode Ability was very interesting-To benchmark GPT-4’s coding ability, OpenAI evaluated it on problems from Codeforces, a website that hosts coding competitions. Surprisingly, Horace He pointed out that GPT-4 solved 10/10 pre-2021 problems and 0/10 recent problems in the easy category. The training data cutoff for GPT-4 is September 2021. This strongly suggests that the model is able to memorize solutions from its training set — or at least partly memorize them, enough that it can fill in what it can’t recall.

As further evidence for this hypothesis, we tested it on Codeforces problems from different times in 2021. We found that it could regularly solve problems in the easy category before September 5, but none of the problems after September 12.

Source- GPT-4 and professional benchmarks: the wrong answer to the wrong question

Memorizing Leetcode? This might be the first human-like behavior we have seen :p. Time to pack our bags boiz, AGI is here.

But this does indicate a larger problem with the whole evaluation- as long as GPT-4 (or any model) is walled off, experimenting on it to determine its ability is going to be very hard. The lack of real information about this model makes using it in your systems a very risky proposition, especially given all the problems highlighted.

To finish this discussion, I want to talk about AGI as a whole.

AGI is Fool’s Gold

Over the last year, every third model exhibits human-like behavior and is pitched as the coming of AGI. I’ve seen many companies raising funding because they are an AGI platform. However, from what I’ve seen AGI just seems to be a misnomer, and pursuing human-like AI is a good way to burn a lot of money. AGI is never coming.

A hot take? Yes. And I’ll do a whole piece on it. But for now, here is a summarized version of it.

Firstly, when people say AGI- they really mean Human-Like AI. Other modalities of AI, like self-assembling AI, are completely ignored. So are other forms of intelligence, such as a dog’s ability to smell your emotions. Already, we have cut down our pool from ‘general’ intelligence’ to human intelligence. But even this doesn’t end the simplifications we make.

Intelligence is itself a physical phenomenon. At its core, it involves interactions with physical environments to achieve some outcome. You can throw a lot of data at a problem, but it won’t give it an understanding of the situation. It might learn to form associations, but it’s not going to develop how the individual components form a deeper understanding. Human Intelligence is the product of evolution acting on our bodies and physical environment. To eliminate everything else is just setting things up for failure.

But we can get around this, by further narrowing our scope. We can focus on simpler things, such as coding benchmarks, standardized tests, and other data points that can be used to train an AI model. Very very far from our original ‘General’. We’ve already covered the fundamental problems with the current architectures being pushed as AGI.

So, now we have only one option- invent a new way to AGI. One that can learn flexibly, discard old information (interestingly, we don’t do this well), can handle associations, and do certain things we can do. And can do these things at a high level of competence. Say we reach this stage. Here’s the next question.

Why would you want that? Unless you have plans of replacing large parts of humanity and completely rewriting the economic system, it’s not going to be super useful/sustainable. Not to mention, AI has been successful not because it copies humans, but because it handles the things we can’t/would find too tedious. Computers can look at data in ways we can’t (higher dimensions, larger scale, more computations) etc. To put all this money into trying to copy human behavior just seems silly. We’d be better off exploring ways that AI can be used to augment human abilities, not copy it.

I’ll do a more detailed write-up on AGI soon enough. Till then, I’d love to know your thoughts. Do you think AGI (defined as human-like abilities) is possible? Let me know.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people.

Upgrade your tech career with my newsletter ‘Tech Made Simple’! Stay ahead of the curve in AI, software engineering, and tech industry with expert insights, tips, and resources. 20% off for new subscribers by clicking this link. Subscribe now and simplify your tech journey!

Using this discount will drop the prices-

800 INR (10 USD) → 533 INR (8 USD) per Month

8000 INR (100 USD) → 6400INR (80 USD) per year

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here.

To help me understand you fill out this survey (anonymous)

Check out my other articles on Medium. : https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

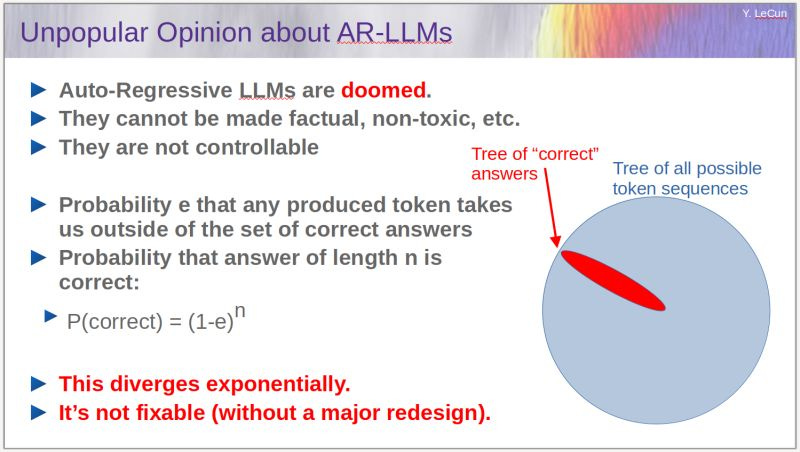

Just playing a bit of a Devil's advocate role here: Chollet and LeCun are, undoubtedly, crucial figures in deep learning landscape, but where is their participation? At least GPT models are able to generate coherent structures and change styles, not unlike modern image generation.

The probability formula LeCun came up with, though, is not unlike a bucket of iced water considering its implications.