Cloud Infrastructure for AI: What You Actually Need to Know [Guest]

How compute, storage, and networking shape your AI projects—and why understanding infrastructure can give you a competitive edge

Hey, it’s Devansh 👋👋

Our chocolate milk cult has a lot of experts and prominent figures doing cool things. In the series Guests, I will invite these experts to come in and share their insights on various topics that they have studied/worked on. If you or someone you know has interesting ideas in Tech, AI, or any other fields, I would love to have you come on here and share your knowledge.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 100K readers weekly. Many companies have a learning budget that you can expense this newsletter to. You can use the following for an email template to request reimbursement for your subscription.

PS- We follow a “pay what you can” model, which allows you to support within your means, and support my mission of providing high-quality technical education to everyone for less than the price of a cup of coffee. Check out this post for more details and to find a plan that works for you.

Barak Epstein has been a senior technology leader for over a decade. He has led efforts in Cloud Computing and Infrastructure at Dell and now at Google. Currently, he is leading efforts to apply Parallel Filesystems to AI and HPC workloads on Google Cloud. Barak and I have had several interesting conversations about infrastructure, strategy, and how investments in large-scale computing can introduce new paradigms for next-gen AI (instead of just enabling more of the same, which has been the current approach). Some of you may remember his excellent guest post last year, where he argued that we need to go beyond surface-level discussions of AI and think about how advances in AI capabilities will redefine our relationship with it (and even our sense of our own identity and abilities).

Barak is back with another classic. In this article, he bridges the gap between cloud infrastructure specialists, AI professionals, and curious generalists. He argues that an understanding of cloud infrastructure—at least at a high-level—is becoming essential foundational knowledge. Here's what Barak covers:

Compute: The Foundation of AI: Understanding the critical role compute plays in AI, with a focus on how GPUs, CPUs, TPUs, DPUs, FPGAs, and IPUs differ, their respective strengths, and why these differences matter.

Key Trends in Compute Hardware: Discussing recent developments, especially GPU architectures such as Nvidia’s Blackwell, Google's Trillium TPU, and evolving CPU innovations that are increasingly relevant to AI workloads.

Storage: Fueling AI with Data: Highlighting storage's crucial yet often overlooked role in AI performance, including performance bottlenecks, optimization strategies, and how advanced storage techniques shape AI system design.

Networking: The Backbone of AI: Exploring networking as the critical connector in AI infrastructure, with insights into inter-GPU communication technologies, GPU-to-storage communications, and advanced networking solutions such as RDMA, NVLink, and DPUs.

AI Frameworks: Bridging Models and Infrastructure: Evaluating leading frameworks—JAX, PyTorch, TensorFlow—and how their unique characteristics interact with infrastructure to influence model efficiency and performance.

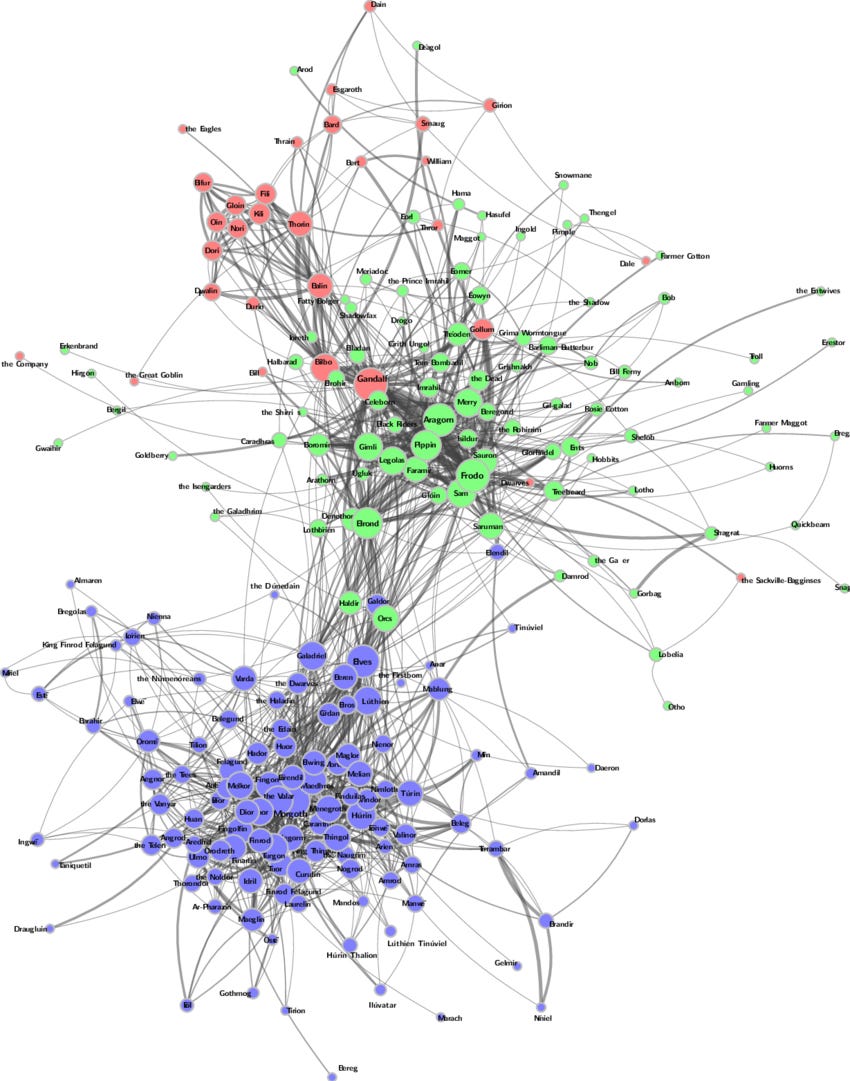

Case Study: Edge-GNN Use Case: A look at Edge-based Graph Neural Networks, illustrating how specific infrastructure choices (compute, storage, networking) directly impact AI capabilities and decision-making.

I think you’ll enjoy this thoughtful and practical guide to understanding cloud infrastructure’s impact on AI, now and in the future. If you want more of such insightful writing, make sure you check out Barak’s excellent Tao of AI newsletter here.

How to read this article:

For AI practitioners: To optimize your AI projects, you need to be able to speak the same language as your infrastructure specialists. This guide will equip you with the knowledge to understand the key components of cloud infrastructure, make informed decisions about your AI workloads, and collaborate effectively with your infrastructure team.

For Generalist Readers: Copy-paste this article into an LLM Chat Tool and ask:

“Please summarize this article. Focus on 2-3 takeaways regarding GPU development that will help me keep an eye on the development and relevance of this critical piece of computing infrastructure that I know little about. Add an additional takeaway or two regarding the rest of the content in the article. Please make some suggestions about how and where these trends might impact my field of <insert your field here.>”

For any reader: Copy-paste this article into an LLM Chat Tool and ask:

“Tell me a short story about the content of the article. Help me build a mental map of the key takeaways so that I can start to follow trends in these infrastructure components.”

[Optionally] “Help me understand how these trends relate to my field of <insert field here>.”

I would argue that some knowledge of technical infrastructure, even at a high-level, should be part of the “liberal arts education” of the future or, if you prefer, part of every AI engineer’s, data scientist’s, or even average educated person’s, foundational knowledge. The cloud focus here is, in part, an artifact of where I spend my time. But I also think that it’s reasonable to focus on cloud infrastructure as a proxy for the generalized AI infrastructure of the future, since the average Market Newcomer is likely to use hyperscaler capabilities and/or tools, for the easily foreseeable future.

We’ll go through each critical domain for cloud computing (compute, storage, networking) and highlight the key investment areas and/or development bottlenecks that you should track (even lightly) over the next few years. We'll also discuss how these areas work together and how they are brought together by AI frameworks.

Compute: The Foundation of AI

Compute is obviously where the action is. The shift in relative value between CPUs and GPUs has been perhaps the key trend of the past half-decade or more, and it is likely to generate further shifts in market structure and developer focus. Compute provides the raw processing power for AI workloads, but its effectiveness is heavily dependent on the efficiency of storage and networking, as we'll see in later sections.

Staying alert in this market means (at least) paying attention to the latest generation of GPUs and their principal characteristics (e.g., memory size, memory bandwidth, core count), even if only at a high level.

We’ll break the Compute section into two. If you’re already familiar with the basic types of processors and their relative strengths and weaknesses, feel free to skip to Compute, Part II.

Compute, Part I: Processor Type Overview

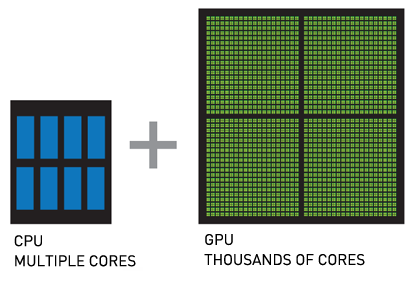

CPUs excel at sequential processing with a small number of powerful cores optimized for diverse instructions and low-latency memory access, making them ideal for general-purpose tasks.

GPUs, with thousands of simpler cores and high memory bandwidth, are designed for parallel processing, accelerating computationally intensive tasks like AI training and graphics rendering.

TPUs, custom-designed by Google, are purpose-built for the matrix multiplications at the heart of neural networks, sacrificing general-purpose flexibility for extreme AI-specific performance.

DPUs specialize in data management, accelerating network operations, storage access, and security tasks to optimize data flow for large AI systems.

FPGAs (Field-Programmable Gate Arrays) provide a flexible hardware fabric that can be customized for specific workloads, bridging the gap between general-purpose processors and specialized ASICs (Application-Specific Integrated Circuits).

IPUs (Intelligence Processing Units) are optimized for graph processing and sparse data structures, which makes them a good fit for analyzing interconnected data in applications like social networks and recommendations.

These architectural differences dictate each processor's strengths. CPUs’ few, complex cores handle diverse workloads sequentially. GPUs' high core count and high memory bandwidth enable parallel processing for compute-heavy tasks. TPUs' specialized design prioritizes matrix multiplication speed for AI. DPUs streamline data movement with specialized hardware. FPGAs' reconfigurable logic allows them to be tailored for specific algorithms, offering a balance of performance and flexibility. IPUs, still niche, efficiently support the analysis of complex relationships within graph data with their unique architecture.

Compute, Part II: Key Trends, by Chip Type

GPU (Graphics Processing Unit):

GPUs are typically evaluated, at the top level, according to their peak throughput in floating-point operations per second (FLOPS), the amount of memory and memory bandwidth they offer, and their interconnect capabilities (for GPU-to-GPU communication; more on that below).
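To see why both FLOPS and memory bandwidth matter, here is a minimal "roofline" sketch. It estimates whether a matrix multiplication is limited by compute or by memory traffic. The accelerator numbers (1000 TFLOPS peak, 8 TB/s bandwidth) are hypothetical placeholders, not the specs of any particular chip, and the byte count assumes each matrix is moved exactly once.

```python
def attainable_tflops(peak_tflops, mem_bandwidth_tbs, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic."""
    return min(peak_tflops, mem_bandwidth_tbs * flops_per_byte)


def matmul_intensity(m, n, k, bytes_per_element=2):
    """FLOPs per byte for an (m x k) @ (k x n) matmul.

    Assumes each matrix is read or written exactly once (an idealization).
    """
    flops = 2 * m * n * k  # one multiply and one add per inner-product term
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


# Hypothetical accelerator, for illustration only.
PEAK_TFLOPS = 1000.0
BANDWIDTH_TBS = 8.0

# A matrix-vector product (typical of single-request inference) vs. a large matmul.
small = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TBS, matmul_intensity(1, 4096, 4096))
large = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TBS, matmul_intensity(4096, 4096, 4096))
```

Under these assumptions the matrix-vector case is memory-bound and reaches only a small fraction of peak, while the large matmul is compute-bound. That is one reason memory bandwidth gets as much attention as raw FLOPS in each new GPU generation.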

Nvidia is, of course, the market leader here. Their newest productized architecture, Blackwell, was officially announced in 2024 and includes (a) dual reticle-sized dies (two pieces of silicon connected to act as a single functional unit), (b) twice the memory bandwidth of the Hopper Architecture (8TB/s vs 4TB/s), and (c) an enhanced Transformer Engine. The Transformer Engine, introduced with the Hopper Architecture in 2022, lowers numerical precision where doing so costs little in model quality. It builds on another key feature of GPU architecture: support for mixed-precision calculations, which came with the Volta architecture, first announced in 2013 and productized in 2017.
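A small sketch can show why "mixed" precision matters, as opposed to simply running everything at low precision. The code below emulates half-precision rounding with Python's `struct` module and compares a dot product accumulated in half precision against one that keeps low-precision inputs but a higher-precision accumulator. This is only an illustration of the general idea. It is not how the Transformer Engine or any GPU implements it, and the function names are my own.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def dot_fp16_accumulate(a, b):
    """Inputs, products, and the running sum are all rounded to fp16."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)
    return acc


def dot_mixed(a, b):
    """fp16 inputs, but the running sum is kept in higher precision."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)
    return acc


a = [0.01] * 10_000
b = [1.0] * 10_000
low = dot_fp16_accumulate(a, b)   # stalls once the sum outgrows fp16's resolution
mixed = dot_mixed(a, b)           # stays close to the true value of about 100
```

Once the running sum gets large enough, each new 0.01 is smaller than half the gap between adjacent half-precision values, so the low-precision accumulator stops growing. Keeping the accumulator in higher precision avoids this while still storing and moving the inputs cheaply.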

Several other companies make GPUs, including AMD, Intel, and Qualcomm. This article addresses some expected future trends in GPU development, across the broader field.

Schematic View (Source)

TPU (Tensor Processing Unit): TPUs were developed specifically for Machine Learning/AI. They are built around a Matrix Multiplication Unit (MXU), designed to accelerate the matrix multiplications that are critical for neural networks. They also use a ‘systolic array’ architecture, in which data flows through the chip in ‘waves’, reflecting the calculation pattern required to efficiently support the targeted operations (but not necessarily efficient for other operations). Finally, they are generally built to work with reduced precision calculations.
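The "wave" pattern of a systolic array can be sketched in a few lines. The toy model below treats each output cell as a processing element (PE) that performs one multiply-accumulate per cycle, with operands arriving one cycle later for each step away from the top-left corner. It models the timing of the wavefront, not the actual wiring or the design of Google's MXU, and the function name is hypothetical.

```python
def systolic_matmul(A, B):
    """Simulate an output-stationary systolic array computing A @ B.

    PE (i, j) accumulates C[i][j]. Row i of A is delayed by i cycles and
    column j of B by j cycles, so the k-th operand pair reaches PE (i, j)
    at cycle t = i + j + k.
    """
    n, k, m = len(A), len(B), len(B[0])
    C = [[0] * m for _ in range(n)]
    total_cycles = n + m + k - 2
    for t in range(total_cycles):
        for i in range(n):
            for j in range(m):
                step = t - i - j  # which operand pair arrives at this PE now
                if 0 <= step < k:
                    C[i][j] += A[i][step] * B[step][j]
    return C, total_cycles


C, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
# C == [[19, 22], [43, 50]], computed in 4 cycles
```

Every PE does the same simple operation on data handed over by its neighbors, which is why the design is so efficient for dense matrix multiplication and less so for irregular workloads.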

Trillium is the 6th generation TPU and was introduced in 2024. It doubles both the high-bandwidth memory capacity and bandwidth of the previous generation. It also doubles the interconnect bandwidth (Interchip Interconnect) for communication between chips in a TPU pod, and it is 67% more energy efficient than the previous generation. The interconnect gains are leveraged to build larger “TPU pods”, where hundreds of TPUs are connected to work together, similar to the GPU trends described above.

Finally, improvements were made in the Sparse Cores, which have a different architecture than other TPU cores, and are focused on making the processing of model embeddings more efficient. Research suggests that these cores benefit from different data flow patterns than the systolic processing described above. Other specialized data structures and operations help to accelerate the calculation of sparse matrices typical for embeddings.
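To see why embeddings benefit from a different data flow than dense matrix multiplication, compare two ways of fetching one embedding vector. Mathematically, a lookup is a one-hot vector multiplied by the embedding table, but almost all of that work multiplies by zero. The sketch below is a plain-Python illustration with made-up table values. It does not describe how Sparse Cores are actually implemented.

```python
def lookup_via_one_hot_matmul(token_id, table):
    """Dense formulation: one-hot vector times the whole embedding table."""
    vocab_size, dim = len(table), len(table[0])
    one_hot = [1.0 if i == token_id else 0.0 for i in range(vocab_size)]
    multiply_adds = vocab_size * dim
    vector = [sum(one_hot[i] * table[i][d] for i in range(vocab_size)) for d in range(dim)]
    return vector, multiply_adds


def lookup_via_gather(token_id, table):
    """Sparse formulation: read the one row that matters."""
    return list(table[token_id]), 0


# Hypothetical 4-token vocabulary with 3-dimensional embeddings.
table = [
    [0.5, 0.25, 0.0],
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
    [7.0, 8.0, 9.0],
]
dense_vec, dense_ops = lookup_via_one_hot_matmul(2, table)
sparse_vec, sparse_ops = lookup_via_gather(2, table)
```

Both calls return the same vector, but the dense version does work proportional to the whole vocabulary while the gather touches a single row. With real vocabularies in the hundreds of thousands or millions of rows, the access pattern becomes a memory-lookup problem more than an arithmetic one.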

CPU (Central Processing Unit):

GPUs and specialized accelerators like TPUs rightfully take the spotlight for AI, but CPUs are also undergoing significant innovation that's impacting the AI landscape. Here are some key trends:

1. Enhanced AI Instructions:

Specialized instructions: Modern CPUs are incorporating specialized instructions to accelerate common AI operations like matrix multiplication, convolution, and other mathematical functions. These instructions can significantly improve the performance of AI inference on CPUs.

Example: Intel's latest Xeon CPUs include Advanced Matrix Extensions (AMX) that accelerate matrix operations, to support deep learning.

2. Optimized Memory and Caches:

Larger caches: CPUs are being designed with larger (data) caches to improve data access for AI workloads. This reduces the need to fetch data from main memory, which can be a bottleneck for AI performance.

High-bandwidth memory: Some CPUs support high-bandwidth memory (HBM), which offers significantly faster data transfer rates compared to traditional memory. This is crucial for AI tasks that require large amounts of data to be processed quickly.

3. Software Optimization:

AI-optimized libraries: Software libraries are being developed to optimize AI performance on CPUs. These libraries leverage the specialized instructions and hardware capabilities of CPUs to accelerate AI workloads.

Examples: Intel's oneDNN library optimizes deep learning performance on Intel CPUs. Ampere’s AI-O is a “software library to help companies shift code from GPUs to CPUs”.

4. Heterogeneous Computing:

CPU-GPU collaboration: CPUs are increasingly being used in conjunction with GPUs and other accelerators in heterogeneous computing environments. The CPU handles tasks like data preprocessing and control flow, while the GPU or accelerator focuses on computationally intensive tasks.

Unified memory: This technology allows CPUs and GPUs to share the same memory space, reducing data transfer overhead and simplifying programming.

5. Edge Computing:

Efficient inference at the edge: CPUs are well-suited for AI inference at the edge due to their lower power consumption and versatility. This is driving innovation in CPUs for edge devices like smartphones, IoT devices, and embedded systems.

Example: Arm's CPUs are widely used in edge devices and are being optimized for running smaller AI models efficiently.

DPU (Data Processing Unit): Data Processing Units are emerging as essential components for managing the massive datasets used in modern AI applications. They offload data-related tasks, including network traffic management, storage optimization, and security functions, from the CPUs and GPUs. This frees up the primary processors to focus on the core AI computations, leading to improved overall system performance, particularly in large-scale AI deployments where data bottlenecks can be a significant issue.

Nvidia summarizes the DPU’s architectural components as follows:

An industry-standard, high-performance, software-programmable, multi-core CPU, typically based on the widely used Arm architecture, tightly coupled to the other SoC components.

A high-performance network interface capable of parsing, processing and efficiently transferring data at line rate, or the speed of the rest of the network, to GPUs and CPUs.

A rich set of flexible and programmable acceleration engines that offload and improve applications performance for AI and machine learning, zero-trust security, telecommunications and storage, among others.

IPU (Intelligence Processing Unit): Intelligence Processing Units are carving out a specialized niche in the AI landscape by focusing on the acceleration of graph-based AI. They excel at accelerating Graph Neural Networks (GNNs), which are employed in a variety of applications, including social network analysis, recommendation systems, and even drug discovery, thanks to their architecture specifically designed to handle the complexities of graph data.

FPGA (Field-Programmable Gate Array): FPGAs date back decades. They are re-programmable circuits that can be configured to support particular applications. They can be customized for AI applications and typically consume less power than GPUs, but they require significant upfront programming, which is not everyone’s cup of tea. TechTarget has created a simple guide to help determine whether an FPGA could work for your AI needs.

Why you should care about infrastructure efficiency [Source]

Storage: Fueling AI with Data

Storage provides the fuel for AI, holding the massive datasets that are used to train and run AI models. However, storage performance can often be a major bottleneck in AI workflows. Slow storage can starve compute resources, leading to inefficient training and inference. Therefore, understanding storage characteristics and optimization techniques is critical for AI professionals.

We’ll focus on areas of innovation and/or bottlenecks likely to impact AI Systems development.

Performance Bottlenecks: Slow storage can severely impact AI training, especially with large datasets that need to be accessed quickly.

Interconnect Speed: The speed of the connection between storage and compute (e.g., the network bandwidth) can limit data transfer rates and create a performance bottleneck. This ties directly to the networking technologies discussed in the Networking section below.

Metadata Operations: Excessive metadata operations, often caused by many small files or complex compute patterns, can strain the storage system and slow down data access. This is a common challenge with large datasets consisting of many individual files.

Storage Tiering: Moving data between slower and faster storage tiers (e.g., from cold storage to hot storage) can introduce latency and impact AI training performance. While tiering can be cost-effective, it requires careful management to minimize data movement overhead.

Storage-Framework Interaction: Inefficient interaction between the storage system and the AI framework (e.g., inefficient data loading) can impact performance. Frameworks often provide tools and techniques for optimizing data loading, and understanding these is crucial.
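One common remedy for the storage-framework interaction problem is to overlap data loading with computation, so the accelerator is not idle while the next batch is read. Here is a minimal sketch using only Python's standard library. `slow_read` and `train_step` are hypothetical stand-ins for a storage read and a training step; real frameworks ship their own, more capable data loaders.

```python
import queue
import threading
import time


def slow_read(index, delay=0.01):
    """Stand-in for reading one batch from storage."""
    time.sleep(delay)
    return [index] * 4


def prefetching_loader(num_batches, depth=2):
    """Yield batches while a background thread reads ahead by up to `depth`."""
    buffer = queue.Queue(maxsize=depth)
    done = object()  # sentinel marking the end of the data

    def producer():
        for i in range(num_batches):
            buffer.put(slow_read(i))  # blocks when the buffer is full
        buffer.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = buffer.get()
        if batch is done:
            return
        yield batch


def train_step(batch, delay=0.01):
    """Stand-in for compute on the accelerator."""
    time.sleep(delay)
    return sum(batch)


results = [train_step(batch) for batch in prefetching_loader(5)]
```

While `train_step` works on one batch, the background thread is already fetching the next ones. If storage is slower than compute, the buffer runs dry and the trainer waits anyway, which is the "starved compute" situation described above.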

Optimizing Storage

Cloud providers are addressing these challenges via:

High-performance storage systems: Optimized for low latency and high throughput, using technologies like NVMe drives and high-speed networking.

RDMA and GPU Direct Storage: Enabling direct access to storage from GPUs, bypassing the CPU and reducing data transfer latency. This directly relates to the networking technologies discussed in the Networking section.

Tiered storage: Balances performance and cost by placing data on different tiers based on access frequency and performance requirements.
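The tiering idea can be sketched as a small, fast "hot" tier in front of a large, slow "cold" tier. The class below is a toy model under simple assumptions: a least-recently-used eviction policy and dictionary-backed tiers. The names are hypothetical, and real cloud tiering policies are considerably more involved.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier store: a small LRU hot tier in front of a cold tier."""

    def __init__(self, cold, hot_capacity):
        self.cold = cold                 # large, slow tier (here just a dict)
        self.hot = OrderedDict()         # small, fast tier, ordered by recency
        self.hot_capacity = hot_capacity
        self.hot_hits = 0
        self.cold_reads = 0

    def read(self, key):
        if key in self.hot:
            self.hot.move_to_end(key)    # mark as most recently used
            self.hot_hits += 1
            return self.hot[key]
        value = self.cold[key]           # slow path: fetch from the cold tier
        self.cold_reads += 1
        self.hot[key] = value            # promote to the hot tier
        if len(self.hot) > self.hot_capacity:
            self.hot.popitem(last=False)  # evict the least recently used entry
        return value


cold_tier = {f"shard-{i}": i for i in range(10)}
store = TieredStore(cold_tier, hot_capacity=3)
for key in ["shard-0", "shard-1", "shard-0", "shard-2", "shard-3", "shard-0"]:
    store.read(key)
# store.hot_hits == 2, store.cold_reads == 4
```

Training jobs that re-read the same data every epoch benefit when the working set fits in the hot tier. When it does not, most reads fall through to the cold tier, and the data movement overhead mentioned above dominates.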

Networking: The Backbone of AI

Networking is the circulatory system that connects compute and storage. Efficient networking is absolutely critical for AI workloads, especially those involving distributed training across multiple GPUs or TPUs, or those dealing with massive datasets that need to be quickly accessed from storage. Slow or congested networks can create bottlenecks that severely limit the performance of your AI applications.

This section highlights the key areas in which networking-related innovation is likely to impact the success of AI systems, and of AI-Systems-in-the-Cloud, in particular.

Inter-GPU Communication: Efficient communication between GPUs is critical for large-scale AI models that require multiple GPUs to work together.

NVLink: NVIDIA's high-bandwidth, low-latency interconnect technology allows GPUs to communicate directly with each other, bypassing the PCIe bus and significantly reducing latency. This is crucial for efficient data and model parallel training.

InfiniBand: A high-performance networking standard commonly used in HPC clusters. InfiniBand provides low latency and high throughput, making it suitable for communication between GPUs in a distributed AI training environment.
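A concrete example of the traffic these interconnects carry is all-reduce, the collective operation used to sum gradients across workers. The sketch below simulates the well-known ring variant in plain Python, with one number per chunk for readability. It is a teaching model, not the implementation used by any particular communication library, and it assumes each worker's vector has exactly as many chunks as there are workers.

```python
def ring_all_reduce(worker_data):
    """Simulate ring all-reduce over n workers, each holding n chunks.

    Returns every worker's data after the operation. Each worker only ever
    sends to its right-hand neighbor in the ring.
    """
    n = len(worker_data)
    data = [list(chunks) for chunks in worker_data]

    # Phase 1, reduce-scatter: after n - 1 steps, worker r holds the full
    # sum for chunk (r + 1) % n.
    for step in range(n - 1):
        messages = [(r, (r - step) % n, data[r][(r - step) % n]) for r in range(n)]
        for sender, chunk, value in messages:
            data[(sender + 1) % n][chunk] += value

    # Phase 2, all-gather: completed chunks are passed around the ring.
    for step in range(n - 1):
        messages = [(r, (r + 1 - step) % n, data[r][(r + 1 - step) % n]) for r in range(n)]
        for sender, chunk, value in messages:
            data[(sender + 1) % n][chunk] = value

    return data


result = ring_all_reduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
# every worker ends with [111, 222, 333]
```

Each worker sends and receives 2 × (n − 1) chunks in total, so the per-worker traffic stays roughly constant as workers are added. The time per step still depends on link bandwidth and latency, which is where NVLink and InfiniBand earn their keep.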

GPU to Storage Communication: Accelerating data transfer between storage and GPUs is essential for efficient AI training, especially with large datasets, and is directly related to storage performance.

RDMA (Remote Direct Memory Access): RDMA allows a network adapter to read or write memory on a remote machine directly, without involving that machine's CPU. Combined with GPUDirect (below), the target can be GPU memory. This reduces latency and improves throughput for data-intensive AI workloads.

GPUDirect: GPUDirect technologies enable GPUs to bypass the CPU and communicate directly with other devices, such as network interface cards (NICs) or storage devices, reducing latency and improving overall system performance.

Network Offloading: Offloading network-related tasks from the CPU to specialized hardware frees up CPU resources for AI computations and improves network efficiency.

SmartNICs: Programmable network interface cards that can offload tasks like network virtualization, security (e.g., encryption/decryption), and storage access. This reduces the processing burden on the CPU and improves network performance.

DPUs (Data Processing Units): More powerful than SmartNICs, DPUs are dedicated processors designed to offload and accelerate data-intensive tasks, including networking, storage, and security functions, and even some AI inference tasks (see the compute section for more details).

AI Frameworks: Bridging Models and Infrastructure

Modern AI development relies heavily on powerful frameworks that streamline model building, training, and deployment. These frameworks are often optimized for specific hardware, particularly TPUs and GPUs, to maximize performance. Here's a breakdown:

JAX:

JAX excels at large-scale models and distributed training. Its functional programming paradigm simplifies parallelization, and its XLA compiler optimizes code for various hardware backends.

JAX is particularly well-suited for TPUs. Its functional programming approach aligns well with the architecture of TPU pods, and XLA's efficient compilation is essential for maximizing TPU performance. This makes JAX a strong choice for training very large models on TPUs.

While JAX performs well on GPUs, especially with XLA optimizations, its GPU performance may not always match highly optimized PyTorch or TensorFlow implementations. XLA still plays a key role in optimizing GPU execution, but other frameworks often have more mature GPU-specific features.

PyTorch:

PyTorch is known for its ease of use, large community support, and strong performance, especially in research and development. It offers a familiar API and a rich ecosystem of tools and libraries.

PyTorch's integration with TPUs is facilitated through the PyTorch/XLA bridge. Nonetheless, JAX often demonstrates superior native TPU performance. Efficient data loading from storage to TPUs is a crucial consideration when using PyTorch on TPUs.

PyTorch offers excellent performance on GPUs and is a popular choice for GPU-accelerated deep learning. For very large models, optimizing data parallelism and model parallelism across multiple GPUs (and potentially nodes) is critical. This optimization often involves leveraging high-speed interconnects like NVLink and InfiniBand for efficient inter-GPU communication.
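Data parallelism rests on a simple identity: if each worker computes the gradient on an equal-sized shard of the batch, the average of those gradients equals the gradient on the full batch. The sketch below checks this for a one-parameter linear model in plain Python. It is framework-agnostic and uses made-up data, so it says nothing about how PyTorch implements the idea internally.

```python
def grad_mse(w, xs, ys):
    """Gradient with respect to w of mean((w * x - y) ** 2)."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Made-up data for the model y = w * x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.5

# Single device: gradient over the full batch.
full_batch_grad = grad_mse(w, xs, ys)

# Two "workers", each with half the batch, followed by an average
# (the step an all-reduce performs across GPUs).
shards = [(xs[:2], ys[:2]), (xs[2:], ys[2:])]
worker_grads = [grad_mse(w, shard_x, shard_y) for shard_x, shard_y in shards]
averaged_grad = sum(worker_grads) / len(worker_grads)
# full_batch_grad == averaged_grad == -22.5
```

The math is identical, so the cost of data parallelism is almost entirely the cost of that averaging step, which is why interconnect speed matters so much at scale.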

TensorFlow:

TensorFlow is a mature framework with a large ecosystem and strong production focus. TensorFlow 2.x introduced a more user-friendly API, making it easier to learn and use.

TensorFlow is designed to work seamlessly with TPUs, offering tight integration and optimized performance. Understanding data input pipelines and TPU-specific optimizations is essential for maximizing TensorFlow's performance on TPUs.

TensorFlow provides good performance on GPUs and benefits from its mature ecosystem. TensorFlow may take longer for training jobs, while using less memory (though I am still learning about these dimensions for these chips). Similar to PyTorch, scaling TensorFlow across multiple GPUs often involves optimizing data parallelism and model parallelism, which relies on efficient networking and storage access.

The choice of framework impacts not only programming style and community support, but also how effectively your AI workload utilizes the infrastructure. For example, if you're training a massive model on TPUs, JAX might offer better performance due to its optimized compiler. Conversely, if you're working with a more moderate-sized model on GPUs, PyTorch's ease of use and strong community support might make it a better choice, provided you pay attention to how it scales with your data and model size.

Select Open-Source AI Libraries [Source]

Bringing it home: Edge-GNN use case

We’ve explored the interplay between AI and cloud infrastructure, highlighting key areas like compute, storage, and networking, and how they are orchestrated by AI frameworks.

Let’s briefly take a concrete example, to conclude this piece: consider “Edge-Based Graph Neural Networks” (Edge-GNNs), where "edge" refers to features associated with the connections between nodes in the graph (more on Graph Neural Networks here). These additional features provide richer information for the GNN, enabling more accurate and nuanced analysis. However, they also introduce computational and storage challenges. The inclusion of edge features increases the dimensionality of the input data, impacting the memory footprint and processing requirements. This directly relates to infrastructure considerations. For example, larger memory capacity and higher memory bandwidth become crucial for handling the increased data volume [NB: “edge” is used in two ways in the linked article]. Also, the computational complexity of processing edge features might require specialized hardware or software.

[NB: I’m including the below example, not because I know it is correct, but because it brings together an evocative and (relatively) easily understandable example, for thinking about the nuances for optimizing processor and infrastructure choices to support a specific AI model. I’ve started to research the below claims, to validate whether they are true. But I found the task a bit overwhelming. I couldn’t bring myself to just delete the story, since I find it so engaging, and since it may “scaffold” some of my future research on this topic.]

“Choosing the right hardware for your edge GNN depends on the specific task. Consider a simple GNN predicting friendships. Each person (node) has features like age and interests, and each friendship (edge) has a "connection strength" score. For compute, if your GNN mostly combines person features and connection strength for predictions, a GPU's flexibility is helpful. However, for GNNs with lots of complex math on this data, and if battery life is a concern, a TPU might be better, provided you can find tools that handle the connection strength data efficiently. Storage is crucial because GNNs often access data irregularly, like jumping between friends. So, how you store and access the person and connection data impacts speed. Finally, networking is less critical if the whole friendship network is on one device. But, if the network spans multiple devices or connection strengths change often, strong networking is essential.”
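To make the friendship example a little more tangible, here is a minimal sketch of one message-passing step in which each person's features are updated from their friends' features, weighted by the "connection strength" on each edge. The people, features, and weighting rule are all invented for illustration, and real GNN layers add learned weights and nonlinearities on top of this.

```python
def message_passing_step(node_features, edges):
    """One aggregation step of a toy GNN with a scalar edge feature.

    node_features: {name: [feature, ...]}
    edges: list of (source, destination, connection_strength)
    Each node's new features are the strength-weighted average of its
    incoming neighbors' features; nodes with no incoming edges are unchanged.
    """
    dim = len(next(iter(node_features.values())))
    weighted_sum = {name: [0.0] * dim for name in node_features}
    total_strength = {name: 0.0 for name in node_features}

    for source, destination, strength in edges:
        neighbor = node_features[source]  # irregular, data-dependent lookup
        for d in range(dim):
            weighted_sum[destination][d] += strength * neighbor[d]
        total_strength[destination] += strength

    updated = {}
    for name, features in node_features.items():
        if total_strength[name] > 0:
            updated[name] = [v / total_strength[name] for v in weighted_sum[name]]
        else:
            updated[name] = list(features)
    return updated


# Features: [age, likes_hiking]. Entirely made-up data.
people = {"alice": [30.0, 1.0], "bob": [20.0, 0.0], "carol": [40.0, 1.0]}
friendships = [("bob", "alice", 1.0), ("carol", "alice", 3.0)]
new_features = message_passing_step(people, friendships)
# new_features["alice"] == [35.0, 0.75]
```

Note the `node_features[source]` lookup inside the edge loop. Which memory gets touched depends on the graph itself, which is the "jumping between friends" access pattern the passage describes, and every edge feature adds to the data that must be stored and moved.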

Finally, if you want to go fully meta, read Devansh’s take on how Edge-GNNs have improved the design process for, well, AI Chips.

I look forward to your feedback.

Thank you for being here, and I hope you have a wonderful day.

Dev <3

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix Subscription (pay what you want here).

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. You can share your testimonials over here.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

Very interesting article. Thank you for publishing. As I am researching the topic myself, I am asking myself why you mention NVLink and InfiniBand as Inter-GPU communication protocols. Maybe you can help clarify it for me :) It is my understanding that NVLink is a protocol that serves intra-server GPU-to-GPU communication, while InfiniBand is used as protocol for inter-server GPU-to-GPU communication. As such they are not really interchangeable with each other. While I understand that there is no real alternative to NVLink in intra server communication, this is not the case for InfiniBand where Ethernet-based protocols could be considered as an alternative. Especially, considering RDMA like feature like RoCE. What is your take on this? Kind regards and thanks again for the great read!
