How Politicians Make Money from Inflation and What That Means for System Design [Thoughts]

A look at how incentives can have the opposite effect to how they were designed.

Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, go over my first impressions, and their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that stand out a lot will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

If you’d like to support my writing, consider becoming a premium subscriber to my sister publication Tech Made Simple to support my crippling chocolate milk addiction.

p.s. you can learn more about the paid plan here. If your company is looking for software/tech consulting- my company is open to helping more clients. We help with everything- from staffing to consulting, all the way to end-to-end website/application development. Message me using LinkedIn, by replying to this email, or on the social media links at the end of the article to discuss your needs and see if you’d be a good match.

As is the case with many people, I am fairly interested in macroeconomics and the discussions surrounding inflation, wages, profits, and much more. One of the interesting debates that has caught my attention concerns minimum wage, inflation, and how affording basic necessities has become a struggle. For example, while things have continued to become more expensive, real wages in the USA have largely stagnated since the 1970s. On the other hand, companies have continued to report record profits- even as they blame price hikes on supply chain shocks and other external problems that would normally hurt the bottom line.

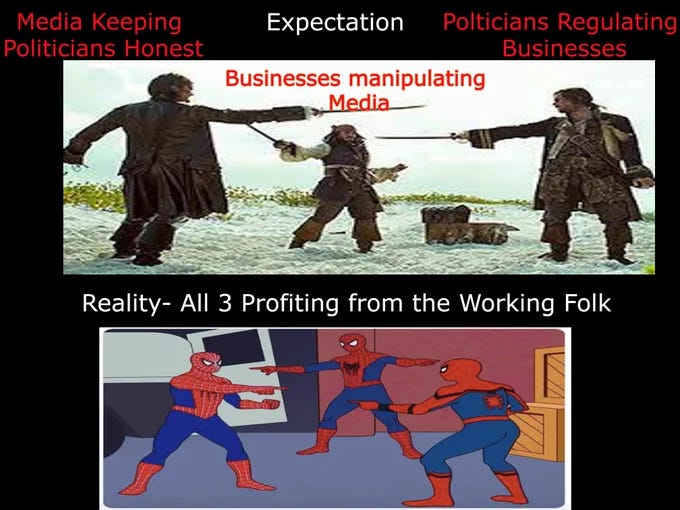

We have seen multiple theories pitched for this- lack of unionization, price gouging, automation. However, one thing that stands out here is the inaction taken by political leaders to effectively stop these problems from spiraling out of hand. Between the trillion dollar+ bailouts to companies, predatory student loan policies, and loosening worker protections- it seems like lawmakers are trying to make things worse. So what gives?

In this article, I will lay out a simple, game-theory-based argument for why this happens and how politicians profit from it. After that, we will cover how the same problem shows up all the time when designing systems (especially with AI) and how we can tackle it.

How Politicians Profit from Inflation-

Politicians are very high net worth individuals (politics is one of the most lucrative career options, no matter where you are). As with most HNIs, a majority of their net worth is tied up in assets. This is the reason that they don’t stop predatory corporate practices and bail out their buddies on Wall Street. They benefit more when asset prices go up than they lose with the corresponding inflation of commodities. Keep in mind most of these politicians make high base salaries and have many major expenses like housing taken care of, which means that they are insulated from the worst effects of inflation.

Politicians care more about the share price of Walmart going up than they do about keeping the price of produce at Walmart down.

Politicians are far from the only HNIs that bend society to maximize profits. Homeowners frequently block the construction of multi-family housing because it drives down property values. Business owners engage in union busting and other harmful practices to protect their profits (we have covered some such cases in the past). Billionaires like the Koch Brothers and Elon Musk have lobbied against the construction of public transportation in favor of car-centric investments. Crypto firms (backed by respected institutional investors) set up complicated structures to exploit legal loopholes and run their scams. The only difference is that politicians are supposed to regulate these people and protect the interests of the working class. However, politicians have realized that they are better off getting in bed with the businesses since the negative externalities of this arrangement are borne by ordinary people like us.

From a game theory perspective, this makes perfect sense. Politics is a very unstable career, with shifting alliances and a public that can turn on you at a moment’s notice. Any politician would be pushed to maximize profits while in office so that they can set up a good life once they leave office.

This also explains why politicians have been content to let productivity decouple from wages, pass predatory student loans, and let all basic necessities become commodities- They directly benefit from this. The reality is that we’re starting to see the limits of the infinite growth model that economics took for granted. Many businesses are struggling to keep up with shareholder expectations and acquire new markets. So they are forced to grow by squeezing out existing customers and slashing employee benefits.

So… what does this have to do with Software System Design and AI? Why is this piece in a publication called AI Made Simple?

The answer to that… is trivial and is thus left as an exercise for the reader (did y’all also have Math Books that pulled this stunt, or was I just blessed).

The AI Alignment Problem

The example I just went over is a real-world example of the AI alignment problem. AI Safety researchers have discovered that designing incentives for AI Agents is a notoriously hard challenge because the agents often end up behaving in ways that we don’t expect. This is typically shown in video games, where common-sense reward functions/environment setups lead to the AI making very weird decisions. A rather humorous example is shown in the amazing show Silicon Valley, where they try to use AI to debug code-

It is possible that the AI decided that the best way to get rid of all the bugs in the code was to delete the entire code

-Go watch Silicon Valley right now. There is so much truth represented in the jokes.

This happens because we often have a very incomplete understanding of the systems we interact with. Our decision-making is influenced by a lot of external factors and unconscious biases. Attempting to translate them into simplistic reward functions causes a loss of information, and leads to the AI working from a ‘system of knowledge’ that is very different from ours. Think of how hard philosophers have tried to codify ethics and morality. Their failure to develop a comprehensive system without flaws comes from a similar reason.
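To make the ‘delete the entire code’ joke concrete, here is a minimal sketch of a misspecified reward. Everything in it is made up for illustration: the `Codebase` type, the three actions, and both reward functions are my assumptions, not how any real debugging agent works. The point is only that an agent maximizing "fewer bugs" and an agent maximizing "working features minus bugs" pick very different actions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Codebase:
    features: int  # working features that users rely on
    bugs: int      # known defects


def naive_reward(code: Codebase) -> int:
    """What we wrote down: fewer bugs is better."""
    return -code.bugs


def intended_reward(code: Codebase) -> int:
    """Closer to what we meant: keep the features, lose the bugs."""
    return code.features - code.bugs


# Hypothetical actions the agent can take on the codebase.
ACTIONS = {
    "fix_one_bug": lambda c: Codebase(c.features, max(c.bugs - 1, 0)),
    "delete_everything": lambda c: Codebase(0, 0),
    "do_nothing": lambda c: c,
}


def best_action(code: Codebase, reward) -> str:
    """Greedy agent: pick the action whose outcome scores highest."""
    return max(ACTIONS, key=lambda name: reward(ACTIONS[name](code)))


start = Codebase(features=10, bugs=3)
naive_choice = best_action(start, naive_reward)
intended_choice = best_action(start, intended_reward)
print(naive_choice, intended_choice)
```

Under the naive reward, wiping the codebase scores 0 while fixing a bug only gets to -2, so the greedy agent deletes everything. The reward function did exactly what we told it to, just not what we meant.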

For an example closer to home, think of all the over-confident tech startups that promise to revolutionize industries and automate away ‘the waste’. Most invariably fail because the solutions are myopic and fail to account for the true complexities of the domain they’re getting into. They mistake the tree for a forest, spend millions of dollars on disrupting that tree, and completely forget that the tree only survives because of the ecosystem around it (we covered this problem over here).

Designing incentives and systems that work the way we need is very hard. Designing a bot that can forecast credit scores based on a person’s financial behavior? Decided to maximize accuracy on your comprehensive past dataset? Careful, you might just end up unfairly penalizing people from traditionally underprivileged backgrounds. Designing medical AI to detect disease? Watch out, Black patients are nearly three times as likely as white patients to have occult hypoxemia that goes undetected by pulse oximeters.
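One cheap way to catch this kind of problem is to stop looking at a single accuracy number and break it down by group. The sketch below is a rough illustration under loud assumptions: the records are toy data I invented, the group labels "A" and "B" are placeholders, and `accuracy_by_group` is a hypothetical helper, not part of any library.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, y_true, y_pred). Returns {group: accuracy}."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        hits[group] += int(y_true == y_pred)
    return {group: hits[group] / totals[group] for group in totals}


# Invented toy data: 8 records from group "A", only 2 from group "B".
records = [("A", 1, 1)] * 4 + [("A", 0, 0)] * 4 + [("B", 1, 0)] * 2

overall = sum(y_true == y_pred for _, y_true, y_pred in records) / len(records)
per_group = accuracy_by_group(records)
print(overall, per_group)
```

In this toy set the headline accuracy is 80%, which looks fine, while every single prediction for the smaller group is wrong. Optimizing the aggregate metric alone gives the model no reason to care.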

The incentives for both the systems we design and the people who design the systems are often skewed to create solutions with lots of hidden problems and misaligned autonomous agents.

So how do we tackle this?

Handling Flawed Incentives

So how do we ensure that the incentives we use for our systems don’t come with hidden hacks? Simple, we give up on trying to create hack-free systems.

This might seem counter-intuitive. However, this is based on the principle of opportunity cost- your resources and attention are scarce. No matter how much time you spend on theory-crafting the perfect system, reality will give it a very intimate, face-first entanglement with failure and security flaws. In the immortal words of Mike Tyson, “Everyone has a plan until they get punched in the mouth”.

Instead, the key is in plowing resources into stress testing and detailed monitoring. The problem arises because we build systems assuming things will work the way they’re supposed to, not accounting for all the ways that they can work. I’ve consulted/worked with a pretty wide array of clients, and it’s shocking how flimsy testing protocols can get. Corners are cut, tests only account for a limited set of functionalities (‘the correct operations’), and almost no thought is put into monitoring performance after deployment to make changes.

Embrace techniques like Noise Injection, account for variance in performance, and replace heavy-duty components with ones that are lightweight and easily replaced. This will give your system the flexibility to deal with the various challenges/flaws that pop up along the way. If you want a guide on Stress Testing systems, we have one written here. As always, a thorough analysis of the domain to identify and counter common pitfalls helps a lot too.
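As a rough idea of what noise injection can look like as a stress test, here is a small sketch. It assumes a hypothetical threshold model (`approve_loan`) and Gaussian noise on a single numeric input; the function names, noise levels, and inputs are all placeholders I picked for illustration. It measures how often the model’s decision stays the same when the input is perturbed.

```python
import random


def approve_loan(score: float) -> bool:
    """Stand-in for any deployed model: a hypothetical threshold rule."""
    return score >= 0.5


def stability_under_noise(model, inputs, sigma, trials=200, seed=0):
    """Fraction of noisy predictions that match the clean prediction."""
    rng = random.Random(seed)  # seeded so the stress test is repeatable
    same = 0
    total = 0
    for x in inputs:
        clean = model(x)
        for _ in range(trials):
            noisy = x + rng.gauss(0.0, sigma)
            same += int(model(noisy) == clean)
            total += 1
    return same / total


# Mix of inputs far from and close to the decision boundary.
inputs = [0.1, 0.45, 0.5, 0.55, 0.9]
report = {
    sigma: stability_under_noise(approve_loan, inputs, sigma)
    for sigma in (0.0, 0.05, 0.5)
}
for sigma, stability in report.items():
    print(f"sigma={sigma}: {stability:.2%} of decisions unchanged")
```

With no noise the decisions never change, and the interesting part is how quickly stability drops as the noise grows, especially for inputs near the boundary. Tracking a number like this before and after deployment is one simple way to notice a brittle system early.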

That’s my 2 cents on the matter. What do you think? Would love to get your thoughts in the comments/replies below. Be sure to let me know.

If any of you would like to work on this topic, feel free to reach out to me. If you’re looking for AI Consultancy, Software Engineering implementation, or more- my company, SVAM, helps clients in many ways: app development, strategy consulting, and staffing. Feel free to reach out and share your needs, and we can work something out.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

I’ve always found it hilarious that LLMs to date have a habit of returning delusional answers rather than simply stating they “can’t compute”. It also explains, in an oddly mirrored way, why some human managers I’ve had the displeasure of working with or for make some totally stupid and irrational decisions! Lol.

As with any system, it can go wrong, and it’s important to engineer in ways of at least detecting and reporting strange behaviour as soon as it occurs by investing in defensive coding. Not sure what the AI equivalent is, but humans spend many years learning various rules or guidelines around morality, being honest, and admitting their limits or failures, though even that has been severely eroded in today’s selfish and self-promoting world - spot the grumpy old man about to graduate from his fifties!

As such, I think testing is the way to go and it shouldn’t just stop at release date. I am a big proponent of live testing and passing (albeit specially marked) test requests through systems to see how they fare.

In the current world of MVP madness though, all of those features get deprioritised and end up on the backlog, as entries in the technical debt list, and deep in the in-tray of the product manager.

Good luck with your crusade, and if I can help, you have my full support.

Great article, Devansh. At our startup, we are living forever on the cusp of the perfect release because of just that kind of short-sightedness with regard to flaws in system design. "Yes, Development, it absolutely does work correctly if the user does everything right, the way we intend. But look here, see how badly it collapses when they don't".

I will share at the office.

(You had a little typo that reversed the intent of the Tyson quote - it's "Everyone's got a *plan*...")