MANAGING AI/ML: How to build and deploy AI/ML systems [Guest]

Deep Insights from an AI Leader in Big Tech

Hey, it’s Devansh 👋👋

Our chocolate milk cult has a lot of experts and prominent figures. In the series Guests, I will invite these experts to come in and share their insights on various topics that they have studied/worked on. If you or someone you know has interesting ideas in Tech, AI, or any other fields, I would love to have you come on here and share your knowledge.


Dr. Chris Walton is a Senior Applied Science Manager at Amazon. His LinkedIn is a treasure trove of insights into leading AI/ML teams and integrating these technologies into systems. Such content is severely underserved, and thus Chris’s content is extremely valuable for anyone who wants to lead effective AI teams and projects in their organizations. I would highly recommend following him and looking through his content here. In today’s article, Chris will be sharing his wisdom about building and deploying ML systems. Chris covers various crucial points, including the difference between traditional software and Machine Learning, the difference in managing science-oriented AI teams vs ML Engineering teams, how to effectively manage expectations, and the challenges that you would face when implementing AI-based systems. I’m sure you will find this as insightful as I did.

I am an Applied Science Manager, which means that I lead teams to build and deploy AI/ML systems. Despite the popularity of AI/ML, you won’t find many management books on the subject (I must write one!). Because I want to become better at my job, I spend a lot of time talking to other AI/ML managers to learn what they are doing, and to share knowledge from my own experiences. This article contains some of these learnings, which I hope you will find useful when building your own AI/ML systems.

One of the most common responses I receive is “I just use agile software development techniques to build AI/ML systems!” When I hear this my heart sinks, as I know the manager will not be successful. Every manager who has given me that response is no longer with the company or has gone back to managing conventional software systems. The reason is that AI/ML systems are very different, and agile techniques are generally a poor fit (more on this later).

Another common response is “I run my team like an academic research group” with a focus on experiments and publishing papers. This is a better response as it recognizes that AI/ML systems have a large science component. But it fails to capture the considerable effort that is needed to productionize AI/ML systems, commonly called MLOps. This effort is often greater than the scientific work, and it is estimated that over 90% of ML models never reach production due to lack of MLOps support. Managing AI/ML means making different science and engineering activities work together seamlessly.

Building and deploying AI/ML systems effectively requires new management techniques. This does not mean that all software management techniques need to be thrown out, but it does require a different mindset and willingness to adapt. There is no one-size-fits-all for AI/ML management, and I won’t directly prescribe any new methodologies here. Instead, I will highlight the differences and common pitfalls, together with suggested improvements. It will then be up to you to determine what works best for your own unique combination of people, domain, datasets, and customers.

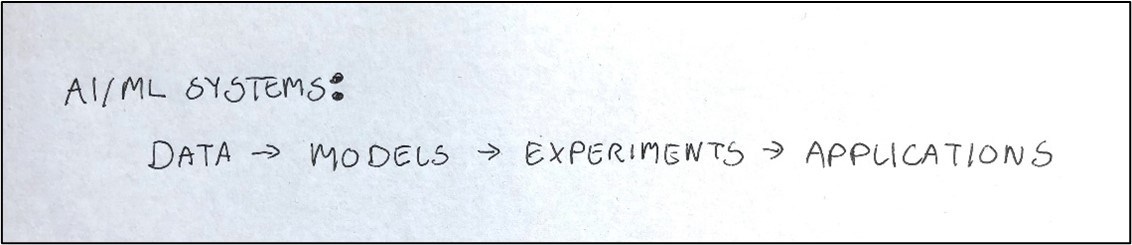

DIFFERENCES BETWEEN AI/ML SYSTEMS AND CONVENTIONAL SOFTWARE SYSTEMS

How do AI/ML systems differ from conventional software systems? Here are some differences:

Data Handling – AI/ML systems usually require large quantities of curated training data, and (in general) the more data that is used, the better the system will perform. For example, GPT-3 (the model family that ChatGPT was built on) was trained on text filtered from roughly 45TB of raw data, which includes much of the Web, Wikipedia, and libraries of books. A lot of the effort required to build an AI/ML system is often simply ingesting and processing the large quantities of training data. Data quality is critical, as AI/ML follows the garbage-in/garbage-out principle, i.e., a powerful AI/ML model will still produce poor results when fed with low quality data.

Model Training – Conventional software systems implement algorithms to solve specific problems, while AI/ML systems “learn” how to solve problems. An AI/ML system is built by constructing models, which are then trained and tuned until they perform well on example problems. For example, a conventional software system to recognize handwriting could be constructed as an algorithm to trace letters and compare them against a library of shapes, while an AI/ML system for handwriting recognition is trained using examples of handwritten words, from which it learns to recognize words automatically!

Experimentation – Building an AI/ML system is normally done iteratively, starting with simple techniques then gradually refining via experiments. An A/B experiment compares the performance of the previous system (control) against the new system (treatment) to determine if there is an improvement. Each experiment will generate new learnings, and it can often take a very large number of iterations before you strike gold. This can feel like guesswork, but educated guesses (hypotheses) are how the scientific method works.

Application – AI/ML systems have inherent uncertainty in their outputs, which means they are not suitable for all applications. A common way to measure AI/ML accuracy is to test on held-out data (i.e., not used for training) and generate an ROC plot of the true-positive rate against the false-positive rate. Measuring the area under this curve (AUC-ROC) as a value from 0 to 1 gives a measure of how well the system separates positive from negative examples, where 0.5 is essentially random. In general, 0.7 to 0.8 is considered acceptable, 0.8 to 0.9 is excellent, and over 0.9 is exceptional. This is very different from conventional systems, as most engineers would baulk at a sorting algorithm that was only 95% accurate!
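To make the AUC-ROC measure concrete, here is a minimal sketch in plain Python. It uses the rank-based formulation: the AUC equals the probability that a randomly chosen positive example scores higher than a randomly chosen negative one, with ties counting as half. The function name `roc_auc` and the toy labels and scores are illustrative assumptions, not data from a real system.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical held-out data: 1 = positive class, 0 = negative class.
held_out_labels = [1, 0, 1, 1, 0, 0, 1, 0]
held_out_scores = [0.9, 0.3, 0.8, 0.4, 0.5, 0.2, 0.7, 0.6]

auc = roc_auc(held_out_labels, held_out_scores)
print(f"AUC-ROC on held-out data: {auc:.3f}")
```

The pairwise loop is quadratic, which is fine for a sketch; for large evaluation sets a sort-based version or an existing library routine would be the more practical choice.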

AI/ML systems have a lot more “fuzziness” than conventional software systems, both during construction and in operation. The knee-jerk reaction of many software managers who encounter AI/ML is to treat this fuzziness as a defect and attempt to eliminate it, e.g., insisting every experiment is successful, setting fixed delivery dates, and requiring 99.99% accuracy. This is a fool’s errand that will quickly lead to frustrated scientists, team attrition, over-simplified models, and low performance. And requiring experiments to be successful means you are not actually experimenting at all!
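The control-versus-treatment comparison behind an A/B experiment can be sketched with a two-proportion z-test. This is only one of several reasonable ways to analyze such an experiment, and the function name, the counts, and the 0.05 threshold below are all assumptions chosen for illustration. Note that the example deliberately ends in an inconclusive result, which is a normal outcome rather than a failure of the process.

```python
import math


def two_proportion_z_test(control_successes, control_total,
                          treatment_successes, treatment_total):
    """Return (lift, z, two-sided p-value) for treatment vs control success rates."""
    p_control = control_successes / control_total
    p_treatment = treatment_successes / treatment_total
    # Pooled rate under the null hypothesis that both systems perform the same.
    pooled = (control_successes + treatment_successes) / (control_total + treatment_total)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_total + 1 / treatment_total))
    if std_err == 0:
        raise ValueError("success rate is 0 or 1 in both arms; the test is undefined")
    z = (p_treatment - p_control) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_treatment - p_control, z, p_value


# Hypothetical experiment: 1,000 requests served by each system.
lift, z, p_value = two_proportion_z_test(480, 1000, 520, 1000)
significant = p_value < 0.05  # assumed significance threshold
print(f"lift={lift:+.3f}, z={z:.2f}, p={p_value:.3f}, significant={significant}")
```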

Instead, it is necessary to view the fuzziness as an intrinsic property of AI/ML and develop management techniques to compensate (more on this next). AI/ML is best used in applications where humans also struggle to achieve high accuracy, e.g., handwriting recognition, facial recognition, weather prediction, medical diagnosis, fraud detection, etc. These kinds of applications are generally very difficult to implement as conventional software systems. AI/ML can often outperform humans for these applications, but it is also important to consider the consequences of incorrect outputs and implement appropriate safeguards.

AI/ML MANAGEMENT

AI/ML systems require management of many different roles, each with distinct skillsets and techniques, e.g., applied science, data science, data engineering, data analytics, feature engineering, software engineering, ML engineering, MLOps, etc. For this article, I will split them into science and engineering categories, and will put applied/data science, feature engineering, and data analytics into the science category. I recommend separate teams for scientists and engineers, as they require different management styles and techniques:

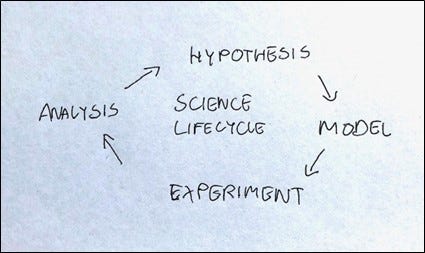

SCIENCE MANAGEMENT

AI/ML science is similar to other forms of science and follows the iterative scientific method: Hypothesis -> Model -> Experiment -> Analysis. Compared to software engineering, science requires very long cycles, which can include months of research before committing to an approach, and extensive analysis to understand the outcomes of experiments.

-Extremely important point to note.

Scientific work can feel agonizingly slow for managers accustomed to implementing and releasing new features on a bi-weekly cadence. However, attempting to short-circuit these activities is a mistake, as these phases are where valuable learnings are generated. Other suggestions on how to manage science activities are:

Experiments - Science is done by performing experiments to test hypotheses. The faster your scientists can experiment, the more insights they will generate. Focus on what is preventing rapid experimentation and find ways to remove the blockers, e.g., data quality and automation. Experiments will have a high failure rate - allow time for multiple iterations of each experiment, find ways to fail fast, and always have multiple alternatives available.

Planning - Science is unpredictable and has branching behaviors, e.g., if you run 10 experiments, 3 may be partially-successful and require further investigation, leading to 6 new experiments, of which 2 return promising results, etc. This means that conventional planning techniques cannot be used. My advice is not to attempt detailed planning for science, and instead focus on increasing experiment velocity, and maximizing the value of each experiment.

Peer-Review - Scientists work best as a group where they can perform peer reviews, e.g., on experiment design and results. Science is rarely clear-cut, and feedback from other scientists ensures that the best conclusions are reached. Adding isolated scientists to engineering teams will be less effective, and where this is unavoidable try to establish cross-team science partnerships.

Knowledge - Establish regular knowledge sharing for your scientists with engineering. Your scientists will rely on engineering to tell them what is possible within the architectural constraints, and engineering needs to know where the science is going to ensure they are building systems to meet future needs. Weekly review sessions that alternate between science and engineering topics are a good way to achieve this.

Prototyping – A working prototype is a powerful interface between science and engineering. If your scientists build a prototype (e.g., Jupyter notebook), the engineers can then work out the best approach for productionization. The outputs of the prototype can be compared with the production system to validate the implementation, and any differences can be identified and discussed.

Roles - Scientists are not engineers or business analysts. Although many scientists are capable of writing and deploying code and performing detailed business analytics, these tasks require very different skills and it is best to hire experts. When this is not possible, try to rotate these activities between your scientists to avoid frustration.
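The prototype-versus-production comparison mentioned under Prototyping can be as simple as running both systems on the same inputs and listing where the outputs disagree. The sketch below assumes both systems emit one numeric score per input; the function name `compare_outputs`, the tolerance, and the scores are hypothetical.

```python
import math


def compare_outputs(prototype_scores, production_scores, abs_tol=1e-6):
    """Return (index, prototype, production) for every pair that differs beyond abs_tol."""
    if len(prototype_scores) != len(production_scores):
        raise ValueError("both systems must be scored on the same inputs")
    mismatches = []
    for i, (proto, prod) in enumerate(zip(prototype_scores, production_scores)):
        if not math.isclose(proto, prod, rel_tol=0.0, abs_tol=abs_tol):
            mismatches.append((i, proto, prod))
    return mismatches


# Hypothetical scores for the same four inputs.
notebook_scores = [0.12, 0.87, 0.50, 0.33]    # from the scientists' prototype
production_scores = [0.12, 0.87, 0.47, 0.33]  # from the engineers' implementation

mismatches = compare_outputs(notebook_scores, production_scores)
for index, proto, prod in mismatches:
    print(f"input {index}: prototype={proto} production={prod}")
```

Each mismatch is a concrete item for scientists and engineers to discuss, which tends to be more productive than comparing aggregate metrics alone.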

My preferred approach for managing science activities is to use a Kanban process to track progress of each project and ensure there are not too many projects in-flight at the same time. However, there are generally several ad-hoc science tasks that need to be performed, e.g., analysis, tuning, and diagnosis. As a result, I normally assign two workstreams to each scientist: one for large projects, and one for smaller work items. Each scientist has a primary research area, which is tracked in the main workstream, with the second workstream for smaller tasks. Each scientist will pick up these smaller items when their main workstream is blocked, e.g., waiting for experiments to complete. This two-stream approach ensures the smaller items are progressed in a timely manner, and are evenly distributed between the scientists.

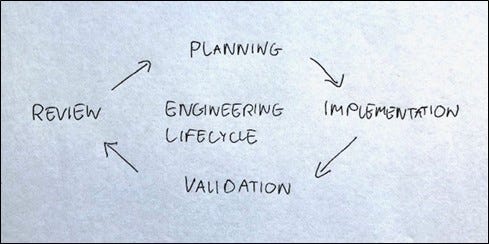

ENGINEERING MANAGEMENT

AI/ML engineering is similar to other forms of software engineering, but is focused on implementing and productionizing science outputs, rather than building from scratch. Scientists and engineers work at different speeds and coordination is needed between the two groups. Done poorly, engineers will complain they are blocked waiting for the scientists to complete, and the scientists will report they are not being given sufficient time for research. This requires careful management, and my recommendation is that the engineering work is not scheduled until the science is fully complete and peer-reviewed! Other recommendations for managing AI/ML engineering are:

Collaboration - The purpose of AI/ML engineering is to take science research and translate it into working production systems. Your engineers should work closely with your scientists to understand their results and determine the best way to implement them in production. This requires engineers who are familiar with AI/ML techniques and tools, and capable of interpreting science research, i.e., not afraid of equations!

Effort - AI/ML requires a lot of engineering effort, and I generally recommend a 2:1 ratio of engineers to scientists. Your engineers will often need to maintain an existing AI/ML system, while also developing the next iteration. You also need a good mix of senior engineers to address the hard AI/ML challenges, and junior engineers for more routine activities. Your senior engineers will train your junior engineers, and so you have a sustainable team lifecycle.

Customers - AI/ML systems should be designed for two separate customers: the scientists, and the end-users. The systems should have self-service interfaces for scientists to perform experiments, tuning, analysis, and diagnostics in ways that are transparent to the end users. Your scientists will also require easy access to the internals to ensure things are working correctly. Engineering should also build tools to help the scientists work more effectively.

Ownership - The AI/ML engineering team should have full end-to-end ownership of the pipeline, from offline model training through to online inference. This is because every change made to the models must be accurately reflected during inference. If you have separate teams for these activities you will spend a lot of time ensuring the systems are in sync, and even minor differences will result in training/serving skew that will impact accuracy.

Disconnects - Avoid the temptation to centralize either science or engineering, e.g., a single science team for multiple engineering teams, or a single engineering team for multiple science teams. This approach will create a disconnect between the two, with scientists blocked on engineering support, or engineers unable to get enough guidance from science. The two need to work closely together from initial research through to productionization.
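One simple way to spot the training/serving skew described under Ownership is to compare basic feature statistics between the training data and what the online system actually sees. The sketch below flags any feature whose mean shifts by more than a chosen fraction. The function name, the 10% threshold, and the feature names are assumptions for illustration, and a real check would likely compare full distributions rather than means.

```python
from statistics import mean


def feature_skew(training_rows, serving_rows, threshold=0.1):
    """Return {feature: relative mean shift} for features that shift beyond threshold."""
    flagged = {}
    for name in training_rows[0]:
        train_mean = mean(row[name] for row in training_rows)
        serve_mean = mean(row[name] for row in serving_rows)
        scale = max(abs(train_mean), 1e-12)  # avoid dividing by zero
        shift = abs(serve_mean - train_mean) / scale
        if shift > threshold:
            flagged[name] = shift
    return flagged


# Hypothetical feature rows; "age_days" looks like it is computed differently online.
training_rows = [
    {"amount": 10.0, "age_days": 100.0},
    {"amount": 30.0, "age_days": 300.0},
]
serving_rows = [
    {"amount": 11.0, "age_days": 3.0},
    {"amount": 29.0, "age_days": 5.0},
]

flagged = feature_skew(training_rows, serving_rows)
for name, shift in flagged.items():
    print(f"possible training/serving skew in '{name}': mean shifted by {shift:.0%}")
```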

My preferred approach for managing AI/ML engineering is to use conventional agile techniques, with caveats around the co-ordination with science. It is well-known that AI/ML systems are very hard to build. There will always be technology, architecture, and cost limitations that require careful tradeoffs. In addition, these systems require high levels of numerical precision, as even minor differences can prevent them from working properly. This can rapidly lead to frustration, delays, and finger-pointing! As normal, focus on addressing the biggest pain points and finding workable compromises for the rest.

MANAGING EXPECTATIONS

Thus far, I have focused on the technical challenges of managing scientists and engineers to build AI/ML systems. However, your biggest challenge will be managing the perceptions and expectations of business and product leaders. The start of my career coincided with an explosion in popularity of the web, and I spent a lot of my time evangelizing the benefits of going online, while addressing concerns about hackers and viruses! We are now at a similar stage with AI/ML, where these systems are exploding in popularity, but there is a lot of misinformation on the capabilities and dangers. Your skill at managing the expectations of leadership will ultimately determine the success or failure of your systems.

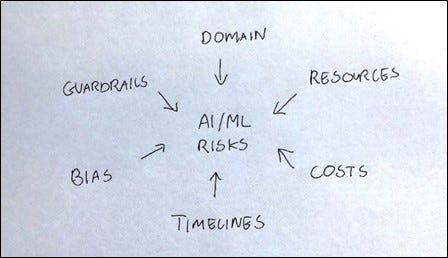

You will likely encounter a wide range of viewpoints, such as: 1) AI/ML is a magic bullet that can solve all business problems, 2) AI/ML is biased and will lead to PR/legal/financial issues, and 3) AI/ML is dangerous and will replace us all! This is not surprising, as even leading AI/ML practitioners disagree on the risks, and how close we are to achieving human intelligence. The use of AI/ML to solve business problems is still very new, and so a lot of effort is still needed to explain and justify the benefits. Nobody asks me about the advantages of going online anymore, and I expect eventually everyone will also know the benefits of AI/ML. Some specific challenges you will likely face are:

Application - Until recently, it has often been hard to get leadership buy-in for AI/ML solutions, given their unpredictable nature and high costs when compared with conventional software systems. However, with the recent surge in AI/ML popularity, the challenge is now to ensure these solutions are applied in ways that are appropriate, and have some chance of actually being successful!

Build or Buy - There are many open-source models and off-the-shelf AI/ML solutions that may do some or most of what you need. This can save significant time and effort, but they will not be customized for your data and domain. A lot of the effort of building an AI/ML solution is curating the training data, and you can generally only get the highest performance and accuracy by building your own systems.

Resources - AI/ML solutions are computationally expensive to build and operate as they require processing large volumes of data, and use expensive GPUs. The costs for experimentation can also approach the costs of running the production system. Justifying these costs can be difficult, but scrimping on resources or experimentation will effectively guarantee poor performance.

Timelines - AI/ML systems take a long time to build as they require research and experimentation cycles with frequent failures and dead-ends. My advice is to run parallel tracks, so you can deliver regular incremental improvements on one track, giving you the air cover to perform larger experiments on the other track.

Explainability - You may be asked why an AI/ML system is making specific decisions, which can be hard to justify as these systems often operate as a black-box. In general, the more complex the models, the harder it will be to explain why they generate specific outputs. My advice is to invest science effort in explainability techniques as a core activity, rather than attempting to do this afterwards.

Guardrails - AI/ML systems are probabilistic in nature, which means they will often generate incorrect outputs. This is expected behavior, and so it is necessary to implement appropriate guardrails to prevent adverse behaviors. One approach is to have humans audit a sample of the outputs of the system. This can give you a measure of the quality of the system, and provide a feedback loop to improve the AI/ML system.
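The human audit described under Guardrails can be sketched as two steps: draw a random sample of outputs for reviewers, then turn the number of errors they find into an estimated error rate with a confidence interval. The sketch below uses the Wilson score interval, which is one common choice; the function names, sample size, and error count are hypothetical.

```python
import math
import random


def sample_for_audit(output_ids, sample_size, seed=0):
    """Pick a reproducible random sample of outputs for human review."""
    rng = random.Random(seed)
    return rng.sample(output_ids, sample_size)


def wilson_interval(errors, sample_size, z=1.96):
    """Approximate 95% confidence interval for the true error rate (Wilson score)."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    p = errors / sample_size
    denom = 1 + z * z / sample_size
    centre = (p + z * z / (2 * sample_size)) / denom
    half_width = z * math.sqrt(
        p * (1 - p) / sample_size + z * z / (4 * sample_size ** 2)
    ) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical audit: reviewers check 200 of 10,000 outputs and find 12 errors.
output_ids = list(range(10_000))
audit_sample = sample_for_audit(output_ids, 200)
low, high = wilson_interval(errors=12, sample_size=len(audit_sample))
print(f"estimated error rate: 6.0% (95% interval {low:.1%} to {high:.1%})")
```

The width of the interval gives leadership a sense of how much to trust the quality estimate, and the audited errors themselves can be fed back as new training or evaluation examples.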

For me, the largest benefit of using AI/ML in a business context is scale. One of the first successful deployments of AI/ML was to recognize handwritten zip-codes, which has enabled the postal system to scale. Consider the current difficulties completing your tax return, obtaining a mortgage, getting customer support, buying a car, getting a travel visa, changing your internet provider, and updating your travel plans. The existing human processes are woefully inefficient and are not likely to improve. My opinion is that AI/ML will not replace humans, but will augment human processes and help us make decisions more efficiently. Companies that leverage AI/ML effectively will be able to realize much greater scale and improvement in the customer experience.

FINAL WORDS

AI/ML systems have high complexity, due to unique science and engineering challenges that are not present in conventional software systems. I have now outlined the key differences and some of the management challenges you will likely face. However, I have only scratched the surface here, and there are certainly many risks and scenarios that I have not covered or solved. Despite the difficulties, AI/ML systems are capable of solving problems that are far beyond the capabilities of conventional software systems, and the outcome of successful AI/ML deployment can be both personally and financially rewarding!

In the early days of computing there was a “Software Crisis”, where software could not be built fast or reliably enough to meet demand. I predict an upcoming “AI/ML Crisis” as we struggle to increase the speed and accuracy of these systems. For this reason, I believe it is imperative to develop and improve the management techniques for AI/ML so that we can be ready to meet these challenges.

[Please note that all opinions expressed in this article are entirely the view of the author, and in no way represent the views of Amazon.]

