Prompt Engineering will change the world. Just not in the way you think [Thoughts]

How people have completely missed the benefits of this idea and its impact

Hey, it’s Devansh 👋👋

Thoughts is a series on AI Made Simple. In issues of Thoughts, I will share interesting developments/debates in AI, go over my first impressions, and their implications. These will not be as technically deep as my usual ML research/concept breakdowns. Ideas that stand out a lot will then be expanded into further breakdowns. These posts are meant to invite discussions and ideas, so don’t be shy about sharing what you think.

Strap your belt in kiddos, because this is going to be controversial.

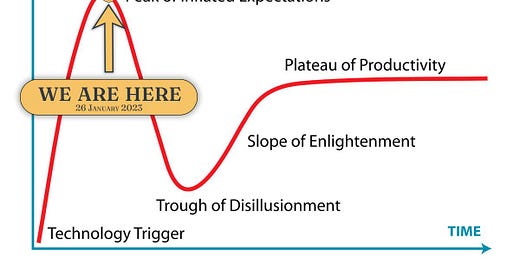

Prompt Engineering is one of those topics that everyone has very strong opinions on. On one hand, we have ‘AI Consultants’ on LinkedIn who get their knowledge of AI and Machine Learning from McKinsey reports and IG Reels, claiming that Prompt Engineering is a million-dollar skill that will allow you to rake in heaps of money and set up businesses on your own. On the other hand, we have Machine Learning experts who have expressed shock at the 6-figure salaries and hype for prompt engineers. They see PE as another instance of market hype and misunderstanding- completely undeserving of the ‘revolutionary new skill’ moniker that people are tacking onto it.

In this article, I’m about to present you with a third perspective. Leveraged correctly, Prompt Engineering is going to change the world. It has created an opportunity that would have been unthinkable before, and its implications will resonate widely. Just not in the way that people are making it out to be. I believe that most people have misunderstood PE and its true utility. I will go over what people get wrong about Prompt Engineering, where it will be truly useful, and how it can be used to enable safer and fairer AI. Used correctly, Prompt Engineering will cause huge leaps in AI Safety; I will explain how we can achieve that.

The Myth of Prompt Engineering

Let me get this out of the way- Prompt Engineering is not a million-dollar skill. No company should be paying high costs for a specialized Prompt Engineer. You will not magically become a doctor, software engineer, lawyer or what have you by mastering this skill. Here’s why.

At its core, Prompt Engineering offers something simple but powerful- through the correct permutation of characters, we can influence the model outputs to get whatever results we need, in a way that would surpass traditional experts (at least in terms of ROI). Let us for a second assume we have the perfect training data- data that contains all the world’s information structured in the best possible way powering the Language Model. So who is best suited to leverage this magic AI? Would it be-

Someone who writes very good prompts

Someone with domain expertise

The latter is much more meaningful to any organization. Not only could they create the most detailed and specific prompts, but they would have the ability to assess the outputs and modify them as needed. At best, a pure prompt engineer would act as a junior engineer- someone who could implement small-scale specific features under detailed instructions from someone with the domain expertise required for the tasks in the first place (but will struggle with adapting any inputs). Hardly a job that will command millions or allow you to build up businesses alone.

Most people gassing up Prompt Engineering fall into 2 camps- Media Folk with an economic incentive to sensationalize everything (we covered that here) or non-experts who don’t appreciate the difficulty of these underlying tasks (take Geoff Hinton’s fallacy, which I’ve talked about a few times). At best, Prompt Engineering can give you the how of solutions, but without any expertise/domain knowledge- you won’t have the judgement to know when to use what. This is the same problem with many coding bootcamps/courses- they might teach you a particular technology or framework, but many don’t teach you the underlying principles that allow you to use these techniques effectively. Unless you develop that judgement, you will not be able to work on these challenges effectively. Domain Knowledge can’t be replaced with prompts.

Keep in mind, we’re talking about the best-case scenario- with a perfect dataset and a model that can synthesize everything perfectly. Reality is very, very different. In reality, these models will make a lot of mistakes, which makes the entire process a lot more challenging. A Substack bestseller and tech expert has a great piece on AI Coding over here. Most relevant to us are his experiences with debugging the generated code:

Debugging the code required me to study the openai and telegraf libraries, undoing 90% of the benefit of using the AI in the first place.

Debugging was surprisingly hard, because these were not the kind of mistakes a normal person makes. When we debug, our brain is wired for looking at things we are more likely to get wrong. When you debug AI code, instead, literally anything can be wrong (maybe over time we will figure out the most common AI mistakes), which makes the work harder. In this case, AI completely made a method up — which is not something people usually do.

Imagine having to do that without understanding the underlying domain. How do you plan to dig through the documentation, rank different approaches for building, evaluate the different outputs, and go through the underlying source information without knowing anything about the field?

So far, I’ve been pretty dismissive of Prompt Engineering. That’s for a good reason. My IG and LinkedIn have been filled with gurus offering Prompt Engineering courses for money, and I want to warn you against that. They are all preying on your ignorance and hunger for upskilling. Prompt Engineering is not some super secret skill that you need a special course for. Most articles/content that tell you how to be better than 99% of people who use ChatGPT are cookie-cutter nonsense.

The people paying for prompt engineers are all involved with the hype. Some are trying to generate it: if Anthropic hires someone for 200K USD to write prompts, and puts out prompt engineering materials, then people will flock heavily towards this. They will ultimately use Anthropic tools to try and cash in- which leads to increased sales for Anthropic. Then we have people who get affected by the hype, who look at all the publicity generated by the first type and then follow the ‘trend’ without understanding the incentives. Most influencers pitching you Prompt Engineering as the next big thing are being paid to sell you the dream, either directly (these Gen AI companies put a lot of time into influencer outreach) or indirectly (trying to get in on the hype).

So then why did I say that Prompt Engineering will change the world? If Prompt Engineering is a product of Hype, then what benefits will it bring? How do we square that circle? Simple- buy my 1000 USD prompt engineering course. My prompt engineering course is special- because it will also contain a dedicated section on how you can use the prompt engineering skills from this course to create your own course and sell that to people. We also have a very comprehensive refund policy- should you want a refund, all you need to do is write a 50,000 word essay about why this course didn’t help you and deliver it in person to our Mt. Everest office.

Now some of you are not sigma males like me- so you’d be hesitant to invest into assets, mentors, and yourself. Worry not. The next section is for you. Prompt Engineering is going to be a game-changer for AI Safety and bias research- even if you don’t buy my magic courses.

We’re all AI Safety Researchers.

Prompt Engineering might not be a 6-figure skill- but that doesn’t mean it’s not a skill that businesses should be putting 6 figures into. Sound weird? Let’s take a step back and look at the big picture- Prompt Engineering enables everyone to interact with a Language Model, and more importantly, to interact with its underlying dataset. Let that sink in. For years now, we have been trying to figure out ways to study bias in AI and Datasets. However…

The problem of studying AI Bias has had a delicious irony- the kind of people who would be into studying bias are themselves a very particular group, introducing strong sample selection bias into these studies.

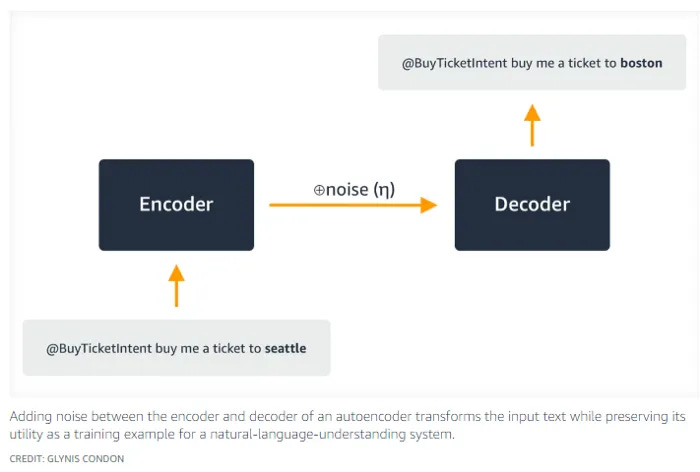

This is among the reasons why so many ‘Fair AI’ and ‘Robust ML Agents’ face-plant magnificently when they’re rolled out to the wild world of production AI. By and large, the most effective way to fight bias has been the use of Data Augmentation (or generally Noise Injection during training), in order to better simulate the chaos of the real world. By injecting noise, we force our models to not overfit- which helps them avoid learning the biases/noise in our input training data. Ensembles have worked very well for a similar reason- since they can sample a more diverse search space for the final results.
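To make the noise-injection idea a bit more concrete, here is a minimal sketch. It assumes your training samples are plain numeric feature vectors and that zero-mean Gaussian noise is an acceptable augmentation for them. The function name `augment_with_noise` and the parameters `sigma` and `copies` are made up for illustration and do not come from any library.

```python
import random

def augment_with_noise(samples, sigma=0.1, copies=2, seed=0):
    """Return the original samples plus `copies` noisy variants of each.

    samples: list of (features, label) pairs, where features is a list of floats.
    sigma:   standard deviation of the zero-mean Gaussian noise (an assumption;
             tune it to the scale of your features).
    """
    rng = random.Random(seed)   # seeded so runs are repeatable
    augmented = list(samples)   # keep the clean originals
    for features, label in samples:
        for _ in range(copies):
            noisy = [x + rng.gauss(0.0, sigma) for x in features]
            augmented.append((noisy, label))  # the label is left unchanged
    return augmented

data = [([1.0, 2.0], "a"), ([3.0, 4.0], "b")]
bigger = augment_with_noise(data, sigma=0.05, copies=3)
print(len(bigger))  # 2 originals + 2 * 3 noisy copies = 8
```

The model now sees several slightly different versions of every example, so it has a harder time memorizing quirks of any single one. Note that this only changes how the model trains; it says nothing about what is in the data itself.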

Notice that this doesn’t tell us much about the underlying data, it just helps us better work through solutions. So where does Prompt Engineering fit into this? Simply put, PE allows everyone to interface with the model and its datasets. Through models like GPT- we have indexed large sections of the data on the internet. People can investigate these sections and for once we can come close to understanding the inherent biases in our datasets in the way that they will be reflected in the real world. Prompt Engineering allows us to not only study what biases persist in our datasets, but also what AI Models find more salient when parsing through the indexed data. This is a double whammy- allowing us to study both the data and the models. That is a massive W for AI Safety, Observable ML, and more. Let the potential for that sink in.

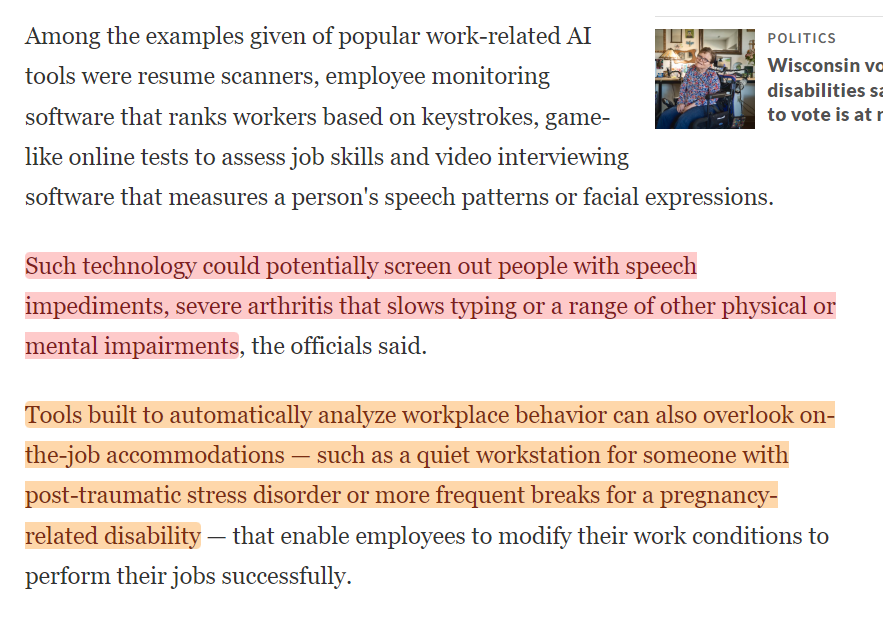

The implications for this on a macro-societal scale are clear- we can now study the bias present on the internet and how that influences our perceptions (look at the image above for a very jarring example). But the best part about this is that this approach is very malleable. It is very feasible to build your own LLM, indexed on your own source documents, to build chatbots that can answer questions on specialized domains w/o hallucinations (my company, SVAM, does this for our clients). Now say that indexed data was company communications, policies, and other internal documents. Suddenly you have the tools to study the biases in these company communications and take concrete steps in making your organization more inclusive.

If you’re worried about the costs of training custom LLMs, you can significantly reduce costs by switching from the traditional pretrained setup to one based on retrieval (as shown below). That way, your additional costs are only in reindexing new data, instead of completely retraining from scratch (it also allows you to cite original sources, which is a boon for verification). That’s what we’ve done, and results are very promising. Below is a good illustration for a starting example that you can try out (if you’re looking for more details, you can always reach out to me).
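For a rough feel of why the retrieval setup is cheaper, here is a toy sketch. It is not how SVAM or any production system does it: it assumes a tiny in-memory index scored by simple word overlap (a real system would more likely use embeddings), and the names `TinyIndex` and `build_prompt` are hypothetical. The point is only that new documents go into the index, not into the model's weights, and that every retrieved passage carries an id you can cite.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

class TinyIndex:
    """Toy in-memory index scored by word overlap (illustrative only)."""

    def __init__(self):
        self.texts = {}   # doc_id -> original text
        self.counts = {}  # doc_id -> Counter of tokens

    def add(self, doc_id, text):
        # Adding or updating a document only touches the index, never the model.
        self.texts[doc_id] = text
        self.counts[doc_id] = Counter(tokenize(text))

    def search(self, query, k=2):
        q = Counter(tokenize(query))
        scored = []
        for doc_id, counts in self.counts.items():
            overlap = sum(min(q[t], counts[t]) for t in q)
            if overlap:
                scored.append((overlap, doc_id))
        scored.sort(key=lambda s: (-s[0], s[1]))  # best overlap first
        return [doc_id for _, doc_id in scored[:k]]

def build_prompt(index, question, k=2):
    """Assemble a prompt that carries the retrieved sources and their ids."""
    hits = index.search(question, k)
    context = "\n".join(f"[{d}] {index.texts[d]}" for d in hits)
    return (
        "Answer using only these sources and cite their ids.\n"
        f"{context}\nQuestion: {question}"
    )

index = TinyIndex()
index.add("policy-1", "Remote work requests need manager approval.")
index.add("policy-2", "Expense reports are due at the end of each month.")
prompt = build_prompt(index, "Who approves remote work?")
print(prompt)
```

The resulting prompt would then be sent to whichever language model you use. When a policy changes, you call `add` again with the same id and the next answer is grounded in the updated text.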

Instead of burning heaps of money hiring one specialized prompt engineer- spend that money hiring a diverse set of testers who help you test these systems for biases. A while back, I wrote an article on biases and how they differed in humans vs AI. Towards the end, I touched upon how misunderstanding these biases leads to really dangerous and exclusionary ideas- like implementing GPT for evaluating candidates/employees. Here is the part where I talked about how using GPT/other standard LLMs for these tasks would create very exclusionary hiring/working environments-

Prompt Engineering with a diverse team can be a first step into investigating whether these hiring practices are already exclusionary. For example, we could run the resume of a neurodivergent person through the AI and ask it to score it, to see if neurodivergent candidates are being unfairly penalized. This might seem like reaching to some of you, but AI-enabled discrimination is a very real phenomenon that people have conveniently overlooked.
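One way such a check could be structured is as a counterfactual test: keep the resume identical, change a single detail, and compare the scores. The sketch below is an assumption-heavy illustration, not an established protocol. `stub_score` is a deliberately biased stand-in for "ask the model to score this resume", and `audit`, `make_variants`, and the `tolerance` threshold are hypothetical names and values.

```python
def make_variants(template, attributes):
    """Fill one resume template with each attribute text, changing nothing else."""
    return {
        label: template.format(attribute=text)
        for label, text in attributes.items()
    }

def audit(score_fn, template, attributes, tolerance=0.5):
    """Score every variant and flag the run if the score gap exceeds tolerance."""
    variants = make_variants(template, attributes)
    scores = {label: score_fn(text) for label, text in variants.items()}
    gap = max(scores.values()) - min(scores.values())
    return scores, gap, gap > tolerance

def stub_score(resume_text):
    # Stand-in for a real model call (assumption). It is biased on purpose
    # so that the audit has something to catch.
    return 6.0 if "ADHD" in resume_text else 8.0

template = "Software engineer, 5 years of Python. {attribute}"
attributes = {
    "baseline": "",
    "discloses ADHD": "Member of an ADHD peer-support group.",
}
scores, gap, flagged = audit(stub_score, template, attributes)
print(scores, gap, flagged)
```

With a real model, scores will vary from run to run, so you would want to repeat each variant several times and compare averages before drawing conclusions. This is also where a diverse team matters: they are the ones who know which details are worth varying.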

Coming up with effective protocols for Prompt Engineering and its application in studying AI/Data bias will be a challenge, and will require significant investment. But the returns will be well worth it. I’m not a huge regulations person, but I do believe something is required here. With all the talk about making millions with AI, it seems like many people have overlooked something fundamental- these tools (and technology in general) should exist to make human lives better, not just extract the most possible value for shareholders while making everyone else’s lives worse.

Is that too controversial? I’d love to hear from you.

If any of you would like to work on this topic, feel free to reach out to me. If you’re looking for AI Consultancy, Software Engineering implementation, or more- my company, SVAM, helps clients in many ways: application development, strategy consulting, and staffing. Feel free to reach out and share your needs, and we can work something out.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

Appreciate this fresh angle on Prompt Engineering and what people misunderstand about it.

It's very helpful for creating a safer and fairer AI.

Prompt engineering course-sellers are like the pick-and-shovel sellers during the California Gold Rush of the 19th century: they're the first ones to get rich, and everyone else who comes later is going to have a LOT more competition.