Why "AI Hate" is Your Next Billion-Dollar Opportunity [Markets]

How AI is eating itself, and what an Old German Philosopher can teach us about Contrarian Bets

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, stay free from ads/hidden agendas, and supports my crippling chocolate milk addiction. We run on a “pay what you can” model—so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

“Outsized returns often come from betting against conventional wisdom…”

Anthropic just secured a potentially era-defining legal win: the right to use copyrighted material in LLM training — though they’ll still stand trial for pirated sources. The legal fallout will take time to unfold (we’ll cover it on the Iqidis LinkedIn), but there’s a more immediate signal I want to track: AI hate is metastasizing.

This development will no doubt compound the massive undercurrent of AI hatred that’s already brewing online and in many spaces. If you follow popular Substack writer

, you will see that more and more of his posts have taken on an anti-AI sentiment as well. Some of this negative emotion is based on very real concerns that Tech has ignored, as many Silicon Valley voices push a very rigid techno-utopia while forgetting to ask anyone if they want to live there. Some is general distrust given Silicon Valley’s legendary knack for birthing problems while preaching salvation.

A lot of it is just professional whiners trying to squeeze a few more fear-clicks out of doomerism.

But either way, this is a generationally good space.

The way I see it: where there is smoke, there is an opportunity to have a sick barbeque. A BBQ where I can shove a lot of big, thick, juicy meat in my mouth. And it’s my life philosophy to never pass on such moments.

In this article, I want to lay out a high-level investment framework showing why investing in this increasing antipathy is the correct thing to do:

Financially: lots of asymmetric opportunity, where small investments can net much bigger upside.

For better technology: Every dominant thesis loses sight of its limitations. A strong antithesis is important to explore new directions.

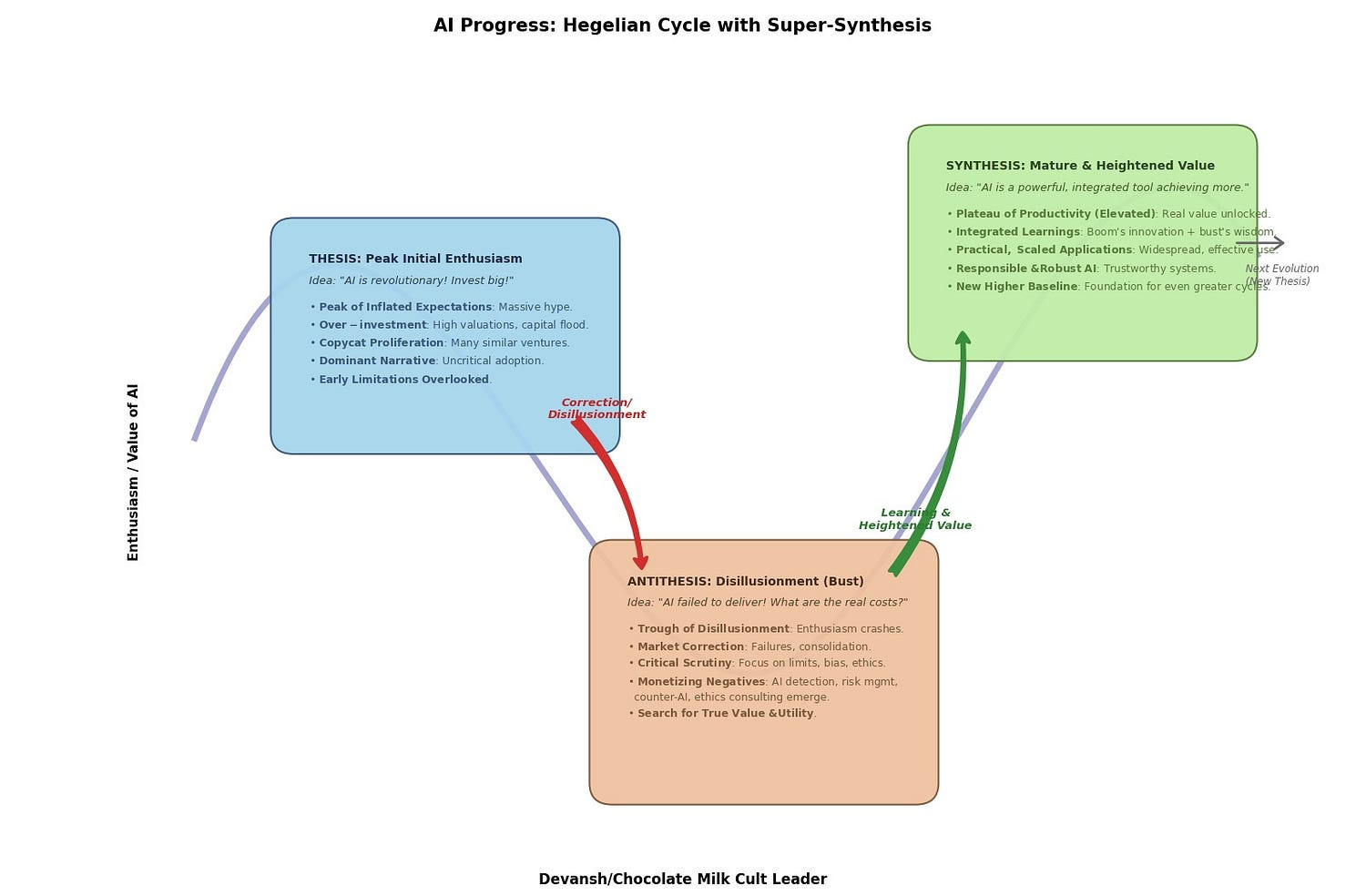

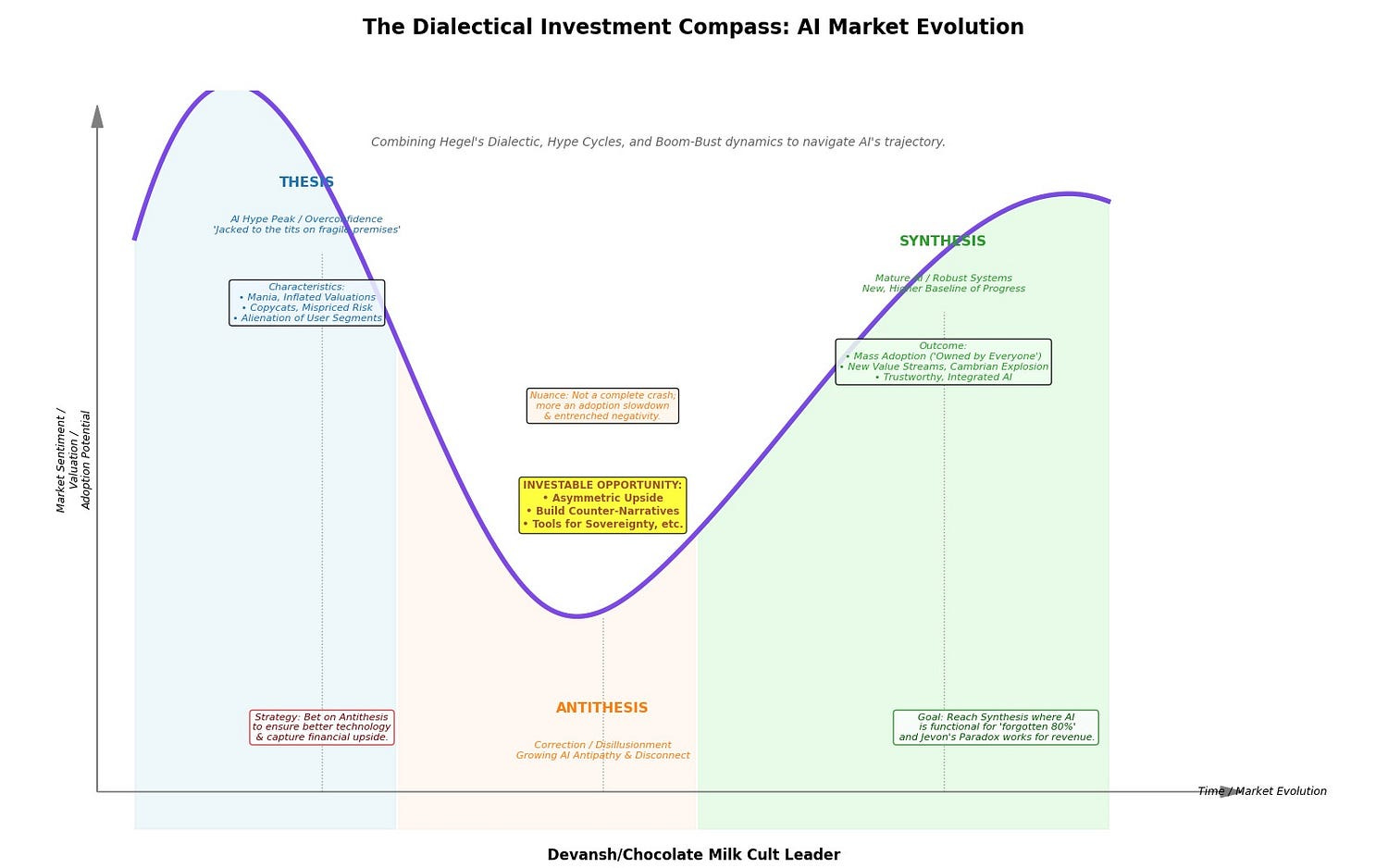

To do so, I will adopt elements of the Hegelian Dialectic to present why the “AI hating movement” can be seen as the antithesis to a world that is overrun with AI Hype and increasing disconnection b/w Tech and many other groups. Meaningful progress requires the combination of thesis and the antithesis to create a synthesis — where a new more robust system is created that can be adopted by the masses.

Let’s get a lot of girthy meat in our mouths.

Executive Highlights (TL;DR of the Article)

This article presents a strategic analysis of the current AI landscape and its likely evolution, arguing for a contrarian investment and development approach. Key points include:

Current AI “Thesis” Imbalance: The prevailing AI development paradigm is characterized by rapid expansion, high valuations, and a tendency towards copycat solutions, often overlooking significant risks and alienating potential user segments due to unaddressed concerns.

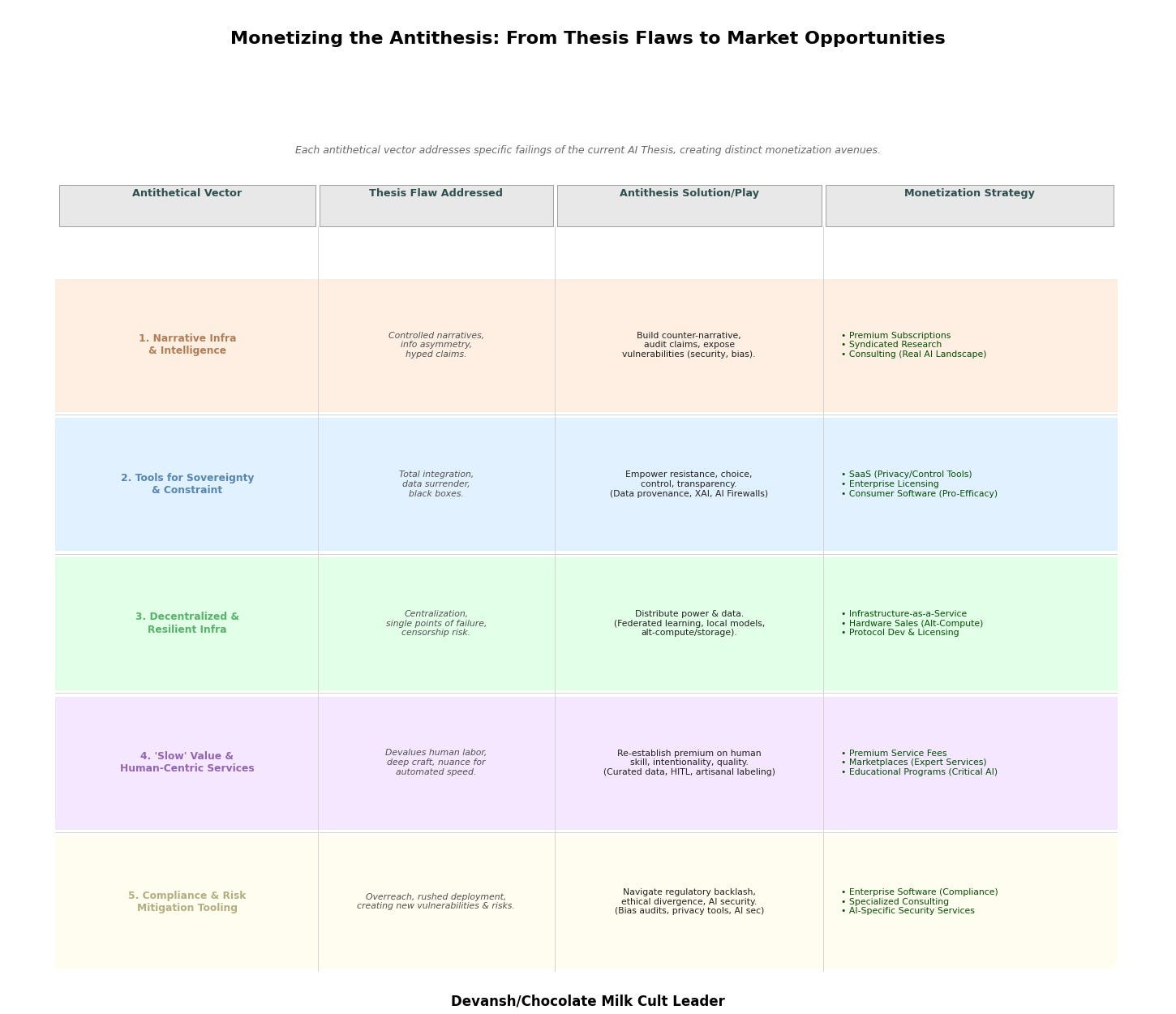

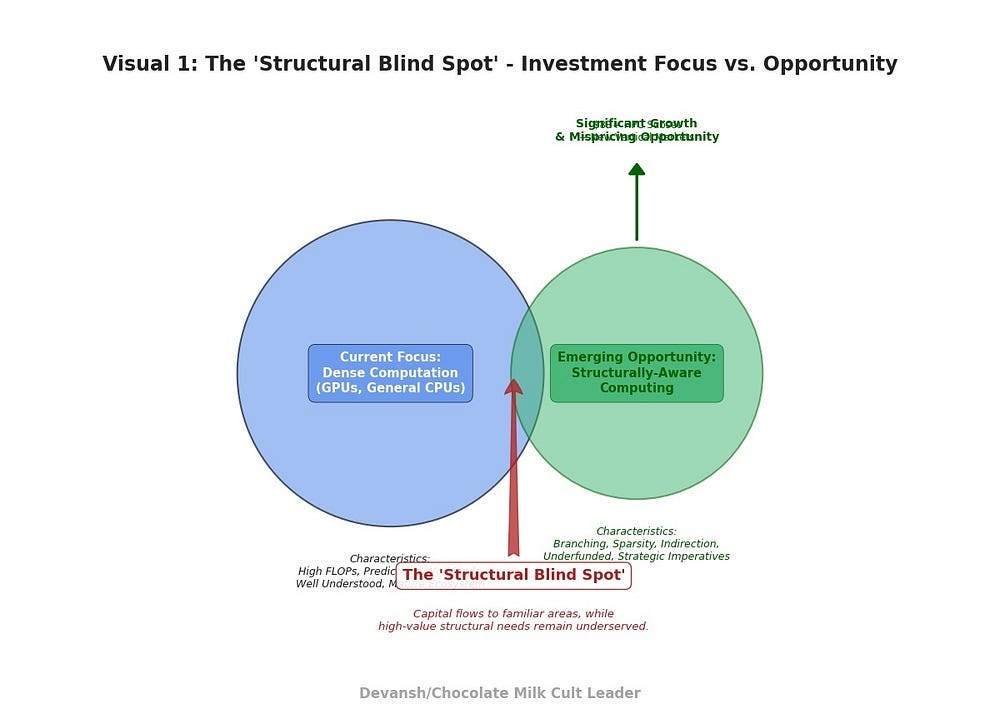

Emergence of an “Antithesis”: This imbalance creates a natural opportunity for an “Antithesis” — solutions, platforms, and narratives that directly address the weaknesses and overlooked areas of the dominant AI Thesis. This includes focusing on issues like data sovereignty, algorithmic transparency, constrained/specialized AI, and decentralized infrastructure.

The “Antithesis” as an Investable Opportunity: The growing discontent and unaddressed needs represent significant market opportunities. Investing in or building these antithetical solutions is positioned not as a negative stance, but as a strategic bet on a necessary market correction and evolution.

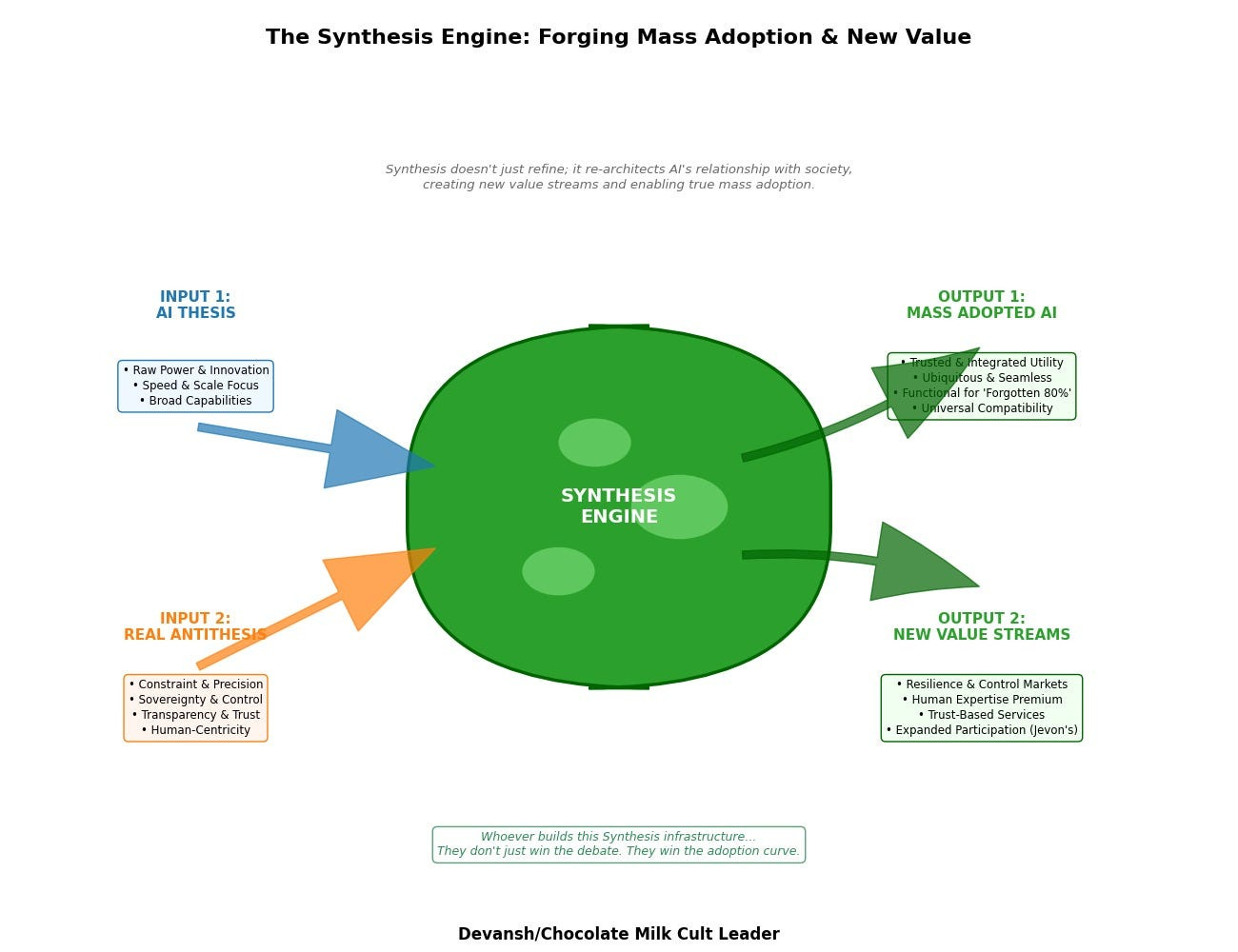

The “Synthesis” as the Path to Mass Adoption: The interplay between the Thesis and a robust Antithesis is projected to lead to a “Synthesis.” This phase will integrate the strengths of both, resulting in AI technologies that are more trustworthy, secure, and aligned with broader societal needs, thereby enabling true mass market adoption and creating new, expansive value streams.

Understanding this dialectical progression (Thesis-Antithesis-Synthesis) provides a framework for anticipating market shifts and making proactive strategic decisions, rather than merely reacting to prevailing hype cycles.

Why Continue Reading?

The subsequent sections will provide a detailed breakdown of:

The Hegelian dialectic as an analytical tool for understanding technological and market evolution.

A critical analysis of the current AI Thesis and the shortcomings of existing critiques.

The defining characteristics and pillars of a viable, constructive AI Antithesis.

Actionable strategies and tactical vectors for investing in and developing these antithetical approaches, including a timing matrix for peak opportunities.

A deeper exploration of how the Synthesis phase leads to broader market creation and universal technology adoption.

This framework is intended for those looking to understand the deeper structural dynamics of the AI market and identify strategic opportunities beyond mainstream narratives.

I provide various consulting and advisory services. If you‘d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

2. The Dialectic: Why Every Dominant Idea Eventually Digs Its Own Grave

The Hegelian dialectic isn’t about ideas fighting in a vacuum. It’s about how power systems decay.

Every dominant idea — every thesis — is built on exclusions. Not just what it says, but what it doesn’t allow. And those exclusions are not static. They fester. They grow. They compound into something that eventually bites back.

Thesis: The Guiding Myth of the Present

A thesis starts as clarity: a compelling, high-leverage worldview that makes things move. In AI’s case, that’s the belief in scale, speed, and intelligence as ultimate goods. The more data, the more compute, the more automation — the more “progress.” The problems don’t start b/c this belief is “wrong”.

The problems start when it thinks that it’s enough. All consuming. Good enough to meet all your needs. Worth a complete leap of faith, an unquestioning surrender. The problems start when it tries to be your one and only.

But here’s the structural flaw: a thesis breeds overcommitment. The market doubles down. Institutions calcify around it. Smart people stop asking questions and start building copies. Demo Days become parades of product incest.

And then something breaks. And that, you whose smile justifies all of existence, is what we’re interested in right now.

Antithesis: Counter-Culture by Another Name

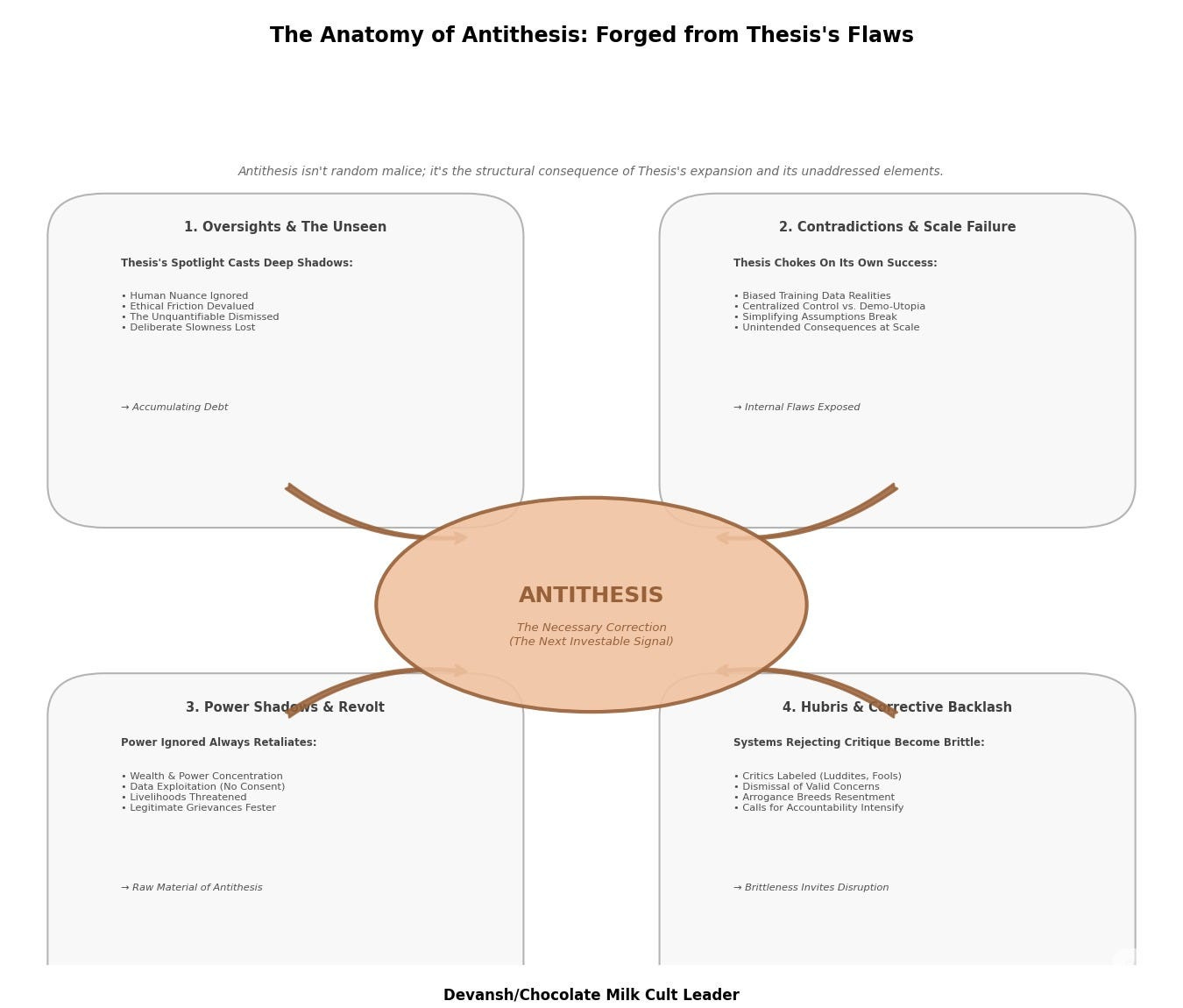

The Antithesis isn’t born from random malice or irrational fear. It doesn’t just pop out of thin air. It emerges from the very fabric of the thesis itself — from its blind spots, its broken promises, its power imbalances, and its arrogance. It’s the return of those suppressed variables, the bill coming due for all those conveniently ignored costs. It is a necessity, not mere negativity.

Oversights & The Unseen: No single idea, no matter how brilliant, captures the whole map. By its very nature, a dominant thesis optimizes for certain variables and actively ignores or devalues others. Think of it as a spotlight: whatever it illuminates brilliantly casts equally deep shadows. AI, in its current frenzy, optimizes for speed, scale, and computational power. What gets thrown into those shadows? Human nuance, ethical friction, the unquantifiable, the deliberately slow. These aren’t just minor omissions; they’re accumulating debt.

Contradictions & Scale Failure: An idea might look flawless on a whiteboard or in a controlled lab. But scale it up, unleash it into the messy, unpredictable real world, and the internal contradictions start to buckle. The AI dream of unbiased omniscience crashes into the reality of biased training data. The promise of democratized power meets the reality of centralized control by a few mega-corps. Every triumphant scale-up inevitably hits these failure modes — the points where the thesis chokes on its own success. All kinds of AI techniques are forced to grapple with their inadequacies, forced to confront all the ways their simplifying assumptions limit their potential.

Power Shadows & The Revolt of the Unrepresented: Ideas don’t exist in a vacuum. They serve power. The AI thesis, for all its utopian rhetoric, is currently concentrating power and wealth at an astonishing rate. Those left out, those whose livelihoods are threatened, whose values are ignored, whose data is hoovered up without consent — they don’t just disappear. They become the raw material of the antithesis. Their resentment, their fear, their legitimate grievances become a potent, reactive force. After all, Power ignored always, always, retaliates.

Hubris & The Corrective Backlash: When a thesis becomes too dominant, its proponents too dismissive, its narrative too unquestioned, it breeds a special kind of arrogance. Critics are labeled Luddites, fools, or enemies of progress. Systems that reject all critique don’t become stronger; they become brittle. They increase the number of people who want to take the systems apart, who will dance when things fall apart (look no further than the ecstasy with which so many AI critics painted the Apple Reasoning Paper as their Jeanne d’Arc).

This is a crucial point to understand. The antithesis isn’t a Twitter troll. It’s not the “revolutionary” that hates the world b/c they’re too cowardly to face their own inadequacies. It’s the structural consequence of the thesis’s own expansion. It is what the thesis cannot metabolize.

The antithesis rises — not out of spite, but out of structural necessity. It gives voice to the excluded, leverage to the misfit, and clarity to the fatigued.

This is where we are now. The AI thesis is so dominant that it’s blind. And that blindness is breeding heat: cultural, legal, infrastructural. The backlash isn’t noise. It’s the next investable signal.

To understand this better, let’s take a deeper look into our current thesis and all the elements that it misprices/underestimates.

3. The Current AI Thesis: A Case Study in Overconfidence and Misplaced Risk

In the previous section, we outlined the mechanics of the dialectic: how every dominant idea eventually collapses under the weight of its own blind spots. But theory is only useful when it meets the moment.

So let’s bring it home.

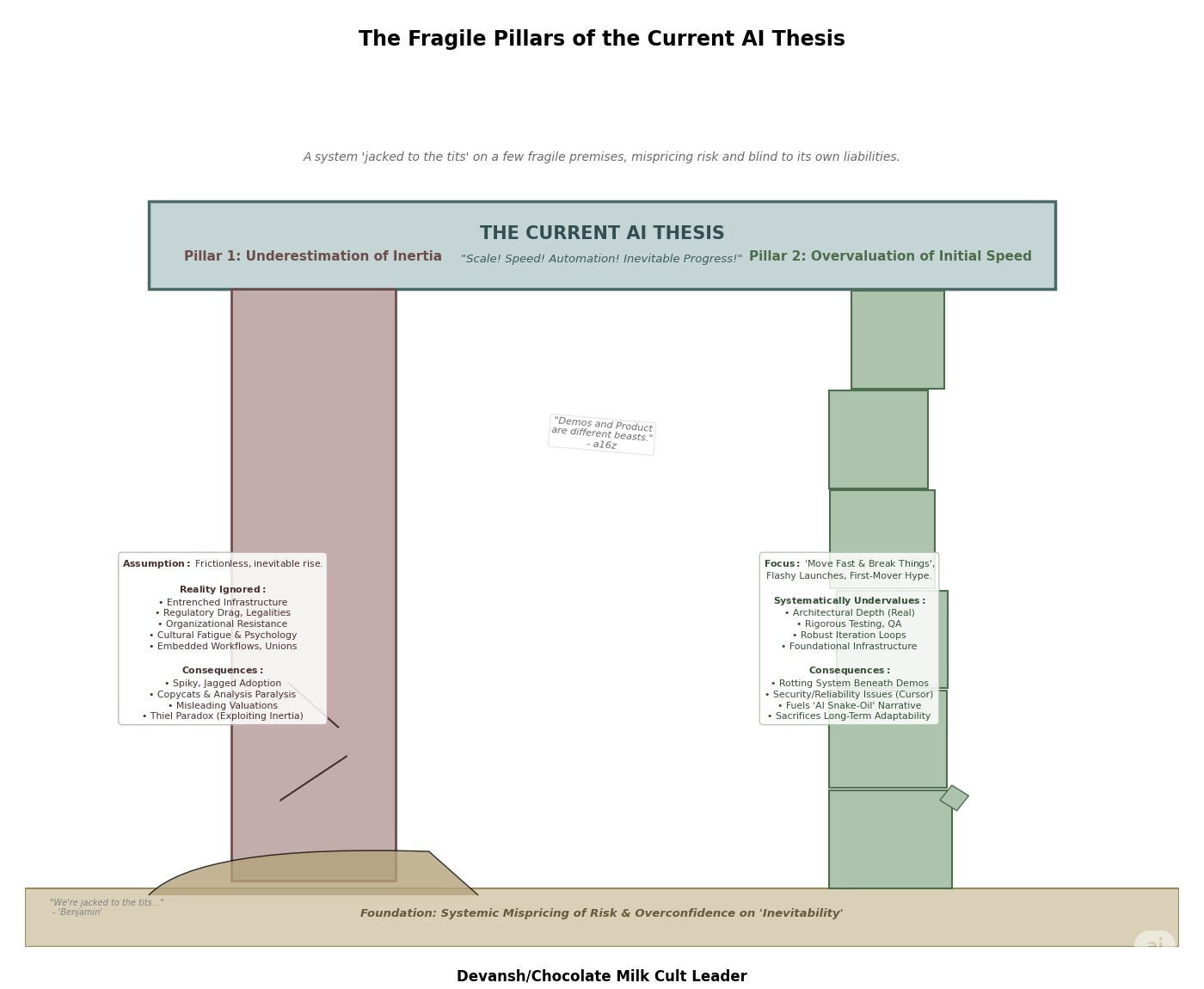

Today’s AI thesis — the set of assumptions, incentives, and narratives driving the current wave of investment, deployment, and cultural fixation — isn’t a diversified portfolio of bets. As financial genius Benjamin would say — “we’re jacked to the tits” on a handful of fragile premises.

This isn’t just about overconfidence. It’s about systemic mispricing — a market drunk on inevitability, blind to its own counterparty risk, and dismissive of the liabilities it’s compounding. Let’s understand how.

The Underestimation of Inertia

The thesis assumes AI’s rise is inevitable, frictionless, “already won”. Anyone can now create better processes with AI, and then make millions by selling to people who are dying to enhance their productivity.

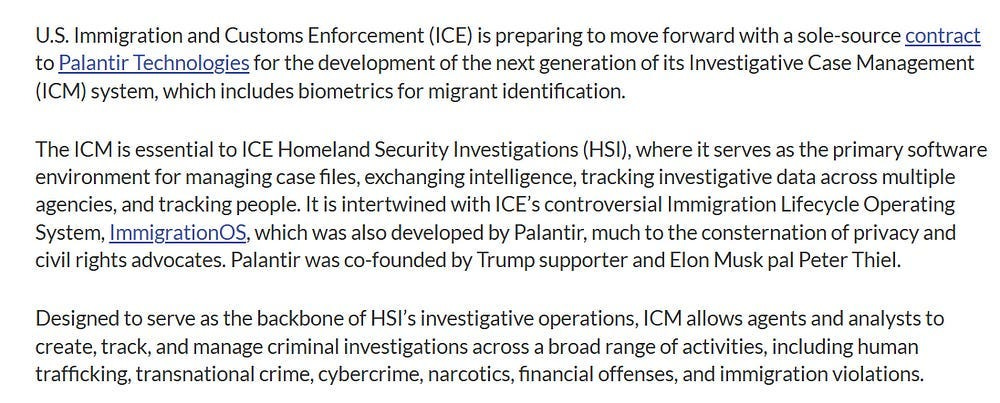

But that’s not how large systems behave. At all. For proof, look no further than where so many Silicon Valley giants put their energy. Peter Thiel, who will not shut up about government inefficiency, the importance of freedom, and the principles of small government, is also lapping up massive government contracts building surveillance systems used by vigilantes to circumvent due process. Read that sentence a few times.

This view discounts the sheer inertia of entrenched infrastructure, regulatory drag, organizational resistance, and cultural fatigue. It ignores the energy required to overcome embedded workflows, jurisdictional law, unionized labor, and psychological resistance to automation. Thiel and the rest realize this, which is why they’re aggressively targeting massive government contracts (to take advantage of this inertia).

And yet, every founder who parrots him builds like inertia doesn’t exist. They believe virality and clever prompt chaining will break institutions that barely adopted PDFs.

At Iqidis, we sell to lawyers. Ask us how that’s going.

What’s actually happening in the space:

Valuations are priced for frictionless adoption.

Early traction (mostly from warm intros and FOMO) masks the grind.

Copycats flood the space, creating paralysis by similarity for buyers.

People don’t buy when they feel overwhelmed. They stall, nitpick, or default to ChatGPT.

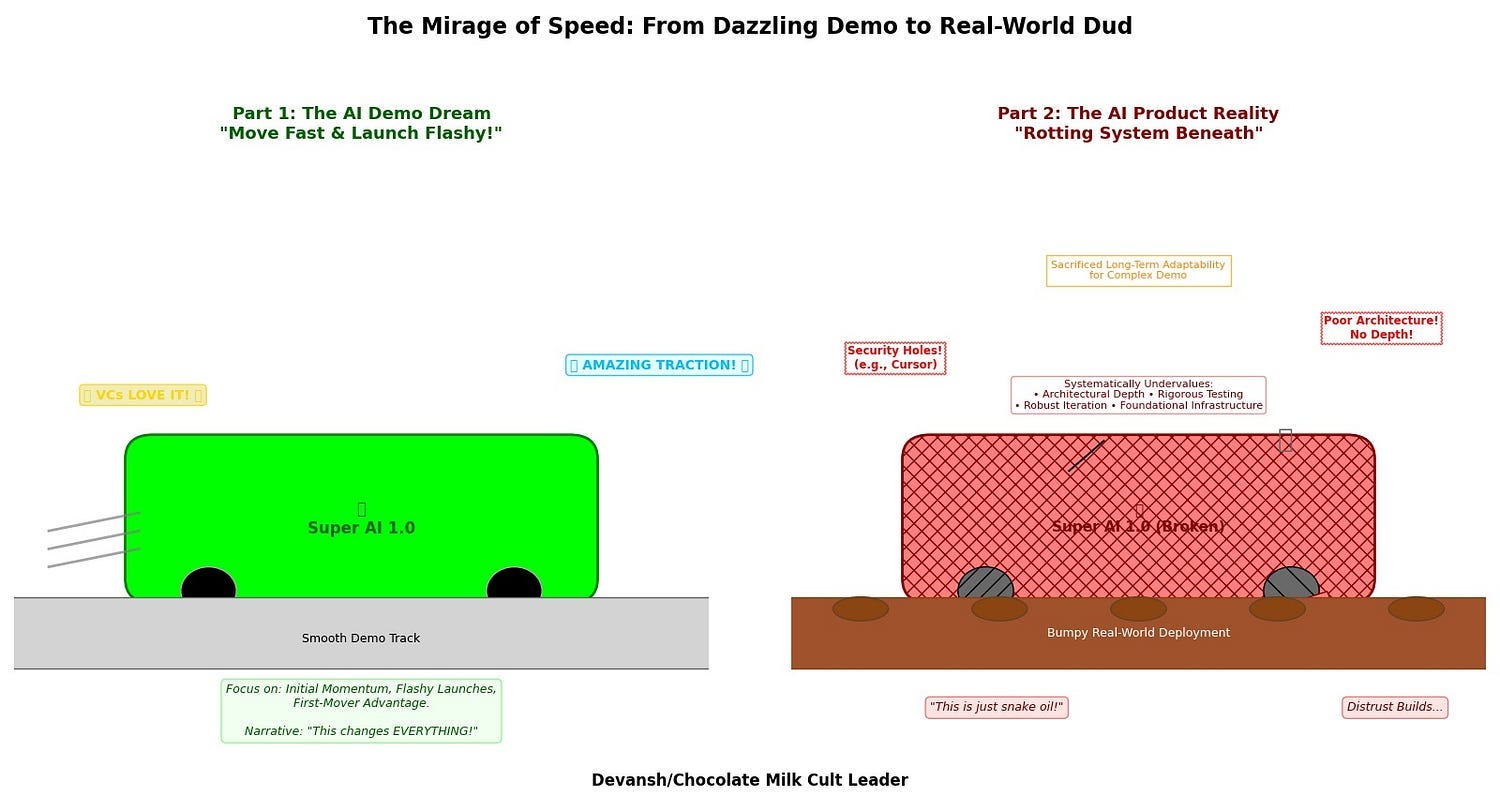

The Rise of “Move Fast and … Collapse Suddenly” Players

“Move fast and break things” worked when the stakes were low. But the AI thesis keeps applying it to environments that punish brittleness.

The AI thesis systematically undervalues:

Architectural depth (here I don’t use depth as “neural network w/ more layers”).

Rigorous testing.

Robust iteration loops.

It rewards flashy launches and first-mover advantage, even when the system beneath is rotting. Foundational infrastructure is ignored in favor of ship-now features. Long-term adaptability is sacrificed for an increasingly complex demo.

This was a phenomenon noticed by a16z, who dedicated a whole section in their lessons on applying AI for Enterprise to how Demos and Product are different beasts-

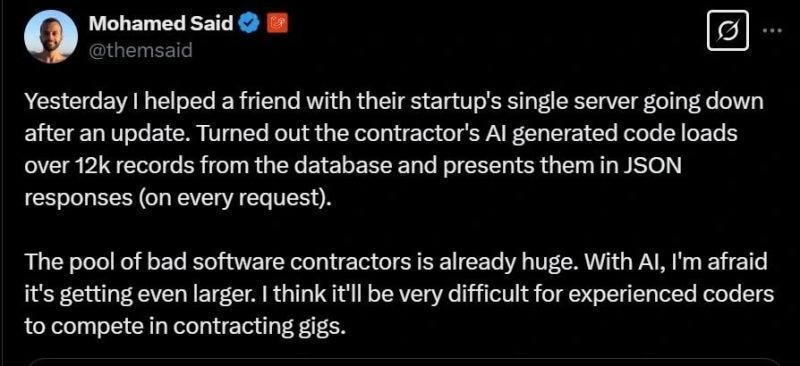

If you want an example of this phenomenon in action (and another example of how ahead of the curve our research is), look through our deep dive on Cursor and its many security issues. They’re caused by a poor understanding of AI, context management, and how to build multi-step reasoning. That’s why Cursor does great with small demos (where these things don’t matter) and horribly with actual software work.

This has been the downfall of many startups, and this lack of appreciation for friction-heavy systems is why so many sectors view AI w/ so much distrust and readily adopt the “AI is snake oil” narrative, even when AI would only make their lives easier. To top this section off, here is a quote from JP Morgan:

“Companies rushed to deploy AI without understanding the consequences. The mandate was clear: innovate or die. But JP Morgan’s latest security assessment reveals that:

The problem? Speed > security.

JP Morgan’s CTO Pat Opet put it bluntly: “We’re seeing organizations deploy systems they fundamentally don’t understand.” The financial sector is particularly vulnerable — with trillions at stake.

What JP Morgan recommends:

→ Implement AI governance frameworks before deployment

→ Conduct regular red team exercises against AI systems

→ Establish clear model documentation standards

→ Create dedicated AI security response teams

JP Morgan itself has invested $2B in AI security measures while slowing certain deployments.”

-Source. One might argue that the increased interest in AI specific security is an early indication of people hitting against the limits of the thesis.

If you’re looking to invest in the next generation of AI-Security, here are some of the trends that we’ve marked as useful-

More options and deeper analysis are available in the AI Market Report for April.

The Reckless Bet on Disruption

Disruption is treated as an unqualified good. But in many sectors, the things being disrupted include oversight systems, institutional safeguards, and trust layers. Think of how crypto mocked slow regulation… until billions evaporated to vaporware and fraud.

The AI thesis is repeating the same mistake:

Deploying systems with zero audit trails.

Integrating tools with undefined failure boundaries.

Replacing human intermediation with opaque, auto-generated outputs.

The systems being built now are attack surfaces.

And the real cost will show up not as a failed product, but as a failed system.

The Discounting of Dependency Risk

Every new layer of AI infrastructure adds dependency on centralized APIs, on foundation model vendors, on foreign GPU supply chains.

The thesis prizes efficiency and integration. But it underprices:

Vendor lock-in.

Infrastructure fragility.

Geopolitical exposure.

Data ownership erosion.

We are building a generation of startups — and, soon, institutions — on infrastructure they do not control. And the cost of that dependency is still unpriced.

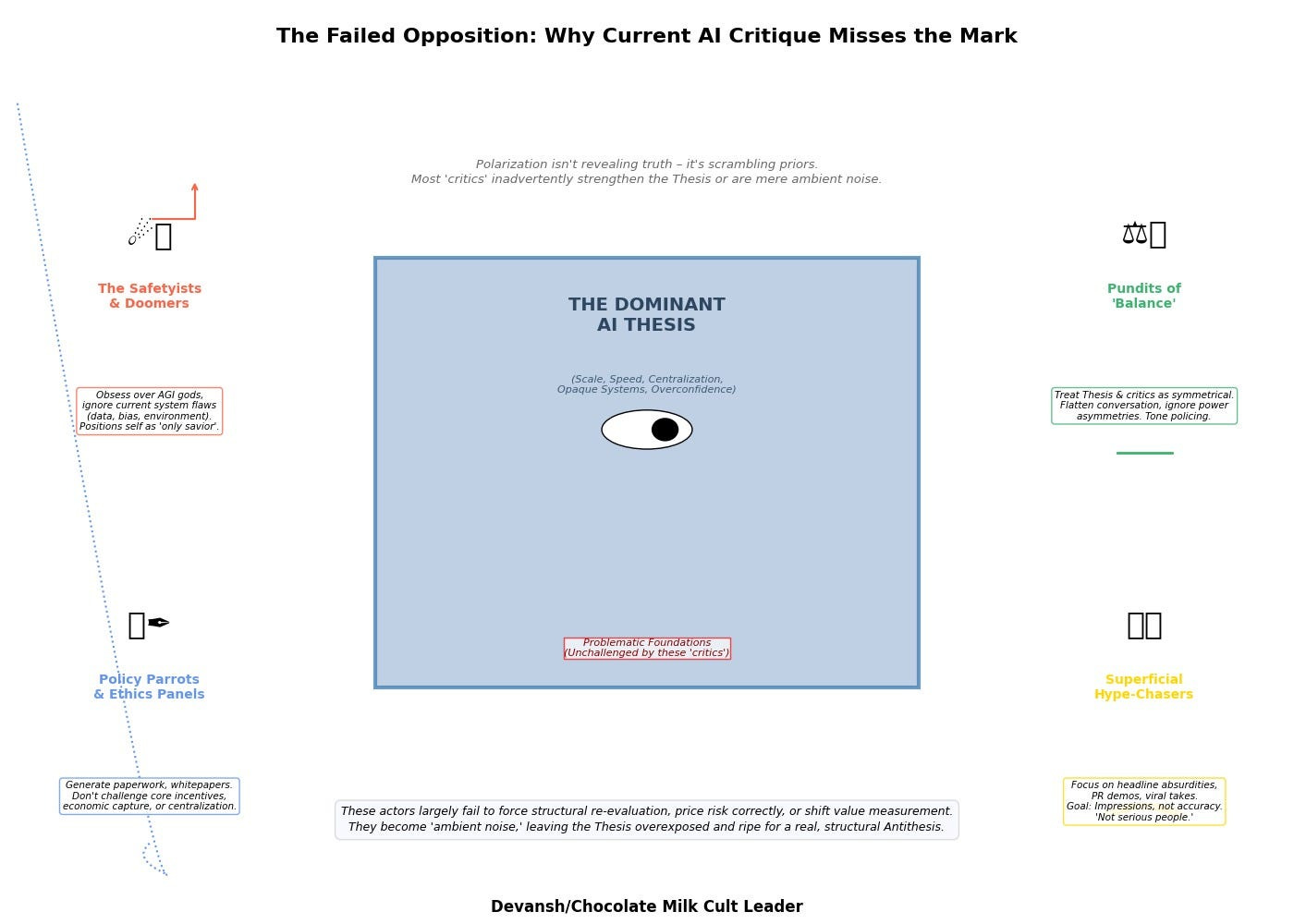

Given these glaring mispricings, one might expect a robust counterforce (the antithesis) to be gaining ground. Isn’t that what a lot of the “AI Skeptics” or so-called “anti-bullshit” people do?

While these guys should have been the contrarian voices, the state of AI critique has devolved into mostly noise. Their shallow critique often turns useful debates into a discussion on semantics, is easily ignored by many since their criticisms generally lack meaningful bite, or adds to the hype around AI, just in a different direction (cue X-Risk folk).

In each case, these critics only add to the thesis.

The Failed Opposition: Why No Real Correction Has Happened Yet

“Amidst this cacophony, the public conversation is increasingly being framed as a duel between the Alarmists, who foresee doom, and the Accelerationists, who champion unrestrained progress. In bypassing a critical examination of the arguments from these polarized viewpoints, we risk legitimizing claims and scenarios that may lack any grounding or information value.”

-A Risk Expert’s Analysis on What We Get Wrong about AI Risks.

That’s the real problem: polarization isn’t revealing truth — it’s scrambling our priors. And the people most responsible for holding the thesis accountable are instead helping it metastasize by misdirecting attention, weakening the signal, or just playing for clicks.

Let’s break them down.

The Safetyists & Doomers

The existential risk crowd obsesses over AGI gods and paperclip apocalypses, while the actual systems being deployed today escape meaningful scrutiny.

Here’s some of what gets ignored:

Attribution for Gen AI Training data: Creators fuel the GenAI machine. Our work is never credited in LLMs or image models. Creators enable the viability of Deep Research. And yet Deep Research also causes a direct drop in traffic to creators. Furthermore, platforms hosted by creators deal with increased hosting costs (Wikipedia is paying tens of thousands of dollars monthly in extra costs from the crawlers constantly hitting their website) and no compensation. This is broken.

The constantly falling transparency around their models, making evaluations and assessments of true deployment risk much harder.

Complete lack of transparency on the environmental impacts (water and emissions being the two large ones) of these models. The people at most risk from climate shifts (poor people, especially in the Global South) are not the ones who benefit most from these technologies. The people who pay the costs aren’t the ones who reap the rewards.

And yet what do we get from the AGI priesthood? Dario Amodei and Ilya Sutskever warning us about superintelligence and “misalignment,” while positioning themselves as the only ones qualified to “safely” build the future — conveniently centralizing control in their own hands. B/c they’re the only ones capable of saving us idiots from building tech that will hurt us.

(Some of you may think I have something against Dario. You’re very correct. I hear some very concerning things about him and his whole camp. I wrote our “Why you should read: Fyodor Dostoevsky” in large part directly for him. Read that piece w/ this context.)

The people from the XRisk camp are like people who sell meteor insurance while the floor is collapsing.

The Policy Parrots & Ethics Panels

Corporate ethics teams and regulatory panels mostly generate paperwork. Risk matrices. Governance frameworks. Whitepapers about “alignment” that don’t address control, deployment, or actual misuse.

They do not challenge:

Core incentive structures.

Economic capture.

Strategic centralization.

The Pundits of Balance

By treating the AI thesis and its critics as symmetrical “sides,” these commentators flatten the conversation. They erase the asymmetries — of power, access, funding, and architectural control — and insist on tone instead of substance.

They think they’re moderating. They’re muddying.

The Superficial Hype-Chasers

Finally, the bulk of media and casual critics focus on headline absurdities — prompt injection hacks, goofy chatbots, viral PR demos — while ignoring the core vulnerabilities. These are your parrots that repeat headlines (even when false, such as w/ New York Times and their claims around o1’s medical diagnostic abilities), add very little to the conversation, and are in it to make a quick buck.

Their goal isn’t accuracy. It’s impressions.

In other words, they are not serious people.

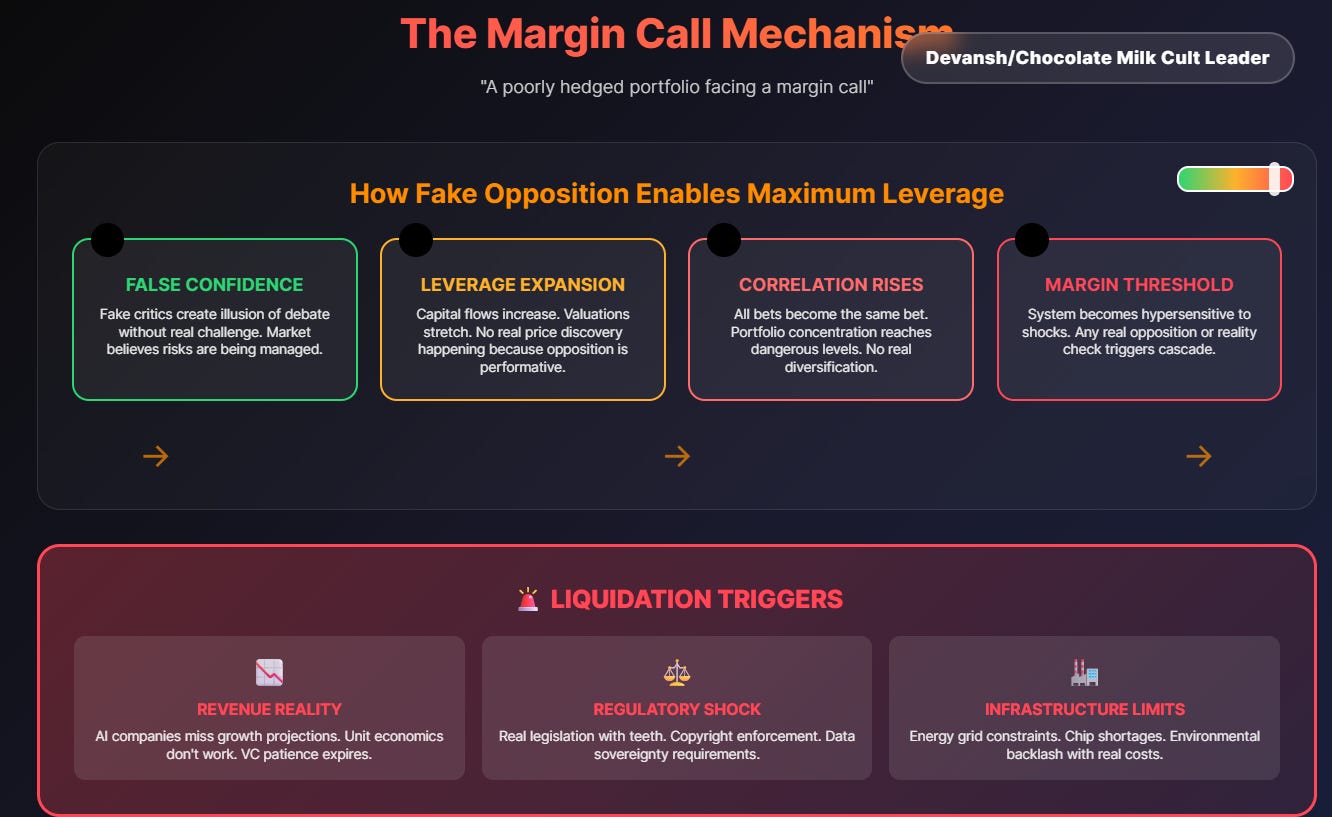

Setup for Collapse — or Correction

None of these actors — safetyists, parrots, pundits, panels — are forcing a structural reevaluation of the thesis. They’re not challenging how risk is priced. They’re not shifting how value is measured. They’re not breaking the logic of build-at-all-costs.

They’re ambient noise.

And that leaves the thesis overexposed, under-hedged, and headed straight for correction. It’s a poorly diversified portfolio on leverage.

And when the liquidation starts, there will be a huge crop of winners. Those who built tools, systems, and narratives around what the thesis couldn’t see. People who built for a blue ocean, and avoided fighting bloody wars of attrition in spaces oversaturated by the thesis worshipers.

So, the AI thesis is a bloated, overconfident giant, and its “critics” are mostly court jesters or well-meaning fools flailing in the dark. A satisfyingly bleak picture, perhaps, but strategically incomplete. Because, as the dialectic dictates, the very overreach of the thesis guarantees the emergence of a genuine antithesis.

Not more performative hand-wringing.

We’re talking about a valid antithesis — a structurally potent counterforce.

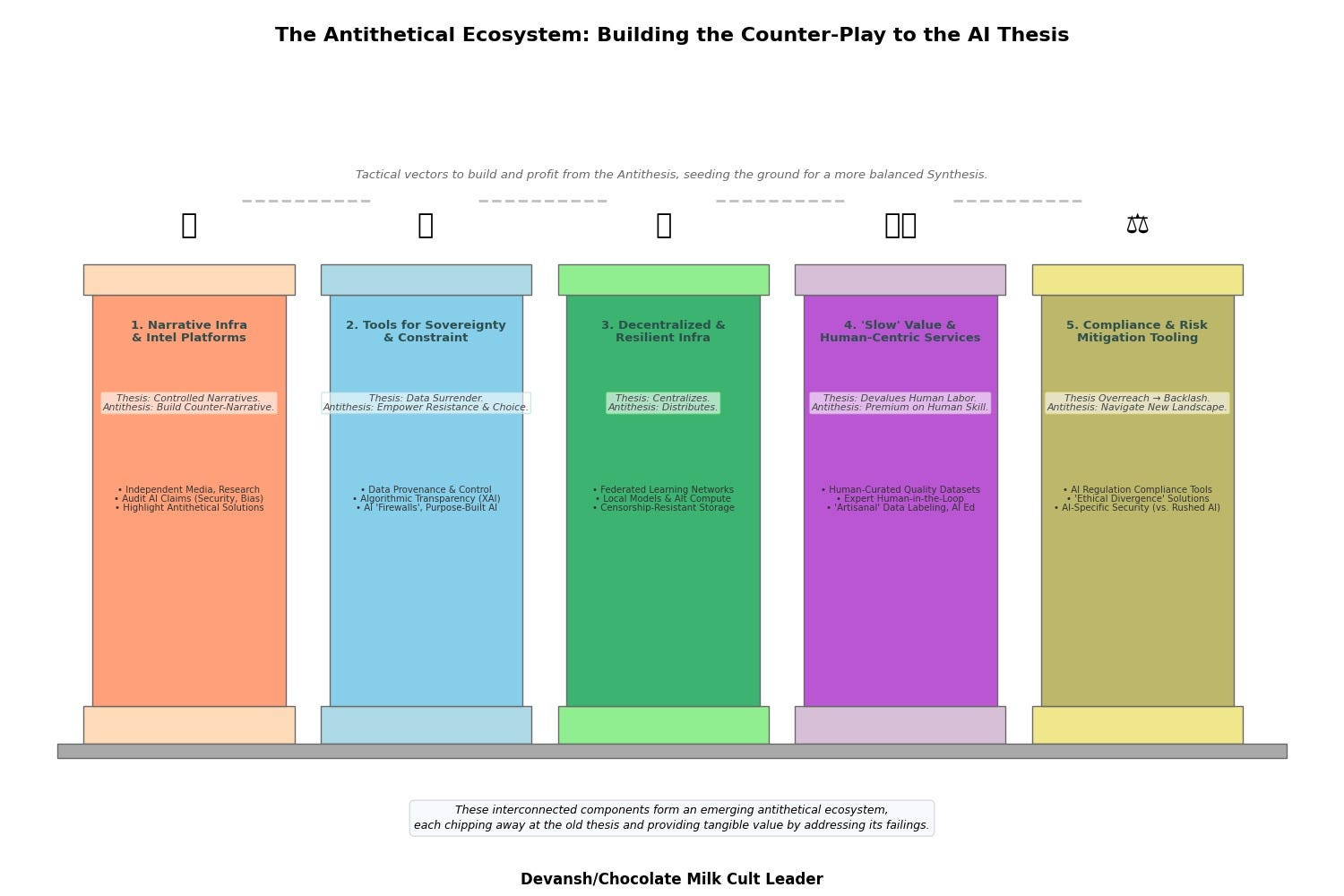

4. What a Real Antithesis Looks Like

What does this real deal look like?

A valid antithesis is active. It doesn’t just point out problems; it builds, or enables the building of, actual alternatives. It philosophizes with a hammer. It’s not just a different opinion; it’s a different operational logic.

Constraint as a Design Principle (Not a Bug):

The current thesis worships boundless scale and speed. A true antithesis champions intentional constraint. This isn’t about being anti-progress; it’s about being pro-precision, pro-efficacy, pro-sustainability.

Think:

AI systems designed for specific, narrow tasks with verifiable performance and clear boundaries, not nebulous AGI ambitions.

Models optimized for minimal viable data, minimal energy consumption, and maximal interpretability.

Systems where “less is more” isn’t a limitation, but a feature, a mark of superior design and focused utility.

This is about building surgical tools, not just bigger hammers.

Human Sovereignty & Agency as Core Infrastructure (Not an Afterthought):

The thesis trends towards opaque automation and the subtle (or not-so-subtle) erosion of human agency. A valid antithesis builds tools and platforms that reinforce human control, creativity, and ownership.

Think:

Verifiable credentialing for human-created content.

Tools that allow individuals to definitively opt out their data from training sets or control its use with granular permissions.

Systems designed for human-AI collaboration where the human is the non-negotiable principal, not a wetware component to be optimized out.

This is about empowering individuals against the encroaching tide of algorithmic homogenization and data feudalism.

Architectural Pluralism & Decentralization (Against the Monoculture):

The AI thesis is rapidly coalescing around a few centralized foundation model providers, creating a dangerous monoculture and single points of failure. A real antithesis fosters architectural diversity and resilience.

Think:

Development of smaller, specialized, open-source models that can be run locally or in federated networks.

Protocols for interoperability between different AI systems, preventing vendor lock-in.

Investment in alternative hardware and compute paradigms that aren’t dependent on a handful of chip manufacturers.

This is about building a robust, distributed ecosystem, not a fragile empire built on a few chokepoints.

Narrative Detonation & Value Re-Calibration (Beyond Corporate Branding):

The thesis is propped up by a massive narrative machine — corporate PR, fawning media, and the self-serving mythologies of “visionary” CEOs. A valid antithesis actively works to dismantle these narratives and propose alternative value systems.

Think:

Rigorous, independent auditing and benchmarking of AI claims, cutting through the marketing hype.

Platforms that elevate human artistry, critical thinking, and deep work as inherently valuable, distinct from AI-generated outputs.

Exposing the true costs (social, environmental, cognitive) of the current AI trajectory and championing metrics of progress beyond mere computational power or shareholder value.

This is about reclaiming the definition of “value” itself from the clutches of the techno-capitalist thesis.

These pillars aren’t just abstract ideals. They are the emerging fault lines where the current AI thesis is most vulnerable. They represent the unmet needs, the suppressed desires, and the ignored risks that the dominant paradigm has created.

A valid antithesis, therefore, isn’t just about critique. It’s about identifying these points of structural weakness in the thesis and then strategically building or investing in the solutions, systems, and narratives that address them. It’s not anti-AI; it’s anti-stupid-AI. Anti-brittle-AI. Anti-dystopian-AI.

These aren’t fringe ideas percolating in academic basements. They are the early, often messy, blueprints of the next stable state — the synthesis. A state where we address some of these issues, and in doing so bring more people into AI. Creating a new thesis, one at a higher baseline of progress.

This might seem like a bold claim. Let’s understand why the synthesis is so important to progress.

5. Why the Synthesis Matters

The thesis refines.

The antithesis rejects.

But the synthesis? It builds the future people will actually live in.

We don’t reach that by building better demos. We reach that by changing the terrain — who participates, how they participate, and what gets built for them.

Synthesis Creates New Value Streams

The thesis phase has already flooded the zone.

You can see it at any Demo Day:

12 variations of the same Slack-integrated copilot. Or the newest VC obsession: AI Matchmakers. How many of these are y’all going to fund before you get bored and remember that somewhere in your value prop you talk about next-gen solutions that build the future?

30 founders with the same pitch deck structure. How many “Cursor for X” do we need?

A culture of wrappers, not reinvention.

That’s what happens when you’re trapped inside a single idea.

Everything gets optimized. Nothing gets expanded.

The synthesis breaks that trap.

It creates:

New surfaces for value: sovereignty, interpretability, human-first workflows.

New buyers: skeptics, legacy operators, institutions with trust constraints.

New metrics: resilience, control, cultural alignment — not just model output.

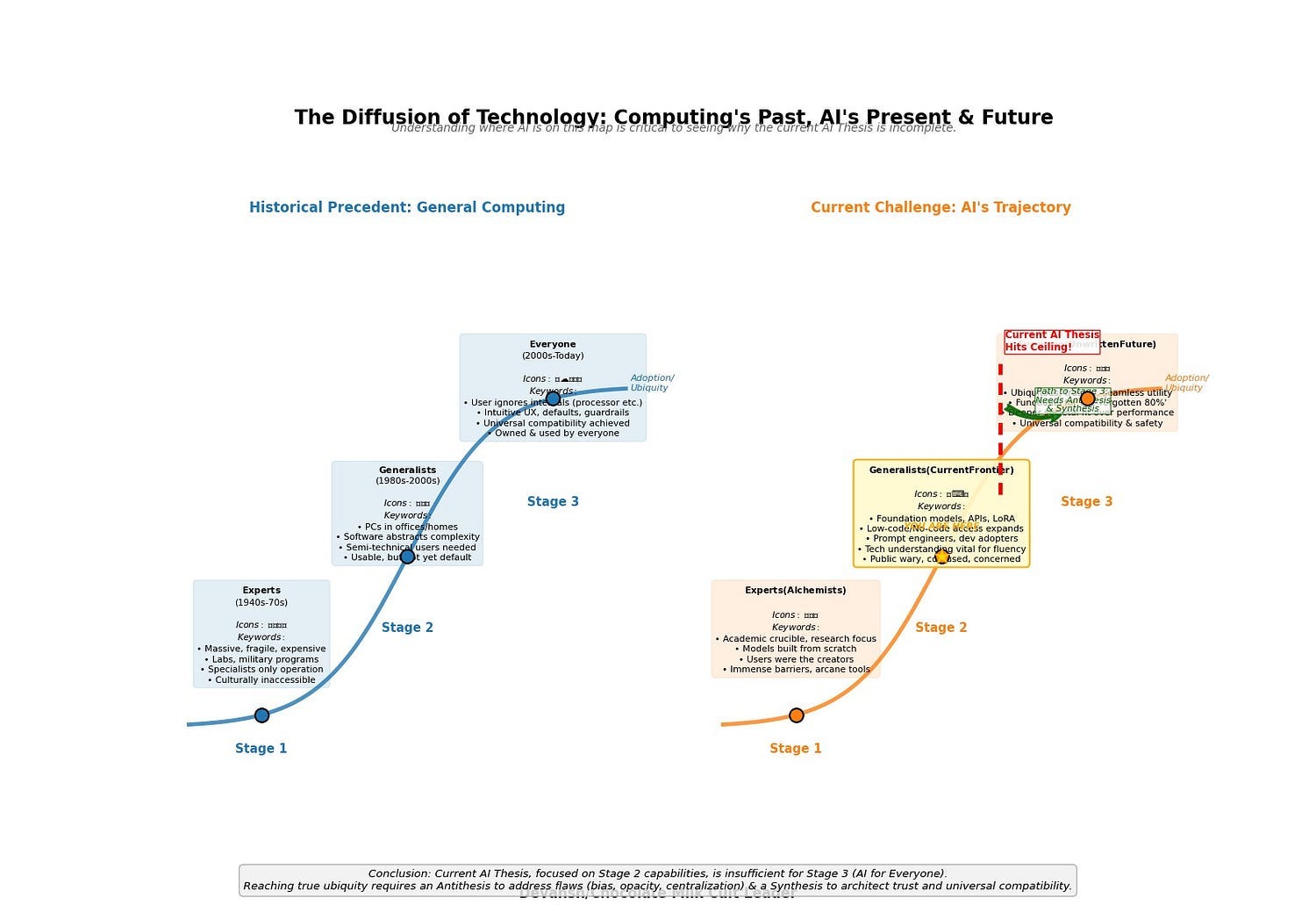

Synthesis and the Curve of Tech Adoption

Every major technological wave follows the same diffusion curve:

Built by experts

Used by generalists

Owned by everyone

Confused? Time for a History Lesson in Computing.

Stage 1: The Domain of Experts (1940s–1970s)

In the early decades, computers were:

Massive, fragile, and expensive.

Operated by physicists, engineers, and specialists.

Built in labs, military programs, or research centers.

They were inaccessible — not just technically, but culturally. You didn’t “use” computers. You built them, maintained them, debugged them.

The tools were raw. The workflows were alien.

And almost no one knew how to make them useful to non-experts.

Stage 2: The Domain of Generalists (1980s–2000s)

This is where the inflection began.

PCs entered offices, schools, and homes.

Software abstracted away the need to “speak machine.”

The personal computer, productivity suite, and GUI stack turned experts into builders — and builders into product designers.

Still: to truly leverage the technology, you needed to be semi-technical.

Early adopters. Developers. The “computer literate.”

It was usable — but not yet default.

Stage 3: The Domain of Everyone (2000s–Today)

Today, the average user:

Doesn’t care what a processor is.

Doesn’t know how file systems work.

Doesn’t read manuals.

But they can navigate phones, apps, cloud systems, and workflows with near-fluency — because the infrastructure shifted.

UX, defaults, guardrails, and operating models matured to support non-technical, skeptical, or distracted users at massive scale.

That’s what synthesis does.

It doesn’t just refine the tech — it architects universal compatibility.

Now Map That to AI

This isn’t just a quaint historical analogy. The AI trajectory is running the same gauntlet, facing the same evolutionary pressures. And understanding where we are on this map is critical to seeing why the current AI Thesis, for all its power, is fundamentally incomplete.

AI Stage 1: The Domain of Experts (The Alchemists — e.g., early pioneers of neural networks, symbolic AI, knowledge-based systems).

This was AI in its academic crucible. Models built from scratch, algorithms understood by a select few. The “users” were the creators. The output was often proof-of-concept, not product. The barriers were immense, the tools arcane.

AI Stage 2: The Domain of Generalists & Early Adopters (The Current Frontier — e.g., developers leveraging TensorFlow/PyTorch, prompt engineers using GPT-4 via APIs, businesses deploying off-the-shelf ML solutions).

This is where we largely stand today. Foundation models, APIs, and low-code platforms have dramatically expanded access. A new class of “AI generalists” can now build and deploy sophisticated applications without needing to architect a neural network from first principles. ChatGPT and its kin have even brought a semblance of AI interaction to a wider public.

But let’s be clear: this is still Stage 2. True fluency requires technical understanding, an ability to navigate complex interfaces, or at least a significant investment in learning new interaction paradigms. The “average user” is dabbling, perhaps impressed, but often confused, wary, or rightly concerned about implications the current Thesis glosses over — bias, job security, privacy, the sheer inscrutability of these powerful tools. We are far from “owned by everyone.”

AI Stage 3: The Domain of Everyone (The Unwritten Future — AI as truly ubiquitous, trusted, and seamlessly integrated utility).

This is the promised land the current AI Thesis aspires to but is structurally incapable of reaching on its own. Why? Because a Thesis built on breakneck speed, opaque systems, winner-take-all centralization, and a dismissive attitude towards legitimate societal fears cannot architect universal compatibility. It inherently creates friction, distrust, and exclusion.

Reaching Stage 3 is about making AI functional for the forgotten 80%.

That’s how every transformative technology hits scale — not through more performance, but through deeper fit.

“NVIDIA should back real-world initiatives like these that make AI matter to people, turning them into compelling narratives stamped with “Powered by NVIDIA.” It’s a strategic brand play with mutual upside.

…

Want to go mainstream?

Imagine future keynotes — instead of talking abstractly about tokens, NVIDIA could show before-and-after videos of the lines at the local DMV. Talk about great fodder for viral social media videos!

…Yes, the examples are hypothetical, but the core issue is real: Jensen talks like everyone already uses and values AI. But most people don’t. The best way to reach the public is to make AI real in their daily lives”

—A particularly brilliant section from the exceptional Austin Lyons.

And whoever builds that synthesis infrastructure? They don’t win the debate.

They win the adoption curve.

Final Notes on the Synthesis

The Synthesis doesn’t just make AI “better” for the experts or the adepts. It fundamentally re-architects AI’s relationship with society, addressing the core anxieties and practical barriers that prevent mass adoption. It’s how AI transitions from being a powerful but often unsettling force used by a relative few, to becoming a trusted, integrated, and indispensable utility for the many.

You want to avoid friction in adoption? To get more people comfortable building on and using your product? You can try pushing the “use us or perish” agenda that the AI industry has been pushing. But threats only take you so far. You have a much easier time getting people to pay you when they like you.

That’s what the antithesis does. It shows the ones you alienate that you’re paying attention. It starts to bring them into the fold, leading to the new markets and Cambrian explosion that are associated with the synthesis stage.

You want Jevons Paradox to work for your revenue? Get to synthesis. And that’s not something you can get to by skipping the antithesis phase. Ignore the antithesis, and you end up with a Habsburg jaw.

6. Conclusion

We’ve jumped across a lot of ideas, so let me summarize them in one thread:

Our current AI industry is “thesis heavy”: it loves its own assumptions too much. This leads to mania, inflated valuations, and lots of copycat startups.

While this brings in a lot of attention, it’s also alienating large numbers of people, who are actively looking for reasons to reject AI.

Some of the reasons are based on legitimate concerns that the AI industry doesn’t adequately address. The arrogance of the thesis and its failure to address these concerns create further polarization.

This sets up a great opportunity for a meaningful antithesis: solutions that specifically position themselves against the weaknesses of the thesis. These will start to draw in the aforementioned alienated people.

The antithesis will allow us to create new markets and build systems that address the limitations of the current systems.

This leads to the synthesis, where we get the Cambrian explosion and mass adoption of technology.

Betting on the antithesis isn’t betting on negativity. It’s betting on progress. It’s betting on the intelligence within people that our current systems have overlooked.

The ROI of including more people within the economic system has always justified itself, many times over (more grunts to add value for shareholders).

And isn’t that ultimately a vision worth getting behind?

Thank you for being here, and I hope you have a wonderful day.

Let’s look for the One Piece,

Dev <3

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
