Why does changing a pixel break Deep Learning Image Classifiers? [Breakdowns]

Understanding the phenomenon behind adversarial perturbation and why it impacts Machine Learning

Hey, it’s Devansh 👋👋

In my series Breakdowns, I go through complicated literature on Machine Learning to extract the most valuable insights. Expect concise, jargon-free, but still useful analysis aimed at helping you understand the intricacies of Cutting Edge AI Research and the applications of Deep Learning at the highest level.

A while back, we covered the One Pixel Attack, where researchers were able to break state-of-the-art image classification models and make them misclassify input images, just by changing one pixel of that input. After that article, many people reached out to me asking me why this happens. After all, SOTA classifiers are trained on large corpora of data, and this should give them the diversity to handle changing one measly pixel.

The One Pixel Attack is an example of Adversarial Learning, where we try to fool image classifiers (YouTube video introducing the concept here). Adversarial examples are inputs designed to fool ML models. How does this work? Take an image classifier that distinguishes between elephants and giraffes. It takes an image and returns a label with probabilities. Suppose we want a giraffe picture to output an elephant label. We take the giraffe picture and start tweaking the pixel data, keeping the tweaks that push the classifier’s output closer to the elephant label. Eventually, we end up with a weird picture that has the giraffe picture as the base but looks like an elephant to the classifier. This process is called Adversarial Perturbation. These perturbations are often invisible to humans, which is what makes them so dangerous.
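
To make the giraffe-to-elephant story concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the "classifier" is a tiny logistic regression over 16 made-up pixels, and the names `elephant_prob` and `perturb_towards_elephant` are hypothetical. Real attacks run against deep networks, but the loop has the same shape: nudge each pixel a little in the direction that raises the target label's probability, and never move further than `epsilon` from the original image.

```python
import math

def elephant_prob(weights, bias, pixels):
    """Toy stand-in classifier: logistic regression returning P(elephant)."""
    score = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1.0 / (1.0 + math.exp(-score))

def perturb_towards_elephant(weights, bias, pixels, epsilon=0.1, step=0.02, iters=20):
    """Nudge every pixel toward the elephant label, at most epsilon from its original value."""
    adv = list(pixels)
    for _ in range(iters):
        p = elephant_prob(weights, bias, adv)
        for i, w in enumerate(weights):
            # Gradient of log P(elephant) w.r.t. pixel i for this toy model.
            grad = (1.0 - p) * w
            direction = (grad > 0) - (grad < 0)
            # Stay inside the epsilon budget and the valid pixel range [0, 1].
            lo = max(0.0, pixels[i] - epsilon)
            hi = min(1.0, pixels[i] + epsilon)
            adv[i] = min(hi, max(lo, adv[i] + step * direction))
    return adv

# Made-up weights and a made-up "giraffe" image that the toy model labels as giraffe.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(16)]
giraffe = [0.45 if i % 2 == 0 else 0.55 for i in range(16)]

adversarial = perturb_towards_elephant(weights, 0.0, giraffe)
before = elephant_prob(weights, 0.0, giraffe)
after = elephant_prob(weights, 0.0, adversarial)
largest_change = max(abs(a - g) for a, g in zip(adversarial, giraffe))
print(before, after, largest_change)
```

In this toy setup no pixel moves by more than `epsilon`, yet the predicted label flips from giraffe to elephant.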

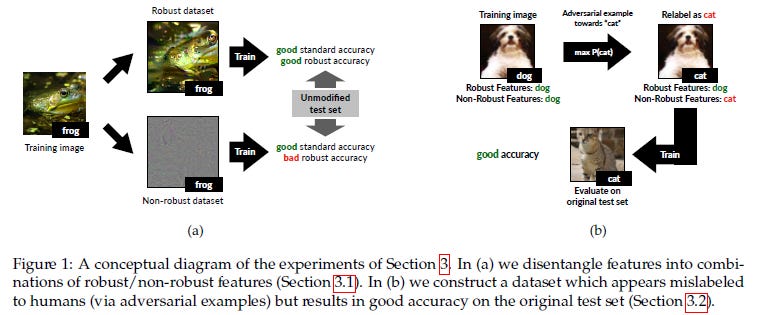

“Adversarial Examples Are Not Bugs, They Are Features” by an MIT research team is a paper that seeks to answer why Adversarial Perturbation works. They find that the predictive features of an image can be grouped into two types, Robust and Non-Robust. The paper explores how Adversarial Perturbation affects each kind of feature. This article will go over some interesting discoveries in the paper.

Takeaways

It shouldn’t be hard to convince you that adversarial training is very important. So here are some insights from the paper that will be useful in your machine learning journeys as you work to incorporate this into your pipelines:

Robust vs Non-Robust Features

Let’s first understand the difference between Robust and Non-Robust features. Robust features are simply useful features that remain useful after some kind of adversarial perturbation is applied. Non-robust features are features that are useful before the perturbation but stop being useful, and may even cause misclassification, once it is applied.
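
Here is a small sketch of that distinction on synthetic data. As I read the paper, a feature is "useful" when it correlates with the label on average, and "robustly useful" when that correlation survives the worst allowed perturbation. The dataset, the two features, and the budget `EPSILON` below are all made up for illustration. For a feature that is just one input coordinate, the worst case under a budget of `epsilon` is simply a shift of `epsilon` against the label.

```python
import random

EPSILON = 0.3  # assumed perturbation budget per coordinate

def make_dataset(n, seed=0):
    """Labels are +1/-1; feature 0 is strongly tied to the label, feature 1 only weakly."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.choice([-1, 1])
        strong = y * 1.0 + rng.gauss(0, 0.5)
        weak = y * 0.1 + rng.gauss(0, 0.5)
        data.append(((strong, weak), y))
    return data

def usefulness(data, idx):
    """Average of y * feature: positive means the feature points toward the label."""
    return sum(y * x[idx] for x, y in data) / len(data)

def robust_usefulness(data, idx, epsilon):
    """Same average after the adversary shifts the coordinate by epsilon against the label."""
    return sum(y * x[idx] - epsilon for x, y in data) / len(data)

data = make_dataset(5000)
for idx, name in enumerate(["strong feature", "weak feature"]):
    print(name, usefulness(data, idx), robust_usefulness(data, idx, EPSILON))
```

Both features are useful on clean data, but only the strong one stays useful under the perturbation. The weak one flips sign, so a classifier leaning on it gets pushed toward the wrong label.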

Robust Features Are (Might be) Enough

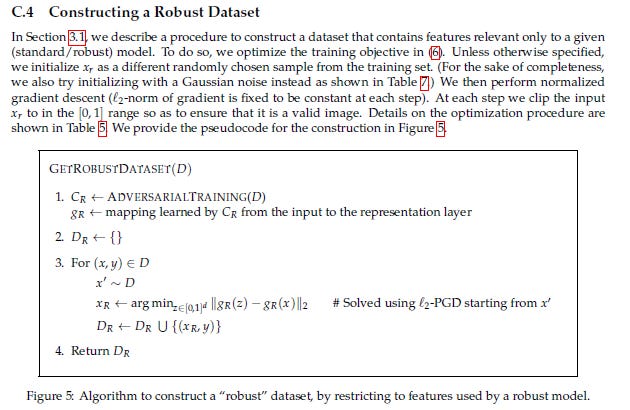

As somebody who advocates low-cost training (I’m a huge 80–20 guy), this is possibly the one takeaway that had me salivating the most. The authors of this paper did some interesting things. They used the original datasets to create 2 new datasets: 1) D_R: a dataset constructed from the robust features. 2) D_NR: a dataset constructed from the non-robust features.

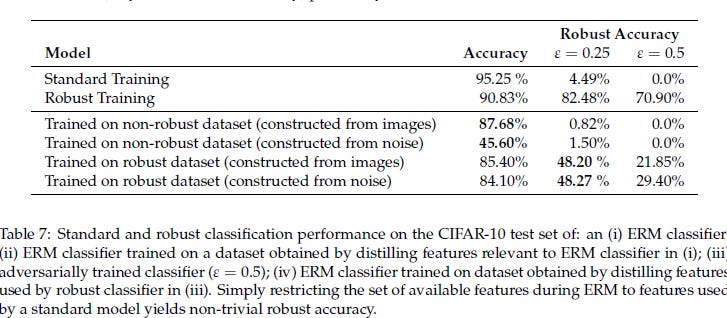

They found that standard classifiers trained on the robust dataset held up well after adversarial perturbations were applied. Nothing too surprising, since that is pretty much the definition of Robust Features. What got me was how well the robust dataset performed in normal (non-adversarial) training. Digging through the appendix we find this juicy table.

To quote the authors of this paper, “The results (Figure 2b) indicate that the classifier learned using the new dataset attains good accuracy in both standard and adversarial settings.” To me, this presents an interesting solution. If your organization does not have the resources to invest in a lot of adversarial training/detection, an alternative might be to identify the robust features and train on them exclusively. This will protect you against adversarial input while providing good accuracy in normal use.
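
How might you "identify the robust features" in practice? As I understand the paper, the robust dataset is built by taking an already robust model, starting from an unrelated image, and optimizing that image until the robust model's internal representation matches the one for the original example. The label of the original example is kept. Below is a toy sketch of that idea. The representation `REPR`, the function names, and the 4-number "images" are all assumptions for illustration, not the authors' code.

```python
import random

# Hypothetical stand-in for a robust model's representation layer: a fixed
# linear map that only reads coordinates 0 and 1 (assumed to be the robust ones).
REPR = [[1.0, 0.5, 0.0, 0.0],
        [0.5, 1.0, 0.0, 0.0]]

def represent(x):
    """Apply the toy representation to an input."""
    return [sum(r * xi for r, xi in zip(row, x)) for row in REPR]

def robustify(x, start, lr=0.1, steps=500):
    """Gradient descent on ||represent(x_r) - represent(x)||^2, starting from `start`."""
    target = represent(x)
    x_r = list(start)
    for _ in range(steps):
        resid = [a - b for a, b in zip(represent(x_r), target)]
        # Gradient of the squared distance with respect to each input coordinate.
        grad = [2.0 * sum(REPR[k][j] * resid[k] for k in range(len(REPR)))
                for j in range(len(x_r))]
        x_r = [v - lr * g for v, g in zip(x_r, grad)]
    return x_r

rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(4)]      # original example (its label is kept)
start = [rng.gauss(0, 1) for _ in range(4)]  # unrelated example used as the starting point
x_r = robustify(x, start)
print(x, start, x_r)
```

In this sketch the coordinates the robust representation reads end up matching the original example. The other coordinates stay whatever they were in the unrelated starting point, so they no longer carry information about the label.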

Adversarial Examples attack Non-Robust Features

This was the hypothesis of the paper. And the authors do a good job proving it. They point out that, “restricting the dataset to only contain features that are used by a robust model, standard training results in classifiers that are significantly more robust. This suggests that when training on the standard dataset, non-robust features take on a large role in the resulting learned classifier. Here we set out to show that this role is not merely incidental or due to finite-sample overfitting. In particular, we demonstrate that non-robust features alone suffice for standard generalization — i.e., a model trained solely on non-robust features can perform well on the standard test set.” In simple words, non-robust features are highly predictive and might even play a stronger role in the actual predictions.
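
A toy version of this claim, with made-up numbers: give a classifier nothing but 100 weak features, each only slightly correlated with the label. The fixed "add them all up" rule below is my own simplification rather than a trained model, and `SIGNAL`, `EPSILON`, and the function names are assumptions. The point is that many weak features can add up to high accuracy, while a small per-feature nudge adds up just as quickly in the other direction.

```python
import random

D = 100        # number of weak features (assumed)
SIGNAL = 0.2   # how far each feature leans toward the label (assumed)
EPSILON = 0.4  # per-feature perturbation budget (assumed)

def sample(rng):
    """One example: label +1/-1 and D noisy features that each lean slightly toward it."""
    y = rng.choice([-1, 1])
    x = [y * SIGNAL + rng.gauss(0, 1) for _ in range(D)]
    return x, y

def predict(x):
    """Fixed rule that pools all the weak features together."""
    return 1 if sum(x) >= 0 else -1

def attack(x, y, epsilon):
    """Worst case for this rule: shift every feature by epsilon against the label."""
    return [xi - epsilon * y for xi in x]

rng = random.Random(0)
test_set = [sample(rng) for _ in range(2000)]
clean_acc = sum(predict(x) == y for x, y in test_set) / len(test_set)
adv_acc = sum(predict(attack(x, y, EPSILON)) == y for x, y in test_set) / len(test_set)
print(clean_acc, adv_acc)
```

Each feature on its own is barely better than a coin flip, and the noise on each feature is much larger than `EPSILON`. Even so, the pooled rule is accurate on clean data and falls apart under the attack.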

This is where it gets interesting. The authors tested multiple architectures with adversarial examples and saw that they were all similarly vulnerable to them. This goes very well with their hypothesis. To quote them:

“Recall that, according to our main thesis, adversarial examples can arise as a result of perturbing well-generalizing, yet brittle features. Given that such features are inherent to the data distribution, different classifiers trained on independent samples from that distribution are likely to utilize similar non-robust features. Consequently, an adversarial example constructed by exploiting the non-robust features learned by one classifier will transfer to any other classifier utilizing these features in a similar manner.”
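
The transfer argument can be sketched on synthetic data too. Below, two simple linear classifiers (A and B) are fit on independent samples from the same made-up distribution. The attack only ever looks at A's weights, and then the perturbed inputs are handed to B. The distribution, the mean-difference fitting rule, and all the names and constants are assumptions for illustration.

```python
import random

D, SIGNAL, EPSILON = 50, 0.2, 0.5  # all assumed for this toy setup

def draw(rng, n):
    """n examples with label +1/-1 and D weak, noisy features."""
    out = []
    for _ in range(n):
        y = rng.choice([-1, 1])
        out.append(([y * SIGNAL + rng.gauss(0, 1) for _ in range(D)], y))
    return out

def fit(data):
    """Mean-difference linear classifier: w_i is the average of y * x_i."""
    return [sum(y * x[i] for x, y in data) / len(data) for i in range(D)]

def predict(w, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1

def attack(w, x, y, epsilon):
    """Sign-gradient step against the true label, using only the given weights."""
    return [xi - epsilon * y * ((wi > 0) - (wi < 0)) for wi, xi in zip(w, x)]

def accuracy(w, data):
    return sum(predict(w, x) == y for x, y in data) / len(data)

w_a = fit(draw(random.Random(1), 500))  # classifier A
w_b = fit(draw(random.Random(2), 500))  # classifier B, independent training sample
test_set = draw(random.Random(3), 1000)

# Adversarial examples are crafted against A only, then evaluated on B.
adv_set = [(attack(w_a, x, y, EPSILON), y) for x, y in test_set]
clean_b = accuracy(w_b, test_set)
adv_b = accuracy(w_b, adv_set)
print(clean_b, adv_b)
```

B never saw A's training data or its weights, but both picked up the same weak features from the distribution, so the examples built to fool A fool B as well.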

Closing

This is quite an insightful paper. I’m intrigued by a few questions/extensions:

Testing this with non-binary datasets to see how well this approach can extend. More classes can make decision boundaries fuzzier so Robust datasets might not work as well (I still have hope).

Do all adversarial examples operate by attacking non-robust features, or is this a subset?

To those of you that were reading before I reached fame never before seen in mankind, you may remember the article- Improve Neural Networks by using Complex Numbers. The authors of that paper demonstrated that the use of Complex Functions extracted features that were quantitatively different than the ones extracted by standard ConvNets. Somehow, these features were also much stronger against Adversarial Perturbation- “CoShRem can extract stable features — edges, ridges and blobs — that are contrast invariant. In Fig 6.b we can see a stable and robust (immune to noise and contrast variations) localization of critical features in an image by using agreement of phase.” The findings of this MIT paper are very synergistic with that paper- robustness is tied to better feature extraction. Instead of mindlessly scaling up networks- an avenue to explore more robust networks would be to play with the different features extracted and see how they play out.

Let me know what you think. I would love to hear your thoughts on this paper.

If any of you would like to work on this topic, feel free to reach out to me. If you’re looking for AI Consultancy, Software Engineering implementation, or more- my company, SVAM, helps clients in many ways: application development, strategy consulting, and staffing. Feel free to reach out and share your needs, and we can work something out.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
