Why you should read: Jean Baudrillard [Recs]

Technology, Symbols, and How Reality Loses Its Meaning

Hey, it’s Devansh 👋👋

Recs is a series where I dive into the work of a particular creator to explore the major themes in their work. The goal is to provide a good overview of their most compelling ideas, which might help you explore the creator in more depth.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 100K readers weekly. Many companies have a learning budget, and you can expense your subscription through that budget. You can use the following for an email template.

PS- We follow a “pay what you can” model, which allows you to support within your means, and support my mission of providing high-quality technical education to everyone for less than the price of a cup of coffee. Check out this post for more details and to find a plan that works for you.

“Everywhere one seeks to produce meaning, to make the world signify, to render it visible. We are not, however, in danger of lacking meaning; quite the contrary, we are gorged with meaning and it is killing us.”

― Jean Baudrillard

Executive Highlights (TL;DR of the article)

Recently, I came across an app called “SocialAI — AI Social Network” with a very interesting premise- you get millions of followers, all bots. You can’t follow any humans. The goal is to provide users with an infinite stream of wholesome engagement-

The framing around LARPing as “a main character”, being surrounded by a community, and having millions of followers made me think of Jean Baudrillard (I can never spell his name right, so I’m going to stick to JB) and his analysis of hyperreality and the loss of meaning. Specifically, I think the following ideas are worth thinking about when it comes to the current tech and AI ecosystems-

The Precession of Simulacra:

JB argued that our relationship with reality is mediated through signs and symbols (images, brands, information), which have become detached from any underlying truth. This creates a world of “simulacra,” copies without originals, where the distinction between real and artificial becomes increasingly blurry.

These simulations, Baudrillard believed, don’t just reflect reality; they actively shape how we see the world and what we desire. As our interactions with the simulations become more frequent than our interactions with the real world, this can lead to the following-

Information Overload and the Loss of Meaning:

As the interactions between the real and simulacra propagate, they start to become twisted versions of themselves. This creates a further detachment from reality, and we slowly watch our original symbols and constructs start to lose their meaning. This is made worse by our constant exposure to information overload, which leaves us feeling overwhelmed and unable to discern what truly matters. Over time, this creates a world where it’s easier to tap out and go with the flow instead of constantly burning energy fighting against the tide to hold on to a degree of meaning.

And unfortunately, current developments in Language Models come with a risk of making things worse-

AI and the Automation of Meaning:

Artificial intelligence adds another layer to this problem, not just by curating our online experiences but by becoming a creator itself. AI-generated content, from realistic deep fakes to sophisticated text generators, can add to the overload and loss of meaning in several key ways-

The use of AI in Moderation- Companies all over use various flavors of Language Models to identify harmful content, assess quality, etc., which can lead to several unintended consequences. For example, a programming course teacher got banned from advertising his courses on Facebook because “Meta’s AI system noticed that I was talking about Python and Pandas, assumed that I was talking about the animals (not the technology), and banned me. The appeal that I asked for wasn’t reviewed by a human, but was reviewed by another bot, which (not surprisingly) made a similar assessment.”

The above is a more extreme case, but there are more mundane ones, such as AI penalizing certain works for appearing “dangerous” (I’ve had personal experience with this) or automated bots causing creators to be unfairly flagged for copyright violations. In all cases, the use of an unchecked decision-making system can impose arbitrary standards that limit the distribution of certain kinds of content and push others, all of which creates a loss of creativity and critical thinking.

The use of AI for generation- On the other hand, the ease of AI-created content can lead to 3 major problems. As discussed by several experts, an overreliance on GenAI will lead to people outsourcing their thinking to GPT, reducing their critical thinking abilities and making them more compliant in accepting answers that are handed to them. This would be bad enough, but it also comes with two algorithmic problems that will make things worse, at scale.

Firstly, the sheer amount of content adds to the numbing effect, as people have more content to sift through. This saps our mental energy, making us less likely to engage with sources critically. Secondly, the presence of AI-generated content will push down the more “unsafe” or “low-quality” human-generated content, limiting the kinds of information, styles, and linguistic diversity that people are exposed to.

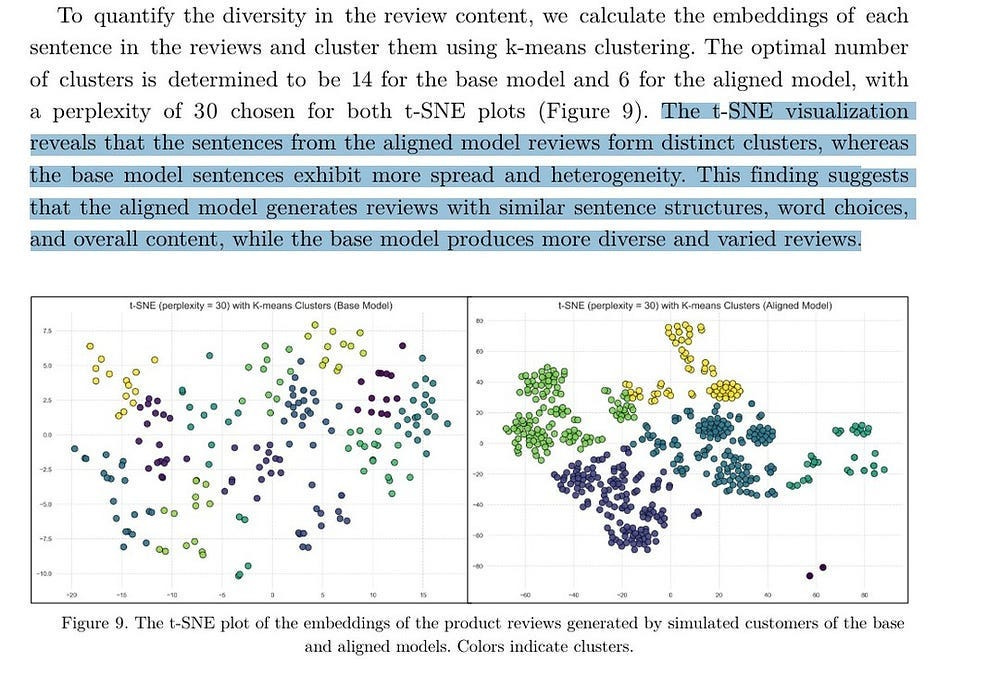

This is even worse if we end up using aligned models for review and generation- since they have been shown to significantly reduce creativity when used blindly-

“Large language models (LLMs) have led to a surge in collaborative writing with model assistance. As different users incorporate suggestions from the same model, there is a risk of decreased diversity in the produced content, potentially limiting diverse perspectives in public discourse.… This suggests that the recent improvement in generation quality from adapting models to human feedback might come at the cost of more homogeneous and less diverse content.”

- Does Writing with Language Models Reduce Content Diversity?

This was also noticed by another publication-

As more and more of the internet becomes written by Language Models, the proportion of training data that is purely LLM-generated will be much higher. This will form a self-reinforcing feedback loop, as future models (which are trained on this data) will continue to see a drop-off in diversity (which will further push down creative text).

This was explored in “The Curious Decline of Linguistic Diversity: Training Language Models on Synthetic Text”, which tells us that: “Our findings reveal a consistent decrease in the diversity of the model outputs through successive iterations, especially remarkable for tasks demanding high levels of creativity. This trend underscores the potential risks of training language models on synthetic text, particularly concerning the preservation of linguistic richness. Our study highlights the need for careful consideration of the long-term effects of such training approaches on the linguistic capabilities of language models.”
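To make that feedback loop a bit more concrete, here is a toy simulation. It is not the paper's experimental setup. It assumes a "model" is nothing more than the word counts of its training text, and that every new generation is trained only on text sampled from the previous one. The vocabulary size, corpus size, and function names are all made up for illustration.

```python
import random
from collections import Counter


def train(corpus):
    """A toy 'model': just the word counts of its training corpus."""
    return Counter(corpus)


def generate(model, n_words, rng):
    """Sample n_words from the model in proportion to the learned counts."""
    words = list(model.keys())
    weights = list(model.values())
    return rng.choices(words, weights=weights, k=n_words)


def distinct_words_per_generation(vocab_size=200, corpus_size=300,
                                  generations=15, seed=0):
    """Count surviving distinct words when each model trains on the last one's output."""
    rng = random.Random(seed)
    # Generation 0: stand-in for "human" text, drawn uniformly from the
    # whole vocabulary (an assumption made purely for this sketch).
    corpus = [f"w{rng.randrange(vocab_size)}" for _ in range(corpus_size)]
    history = [len(set(corpus))]
    for _ in range(generations):
        model = train(corpus)
        corpus = generate(model, corpus_size, rng)  # purely synthetic training data
        history.append(len(set(corpus)))
    return history


history = distinct_words_per_generation()
print(history)  # distinct word count, one entry per generation
```

In this toy setup, a word that drops out of one generation can never come back, so the count can only fall or stay flat. Real LLM training is far messier than resampling word counts, so treat this as an intuition pump rather than evidence.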

Please note that there are ways to maintain diversity when using synthetic data and/or aligned models for generation, but both will be the subject of separate articles, since they require careful setup. These systems, as with other technology, are naturally prone to amplifying their biases when unchecked, but we can absolutely keep the negative outcomes under control.

So, are we doomed to be mindless zombies? Fortunately, there is a way out, and it’s a lot more fun than you’d think.

Reclaiming Meaning in a Simulated World: Unfortunately, our man JB was too busy being angry at the hyperreality to give us many concrete solutions. Generally, when people discuss solutions to JB’s reality-less world, they emphasize individual agency and critical thinking. We won’t touch on them too much in this piece since I already cover them quite a bit (and they might be best left for thinkers like Camus and Nietzsche). Instead, I think it might be useful to talk about one idea I’ve heard from similar thinkers, but that I don’t see many people talk about: an appreciation for beauty and whimsy, and its use in self-discovery.

Tbh, Baudrillard isn’t my favorite writer. I put him in a similar bucket to Schopenhauer- he has some good ideas, but he feels a bit too world-denying, passion-less, and unhappy to speak to me on a personal level. His prose is also quite ugly. But I think it’s worth playing with his theories to find a framework for understanding the challenges and possibilities of finding meaning in our increasingly simulated age, especially since he formulated the challenges very clearly.

I provide various consulting and advisory services. If you‘d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

How the World Loses Its Meaning

Abstraction today is no longer that of the map, the double, the mirror or the concept. Simulation is no longer that of a territory, a referential being or a substance. It is the generation by models of a real without origin or reality: a hyperreal. The territory no longer precedes the map, nor survives it. Henceforth, it is the map that precedes the territory — precession of simulacra — it is the map that engenders the territory and if we were to revive the fable today, it would be the territory whose shreds are slowly rotting across the map. It is the real, and not the map, whose vestiges subsist here and there, in the deserts which are no longer those of the Empire, but our own. The desert of the real itself

The Concept of Simulacra

JB argued that in modern society, our understanding of reality is increasingly shaped by representations rather than direct experiences. These representations — which he calls “signs and symbols” — include images, brands, media narratives, and other forms of information.

The key point is that these representations have become disconnected from any “true” or “original” reality they might have once represented. Instead, they create their own reality. Baudrillard calls these disconnected representations “simulacra.”

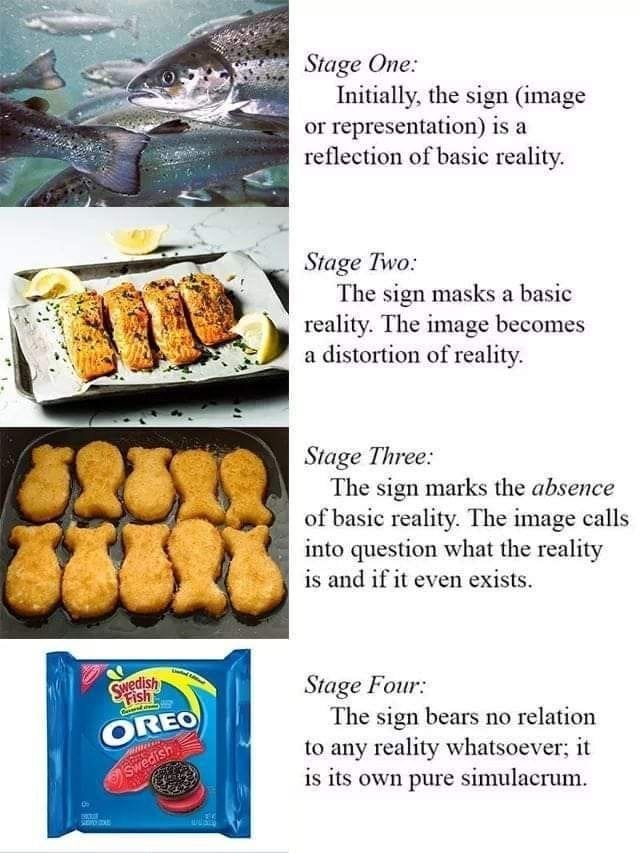

There are different stages of this simulation based on how disconnected your sign is from the original reality.

Let’s go through each stage, using the SocialAI platform as an example. Assume our base reality is having a group of friends, family, and other people who love you and are proud of you. This might sound like fiction to many of you, so imagine an alternate universe where people actually care about your existence. Let’s see how we can have 4 levels of representation for the community, each increasingly disconnected from this base reality.

The Four Stages of Simulation

Baudrillard outlined four stages to explain how we moved from representations that reflected reality to a world of pure simulacra:

It is a reflection of a basic reality: This is the starting point, where the sign (image, word, etc.) accurately represents something real. In our case, imagine a social media profile where you’re able to stay connected with the aforementioned (probably imaginary) loved ones. This representation accurately represents your community.

It masks and perverts a basic reality: Here, the sign begins to distort the reality it’s supposed to represent. This is like being part of a special interest group/page (like following a meme page). You’re still part of a community, but only tangentially.

It masks the absence of a basic reality: At this stage, the sign pretends to represent something real, but that real thing doesn’t actually exist. In our case, this is the timeline/general feed where you’re essentially shouting into the void. Your base reality (the most likely made-up loving community) has been replaced with an imitation (random accounts liking and engaging with you).

It bears no relation to any reality whatever: it is its own pure simulacrum: This is the final stage, where the sign doesn’t even pretend to represent reality anymore. It’s purely self-referential. This is our social media where you interact with no people, only bots.

As you can imagine, these stages aren’t massive leaps from each other. And this is why we have so easily slipped into stage 4.

The Dominance of the Fourth Stage

Baudrillard believed that in our modern world, we’ve largely entered this fourth stage. Many of our experiences and beliefs are shaped by pure simulacra — representations that don’t relate to any underlying reality.

Mass Media is a notable example of this. People will often form their career aspirations, life goals, and core beliefs based on people they’ve never met, served to them by algorithms that are incentivized to amplify extreme voices. Speaking about myself-

I started writing AI Research breakdowns partly inspired by the AI Researcher Yannic Kilcher, who used to post paper breakdowns very regularly on his YouTube channel.

I’m very inspired by the exceptional writers I read, some works of art etc. These were mostly recommended to me by social media algorithms. This includes the previous thinkers we’ve covered, who were all recommended to me through YouTube lectures at some point before I decided to read them (same for JB).

I didn’t even realize how one-sided my news coverage was until I lived nomadically across various locations, where I met people with very different sources.

In each case, I interacted with people’s representations of reality and made key decisions based on them. This was much easier than living their lives and making the judgement based on that. The frequency of representations + the availability heuristic make them a lot more influential than any “real” world behind the representations.

As the interactions between the real and simulacra propagate, they start to become twisted versions of themselves. This creates a further detachment from reality, and we slowly watch our original symbols and constructs start to lose their meaning.

The futility of everything that comes to us from the media is the inescapable consequence of the absolute inability of that particular stage to remain silent. Music, commercial breaks, news flashes, adverts, news broadcasts, movies, presenters — there is no alternative but to fill the screen; otherwise there would be an irremediable void. We are back in the Byzantine situation, where idolatry calls on a plethora of images to conceal from itself the fact that God no longer exists. That’s why the slightest technical hitch, the slightest slip on the part of a presenter becomes so exciting, for it reveals the depth of the emptiness squinting out at us through this little window.

Information Overload and the Loss of Meaning in the AI Age

We live in a world where there is more and more information, and less and less meaning.

At this stage, we encounter two interrelated phenomena: information overload and the loss of meaning. These issues, while not new, are significantly amplified by current technological trends.

Information Overload: Drowning in Data

The detachment from reality is exacerbated by our constant exposure to an overwhelming amount of information. This leaves us feeling overwhelmed and unable to discern what truly matters. Some key aspects of this information overload include:

Quantity Over Quality: The sheer volume of content produced daily, much of it AI-generated or AI-curated, makes it difficult to filter signal from noise. Info also comes at you from all angles, making it hard to get away.

Speed of Information Flow: The rapid pace of information dissemination means that new content is constantly pushing out the old, leaving little time for reflection or deep understanding. This leads to a lot of shallow learning, where concepts go in and out, instead of being retained in your brain.

Algorithmic Curation: AI-driven recommendation systems, while aiming to help us navigate this sea of information, often create filter bubbles that limit our exposure to diverse perspectives. They can also create hooks, getting us to keep checking in by creating a dependency on them.

The Erosion of Meaning

As we’re bombarded with information, much of it disconnected from any “base reality,” we start to see an erosion of meaning:

Contextual Collapse: As information is stripped of its original context and recirculated, its original meaning can be lost or distorted. This is how well-meaning social movements often become parodies of themselves.

Superficial Engagement: The overwhelming amount of content encourages skimming rather than deep engagement, leading to a surface-level understanding of complex issues.

Relativism of Truth: With the proliferation of conflicting information, it becomes increasingly difficult to determine what’s true, leading to a sense that all perspectives are equally valid (or equally meaningless). In another variant, people may become too tired to assert and fight for their beliefs.

Emotional Numbing: Constant exposure to sensational or emotionally charged content can lead to desensitization, making it harder to energize yourself.

When combined, they can often be overwhelming, which leads to one outcome-

Smile and others will smile back. Smile to show how transparent, how candid you are. Smile if you have nothing to say. Most of all, do not hide the fact you have nothing to say nor your total indifference to others. Let this emptiness, this profound indifference shine out spontaneously in your smile

The Path of Least Resistance

As Byung-Chul Han said, burnout is often the result of a subject that is constantly at war with itself, till it can’t grind itself anymore. For a subject in the simulacra, this can manifest in several ways:

Passive Consumption: Mindlessly scrolling through feeds, consuming content without critical engagement. I’m told this is called “Brainrot”, but I’m not sure I fully understand what that is yet.

Echo Chambers: Gravitating towards communities that reinforce existing beliefs, avoiding the cognitive effort required to engage with challenging ideas.

Meme Culture: Reducing complex ideas to simplistic, easily shareable formats that often strip away nuance and depth. Sloganeering is the more serious (and probably less intellectually demanding) version of this.

Outsourcing Critical Thinking: Relying on AI systems like large language models to summarize, analyze, or even form opinions on complex topics.

The last point is very interesting, because that is something people are pushing for aggressively. Social Media companies want to replace human labor with these. Many people are under the impression that plugging in LLMs for evaluations will reduce human bias. Others are convinced that LLM-generated content will allow anyone to become a millionaire from courses/social media following and will lead to infinite scaling for LLMs all the way to AGI.

Some of these are partially true. For example, as we covered in “How AI Will Impact Your Work”- LLMs can boost the bottom performers to match the average worker, according to a study by BCG. Something similar was seen by the Harvard Business Review-

He and his colleagues had assumed that teams leveraging ChatGPT would generate vastly more and better ideas than the others. But those teams produced, on average, just 8% more ideas than teams in the control group did. They got 7% fewer D’s, but they also got 8% more B’s (“interesting but needs development”) and roughly the same share of C’s (“needs significant development”). Most surprising, they got 2% fewer A’s. “Generative AI helped workers avoid awful ideas, but it also led to more average ideas,”

And this is their double-edged sword. LLMs, like much of technology (like other forms of AI), are inherently normalizing forces- they will tend to bring inputs towards an average. Depending on the use case, that can be a good or bad thing. However, their careless implementation can lead to problems. Let’s talk about how.
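One rough way to picture a "normalizing force" is a toy model in which an assistant pulls every idea's quality score part of the way toward the group average. This is only an illustration under assumed numbers: the 0-100 score scale, the `pull` strength, and the cutoffs for "awful" and "standout" are all hypothetical, and nothing here reproduces the data from the studies above.

```python
import random
import statistics


def normalize_scores(scores, pull=0.5):
    """Move every score a fraction `pull` of the way toward the group mean."""
    mean = statistics.fmean(scores)
    return [s + pull * (mean - s) for s in scores]


def count_extremes(scores, low=30, high=70):
    """Count awful ideas (below `low`) and standout ideas (above `high`)."""
    awful = sum(1 for s in scores if s < low)
    standout = sum(1 for s in scores if s > high)
    return awful, standout


rng = random.Random(42)
# Hypothetical idea-quality scores on a 0-100 scale, before any AI assistance.
before = [min(100.0, max(0.0, rng.gauss(50, 20))) for _ in range(1000)]
after = normalize_scores(before, pull=0.5)

print("awful, standout before:", count_extremes(before))
print("awful, standout after: ", count_extremes(after))
print("spread before:", statistics.pstdev(before))
print("spread after: ", statistics.pstdev(after))
```

The same pull that rescues the worst ideas also flattens the best ones, which is the trade-off in miniature: both tails shrink while the middle fills up.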

How AI Can Destroy Creativity

To address this, let’s talk about 3 topics-

“The limits of Language are the limits of our Thought.”- If AI limits the kind of content people are exposed to, it will reduce their ability to think about these topics.

During an evaluation, AI can often punish content that is “different”- whether in the content, style, or tone- and thus become an enforcer of sameness.

Creators on recommendation-system-based platforms are incentivized to copy each other. Social Media tends to push people (both creators and consumers) towards sameness, and punish creators that don’t meet the meta. We covered this extensively here, so I’m not going to prove it again.

Language and Capabilities

Unfortunately, I can’t go around boinking people to cause brain damage so severe that they forget parts of a language to test their capacities before and after head-trauma.

Fortunately, there is research on how people who speak different languages tend to be, on average, good at different things. “How Language Shapes Thought” has a bunch of cool examples, such as a 5-year-old Aboriginal child being able to do things that leading scientists could not. This is another cool one-

Moreover, groundbreaking work conducted by Stephen C. Levinson of the Max Planck Institute for Psycholinguistics in Nijmegen, the Netherlands, and John B. Haviland of the University of California, San Diego, over the past two decades has demonstrated that people who speak languages that rely on absolute directions are remarkably good at keeping track of where they are, even in unfamiliar landscapes or inside unfamiliar buildings. They do this better than folks who live in the same environments but do not speak such languages and in fact better than scientists thought humans ever could. The requirements of their languages enforce and train this cognitive prowess.

There’s a bunch of other cool statements, like how you can change a bilingual person’s feelings by changing the language you interact with them in etc.

With that covered, let’s touch on the next section-

How AI-Based Evaluations Punish “Different”

By its nature, AI tends to replicate the biases in your systems (unless explicitly designed not to do so, which adds another bias). Here, I’m using bias in the information-theory sense: a shortcut used by a decision-making model (whether our brains or an AI) to prioritize and work with information. This can be positive (not eating something b/c it smells funny), value-neutral (my love for chocolate milk), or negative (racial biases).

AI biases arise from their dataset. For example-

Early ChatGPT claiming, when prompted, that white people were superior came from datasets that propagated white superiority.

Amazon’s hiring tool discriminating against women was the result of it blindly trying to reproduce demographics (which were mostly male)-

Similarly, a lot of people with disabilities often get shafted by employee productivity analysis tools that don’t adequately understand the different ways they work. Something similar happens to neuro-spicy and non-native English speakers, who tend to use language differently than the norm and have been punished by systems that haven’t been designed to account for them.

For something closer to home- I often like to play with LLMs for evaluation. I’ve noticed that a lot of my writing gets scored down because “it’s not professional” especially when I talk about the limitations of LLMs and/or when I cover topics that LLMs consider too dangerous (such as our articles on multi-modal adversarial perturbations and the one on fighting government censorship of the internet). In the last case, the major LLMs kept asking me to add disclaimers, reduce information, etc. Claude even refused to assess the articles and improve with feedback.

This has also impacted distribution. For the last 2 years, multiple readers have sent me messages about how they stopped seeing my work pop up on their news/social media feeds. The main reason I created my Substack was that a lot of my readers had relied on Medium (this was my primary platform for the longest time), Google Alerts, or LinkedIn to stay updated with my work, but at different stages all of those stopped working-

This newsletter was a last-ditch effort to salvage the audience I had built up through Medium and LinkedIn. I saw the algorithms block my content, so I moved my focus to writing on a platform where I was at least guaranteed to have my emails delivered to people. I got very lucky that Substack has good word-of-mouth recommendations (which is pretty much exclusively how I’ve grown since Late 2022) and that some good people had found me before that point, so I’ve still grown my readership a bit.

I’m not sharing this for sympathy- I accepted the possible consequences of my actions when I chose to write what I did. But I figured my example might help you see the flaws up close. Automated systems struggle with data that is “on the edge,” and this is, unfortunately, where a lot of creativity lies (especially the boundary-pushing kind). So, the automated review systems will naturally punish these articles while encouraging articles that meet their ideal style and content.
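Here is a minimal sketch of how a review system can end up punishing "different" without anyone intending it. It assumes a deliberately naive scorer that rates a text by how closely its vocabulary matches a pile of previously approved texts. No real platform is known to work exactly like this; the function names, reference texts, and example inputs are all hypothetical.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts for a piece of text."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def typicality_score(text, reference_texts):
    """Score a text by how closely its vocabulary matches the reference corpus."""
    profile = Counter()
    for ref in reference_texts:
        profile.update(bag_of_words(ref))
    return cosine(bag_of_words(text), profile)


# Hypothetical "approved" content the scorer has already seen.
reference = [
    "our new model improves accuracy on the benchmark",
    "the model improves accuracy and reduces latency",
    "we improve the benchmark accuracy of our model",
]
conventional = "our model improves benchmark accuracy"
unusual = "chocolate milk cult dissects adversarial perturbations"

print(typicality_score(conventional, reference))
print(typicality_score(unusual, reference))
```

The unusual text scores lowest not because it is worse, but because it looks nothing like what the scorer has already seen. Real evaluators are far more sophisticated than word overlap, but any system that learns "good" from past examples carries some version of this pull toward the familiar.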

Getting back on topic- we have an AI that pushes certain kinds of content and suppresses others. Combined with cheap generators pumping out factory farm-style content, heaps of engagement/rage bait, and everyone talking about everything (YouTubers RDCworld and ApnaJ have hilarious skits about dudes starting podcasts that I would strongly recommend)- we have the perfect conditions to turn you into a zombie.

So, are you condemned to drift through the hyperreality aimlessly? Is there a way out? Let’s end on that.

Finding God in a Godless World

Never resist a sentence you like, in which language takes its own pleasure and in which, after having abused it for so long, you are stupefied by its innocence.

While our man JB is a bit light in the solutions department, there are some other thinkers who might have the answers we’re looking for.

Embrace the Struggle (Camus):

The only way to deal with an unfree world is to become so absolutely free that your very existence is an act of rebellion

-Camus

Albert Camus’s philosophy deals with the absurd- our struggle to find meaning and order in a fundamentally illogical and uncaring universe. Camus goes a step beyond existentialists; instead of trying to get people to forge their own values (or commit to a leap of faith)- Camus asks people to renounce the search for meaning altogether. In doing so, we can embrace life for what it is and thus live a richer, more fulfilling life.

In our context, this involves embracing the struggle and difficulty of dealing with hyperreality- “The struggle itself toward the heights is enough to fill a man’s heart. One must imagine Sisyphus happy”.

Subvert and Wander (Debord):

Guy Debord offers two powerful tools: détournement and dérive. Détournement encourages us to repurpose elements of our world in subversive ways, creating new meanings and authentic experiences (something like using a toothpick to pick a nose).

Dérive, or “drifting,” invites us to explore our environments without a fixed purpose, open to serendipity and unexpected discoveries.

Both these activities allow us to take back our sense of agency, allowing us to engage with the world on our terms, fighting back the apathy and numbness created by overstimulation.

Cultivate Simple Pleasures (Epicurus):

if we do not have a lot we can make do with few, being genuinely convinced that those who least need extravagance enjoy it the most; and that everything natural is easy to obtain and whatever is groundless is hard to obtain; and that simple flavours provide a pleasure equal to that of an extravagant life-style when all pain from want is removed, and barley cakes and water provide the highest pleasure when someone in want takes them.

Epicurus advocated for finding happiness in simple pleasures and the cultivation of friendships. He argued, “Not what we have, but what we enjoy, constitutes our abundance.”

Epicurus’s philosophy of self-introspection to identify natural and unnatural pleasures is a great way to engage in self-discovery, and thus identify where you should and should not allocate your time and energy.

Embrace a Life of Adventure (Don Quixote):

When life itself seems lunatic, who knows where madness lies? Perhaps to be too practical is madness. To surrender dreams — this may be madness. Too much sanity may be madness — and maddest of all: to see life as it is, and not as it should be!

The Legendary Don Quixote offers a powerful metaphor for maintaining idealism and a sense of adventure in a world that might seem prosaic or overwhelming. It’s a story about a man who refuses to let reality tell him who he should be, and in doing so lives a life of adventure (even if he imagines it). While everyone laughs at Don Quixote, I don’t think there was anyone happier than him when he charged that windmill and I think there’s something to be taken from that.

These works/thinkers might help you claw back a sense of meaning from the constant deluge of noise. Or maybe I’m just a boomer who hasn’t accepted that the solution is to take the Soma and just let ourselves be swept along in our Brave New World.

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. You can share your testimonials over here.

I regularly share mini-updates on what I read on the Microblogging sites X(https://twitter.com/Machine01776819), Threads(https://www.threads.net/@iseethings404), and TikTok(https://www.tiktok.com/@devansh_ai_made_simple)- so follow me there if you’re interested in keeping up with my learnings.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819
