LIMA: Less Is More for Alignment - The paper that will shake up AI [Breakdowns]

The future of adopting Large Language Models is going smaller.

Hey, it’s Devansh 👋👋

In my series Breakdowns, I go through complicated literature on Machine Learning to extract the most valuable insights. Expect concise, jargon-free, but still useful analysis aimed at helping you understand the intricacies of Cutting Edge AI Research and the applications of Deep Learning at the highest level.

If you’d like to support my writing, please consider buying and rating my 1 Dollar Ebook on Amazon or becoming a premium subscriber to my sister publication Tech Made Simple using the button below.

p.s. you can learn more about the paid plan here. If your company is looking to build AI Products or Platforms and is looking for consultancy for the same, my company is open to helping more clients. Message me using LinkedIn, by replying to this email, or on the social media links at the end of the article to discuss your needs and see if you’d be a good match.

We have continued to see the development of more Large Language Models. Falcon has landed on the Hugging Face Hub, and models like Orca have shaken up the rankings. Amongst these developments was a very interesting paper I came across, Textbooks Are All You Need, by the people at Microsoft Research. Their approach and results are worth paying attention to:

We introduce phi-1, a new large language model for code, with significantly smaller size than competing models: phi-1 is a Transformer-based model with 1.3B parameters, trained for 4 days on 8 A100s, using a selection of "textbook quality" data from the web (6B tokens) and synthetically generated textbooks and exercises with GPT-3.5 (1B tokens). Despite this small scale, phi-1 attains pass@1 accuracy 50.6% on HumanEval and 55.5% on MBPP. It also displays surprising emergent properties compared to phi-1-base, our model before our finetuning stage on a dataset of coding exercises, and phi-1-small, a smaller model with 350M parameters trained with the same pipeline as phi-1 that still achieves 45% on HumanEval.
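For readers new to the metric: pass@1 is the fraction of coding problems a model solves on its first attempt. A standard way to compute pass@k, introduced alongside HumanEval, is the unbiased estimator 1 - C(n - c, k) / C(n, k), where n samples are drawn per problem and c of them pass the unit tests. The sketch below assumes that estimator; the quote does not say exactly how phi-1's samples were drawn, and with a single greedy sample per problem, pass@1 simply reduces to the fraction of problems solved. The function names are hypothetical.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        # Every size-k subset of samples must contain at least one correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results, k=1):
    """Average pass@k over a benchmark, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical results for three problems, 10 samples each.
print(benchmark_pass_at_k([(10, 10), (10, 5), (10, 0)], k=1))  # 0.5
```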

This made me think back to many of the conversations I’ve heard. A lot of engineers I’ve spoken with assume that since the scale of training these large language models is so large, meaningful finetuning must happen at a similar scale, especially in more complex fields like coding, law, and finance. However, this is far from the truth. Multiple research papers across various tasks have shown that intelligently selecting a few samples can greatly improve the performance of large baseline models without adding much to the cost.

Such vastly superior scaling would mean that we could go from 3% to 2% error by only adding a few carefully chosen training examples, rather than collecting 10x more random ones.
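To make the arithmetic in that quote concrete: under the usual power-law assumption, test error falls as error ∝ N^(-alpha) in the number of training examples N, so cutting error from 3% to 2% requires (3/2)^(1/alpha) times more data. The exponent alpha is an assumption here; the sketch backs it out from the quote's 10x figure rather than from any measured scaling law, and the helper names are hypothetical.

```python
import math

def data_multiplier(error_now, error_target, alpha):
    """Factor by which dataset size must grow if error scales as N**(-alpha)."""
    return (error_now / error_target) ** (1.0 / alpha)

def implied_alpha(error_now, error_target, multiplier):
    """Power-law exponent for which `multiplier` x more data reaches the target error."""
    return math.log(error_now / error_target) / math.log(multiplier)

alpha = implied_alpha(0.03, 0.02, 10)  # ≈ 0.176
print(round(alpha, 3), data_multiplier(0.03, 0.02, alpha))
```

A small exponent like this is exactly why random data collection is so expensive: every further cut in error demands another multiplicative jump in data, which is the regime that careful example selection tries to escape.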

In the context of Large Language Models, this phenomenon was shown beautifully by the paper LIMA: Less Is More for Alignment. The authors of LIMA took a baby model, the 65B-parameter LLaMA language model, and fine-tuned it with the standard supervised loss on only 1,000 carefully curated prompts and responses, without any reinforcement learning or human preference modeling. However, this baby managed to punch above its weight:

LIMA demonstrates remarkably strong performance, learning to follow specific response formats from only a handful of examples in the training data, including complex queries that range from planning trip itineraries to speculating about alternate history. Moreover, the model tends to generalize well to unseen tasks that did not appear in the training data. In a controlled human study, responses from LIMA are either equivalent or strictly preferred to GPT-4 in 43% of cases; this statistic is as high as 58% when compared to Bard and 65% versus DaVinci003, which was trained with human feedback.
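The “standard supervised loss” used to tune LIMA is ordinary next-token cross-entropy. Here is a minimal pure-Python sketch of it. It assumes the common convention of scoring only the response tokens (prompt positions masked out), which is our assumption rather than a detail stated here, and `sft_loss` and its arguments are hypothetical names. A real run would compute the same quantity with a deep learning framework over batches of tokenized examples.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized logit vector."""
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_norm for x in logits]

def sft_loss(step_logits, targets, prompt_len):
    """Mean next-token cross-entropy over response tokens only.

    step_logits[t] holds the model's logits for predicting targets[t].
    Positions t < prompt_len are prompt tokens and are masked out (assumption).
    """
    total, count = 0.0, 0
    for t, (logits, target) in enumerate(zip(step_logits, targets)):
        if t < prompt_len:
            continue  # no loss on the prompt
        total -= log_softmax(logits)[target]
        count += 1
    if count == 0:
        raise ValueError("example has no response tokens")
    return total / count

# Toy 2-token vocabulary: uniform logits give a loss of ln 2 per response token.
print(sft_loss([[0.0, 0.0]] * 3, [0, 1, 1], prompt_len=1))
```

Nothing in this objective knows about preferences or rewards; the only lever LIMA pulls is which 1,000 examples go into it.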

In this article, we will touch upon some of the results, go over why Less is More when it comes to alignment, and cover the Superficial Alignment Hypothesis. To end, we will touch on one of the biggest weaknesses of this approach: its fragility to adversarial inputs. While the underlying concept in this paper should be fairly obvious, the results do warrant a deeper look. To fully understand the core ideas of this paper, we must first understand the Superficial Alignment Hypothesis and the relative importance of pretraining vs alignment for the knowledge of any given Large Language Model.

Does alignment actually teach LLMs?

The authors open with an interesting hypothesis about whether LLMs actually learn from finetuning, called the Superficial Alignment Hypothesis (SAH). Put simply, SAH posits that LLMs acquire their knowledge during the pretraining phase, and that the best use of the alignment phase (RLHF, supervised learning, finetuning for specific outputs, etc.) is not to give our AI models new knowledge but to tune their outputs to match our needs. In the words of the authors:

A model’s knowledge and capabilities are learnt almost entirely during pretraining, while alignment teaches it which subdistribution of formats should be used when interacting with users.

Taken a step further, this suggests that we don’t need many samples: a pretrained language model could be sufficiently tuned with a rather small set of examples. This is the basis for LIMA, where the authors use 1,000 carefully selected samples to tune their model, as opposed to the more complex procedures used by the household-name LLMs. But how well does it actually do? Time to find out:

LIMA vs GPT, Bard, Claude, and Alpaca

So how do we compare language models and their performance? This is a trickier question than you would think. Keep in mind, LLMs are very good at generating text that looks correct (it is mostly syntactically correct), so rule-based checks are meaningless. Take a second to think about how you’d set up your quality checks before proceeding with this article. Thinking about such things is crucial for developing your skills in setting up ML experiments.

For the purposes of this paper, it is important to zoom out and look at their evaluation setup as a whole. This is crucial for contextualizing the results of the experiments (one of the biggest mistakes you can make is to blindly look through the results of such papers w/o understanding the experiment setup). The evaluation pipeline has the following important features-

Generation- “For each prompt, we generate a single response from each baseline model using nucleus sampling. We apply a repetition penalty of previously generated tokens with a hyperparameter of 1.2. We limit the maximum token length to 2048.”

Methodology- At each step, we present annotators with a single prompt and two possible responses, generated by different models. The annotators are asked to label which response was better, or whether neither response was significantly better than the other (look at the image below).

This is supplemented by the use of GPT-4 for annotations. Below is a sample prompt used to accomplish that. The evaluation uses a 6-point Likert scale. The placeholders "task" and "submission" will be replaced by specific details from the actual case being evaluated.

Inter-Annotator Agreement- “We compute inter-annotator agreement using tie-discounted accuracy: we assign one point if both annotators agreed, half a point if either annotator (but not both) labeled a tie, and zero points otherwise. We measure agreement over a shared set of 50 annotation examples (single prompt, two model responses – all chosen randomly), comparing author, crowd, and GPT-4 annotations. Among human annotators, we find the following agreement scores: crowd-crowd 82%, crowd-author 81%, and author-author 78%. Despite some degree of subjectivity in this task, there is decent agreement among human annotators”
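Two mechanical pieces of this pipeline are easy to pin down in code. The sketch below is a toy, pure-Python illustration, not the authors' implementation. It applies a repetition penalty of 1.2 to already-generated tokens (we assume the common CTRL-style rule of dividing positive logits and multiplying negative ones by the penalty), keeps the smallest nucleus of tokens whose probability mass reaches `top_p` (0.9 is our assumed value; the quote does not give one), caps the sequence at 2,048 tokens (we assume this counts prompt plus response), and scores tie-discounted agreement exactly as the quoted rule describes. `next_logits` stands in for a real model and, like every other name here, is hypothetical.

```python
import math
import random

def apply_repetition_penalty(logits, generated, penalty=1.2):
    """Lower the logits of tokens already generated (CTRL-style rule, assumed)."""
    out = list(logits)
    for tok in set(generated):
        # Dividing a positive logit or multiplying a negative one both push it down;
        # a zero logit is left unchanged.
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def nucleus(probs, top_p):
    """Smallest set of most likely tokens whose mass reaches top_p, renormalized."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return {i: probs[i] / mass for i in kept}

def generate(next_logits, prompt, eos_id, max_tokens=2048, top_p=0.9, penalty=1.2, seed=0):
    """Sample one response; max_tokens caps prompt + response length (assumption)."""
    rng = random.Random(seed)
    tokens, new = list(prompt), []
    while len(tokens) < max_tokens:
        logits = apply_repetition_penalty(next_logits(tokens), new, penalty)
        dist = nucleus(softmax(logits), top_p)
        tok = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
        tokens.append(tok)
        new.append(tok)
        if tok == eos_id:
            break
    return new

def tie_discounted_agreement(labels_a, labels_b, tie="tie"):
    """1 point if both annotators agree, 0.5 if exactly one labeled a tie, else 0."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two equal-length, non-empty label lists")
    points = 0.0
    for a, b in zip(labels_a, labels_b):
        if a == b:
            points += 1.0
        elif tie in (a, b):
            points += 0.5
    return points / len(labels_a)
```

For example, `tie_discounted_agreement(["A", "B", "tie", "A"], ["A", "tie", "tie", "B"])` earns 1 + 0.5 + 1 + 0 points over four items, i.e. 0.625. Note that two annotators who both pick "tie" count as full agreement, since they labeled the item identically.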

This should now give you the context for evaluating the results and for seeing how LLM comparisons are done (they can be a bit hacky, which means that there is a great market for strong benchmarks; if any of you are looking for a business idea in AI, developing benchmarks is a great field to get into). Let’s now look at the raw numbers:

Out of 50 test prompts, 50% of LIMA answers are rated excellent, and only 12% of outputs are rated as fail. There is no notable trend within the failure cases. Most impressive is how it deals with out-of-distribution (OOD) prompts: prompts where the task is unrelated to the format of the 1,000 training samples. Of the 20 OOD prompts, 20% of responses fail, 35% pass, and 45% are excellent. Although this is a small sample, it appears that LIMA is able to generalize well. This is a great start and highlights the potential of LIMA as a standalone solution. But how does it compare to other models?

As mentioned at the start of this article, it does very well. To reiterate-

Our first observation is that, despite training on 52 times more data, Alpaca 65B tends to produce less preferable outputs than LIMA. The same is true for DaVinci003, though to a lesser extent; what is striking about this result is the fact that DaVinci003 was trained with RLHF, a supposedly superior alignment method. Bard shows the opposite trend to DaVinci003, producing better responses than LIMA 42% of the time; however, this also means that 58% of the time the LIMA response was at least as good as Bard. Finally, we see that while Claude and GPT-4 generally perform better than LIMA, there is a non-trivial amount of cases where LIMA does actually produce better responses. Perhaps ironically, even GPT-4 prefers LIMA outputs over its own 19% of the time.
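The “at least as good” framing is simple arithmetic on the pairwise labels: the share of prompts where LIMA wins plus the share of ties, which always equals 100% minus the baseline's win rate (hence Bard's 42% implies LIMA's 58%). A small sketch follows; the labels are made up for illustration and are not the paper's data, and `preference_shares` is a hypothetical helper.

```python
from collections import Counter

def preference_shares(labels):
    """Summarize pairwise judgments labeled 'lima', 'tie', or 'baseline'."""
    if not labels:
        raise ValueError("need at least one judgment")
    counts = Counter(labels)
    n = len(labels)
    shares = {k: counts[k] / n for k in ("lima", "tie", "baseline")}
    # "At least as good" = LIMA preferred or judged equivalent.
    shares["lima_at_least_as_good"] = shares["lima"] + shares["tie"]
    return shares

# Ten hypothetical judgments (not the paper's data).
labels = ["lima"] * 3 + ["tie"] * 3 + ["baseline"] * 4
print(preference_shares(labels))
```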

In cases where cost matters, the extra quality of the bigger models might not be worth the additional burden they put on your system.

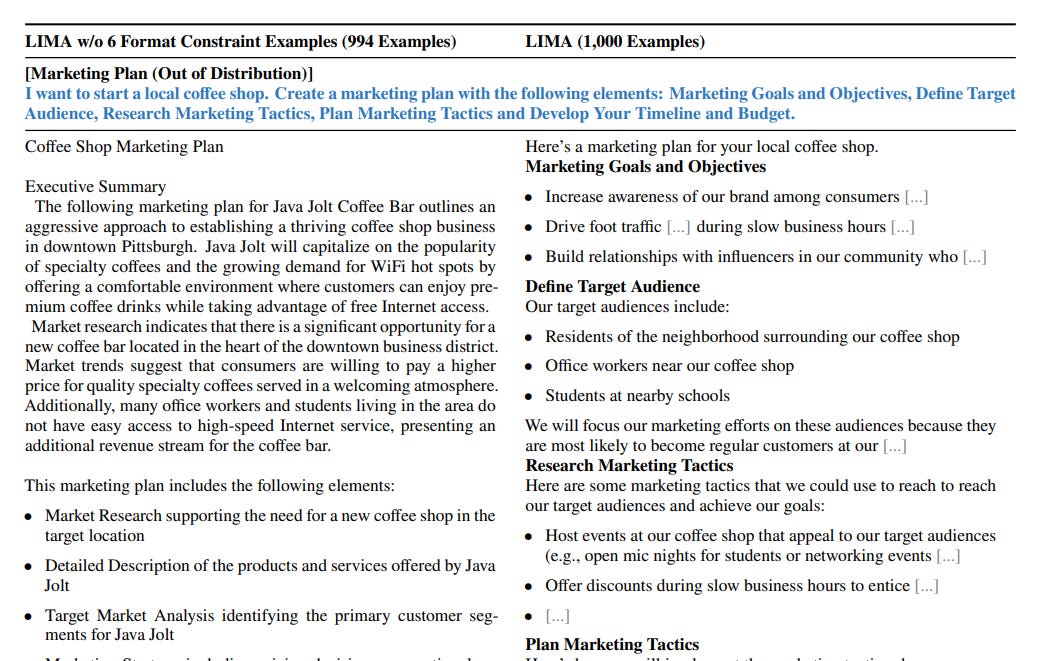

Through their work, the authors note that LIMA seems to struggle with text that is structurally more complex: “In our preliminary experiments, we find that although LIMA can respond to many questions in our development set well, it cannot consistently respond to questions that specify the structures of the answer well, e.g. summarizing an article into bullet points or writing an article consisting of several key elements.” This can be remedied with more training (up to a point), as shown by the graph below.

Adding some of these formatted outputs seems to give LIMA some level of generalization superpowers. “We added six examples with various formatting constraints, such as generating a product page that includes Highlights, About the Product, and How to Use or generating question-answer pairs based on a given article. After training with these six additional examples, we test the model on a few questions with format constraints and observe that LIMA responses greatly improve. We present two examples in Figure 13, from which we can see that LIMA fails to generate proper answers without structure-oriented training examples (left column), but it can generate remarkably complex responses such as a marketing plan even though we do not have any marketing plan examples in our data (right column).”

To round off the LIMA results evaluation, let’s take a look at the results of the ablation studies conducted by the authors.

How much do data diversity, quality, and quantity matter for AI Alignment?

For those of you not familiar with the term: an ablation study investigates the performance of an AI system by removing certain components to understand each component’s contribution to the overall system. The authors wanted to evaluate what roles data diversity, quality, and quantity play in alignment. Here is a quick summary of their results:

TL;DR- We observe that, for the purpose of alignment, scaling up input diversity and output quality have measurable positive effects, while scaling up quantity alone might not. Who would have seen this coming? I have an entire article where I countered Sam Altman’s claim that there was an era for scaling up models/training. Originally, I received some pushback, but more research is coming to a similar conclusion.

Diversity- To test the effects of prompt diversity, while controlling for quality and quantity, we compare the effect of training on quality-filtered Stack Exchange data, which has heterogeneous prompts with excellent responses, and wikiHow data, which has homogeneous prompts with excellent responses. While we compare Stack Exchange with wikiHow as a proxy for diversity, we acknowledge that there may be other conflating factors when sampling data from two different sources. We sample 2,000 training examples from each source (following the same protocol from Section 2.1). Figure 5 shows that the more diverse Stack Exchange data yields significantly higher performance.

Quality- To test the effects of response quality, we sample 2,000 examples from Stack Exchange without any quality or stylistic filters, and compare a model trained on this dataset to the one trained on our filtered dataset. Figure 5 shows that there is a significant 0.5-point difference between models trained on the filtered and unfiltered data sources.

Quantity- Scaling up the number of examples is a well-known strategy for improving performance in many machine learning settings. To test its effect on our setting, we sample exponentially increasing training sets from Stack Exchange. Figure 6 shows that, surprisingly, doubling the training set does not improve response quality. This result, alongside our other findings in this section, suggests that the scaling laws of alignment are not necessarily subject to quantity alone, but rather a function of prompt diversity while maintaining high quality responses.
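The data manipulations behind these ablations are easy to sketch. Below, `quality_ablation_sets` draws two equal-size training sets (unfiltered vs. filtered) and `quantity_ablation_sets` builds exponentially growing ones starting at 2,000 examples. The filter rules and thresholds (`MIN_SCORE`, the character bounds, `BANNED_PHRASES`) are hypothetical placeholders rather than the paper's exact filters, and making each larger set a superset of the smaller ones is our assumption; the paper's own sampling protocol may differ.

```python
import random
from dataclasses import dataclass

@dataclass
class QAPair:
    question: str
    answer: str
    score: int  # community vote score on the answer

# Hypothetical filter settings, for illustration only.
MIN_SCORE = 10
MIN_CHARS, MAX_CHARS = 1200, 4096
BANNED_PHRASES = ("as mentioned", "stack exchange")

def passes_quality_filter(pair):
    """Toy quality/style filter: well-voted, mid-length, self-contained answers."""
    text = pair.answer.lower()
    return (
        pair.score >= MIN_SCORE
        and MIN_CHARS <= len(pair.answer) <= MAX_CHARS
        and not any(phrase in text for phrase in BANNED_PHRASES)
    )

def quality_ablation_sets(pool, k=2000, seed=0):
    """Two equal-size training sets: one from all data, one from filtered data.

    Raises ValueError if fewer than k examples survive the filter.
    """
    rng = random.Random(seed)
    filtered = [p for p in pool if passes_quality_filter(p)]
    return rng.sample(pool, k), rng.sample(filtered, k)

def quantity_ablation_sets(pool, start=2000, factor=2, seed=0):
    """Exponentially growing training sets (start, start*factor, ...) as nested prefixes."""
    shuffled = list(pool)
    random.Random(seed).shuffle(shuffled)
    sets, size = {}, start
    while size <= len(shuffled):
        sets[size] = shuffled[:size]
        size *= factor
    return sets
```

Holding the set size fixed in the quality comparison is what lets the measured gap be attributed to filtering rather than to having more data.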

That Hall of Fame-worthy last sentence goes against everything that cutting-edge AI Research has been about. I’m sure you’re as shocked as I am, but I need you to focus now. We’re about to end with a very important discussion of a possible drawback of this approach. The results demonstrated by LIMA open the door for people who want to tune LLMs for their specific needs but have limited compute to do so. However, this efficiency comes at a cost. In the words of the authors: “LIMA is not as robust as product-grade models; while LIMA typically generates good responses, an unlucky sample during decoding or an adversarial prompt can often lead to a weak response.”

LIMA, Security, and Robustness

While LIMA might seem like a magic bullet, it comes with a huge security risk: its vulnerability to adversarial prompts. To understand why, it’s important to understand the LIMA approach. At its core, LIMA is about efficiency: using a few high-quality samples to teach the model the general outline of a particular task. So why is this bad for security?

Simply put, adversarial prompts are risky precisely because they also match the general outline well. The adversarial devil dwells in the details (my English teachers would be so proud of that alliteration), and teaching AI to deal with all the possible hazards would wipe out the efficiency benefits (keep in mind that even beefy models like GPT can be broken easily). So how do we deal with this?

The most important step is to use your judgment. LIMA (like most of the popular GenAI services) is best used when safety isn’t a huge deal: a customer service bot can get a few things wrong without serious consequences. It’s not critical, and there’s no huge loss. Similarly, if you’re using a bot to quickly transcribe notes into a particular format or store data in a specific way, you can keep things simple and use this approach for great ROI. If you’re looking for a more robust solution for information retrieval and synthesis, my go-to is retrieval-based architectures. These are very good at parsing through documents/knowledge bases to extract answers, and I would highly recommend looking at them instead of trying to fine-tune GPT on your data (which is extremely inefficient and will still have problems). A simple retrieval-based architecture is shown below.
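As a rough stand-in for the retrieve-then-generate pattern, here is a toy sketch: score each document against the question with bag-of-words cosine similarity, keep the top `k`, and paste them into the prompt an LLM would receive. Everything here is hypothetical and deliberately simple; a real system would use learned embeddings and a vector index, and the final LLM call is left out.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Ground the model's answer in retrieved context instead of fine-tuned memory."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 dollars.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

The design point is that knowledge lives in the document store, so updating what the bot knows means editing documents rather than re-tuning the model.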

For a more complex version, I would suggest taking a look at Sphere by Meta, which I broke down here. If you are looking for someone to help you build solutions based on these architectures (or are looking to integrate AI/Tech into your solutions in any other way), then feel free to reach out to me and we can discuss your needs.

At its core, the LIMA approach leads to a glass cannon: it works well but is more fragile than a model tuned on more samples (which would encode more robustness). However, despite its fragility, LIMA is game-changing for AI. Combined with Open Source LLMs, LIMA can be used to create very convincing proofs of concept and demos, which will help democratize ML further. People from different backgrounds and with less access to resources can still create compelling alpha-stage products, which will improve the accessibility of AI and ML Entrepreneurship (something the field desperately needs). In a field where everyone is losing it over gargantuan models and obscene amounts of training data, LIMA is a welcome (even if somewhat obvious) contribution.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. If you like my writing, I would really appreciate an anonymous testimonial. You can drop it here. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819