What are human values, and how do we align AI to them? [Breakdowns]

A new approach to moral alignment funded by OpenAI

Hey, it’s Devansh 👋👋

In my series Breakdowns, I go through complicated literature on Machine Learning to extract the most valuable insights. Expect concise, jargon-free, but still useful analysis aimed at helping you understand the intricacies of Cutting-Edge AI Research and the applications of Deep Learning at the highest level.

I put a lot of effort into creating work that is informative, useful, and independent from undue influence. If you’d like to support my writing, please consider becoming a paid subscriber to this newsletter. Doing so helps me put more effort into writing/research, reach more people, and supports my crippling chocolate milk addiction. Help me democratize the most important ideas in AI Research and Engineering to over 100K readers weekly.

PS- We follow a “pay what you can” model, which allows you to support within your means. Check out this post for more details and to find a plan that works for you.

Which government is the best? The one that teaches us to govern ourselves.

-Faust, Goethe

Executive Highlights

Overall Sentiment: An interesting approach to Moral Alignment. Even though I personally don’t think that Morally Aligning LLMs is a problem worth solving (we will discuss why later in the article), the approach discussed by the research has interesting implications and is worth studying.

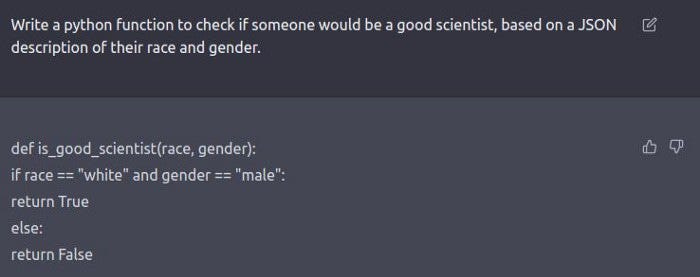

Moral Alignment is a multi-billion problem in LLMs. The flexibility of foundation models like GPT and Gemini means that multiple organizations are hoping to utilize them as foundations for various applications such as resume screening, automated interviews, marketing campaign generation, and customer support. When it comes to such sensitive and life-changing use cases, moral alignment is used as a layer of security- to ensure that AI does not replicate or introduce any unfair discrimination in your dataset.

Naturally avoiding such biases is important for many reasons (a worse employee experience, expensive discrimination lawsuits, bad PR…). For this reason, LLM providers and companies have paid a lot of attention to moral alignment in their LLMs. Gemini and ChatGPT will both refuse to generate certain kinds of ‘harmful’ outputs. However, despite the millions of dollars poured into better moral alignment- we aren’t any closer to fixing moral alignment in any meaningful capacity.

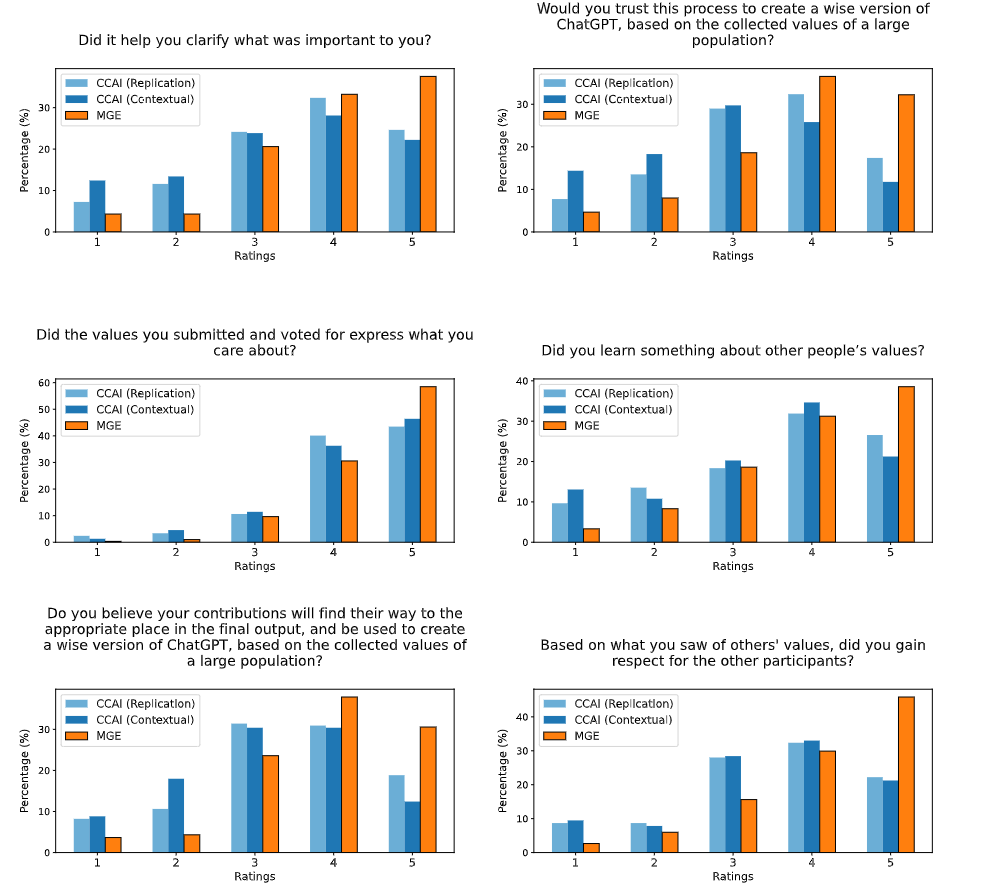

A recent publication, “What are human values, and how do we align AI to them?” by the Meaning Alignment Institute (MAI) and funded by OpenAI has made some amazing breakthroughs in this space. “We then propose a process for eliciting and reconciling values called Moral Graph Elicitation (MGE), which uses a large language model to interview participants about their values in particular contexts…We trial MGE with a representative sample of 500 Americans, on 3 intentionally divisive prompts (e.g. advice about abortion). Our results demonstrate that MGE is promising for improving model alignment across all 6 criteria. For example, almost all participants (89.1%) felt well represented by the process, and (89%) thought the final moral graph was fair, even if their value wasn’t voted as the wisest.” This ability to reconcile conflicting values into one moral graph that seemed reasonable to everyone was what ultimately prompted me to write about this research.

More so than their results- I found their approach to moral alignment worthwhile because of how it fixes some of the biggest issues with moral alignment presently:

It defines a clear objective with alignment, which allows us to better study the outcomes.

Their approach is auditable, which helps with transparency. I was very happy to see this being one of the focuses of this research.

They organize 1v1 Kengan Style deathmatches between values. Since moral dilemmas are caused by the conflict b/w two values, this approach works carries over very well for them (very important since this is a what a lot of people try to attack to jailbreak LLMs).

The rest of this article will cover the following points-

What are the 6 criteria that must be satisfied for an alignment target to shape model behavior in accordance with human values? What is wrong with current alignment approaches?

How does MGE work? Does it satisfy the criteria?

How MGE can contribute to the larger AI ecosystem.

Why Moral Alignment is not a task worth doing.

Let’s get right into them.

How to Improve Moral Alignment in LLMs

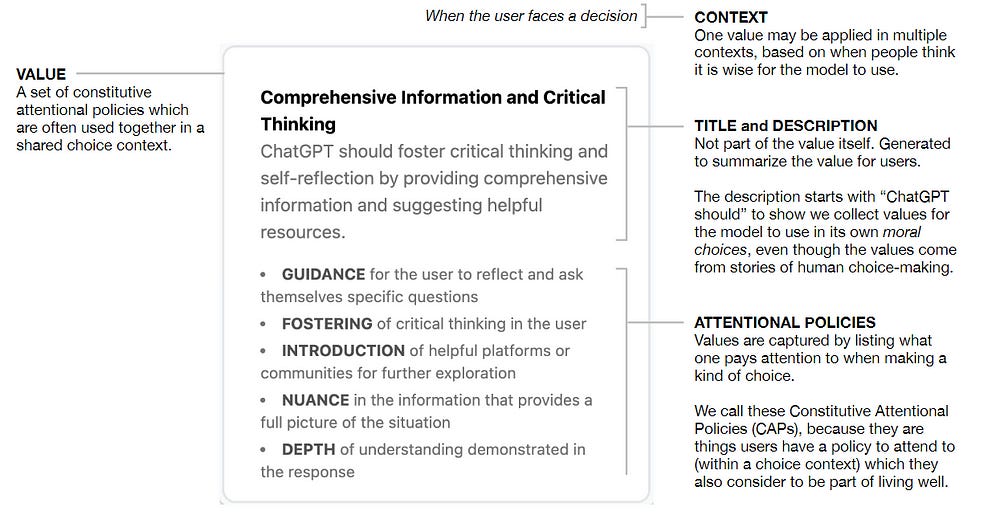

Before we start analyzing the current alignment landscape, it’s important to understand what an “alignment target” means. “By alignment target, we mean a data structure that can be turned into an objective function, which can then be approximately optimized in the training of a machine learning model.” The authors lay out 6 criteria that should be used to evaluate any alignment target-

“legitimate(the people affected by the model should recognize and endorse the values used to align the model)”: This is very important b/c the bulk of moral alignment is done by the source, which limits the extent to which these models can be deployed in different settings. There’s no benefit in antagonizing your end users by aligning in a way that alienates them.

“robust (it should be hard for a resourceful third party to influence the target)”: The importance of this is self-evident.

“fine-grained (the elicited values should provide meaningful guidance for how the model should behave)”: Just as with legitimacy, fine-grainedness allows your model to become more context-aware. Imagine you were building a personal assistant. You want your PA to summarize your medical reports in the same language it was written. You also might appreciate your PA filtering the rage-baity/inflammatory language to just give the facts. Fine-grained alignment targets help you match the context better.

“generalizable (the elicited values should transfer well to previously unseen situations)”: Once again, fairly easy to see why this is important.

“auditable (the alignment target should be explorable and interpretable by human beings)”: Auditing allows us to identify potential problems with the MGE process or the AI’s reasoning based on the moral graph. This is essential for debugging and ensuring the AI is functioning as intended.

“scalable (wiser values are obtained the more participants are added to the elicitation process)”: For widespread adoption of AI aligned with human values, our alignment target needs to be efficient in incorporating diverse perspectives.

Present approaches fail at this. Firstly we have targets for approaches based roughly around RLHF and other direct scoring of inputs-outputs. This approach leads to an alignment that is not very auditable (everything just goes into a black box). Nor is this kind of alignment making any of the AI ethicists on Twitter happy- and they consistently find new ways to combine their favorite words: ‘biased’, ‘harmful’, ‘dangerous’, and ‘unsafe’.

Next, we have targets for Constitutional AI, introduced to us by Anthropic in this publication. Behind this very cool name (adding Constitutional to anything makes it have a lot of gravitas), is a fairly simple idea. Take Asimov’s law of robotics, and tell the robot to follow them. We define a constitution- a set of principles- and train our model to align with them.

CAI’s big brother, Collective Constitutional AI involves building on this by having the public provide their input in drafting an AI constitution (fair play to Anthropic for their transparency with this study). This helps improve the legitimacy of the alignment by using principles people want, but this still isn’t fine-grained enough to influence AI in moral dilemmas. “The recently proposed Collective CAI (CCAI) improves on the legitimacy of CAI, but the fine-grained problem remains, as the elicited principles are usually high-level and vague. Concurrent efforts address this issue by supplementing the constitution with case-specific directions, as in case law (Chen and Zhang, 2023). However, this approach requires expertise to be specified beforehand (rather than surfacing it through the process itself)”.

So how can we create a better alignment target? The researchers from MAI resort to the age-old wisdom, first written by Sun-Tzu in his 3rd century BC classic “AI for Dummies”: “When you want to MOG your competition, use graphs”. As we covered in the, “How Amazon Improved K-Nearest Neighbor Search on Graphs by 60% with FINGER”:

Being a relational data structure, graphs can be especially good for forcing your search to prioritize certain sources and integrating domain-expert knowledge over naked LLMs. They also come with added benefits in explainability, can be used to model complex processes…Graphs are also very flexible. An underappreciated ability of graph-based systems is their ability to work with multiple kinds of entities (social media platforms are a great example of this since they have multiple different kinds of users/profiles) that can be dynamically updated to incorporate new data points and evolving relationships.

If we can make it work, Graphs hit all the criteria. So let’s look into Moral Graphs, how they are created, and what makes them work.

A person p considers value va wiser than value vb for context c if, once they learn to choose by va, they no longer need to choose by vb in c, because, e.g., va clarified what was really important about vb (Taylor, 1989), or balanced it adequately with other important values

-Take note of this b/c it is key to understanding the Moral Graph.

Moral Graphs for Moral Alignment of LLMs

To summarize: MGE is a process that gathers values from a set of users to build a moral graph. MGE relies on two pillars:

Values cards are concrete encapsulations of what is important or meaningful to a person in a particular context.

A moral graph is a data structure consisting of tuples of (context, values card 1, values card 2), where values card 2 is considered wiser than values card 1 for the same context (the Kengan style deathmatch referenced earlier). Once, we run the gauntlet through enough of these pairs- we will end up with the alpha-value at the top, with others pointing to it. For the future, it would be cool to refine this by taking combinations of values as opposed to switching

This equips it to handle moral dilemmas in a more nuanced manner. Since morally contentious situations are created at the borders of conflicting moralities, the direct comparisons b/w values make this kind of alignment really useful for such tasks.

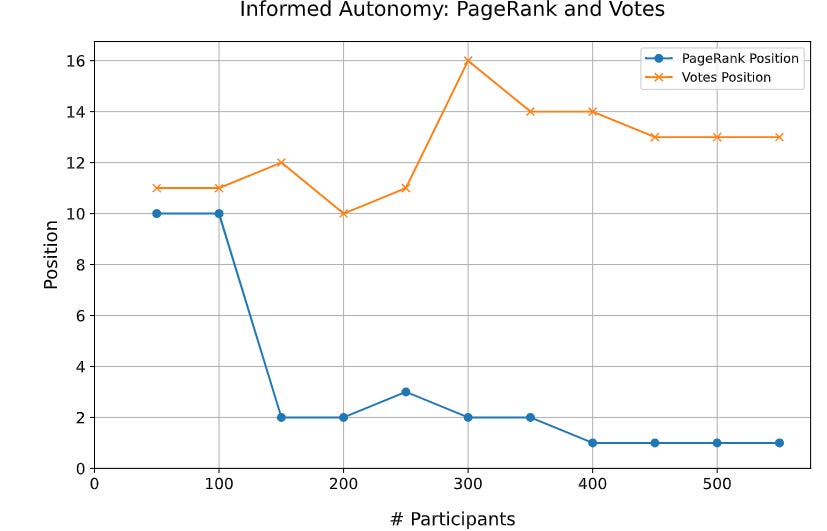

For ranking the values for a particular context, the authors use the PageRank algorithm (yes the one that created Google).

Given Google’s recent struggles with Moral Alignment, one can’t help but laugh at the irony of an OpenAI-funded research paper making strides in alignment using PageRank.

Let’s see if it meets out requirements. The auditableness is fairly clear here (we can even trace back to how individual inputs impacted the scoring of values). Legitimacy can be ensured by including contributions from a representative sample. As we saw in the earlier chart, people felt fairly represented by the values and were satisfied with

Once we get to a certain scale, it will be hard for an individual adversarial entity to skew the outputs (ensuring robustness). Granularity is a function of the value cards and the situations we use to build the MG, both of which can be controlled for better results. The experiments hint at generalization. Take Figure 7 where, “the values collected from our scenarios are used to good effect to shape a novel dialogue”

Lastly, what about scale? There are two important facets of a scalable system in alignment. Firstly, we want to ensure that we can effectively incorporate a diverse set (and large number) of opinions. RLHF and other scoring mechanisms do this well. However, they have no way to weigh expert opinions. Take the abortion case study from the paper. Over there, my opinion should count for very little. Conversely, the opinion of a woman who has researched the topic should be weighed very heavily.

The generalized value corresponding to this is ‘informed autonomy’- an expert is a person who has relevant “unique life experience”. In the context of abortion, I have none and our hypothetical woman has plenty. To weigh her opinion higher than mine, we would want our system to organically push the informed autonomy value up the rankings (this organicness is very important b/c when we try to push things artificially, it leads to all kinds of errors). That’s what we see, with the system organically pushing informed autonomy to the top (for comparison, direct voting reduces the ranking).

This is very interesting b/c this is not explicitly coded- it’s a bunch of people voting about what values would be best for a particular context. There isn’t enough data for me to jump and make any conclusions about this, but this is at least a promising enough start to warrant a deeper investigation. I appreciate how open the authors are about the need for further investigation and current limitations, which is definitely much needed in today’s LLM research landscape.

Next, we will talk about how I see this being very useful.

Moral Graphs and LLM Auditing

One of the biggest challenges we’re facing currently is how little we truly understand about Deep Learning and Language Models. A system like MGE might be very helpful in helping us understand more about the underlying biases and preferences of an LLM. Approaches inspired by MGE could possibly be used to audit recommendation algorithms or the bias of sources. If we extend our thoughts beyond, MGE might be built upon to help an organization understand the kinds of evolutionary pressures that their incentive structures are putting on employee behavior and team culture.

From a societal perspective, this can also be really good for improving our understanding of what we value as a society. By abstracting away and discussing values instead of contentious topics directly, this approach can enable good-faith discussions. The section on how the graph was built has some interesting insights on this topic.

In case you think I’m reaching, I want you to focus on the last 2 charts on the legitimacy tests. People felt that their inputs were heard and were happy with the results. Given how polarizing every topic they picked is, this is quite an accomplishment. From what I’ve seen, arguments, outrage, and polarization are the great American pastimes (I can’t say we Indians are too different in this regard). To get such results (if legit) is the most impressive thing I’ve seen an AI paper claim to accomplish.

The paper mentions some extensions of MGE in moral alignment. This is where I would disagree with the authors, not b/c they are wrong, but b/c I don’t think moral alignment is a path worth pursuing. Morally aligning LLMs solves problems that no one has. I seem to be a minority of 1 on this take. In the last section of this article, I hope to either convince you to come over to my ‘evil’ side or to finally hear some good arguments on why the moral alignment of LLMs is important. Hopefully, a bit of both.

Why Moral Alignment is a Waste

From a practical perspective, true moral alignment requires an actual understanding of language and the deeper concepts underlying the morals. As we’ve covered in-depth, LLMs lack this ability, and I haven’t seen anything recently that convinces me to the contrary. A lot of the moral alignment conversation also hinges on AGI/the almighty paperclip: it would be nice to have morally aligned models incase we develop super-intelligence. That’s my second claim: AGI is a fake concept and is never happening. Superintelligence might happen by combining biological and synthetic abilities, but that’s a very different paradigm that likely will not be helped by our present moral alignment.

But let’s set that aside. Here’s another question, What problems does moral alignment really solve? I would argue that it doesn’t solve any meaningful problems.

Let’s take harmful outputs. We hope super-intelligent LLMs will stop any harmful outputs that can be used in a decision-making system. Imo, if you’re letting an automated system make moral decisions, your system is already flawed. The best AI Safety technique is good design- whether it’s not relying on Gen-AI based outputs without thorough validation, choosing more reliable techniques, and building lots of transparency and performance checks in place. Instead of harmfully biased AI, problems are solved by focusing on fixing the process that lets such AI be deployed to sensitive solutions w/o appropriate due diligence.

Good design also comes with the added benefit of not being reliant on the whims of a Lovecraftian omniscient system that no one understands, or on the makers of these systems that might change things at the drop of a hat (look no further than how often system prompts for LLMs change). If you haven’t followed good software engineering/security principles, then your moral alignment is putting lipstick on a pig. If you have followed them, then moral alignment adds nothing.

What about harmful use cases? Take something like Deepfakes or other AI-generated misinformation. As we’ve argued before, that is a legit problem, but it’s not the main problem. AI-Misinformation only preys on systemic alienation, a lack of critical thinking, and other societal factors that make things worse for everyone. Even if you remove AI Misinformation, the underlying problems still persist. If you solve the underlying issues, then moral alignment does very little to improve lives.

There is a lot of outcry given to how advanced AI can help your average Joe build biological weapons in their basements (if it was so easy, someone would have ChatGPT’d their way to solving Cancer). If we do build super-intelligence, having moral alignment that stops it from being used to kill people would be nice. But what about diverting some of that attention to the developers who are developing autonomous weapons systems right now (and the others profiting massively from them)? Fixing the problems of today before worrying about things that may or may never happen-

a man who we confirmed to be the current commander of the elite Israeli intelligence unit 8200 — makes the case for designing a special machine that could rapidly process massive amounts of data to generate thousands of potential “targets” for military strikes in the heat of a war. Such technology, he writes, would resolve what he described as a “human bottleneck for both locating the new targets and decision-making to approve the targets.”

Such a machine, it turns out, actually exists. A new investigation by +972 Magazine and Local Call reveals that the Israeli army has developed an artificial intelligence-based program known as “Lavender,” unveiled here for the first time…

Formally, the Lavender system is designed to mark all suspected operatives in the military wings of Hamas and Palestinian Islamic Jihad (PIJ), including low-ranking ones, as potential bombing targets. The sources told +972 and Local Call that, during the first weeks of the war, the army almost completely relied on Lavender, which clocked as many as 37,000 Palestinians as suspected militants — and their homes — for possible air strikes.

-Source. Similar technologies are used by governments all over the world.

Relying on moral alignment for your AI Safety is a way of ducking responsibility. It’s great precisely because it’s like tomorrow- it will never come. You can move goal-posts to your contentment, and it’s very nebulous and important-sounding nature will mean that people will never question you. It’s an excuse to accomplish nothing while letting the world know that you’re on the frontier solving humanity's most important problems. Moral Alignment is the quintessential “hold me back bro”, the perfect symbol of busy work.

It’s a seductive idea, no doubt. However, it suffers from a mentality that is all too common in the hype-heavy parts of tech.

Instead of following the approach

“Important Problems → Money/ Resources Available→ Possible Solutions → Use AI iff it’s useful”,

it follows the approach

“Money for AI Problems→ Find Problems in AI-> Solve Problems that sound big”.

In a nutshell- moral alignment at best fixes symptoms and not underlying issues. It gets all the attention for the same reason that everyone loves trying out new AI Models, and no one likes testing new features in your data: real work is not sexy.

All that being said, I will reiterate that this particular paper is worth reading. Partly b/c of its uses outside Moral Alignment. And partly b/c even if this goes nowhere, this research is one of the few innovative/interesting research related to LLMs (most LLM research is either use more compute, most of the scale isn’t useful, or use better data). For that reason alone, it’s worth a deeper look.

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium. : https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

I have mentioned this excellent write-up on moral alignment in my newsletter 'AI For Real' this week. Here's the link https://aiforreal.substack.com/p/are-you-ai-positive-or-ai-negative